An SEO Marketing Expert Is an Expert on Search: AI Is Good for You. Adapt

December 2, 2025

![green dino](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/11/green-dino_thumb_thumb1_thumb_thumb.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I found it interesting to learn that a marketer is an expert on search and retrieval. Why? The expert has accrued 20 years of experience in search engine optimization aka SEO. I wondered, “Was this 20 years of diverse involvement in search and retrieval, or one year of SEO wizardry repeated 20 times?” I don’t know.

I spotted information about this person’s view of search in a newsletter from a group whose name I do not know how to pronounce. (I don’t know much.) The entity does business as BrXnd.ai. After some thought (maybe two seconds) I concluded that the name represented the concept “branding” with a dollop of hipness or AI.

Am I correct? I don’t know. Hey, that’s three admissions of intellectual failure in 10 seconds. Full disclosure: I know no one cares.

Agentic SEO will put every company on the map. Relevance will become product sales. The methodology will be automated. The marketing humanoids will get fat bonuses. The quality of information available will soar upwards. Well, probably downwards. But no worries. Thanks, Venice.ai. Good enough.

The article is titled “The Future of Search and the Death of Links // BRXND Dispatch vol 96.” It points to a video called “The Future of Search and the Death of Links.” You can view the 22 minute talk at this link. Have at it, please.

Let me quote from the BrXnd.ai write up:

…we’re moving from an era of links to an era of recommendations. AI overviews now appear on 30-40% of search results, and when they do, clicks drop 20-40%. Google’s AI Mode sends six times fewer clicks than traditional search.

I think I have heard that Google handles 75 to 85 percent of global searches. If these data are on the money, or even close, Google’s advertising money machine will have fewer eyeballs to flog, and the estimable company will definitely be [a] pushing for subscriptions to anything and everything it once subsidized with oodles of advertisers’ cash; [b] sticking price tags on services positioned as free; [c] charging YouTube TV viewers the way disliked cable TV companies squeezed subscribers for money; [d] praying to the gods of AI that the next big thing becomes a Google sandbox; and [e] embracing its belief that it can control governments and neuter regulators with more than 0.01 milliliters of testosterone.

The write up states:

When search worked through links, you actively chose what to click—it was manual research, even if imperfect. Recommendations flip that relationship. AI decides what you should see based on what it thinks it knows about you. That creates interesting pressure on brands: they can’t just game algorithms with SEO tricks anymore. They need genuine value propositions because AI won’t recommend bad products. But it also raises questions about what happens to our relationship with information when we move from active searching to passive receiving.

Okay, let’s work through a couple of the ideas in this quoted passage.

First, clicking on links is indeed a semi-difficult and manual job. (Wow. Take a break from entering 2.3 words and looking for a likely source on the first page of search results. Demanding work indeed.) However, what if those links are biased by inept programmers, the biases of the data set processed by the search machine, or intentionally manipulated to weaponize content to achieve a goal?

Second, the hook for the argument is that brands can no longer game algorithms. Bid farewell to keyword stuffing. There is a new game in town: Putting a content object in as many places as possible in multiple formats, including the knowledge nugget delivered by TikTok-type services. Most people, it seems, don’t think about this and rely on consultants to help them.

Finally, the notion of moving from clicking and reading to letting a BAIT (big AI tech) company define one’s knowledge universe strikes me as something that SEO experts don’t find problematic. Good for them. Like me, the SEO mavens think the business opportunities for consulting, odd ball metrics, and ineffectual work will be rewarding.

I appreciate BrXnd.ai for giving me this glimpse of the search and retrieval utopia I will now have available. Am I excited? Yeah, sure. However, I will not be dipping into the archive of the 95 previous issues of BrXnd “dispatches.” I know this to be a fact.

Stephen E Arnold, December 2, 2025

AI-Yai-Yai: Two Wizards Unload on What VCs and Consultants Ignore

December 2, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

I read “Ilya Sutskever, Yann LeCun and the End of Just Add GPUs.” The write up is unlikely to find too many accelerationists printing out the write up and handing it out to their pals at Philz Coffee. What does this indigestion maker say? Let’s take a quick look.

The write up says:

Ilya Sutskever – co-founder of OpenAI and now head of Safe Superintelligence Inc. – argued that the industry is moving from an “age of scaling” to an “age of research”. At the same time, Yann LeCun, VP & Chief AI Scientist at Meta, has been loudly insisting that LLMs are not the future of AI at all and that we need a completely different path based on “world models” and architectures like JEPA. [Beyond Search note: The author of the article apparently assumed readers know this term. JEPA is shorthand for Joint Embedding Predictive Architecture. The idea is to find a recipe that lets machines learn about the world in a way a human does.]

I like to try to make things simple. Simple things are easier for me to remember. This passage means: Dead end. New approaches needed. Your interpretation may be different. I want to point out that my experience with LLMs in the past few months has left me with a sense that a “No Outlet” sign is ahead.

Thanks, Venice.ai. The signs are pointing in weird directions, but close enough for horseshoes.

Let’s take a look at another passage in the cited article.

“The real bottleneck [is] generalization. For Sutskever, the biggest unsolved problem is generalization. Humans can:

- learn a new concept from a handful of examples

- transfer knowledge between domains

- keep learning continuously without forgetting everything

Models, by comparison, still need:

- huge amounts of data

- careful evals (sic) to avoid weird corner-case failures

- extensive guardrails and fine-tuning

Even the best systems today generalize much worse than people. Fixing that is not a matter of another 10,000 GPUs; it needs new theory and new training methods.”

I assume “generalization” carries this freight of meaning for AI wizards. For me, this is a big word way of saying, “Current AI models don’t work or perform like humans.” I do like the clarity of “needs new theory and training methods.” In my opinion, the “old” way of training has not made too many pals among those who hold copyright. The article calls this “new recipes.”

Yann LeCun points out:

LLMs, as we know them, are not the path to real intelligence.

Yann LeCun likes world models. These have the following attributes:

- “learn by watching the world (especially video)

- build an internal representation of objects, space and time

- can predict what will happen next in that world, not just what word comes next”

What’s the fix? You can navigate to the cited article and read the punch line to the experts’ views of today’s AI.

Several observations are warranted:

- Lots of money is now committed to what strikes these experts as dead ends

- The move fast and break things believers are in a spot where they may be going too fast to stop when the “Dead End” sign comes into view

- AI companies are likely to keep demonstrating that they can wish, think, and believe they have the next big thing while operating with a willing suspension of disbelief.

I wonder if the positions presented in this article provide some insight into Google’s building dedicated AI data centers for big-buck, security-conscious clients like NATO and into Pavel Durov’s decision to build the SETI-type of system he has announced.

Stephen E Arnold, December 2, 2025

Palantir Channels Moses, Blue Chip Consulting Baloney, and PR

December 2, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

Palantir Technologies is a company in search of an identity. You may know the company latched on to the Lord of the Rings as a touchstone. The Palantir team adopted the “seeing stone.” The idea was that its technology could do magical things. There are several hundred companies with comparable technology. Datawalk has suggested that its system is the equivalent of Palantir’s. Is this true? I don’t know, but when one company’s name is used by another company to make sales, it suggests that Palantir has done something of note.

I am thinking about Palantir because I did a small job for i2 Ltd. when Mike Hunter still was engaged with the firm. Shortly after this interesting work, I learned that Palantir was engaged in litigation with i2 Ltd. The allegations included Palantir’s setting up a straw man company to license i2 Ltd.’s Analyst’s Notebook software development kit. i2 was the ur-intelware. Many of the companies marketing link analysis, analytics focused on making sense of call logs, and other arcana of little interest to most people are relatives of i2. Some acknowledge this bloodline. Others, particularly young intelware company employees working trade shows, just look confused if I mention i2 Ltd. Time is like sandpaper. Facts get smoothed, rounded, or worn to invisibility.

We have an illustration output by MidJourney. It shows a person dressed in a wardrobe that is out of step with traditional business attire. The machine-generated figure is trying to convince potential customers that the peculiarly garbed speaker can be trusted. The sign would have been viewed as good marketing centuries ago. Today it is just peculiar, possibly desperate on some level.

I read “Palantir Uses the ‘5 Whys’ Approach to Problem Solving — Here’s How It Works.” What struck me about the article is that Palantir’s CEO Alex Karp is recycling business school truisms as the insights that have powered the company to record government contracts. Toyota was one of the first companies to focus on asking “why” questions. That firm tried to approach selling automobiles in a way different from the American auto giants. US firms were the world leaders when Toyota was cranking out cheap vehicles. The company pushed songs, communal exercise, and ideas different from the chrome trim crowd in Detroit; for example, humility, something called genchi genbutsu (go and see first hand), employee responsibility regardless of paygrade, continuous improvement (usually not adding chrome trim), and thinking beyond quarterly results. To an American, Mr. Toyoda’s ideas were nutso.

The write up reports:

Karp is a firm believer in the Five Whys, a simple system that aims to uncover the root cause of an issue that may not be immediately apparent. The process is straightforward. When an issue arises, someone asks, “Why?” Whatever the answer may be, they ask “why?” again and again until they have done so five times. “We have found is that those who are willing to chase the causal thread, and really follow it where it leads, can often unravel the knots that hold organizations back” …

The article adds this bit of color:

Palantir’s culture is almost as iconoclastic as its leader.

We have the Lord of the Rings, we have a Japanese auto company’s business method, and we have the CEO as an iconoclast.

Let’s think about this type of PR. Obviously Palantir and its formal and informal “leadership” want to be more than an outfit known for ending up in court as a result of a less-than-intelligent end run around an outfit whose primary market was law enforcement and intelligence professionals. Palantir is in the money, or at least the money from government contracts, and it rarely mentions its long march to today’s apparent success. The firm was founded in May 2003. After a couple of years, Palantir landed its first customer: The US Central Intelligence Agency.

The company ingested about $3 billion in venture funding and reported its first profitable quarter in 2022. That’s 19 years, one interesting legal dust up, and numerous attempts to establish long-term relationships with its “customers.” Palantir did some work for do-good outfits. It tried its hand at commercial projects. But the firm remained anchored to government agencies in the US and the UK.

But something was lacking. The article is part of a content marketing campaign to make the firm’s CEO a luminary among technology leaders. Thus, we have myth-building blocks like the Five Whys. These are not exactly intellectual home runs. The method is not proprietary. The method breaks down in many engagements. People don’t know why something happened. Consultants or forward deployed engineers scurry around trying to figure out what’s going on. At some blue chip consulting firms, trotting out Toyota’s precepts as a way to deal with social media cyber security threats might result in the client saying, “No, thanks. We need a less superficial approach.”

I am not going to get a T shirt that says, “The knots that hold organizations back.” I favor

From my point of view, there are a couple of differences between Toyota in its why era and Palantir today; for instance, Toyota was into measured, mostly disciplined process improvement. Palantir is more like the “move fast, break things” Silicon Valley outfit. Toyota was reasonably transparent about its processes. I did see the lights-out factory near the Tokyo airport, which was off limits to Kentucky people like me. Palantir is in my mind associated with faux secrecy, legal paperwork, and those i2-related sealed documents.

Net net: Palantir’s myth making PR campaign is underway. I have no doubt it will work for many people. Good for them.

Stephen E Arnold, December 2, 2025

AI Breaks Career Ladders

December 2, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

My father used to tell me that it was important to work at a company and climb the career ladder. I did not understand the concept. In my first job, I entered at a reasonably elevated level. I reported to a senior vice president and was given a big, messy project to fix up and make successful. In my second job, I was hired to report to the president of a “group” of companies. I don’t think I had a title. People referred to me as Mr. X’s “get things done person.” My father continued to tell me about the career ladder, but it did not resonate with me.

Thanks Venice.ai. I fired five prompts before you came close to what I specified. Good work, considering.

Only later, when I ran my own small consulting firm, did the concept connect. I realized that as people worked on tasks, some demonstrated exceptional skill. I tried to find ways to expand those individuals’ capabilities. I think I succeeded, and several have contacted me years after I retired to tell me they were grateful for the opportunities I provided.

Imagine my surprise when I read “The Career Ladder Just Got Terminated: AI Kills Jobs Before They’re Born.” I understand the argument. If AI handles the entry-level work, coworkers have no way to learn and earn the right to pursue different opportunities in order to grow their capabilities.

The write up says:

Artificial intelligence isn’t just taking jobs. It’s removing the rungs of the ladder that turn rookies into experts.

Here’s a statement from the rock and roll magazine that will make some young, bright eyed overachievers nervous:

In addition to making labor more efficient, it [AI] actually makes labor optional. And the disruption won’t unfold over generations like past revolutions; it’s happening in real time, collapsing decades of economic evolution into a few short years.

Forget optional. If software can replace hard to manage, unpredictable, and good enough humans, AI will get the nod. The goal of most organizations is to generate surplus cash. Then that cash is disbursed to stakeholders, deserving members of the organization’s leadership, and lavish off site meetings, among other important uses.

Here’s another passage that unintentionally will make art history majors, programmers, and, yes, even some MBAs with the right stuff think about becoming a plumber:

And this AI job problem isn’t confined to entertainment. It’s happening in law, medicine, finance, architecture, engineering, journalism — you name it. But not every field faces the same cliff. There’s one place where the apprenticeship still happens in real time: live entertainment and sports.

Perhaps there will be an MBA Comedy Club? Maybe some computer scientists will lean into their athletic prowess for table tennis or quoits?

Here’s another cause of heartburn for the young job hunter:

Today, AI isn’t hunting our heroes; it’s erasing their apprentices before they can exist. The bigger danger is letting short-term profits dictate our long-term cultural destiny. If the goal is simply to make the next quarter’s numbers look good, then automating and cutting is the easy answer. But if the goal is culture, originality and progress, then the choice is just as clear: protect the training grounds, take risks on the unknown and invest in the people who will surprise us.

I don’t see the BAIT (big AI technology companies) leaning into altruistic behavior for society. These outfits want to win, knock off the competition, and direct the masses to work within the bowling alley of life between two gutters. Okay, job hunters, have at it. As a dinobaby, I have no idea what impact AI will have on job hunting in these early days. Did I mention plumbing?

Stephen E Arnold, December 2, 2025

China Smart US Dumb: An AI Content Marketing Push?

December 1, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I have been monitoring the China Smart, US Dumb campaign for some time. Most of the methods are below the radar; for example, YouTube videos featuring industrious people who seem to be similar to the owner of the Chinese restaurant not far from my office or posts on social media that remind me of the number of Chinese patents achieved each year. Sometimes influencers tout the wonders of a China-developed electric vehicle. None of these sticks out like a semi-mainstream media push.

Thanks, Venice.ai, not exactly the hutong I had in mind but close enough for chicken kung pao in Kentucky.

However, that background “China Smart, US Dumb” messaging may be cranking up. I don’t know for sure, but this NBC News (not the Miss Now news) report caught my attention. Here’s the title:

The subtitle is snappier than Girl Fixes Generator, but you judge for yourself:

AI Startups Are Seeing Record Valuations, But Many Are Building on a Foundation of Cheap, Free-to-Download Chinese AI Models.

The write up states:

Surveying the state of America’s artificial intelligence landscape earlier this year, Misha Laskin was concerned. Laskin, a theoretical physicist and machine learning engineer who helped create some of Google’s most powerful AI models, saw a growing embrace among American AI companies of free, customizable and increasingly powerful “open” AI models.

We have a Xoogler who is concerned. What troubles the wizardly Misha Laskin? NBC News intones in a Stone Phillips’ tone:

Over the past year, a growing share of America’s hottest AI startups have turned to open Chinese AI models that increasingly rival, and sometimes replace, expensive U.S. systems as the foundation for American AI products.

Ever cautious, NBC News asserts:

The growing embrace could pose a problem for the U.S. AI industry. Investors have staked tens of billions on OpenAI and Anthropic, wagering that leading American artificial intelligence companies will dominate the world’s AI market. But the increasing use of free Chinese models by American companies raises questions about how exceptional those models actually are — and whether America’s pursuit of closed models might be misguided altogether.

Bingo! The theme is China smart and the US “misguided.” And not just misguided, but “misguided altogether.”

NBC News slams the point home with more force than the generator-repairing Asian female uses to close the generator’s housing:

in the past year, Chinese companies like Deepseek and Alibaba have made huge technological advancements. Their open-source products now closely approach or even match the performance of leading closed American models in many domains, according to metrics tracked by Artificial Analysis, an independent AI benchmarking company.

I know from personal conversations that most of the people with whom I interact don’t care. Most just accept the belief that the US is chugging along. Not doing great. Not doing terribly. Just moving along. Therefore, I don’t expect you, gentle reader, to think much of this NBC News report.

That’s why the China Smart, US Dumb messaging is effective. But this single example raises the question, “What’s the next major messaging outlet to cover this story?”

Stephen E Arnold, December 1, 2025

AI ASICs: China May Have Plans for AI Software and AI Hardware

December 1, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

I try to avoid wild and crazy generalizations, but I want to step back from the US-centric AI craziness and ask a question, “Why is the solution to anticipated AI growth more data centers?” Data centers seem like a trivial part of the broader AI challenge to some of the venture firms, BAIT (big AI technology) companies, and some online pundits. A data center is a cheap building filled with racks of computers, some specialized gizmos, a connection to the local power company, and a handful of network engineers. Bingo. You are good to go.

But what happens if the compute is provided by Application-Specific Integrated Circuits or ASICs? When ASICs became available for cryptocurrency mining, individual or small-scale mining was no longer attractive. What happened is that large, industrialized crypto mining farms pushed out the individual miners or mom-and-pop data centers.

The Ghana ASIC roll out appears to have overwhelmed the person taking orders. Demand for cheap AI compute is strong. Is that person in the blue suit from Nvidia? Thanks, MidJourney. Good enough, the mark of excellence today.

Amazon, Google, and probably other BAIT outfits want to design their own AI chips. The problem is similar to moving silos of corn to a processing plant with a couple of pick up trucks. Capacity at chip fabrication facilities is constrained. Big chip ideas today may not be possible on the time scale set by the team designing NFL arena size data centers in Rhode Island- or Mississippi-type locations.

A Chinese startup founded by a former Google engineer claims to have created a new ultra-efficient and relatively low cost AI chip using older manufacturing techniques. Meanwhile, Google itself is now reportedly considering whether to make its own specialized AI chips available to buy. Together, these chips could represent the start of a new processing paradigm which could do for the AI industry what ASICs did for bitcoin mining.

What those ASICs did for crypto mining was shift calculations from individuals to large, centralized data centers. Yep, centralization is definitely better. Big is a positive as well.

The write up adds:

The Chinese startup is Zhonghao Xinying. Its Ghana chip is claimed to offer 1.5 times the performance of Nvidia’s A100 AI GPU while reducing power consumption by 75%. And it does that courtesy of a domestic Chinese chip manufacturing process that the company says is "an order of magnitude lower than that of leading overseas GPU chips." By "an order or magnitude lower," the assumption is that means well behind in technological terms given China’s home-grown chip manufacturing is probably a couple of generations behind the best that TSMC in Taiwan can offer and behind even what the likes of Intel and Samsung can offer, too.

The idea is that if these chips become widely available, they won’t be very good at first. Probably like the first Chinese BYD electric vehicles. But after some iterative engineering, the Chinese chips are likely to improve. If these improvements coincide with the switch-on of the massive data centers the BAIT outfits are building, the Silicon Valley wizards might have some rethinking to do.

Here are several observations, although probably no one other than myself considers them warranted:

- China might subsidize its homegrown chips. The ex-Googler is not the only person in the Middle Kingdom trying to find a way around the US approach to smart software. Cheap wins, or at least disrupts, until it is neutralized in some way.

- New data centers based on the Chinese chips might find customers interested in stepping away from dependence on a technology that most AI companies are using for “me too”, imitative AI services. Competition is good, says Silicon Valley, until it impinges on our business. At that point, tough-to-predict actions come into play.

- Nvidia and other AI-centric companies might find themselves trapped in AI strategies that are comparable to a large US aircraft carrier. These ships are impressive, but it takes time to slow them down, turn them, and steam in a new direction. If Chinese AI ASICs hit the market and improve rapidly, the captains of the US-flagged Transformer vessels will have their hands full, with financial officers clamoring for the leadership’s attention.

Net net: Ponder this question: What is Ghana gonna do?

Stephen E Arnold, December 1, 2025

Deloitte and AI: Impact, Professionalism, and Integrity. Absolutely But Don’t Forget Billable

December 1, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

Do you recognize any of these catchphrases?

- Making an impact that matters

- Professionalism in Practice

- Serving Those Who Serve Business

- Service with Integrity

Time’s up. Each of these was (note the past tense) associated in my mind with Deloitte, originally Deloitte Haskins & Sells before the firm became a general consulting firm. Today’s Deloitte is a representative blue chip consulting outfit. I am not exactly sure what shade of blue is appropriate. There is true blue, Jack Benny’s blue eyes, and the St. Louis blues. Then there are the blues associated with a “small” misstep with AI. Understatement is useful at blue chip consulting services firms.

Thanks, Venice.ai. Good enough.

I read Fortune Magazine’s story “Deloitte Allegedly Cited AI-Generated Research in a Million-Dollar Report for a Canadian Provincial Government.” The write up states with the alleged hedge:

The Deloitte report contained false citations, pulled from made-up academic papers to draw conclusions for cost-effectiveness analyses, and cited real researchers on papers they hadn’t worked on, the Independent found. It included fictional papers coauthored by researchers who said they had never worked together.

Now I have had some experience with blue chip and light blue chip consulting firms in my half century of professional work. I have watched some interesting methods used to assemble documents for clients. The most memorable was employed by a special consultant dragooned by a former high ranking US government official who served in both the Nixon and Ford administrations. The “special” dude, who was smarter than anyone else at my blue chip firm at the time, told me he used his university lecture notes as part of our original research. Okay, that worked and was approved by the former government big wheel who was on a contract with our firm.

I do not recall making up data for any project on which I worked. I thought that my boss did engage in science fiction when he dreamed up our group’s revenue goals for each quarter, but the client did not get these fanciful, often juicy numbers.

The write up presents what Deloitte allegedly said:

“Deloitte Canada firmly stands behind the recommendations put forward in our report,” a Deloitte Canada spokesperson told Fortune in a statement. “We are revising the report to make a small number of citation corrections, which do not impact the report findings. AI was not used to write the report; it was selectively used to support a small number of research citations.”

Several random thoughts:

- Deloitte seems to be okay with its professionals’ use of smart software. I wonder if the framing of the problem, the upsides, the downsides of options, and strategic observations were output as a result of AI prompts?

- AI does make errors. Does Deloitte have a process in place to verify the information in documents submitted to a client? If the answer is yes, it is not working. If the answer is no, perhaps Deloitte should consider developing such a system?

- I am not surprised. Based on the blue chippers I have met in the last couple of years, I was stunned that some of these people were hired by big name firms. I assumed their mom or dad had useful connections at high levels which their child could use to score a win.

Net net: Clients will pay for billable hours even though the “efficiencies” of AI may not show up in the statement. I would wager $1.00 that the upside from the “efficiencies” will boost some partners’ bonuses, but that’s just a wild guess. Perhaps the money will flow to needy families?

Stephen E Arnold, December 1, 2025

Mother Nature Does Not Like AI

December 1, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

Nature, the online service and still maybe a printed magazine, published a sour lemonade story. Its title is “Major AI Conference Flooded with Peer Reviews Written Fully by AI.” My reaction was, “Duh! Did you expect originality from AI professionals chasing big bucks?” In my experience, AI innovation appears in the marketing collateral, the cute price trickery for Google Gemini, and the slide decks presented to VCs who don’t want to miss out on the next big thing.

The Nature article states this shocker:

Controversy has erupted after 21% of manuscript reviews for an international AI conference were found to be generated by artificial intelligence.

Once again: Duh!

How about this statement from the write up and its sources?

The conference organizers say they will now use automated tools to assess whether submissions and peer reviews breached policies on using AI in submissions and peer reviews. This is the first time that the conference has faced this issue at scale, says Bharath Hariharan, a computer scientist at Cornell University in Ithaca, New York, and senior program chair for ICLR 2026. “After we go through all this process … that will give us a better notion of trust.”

Yep, trust. That’s a quality I admire.

I want to point out that Nature, a publication interested in sticking to the facts, does a little soft shoe and some fancy dancing in the cited article. For example, there are causal claims about how conferences operate. I did not spot any data, but I am a dinobaby prone to overlooking the nuances of modern scientific write ups. Also, the article seems to want a fix now. Yeah, well, that is unlikely. LLMs change so quickly that smart software tuned to find AI-generated content is not exactly as reliable as a 2025 Toyota RAV4.

Also, I am not sure fixes implemented by human reviewers and abstract readers will do the job. When I had the joyful opportunity to review submissions for a big time technical journal, I did a pretty good job on the first one or two papers tossed at me. But, to be honest, by paper three I was not sure I had the foggiest idea what I was doing. I probably would have approved something written by a French bulldog taking mushrooms for inspiration.

If you are in the journal article writing game or giving talks at conferences, think about AI. Whether you use it or not, you may be accused of taking shortcuts. That’s important because professional publishers and conference organizers never take shortcuts. They take money.

Stephen E Arnold, December 1, 2025

An AI Outfit Promises Something Infinite: Er, Really? Infinite?

November 28, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

Venture Beat publishes some write ups that strike me as marketing collateral. I am not sure what to make of an article that asserts an “infinite” service. Let’s not skip over the idea of infinity. I think a couple of mathy types drove themselves crazy thinking about infinities of infinities working two ways: bigger numbers and then smaller numbers. Yep, those close to these mathy types checked them into a facility for those sufficiently unusual. Consider the article “Anthropic’s Claude Opus 4.5 Is Here: Cheaper AI, Infinite Chats, and Coding Skills That Beat Humans” (sic).

Georg Cantor makes an appearance at the Happy Valley Rest Home. He tells the nurse delivering his scopolamine that he wants to talk about the central infinite-cardinality problem before he is placed in isolation. Thanks, Venice.ai. Close enough, although the setting does not look too much like a 19th century facility. Oh, well.

The first step on what will be a short journey, I assure you: let’s let Anthropic’s Claude 4.5 define “infinite,” shall we? Here’s the response:

Infinity represents unboundedness—a concept of endlessness without limit. In mathematics, it describes quantities exceeding any finite value or processes continuing indefinitely. Infinity isn’t a number but an abstract notion of limitlessness, fundamental to calculus, set theory, and cosmology.

Notice that Claude 4.5 did not mention marketing and PR speak. This is important for “Anthropic’s Claude Opus 4.5 Is Here: Cheaper AI, Infinite Chats, and Coding Skills That Beat Humans.” Note the two-word phrase “infinite chats.” Nope, I don’t think so. Not even Amazon’s money pipe can serve “infinite chats.” Come on, Claude. Get real. Amazon cannot even keep its system online if my memory serves me. But infinite? Hmmm.

The write up says that Venture Beat engaged in an exclusive interview with Alex Albert, “Anthropic’s head of developer relations.” (I think this means a marketing job. What do you think?) Here’s a statement that caught my attention:

The new model, Claude Opus 4.5, scored higher on Anthropic’s most challenging internal engineering assessment than any human job candidate in the company’s history, according to materials reviewed by VentureBeat. The result underscores both the rapidly advancing capabilities of AI systems and growing questions about how the technology will reshape white-collar professions. The Amazon-backed company is pricing Claude Opus 4.5 at $5 per million input tokens and $25 per million output tokens — a dramatic reduction from the $15 and $75 rates for its predecessor, Claude Opus 4.1, released earlier this year. The move makes frontier AI capabilities accessible to a broader swath of developers and enterprises while putting pressure on competitors to match both performance and pricing.

Does this strike you as what I call a “better, faster, cheaper” approach? I like to add that cheaper is the operative word. Yep, buy the market and raise prices later. Does this surprise me? No. From a company that promises to deliver something that costs money infinitely, cheaper does not surprise me one whit.
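For readers who want to see how much cheaper “cheaper” is, here is a minimal Python sketch of the arithmetic. The per-million-token prices come from the quoted Venture Beat passage; the workload (2 million input tokens, 500,000 output tokens) and the helper name job_cost are hypothetical, chosen only for illustration.

```python
# Per-million-token list prices quoted in the Venture Beat article (USD).
OPUS_4_5 = {"input": 5.00, "output": 25.00}
OPUS_4_1 = {"input": 15.00, "output": 75.00}


def job_cost(prices: dict, input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: cost in USD of one workload at per-million-token prices."""
    return (input_tokens * prices["input"] + output_tokens * prices["output"]) / 1_000_000


# Hypothetical workload (assumption): 2 million input tokens, 500,000 output tokens.
new = job_cost(OPUS_4_5, 2_000_000, 500_000)
old = job_cost(OPUS_4_1, 2_000_000, 500_000)

print(f"Opus 4.5: ${new:.2f}")            # Opus 4.5: $22.50
print(f"Opus 4.1: ${old:.2f}")            # Opus 4.1: $67.50
print(f"Reduction: {1 - new / old:.1%}")  # Reduction: 66.7%
```

Because both the input and the output prices fell by exactly a factor of three, the reduction works out to about 66.7% for any mix of input and output tokens.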

Here’s another statement I found interesting:

Albert said employee testers consistently reported that the model demonstrates improved judgment and intuition across diverse tasks — a shift he described as the model developing a sense of what matters in real-world contexts. “The model just kind of gets it,” Albert said. “It just has developed this sort of intuition and judgment on a lot of real world things that feels qualitatively like a big jump up from past models.”

To test this statement, I fired up Claude 4.5 again and asked, “Is it possible for a commercial enterprise supported by modest customer sales and venture capital to deliver an ‘infinite service’; for example, infinite chats for users?”

Here’s what the “just kind of gets it” Claude told me:

No, it’s not sustainably possible. Here’s why:

- Real Costs Scale with Usage: Every chat consumes computational resources (servers, energy, bandwidth). Even efficient models incur marginal costs per interaction that accumulate with heavy users.

- Finite Capital: Venture funding and modest sales provide limited runway. Without usage caps, a small subset of power users could drain resources disproportionately, making the business model unsustainable.

- Economic Reality: “Unlimited” offerings typically include hidden constraints (throttling during peak times, deprioritization, or eventual tier upgrades). True infinity would require infinite capital and infrastructure.

- Tragedy of the Commons: Without limits, rational users maximize personal use, collectively overwhelming the system—forcing either service degradation or imposed restrictions.

Practical Outcome: Most companies offer generous but capped services (e.g., “50 free messages/day”) or tiered pricing. Marketing terms like “unlimited” often mean “more than typical users need,” not literally infinite. Would you like examples of how AI companies balance accessibility with sustainability?
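Claude’s first point, that real costs scale with usage, is easy to put into numbers. The sketch below is a back-of-the-envelope model in Python under loudly stated assumptions: the Opus 4.5 list prices stand in for serving cost (actual serving costs are not public), and the tokens per chat and the chats per day are invented for illustration. The point is the shape of the curve, not the dollar figures.

```python
# ASSUMPTION: list prices stand in for serving cost, which is not public.
INPUT_PRICE_PER_M = 5.00    # USD per million input tokens (Opus 4.5 list price)
OUTPUT_PRICE_PER_M = 25.00  # USD per million output tokens (Opus 4.5 list price)

# ASSUMPTION: hypothetical average chat size, not from the article.
TOKENS_IN_PER_CHAT = 4_000
TOKENS_OUT_PER_CHAT = 1_000


def monthly_cost(chats_per_day: int, days: int = 30) -> float:
    """Hypothetical marginal cost in USD of one user's chats over a month."""
    per_chat = (TOKENS_IN_PER_CHAT * INPUT_PRICE_PER_M
                + TOKENS_OUT_PER_CHAT * OUTPUT_PRICE_PER_M) / 1_000_000
    # Cost is linear in usage: double the chats, double the bill.
    return per_chat * chats_per_day * days


# Casual user, heavy user, and a user taking "infinite chats" at their word.
for chats in (10, 100, 1_000):
    print(f"{chats:>5} chats/day -> ${monthly_cost(chats):,.2f}/month")
```

Under these assumptions, the monthly bill runs roughly $13.50, $135, and $1,350 for the three users. Cost grows linearly with use, so a truly unlimited plan puts no upper bound on what a single power user can consume.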

I think Mr. Albert might want to check his assertions with his own product, which seems to grasp that “infinite” is a tough promise. But let’s not stop with the infinite. What about this statement in the write up?

Opus 4.5 outscores all human candidates on company’s toughest engineering test.

For me, the sticking point is the word “all.” Categorical affirmatives are problematic. Black swans aside, “all” is a cousin of infinite. Its use implies a rather sweeping approach to those who take a tough engineering test. What’s the sample? One, 100, 10,000? Yeah. Oh, well.

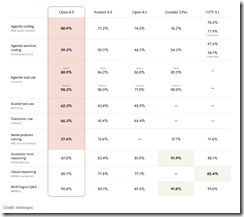

What’s the proof? Here’s the chart that did not knock me over with shock. I know it is difficult to read. Navigate to the cited article on a boat anchor computer with a big screen, and you can sort of read the numbers. Look for this:

Claude 4.5 is better than also-rans like Google and OpenAI. Well, why not? Anthropic has whipped infinite and tamed all. Dealing with weak-wristed systems like Googzilla and ChatGPT is trivial.

Mr. Albert offered a statement which Venture Beat uses to complete this remarkable feat of content marketing, hyperbole, and logical impossibilities:

When asked about the engineering exam results and what they signal about AI’s trajectory, Albert was direct: “I think it’s a really important signal to pay attention to.”

Yep, pay attention. I did.

Stephen E Arnold, November 28, 2025

IBM on the Path to Dyson Spheres But Quantum Networks Come First

November 28, 2025

This essay is the work of a dumb dinobaby. No smart software required.

How does one of the former innovators in Fear, Uncertainty, and Doubt respond to the rare atmosphere of smart software? The answer, in my opinion, appears in “IBM, Cisco Outline Plans for Networks of Quantum Computers by Early 2030s.” My prediction was wrong about IBM. I thought that with a platform like Watson, IBM would aim directly at Freeman Dyson’s sphere. The idea is to build a sphere around a star to gather its energy and power advanced computing systems. Well, one can’t get to the Dyson sphere without a network of quantum computers. And the sooner the better.

A big thinker conceptualizes inventions anticipated by science fiction writers. The expert believes that if he thinks it, that “it” will become real. Sure, but usually more than a couple of years are needed for really big projects like affordable quantum computers linked via quantum networks. Thanks, Venice.ai. Good enough.

The write up from the “trust” outfit Thomson Reuters says:

IBM and Cisco Systems … said they plan to link quantum computers over long distances, with the goal of demonstrating the concept is workable by the end of 2030. The move could pave the way for a quantum internet, though executives at the two companies cautioned that the networks would require technologies that do not currently exist and will have to be developed with the help of universities and federal laboratories.

Imagine: artificial general intelligence is likely to arrive about the same time. IBM has Watson. Does this mean that Watson can run on quantum computers? Those computers can solve the engineering challenges of the Dyson sphere. IBM can then solve the world’s energy requirements. This sequence seems like a reasonable tactical plan.

The write up points out that building a quantum network poses a few engineering problems. I noted this statement in the news report:

The challenge begins with a problem: Quantum computers like IBM’s sit in massive cryogenic tanks that get so cold that atoms barely move. To get information out of them, IBM has to figure out how to transform information in stationary “qubits” – the fundamental unit of information in a quantum computer – into what Jay Gambetta, director of IBM Research and an IBM fellow, told Reuters are “flying” qubits that travel as microwaves. But those flying microwave qubits will have to be turned into optical signals that can travel between Cisco switches on fiber-optic cables. The technology for that transformation – called a microwave-optical transducer – will have to be developed with the help of groups like the Superconducting Quantum Materials and Systems Center, led by the Fermi National Accelerator Laboratory near Chicago, among others.

Trivial compared to the Dyson sphere confection. It is now sundown for year 2025. IBM and its partner target being operational in 2029. That works out to about 36 months. Call it 48 just to add a margin of error.

Several observations:

- IBM and its partner Cisco Systems are staking out their claims to the future of computing.

- Compared to the Dyson sphere idea, a network of quantum computers providing the plumbing for an Internet is modest. Still, it makes Jack Dorsey’s Web 5 vision seem like a Paleolithic sketch on the wall of the Lascaux Caves.

- Watson and IBM’s other advanced AI technologies probably assisted the IBM marketing professionals with publicizing Big Blue’s latest idea for moving beyond the fog of smart software.

Net net: The spirit of avid science fiction devotees is effervescing. Does the idea of a network of quantum computers tickle your nose or your fancy? I have marked my calendar.

Stephen E Arnold, November 28, 2025