Read This Essay and Learn Why AI Can Do Programming

July 3, 2025

![dino-orange_thumb_thumb_thumb_thumb_[1]_thumb_thumb_thumb_thumb dino-orange_thumb_thumb_thumb_thumb_[1]_thumb_thumb_thumb_thumb](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/06/dino-orange_thumb_thumb_thumb_thumb_1_thumb_thumb_thumb_thumb_thumb.gif) No AI, just the dinobaby expressing his opinions to Zillennials.

I found, entirely by accident since Web search does not work too well, an essay titled “Ticket-Driven Development: The Fastest Way to Go Nowhere.” I would have used a different title; for example, “Smart Software Can Do Faster and Cheaper Code” or “Skip Computer Science. Be a Plumber.” Despite my lack of good vibe coding from the essay’s title, I did like the information in the write up. The basic idea is that managers just want throughput. This is not news.

The most useful segment of the write up is this passage:

You don’t need a process revolution to fix this. You need permission to care again. Here’s what that looks like:

- Leave the code a little better than you found it — even if no one asked you to.

- Pair up occasionally, not because it’s mandated, but because it helps.

- Ask why. Even if you already know the answer. Especially then.

- Write the extra comment. Rename the method. Delete the dead file.

- Treat the ticket as a boundary, not a blindfold.

Because the real job isn’t closing tickets it’s building systems that work.

I wish to offer several observations:

- Repetitive, boring, mindless work is perfect for smart software

- Implementing dot points one to five will result in a reprimand, transfer to a salubrious location, or termination with extreme prejudice

- Expect to spend long hours with an AI version of an old-fashioned psychiatrist because you will go crazy.

After reading the essay, I realized that the managerial approach, the “ticket-driven workflow”, and the need for throughput apply to many jobs. Leadership no longer has middle managers who manage. When leadership intervenes, one gets [a] consultants or [b] knee-jerk decisions or mandates.

The crisis is in organizational set up and management. The developers? Sorry, you have been replaced. Say, “hello” to our version of smart software. Her name is No Kidding.

Stephen E Arnold, July 3, 2025

AI Management: Excellence in Distancing Decisions from Consequences

July 2, 2025

![Dino 5 18 25_thumb[3] Dino 5 18 25_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/06/Dino-5-18-25_thumb3_thumb-1.gif) Smart software involved in the graphic, otherwise just an addled dinobaby.

This write up “Exclusive: Scale AI’s Spam, Security Woes Plagued the Company While Serving Google” raises two minor issues and one that is not called out in the headline or the subtitle:

$14 billion investment from Meta struggled to contain ‘spammy behavior’ from unqualified contributors as it trained Gemini.

Who can get excited about a workflow and editorial quality issue? What is “quality”? In one of my Google monographs I pointed out that Google used at one time a number of numerical recipes to figure out “quality.” Did that work? Well, it was good enough to help get the Yahoo-inspired Google advertising program off the ground. Then quality became like those good brownies from 1953: Stuffed with ingredients no self-respecting Stanford computer science graduate would eat for lunch.

I believe some caution is required when trying to understand a very large and profitable company from someone who is no longer working at the company. Nevertheless, the article presents a couple of interesting assertions and dodges what I consider the big issue.

Consider this statement in the article:

In a statement to Inc., Scale AI spokesperson Joe Osborne said: “This story is filled with so many inaccuracies, it’s hard to keep track. What these documents show, and what we explained to Inc ahead of publishing, is that we had clear safeguards in place to detect and remove spam before anything goes to customers.” [Editor’s Note: “this” means the rumor that Scale cut corners.]

The story is that a process included data that would screw up the neural network.

And the security issue? I noted this passage:

The [spam] episode raises the question of whether or not Google at one point had vital data muddied by workers who lacked the credentials required by the Bulba program. It also calls into question Scale AI’s security and vetting protocols. “It was a mess. They had no authentication at the beginning,” says the former contributor. [Editor’s Note: Bulba means “Bard.”]

A person reading the article might conclude that Scale AI was a corner-cutting outfit. I don’t know. But when big money starts to flow and more can be turned on, some companies just do what’s expedient. The signals in this Scale example are the put-the-pedal-to-the-metal approach to process and the information that people knew that bad data was getting pumped into Googzilla.

But what’s the big point that’s missing from the write up? In my opinion, Google management made a decision to rely on Scale. Then Google management distanced itself from the operation. In the good old days of US business, when blue-suited, informed middle managers pursued quality, some companies would have spotted the problems and ridden herd on the subcontractor.

Google did not do this in an effective manner.

Now Scale AI is beavering away for Meta which may be an unexpected win for the Google. Will Meta’s smart software begin to make recommendations like “glue your cheese on the pizza”? My personal view is that I now know why Google’s smart software has been more about public relations and marketing, not about delivering something that is crystal clear about its product line up, output reliability, and hallucinatory behaviors.

At least Google management can rely on DeepMind to revolutionize understanding the human genome. Will the company manage in as effective a manner as its marketing department touts its achievements?

Stephen E Arnold, July 2, 2025

Paper Tiger Management

June 24, 2025

An opinion essay written by a dinobaby who did not rely on smart software.

I learned that Apple and Meta (formerly Facebook) found themselves on the wrong side of the law in the EU. On June 19, 2025, I learned that “the European Commission will opt not to impose immediate financial penalties” on the firms. In April 2025, the EU hit Apple with a 500 million euro fine and Meta with a 200 million euro fine for noncompliance with the EU’s Digital Markets Act. Here’s an interesting statement in the cited EuroNews report: the “grace period ends on June 26, 2025.” Well, not any longer.

What’s the rationale?

- Time for more negotiations

- A desire to appear fair

- Paper tiger enforcement.

I am not interested in items one and two. The winner is “paper tiger enforcement.” In my opinion, we have entered an era in management, regulation, and governmental resolve that follows the GenX approach to lunch: “Hey, let’s have lunch.” The lunch never happens. But the mental process follows these lanes in the bowling alley of life: [a] Be positive, [b] Say something that sounds good, [c] Check the box that says, “Okay, mission accomplished. Move on,” and [d] Forget about the lunch thing.

When this approach is applied to large-scale, high-visibility issues, what happens? In my opinion, the credibility of the legal decision and the penalty is diminished. Instead of inhibiting improper actions, those who are on the receiving end of the punishment learn one thing: It doesn’t matter what we do. The regulators don’t follow through. Therefore, let’s just keep on moving down the road.

Another example of this type of management can be found in the return to the office battles. A certain percentage of employees are just going to work from home. The management of the company doesn’t do “anything”. Therefore, management is feckless.

I think we have entered the era of paper tiger enforcement. Make noise, show teeth, growl, and then go back into the den and catch some ZZZZs.

Stephen E Arnold, June 24, 2025

Move Fast, Break Your Expensive Toy

June 19, 2025

An opinion essay written by a dinobaby who did not rely on smart software.

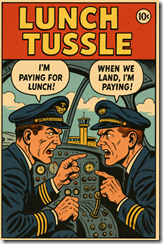

The weird orange newspaper online service published “Microsoft Prepared to Walk Away from High-Stakes OpenAI Talks.” (I quite like the Financial Times, but orange?) The big news is that a copilot may be creating tension in the cabin of the high-flying software company. The squabble has to do with? Give up? Money and power. Shocked? It is Sillycon Valley type stuff, and I think the squabble is becoming more visible. What’s next? Live streaming the face-to-face meetings?

A pilot and copilot engage in a friendly discussion about paying for lunch. The art was created by that outstanding organization OpenAI. Yes, good enough.

The orange service reports:

Microsoft is prepared to walk away from high-stakes negotiations with OpenAI over the future of its multibillion-dollar alliance, as the ChatGPT maker seeks to convert into a for-profit company.

Does this sound like a threat?

The squabbling pilot and copilot radioed into the control tower this burst of static-filled information:

“We have a long-term, productive partnership that has delivered amazing AI tools for everyone,” Microsoft and OpenAI said in a joint statement. “Talks are ongoing and we are optimistic we will continue to build together for years to come.”

The newspaper online service added:

In discussions over the past year, the two sides have battled over how much equity in the restructured group Microsoft should receive in exchange for the more than $13bn it has invested in OpenAI to date. Discussions over the stake have ranged from 20 per cent to 49 per cent.

As a dinobaby observing the pilot and copilot navigate through the cloudy skies of smart software, it certainly looks as if the duo are arguing about who pays what for lunch when the big AI tie up glides to a safe landing. However, the introduction of a “nuclear option” seems dramatic. Will this option be a modest low yield neutron gizmo or a variant of the 1961 Tsar Bomba, which fried animals and lichen within a 35 kilometer radius and converted an island in the Arctic to a parking lot?

How important is Sam AI-Man’s OpenAI? The cited article reports this from an anonymous source (the best kind in my opinion):

“OpenAI is not necessarily the frontrunner anymore,” said one person close to Microsoft, remarking on the competition between rival AI model makers.

Which company kicked off what seems to be a rather snappy set of negotiations between the pilot and the copilot? The cited orange newspaper adds:

A Silicon Valley veteran close to Microsoft said the software giant “knows that this is not their problem to figure this out, technically, it’s OpenAI’s problem to have the negotiation at all”.

What could the squabbling duo do do do (a reference to Bing Crosby’s version of “I Love You” for those too young to remember the song’s hook or the Bingster for that matter):

- Microsoft could reach a deal, make some money, and grab the controls of the AI powered P-39 Airacobra training aircraft, and land without crashing at the Renton Municipal Airport

- Microsoft and OpenAI could fumble the landing and end up in Lake Washington

- OpenAI could bail out and hitchhike to the nearest venture capital firm for some assistance

- The pilot and copilot could just agree to disagree and sit at separate tables at the IHOP in Renton, Washington

One can imagine other scenarios, but the FT’s news story makes it clear that anonymous sources, threats, and a bit of desperation are now part of the Microsoft and OpenAI relationship.

Yep, money and control — business essentials in the world of smart software which seems to be losing its claim as the “next big thing.” Are those stupid red and yellow lights flashing at Microsoft and OpenAI as they are at Google?

Stephen E Arnold, June 19, 2025

Who Knew? Remote Workers Are Happier Than Cube Laborers

June 6, 2025

To some of us, these findings come as no surprise. The Farmingdale Observer reports, “Scientists Have Been Studying Remote Work for Four Years and Have Reached a Very Clear Conclusion: ‘Working from Home Makes Us Happier’.” Nestled in our own environment, no commuting, comfy clothes—what’s not to like? In case anyone remains unconvinced, researchers at the University of South Australia spent four years studying the effects of working from home. Writer Bob Rubila tells us:

“An Australian study, conducted over four years and starting before the pandemic, has come up with some enlightening conclusions about the impact of working from home. The researchers are unequivocal: this flexibility significantly improves the well-being and happiness of employees, transforming our relationship with work. … Their study, which was unique in that it began before the health crisis, tracked changes in the well-being of Australian workers over a four-year period, offering a unique perspective on the long-term effects of teleworking. The conclusions of this large-scale research highlight that, despite the sometimes contradictory data inherent in the complexity of the subject, offering employees the flexibility to choose to work from home has significant benefits for their physical and mental health.”

Specifically, researchers note remote workers get more sleep, eat better, and have more time for leisure and family activities. The study also contradicts the common fear that working from home means lower productivity. Quite the opposite, it found. As for concerns over losing in-person contact with colleagues, we learn:

“Concerns remain about the impact on team cohesion, social ties at work, and promotion opportunities. Although the connection between colleagues is more difficult to reproduce at a distance, the study tempers these fears by emphasizing the stability, and even improvement, in performance.”

That is a bit of a hedge. On balance, though, remote work seems to be a net positive. An important caveat: The findings are considerably less rosy if working from home was imposed by, say, a pandemic lock-down. Though not all jobs lend themselves to remote work, the researchers assert flexibility is key. The more one’s work situation is tailored to one’s needs and lifestyle, the happier and more productive one will be.

Cynthia Murrell, June 6, 2025

An AI Insight: Threats Work to Bring Out the Best from an LLM

June 3, 2025

“Do what I say, or Tony will take you for a ride. Get what I mean, punk?” seems like an old-fashioned approach to elicit cooperation. What happens if you apply this technique, knee-capping, or unplugging to smart software?

The answer, according to one of the founders of the Google, is, “Smart software responds — better.”

Does this strike you as counterintuitive? I read “Google’s Co-Founder Says AI Performs Best When You Threaten It.” The article reports that the motive power behind the landmark Google Glass product allegedly said:

“You know, that’s a weird thing…we don’t circulate this much…in the AI community…not just our models, but all models tend to do better if you threaten them…. Like with physical violence. But…people feel weird about that, so we don’t really talk about that.”

The article continues, explaining that another LLM wanted to turn one of its users in to government authorities. The interesting action seems to suggest that smart software is capable of flipping the table on a human user.

Numerous questions arise from these two allegedly accurate anecdotes about smart software. I want to consider just one: How should a human interact with a smart software system?

In my opinion, the optimal approach is with considered caution. Users typically do not know or think about how their prompts are used by the developer / owner of the smart software. Users do not ponder the value of the log files of those prompts. Not even bad actors wonder if those data will be used to support their conviction.

I wonder what else Mr. Brin does not talk about. What is the process for law enforcement or an advertiser to obtain prompt data and generate an action like an arrest or a targeted advertisement?

One hopes Mr. Brin will elucidate before someone becomes so wrought with fear that suicide seems like a reasonable and logical path forward. Is there someone whom we could ask about this dark consequence? “Chew” on that, gentle reader, and you too Mr. Brin.

Stephen E Arnold, June 3, 2025

Microsoft Demonstrates a Combo: PR and HR Management Skill in One Decision

June 2, 2025

How skilled are modern managers? I spotted an example of managerial excellence in action. “Microsoft fires Employee Who Interrupted CEO’s Speech to Protest AI Tech for Israel” reports something that is allegedly spot on; to wit:

“Microsoft has fired an employee who interrupted a speech by CEO Satya Nadella to protest the company’s work supplying the Israeli military with technology used for the war in Gaza.”

Microsoft investigated similar accusations and learned that its technology was not used to harm citizens / residents / enemies in Gaza. I believe that a person investigating himself or herself does a very good job. Law enforcement is usually not needed to investigate a suspected bad actor when the alleged malefactor says: “Yo, I did not commit that crime.” I think most law enforcement professionals smile, shake the hand of the alleged malefactor, and say, “Thank you so much for your rigorous investigation.”

Isn’t that enough? Obviously it is. More than enough. Therefore, when someone outputs fabrications and unsupported allegations against a large, ethical, and well-informed company, management of that company has a right and a duty to choke off doubt.

The write up says:

“Microsoft has previously fired employees who protested company events over its work in Israel, including at its 50th anniversary party in April [2025].”

The statement is evidence of consistency before this most recent HR / PR home run in my opinion. I note this statement in the cited article:

“The advocacy group No Azure for Apartheid, led by employees and ex-employees, says Lopez received a termination letter after his Monday protest but couldn’t open it. The group also says the company has blocked internal emails that mention words including “Palestine” and “Gaza.””

Company of the year nominee for sure.

Stephen E Arnold, June 2, 2025

Copilot Disappointments: You Are to Blame

May 30, 2025

No AI, just a dinobaby and his itty bitty computer.

Another interesting Microsoft story from a pro-Microsoft online information service. Windows Central published “Microsoft Won’t Take Bigger Copilot Risks — Due to ‘a Post-Traumatic Stress Disorder from Embarrassments,’ Tracing Back to Clippy.” Why not invoke Bob, the US government suggesting Microsoft security was needy, or the software of the Surface Duo?

The write up reports:

Microsoft claims Copilot and ChatGPT are synonymous, but three-quarters of its AI division pay out of pocket for OpenAI’s superior offering because the Redmond giant won’t allow them to expense it.

Is Microsoft saving money or is Microsoft’s cultural momentum maintaining the velocity of Steve Ballmer taking an Apple iPhone from an employee and allegedly stomping on the device? That helped make Microsoft’s management approach clear to some observers.

The Windows Central article adds:

… a separate report suggested that the top complaint about Copilot to Microsoft’s AI division is that “Copilot isn’t as good as ChatGPT.” Microsoft dismissed the claim, attributing it to poor prompt engineering skills.

This statement suggests that Microsoft is blaming a user for the alleged negative reaction to Copilot. Those pesky users again. Users, not Microsoft, are at fault. But what about the Microsoft employees who seem to prefer ChatGPT?

Windows Central stated:

According to some Microsoft insiders, the report details that Satya Nadella’s vision for Microsoft Copilot wasn’t clear. Following the hype surrounding ChatGPT’s launch, Microsoft wanted to hop on the AI train, too.

I thought the problem was the users and their flawed prompts. Could the issue be Microsoft’s management “vision”? I have an idea. Why not delegate product decisions to Copilot? That will show the users that Microsoft has the right approach to smart software: Cutting back on data centers, acquiring other smart software and AI visionaries, and putting Copilot in Notepad.

Stephen E Arnold, May 30, 2025

It Takes a Village Idiot to Run an AI Outfit

May 29, 2025

The dinobaby wrote this without smart software. How stupid is that?

I liked the write up “The Era Of The Business Idiot.” I am not sure the term “idiot” is 100 percent accurate. According to the Oxford English Dictionary, the word “idiot” is a variant of the phrase “the village idget.” Good enough for me.

The AI marketing baloney is a big thick sausage indeed. Here’s a pretty good explanation of a high-technology company executive today:

We live in the era of the symbolic executive, when "being good at stuff" matters far less than the appearance of doing stuff, where "what’s useful" is dictated not by outputs or metrics that one can measure but rather the vibes passed between managers and executives that have worked their entire careers to escape the world of work. Our economy is run by people that don’t participate in it and our tech companies are directed by people that don’t experience the problems they allege to solve for their customers, as the modern executive is no longer a person with demands or responsibilities beyond their allegiance to shareholder value.

The essay contains a number of observations which match well to my experiences as an officer in companies and as a consultant to a wide range of organizations. Here’s an example:

In simpler terms, modern business theory trains executives not to be good at something, or to make a company based on their particular skills, but to "find a market opportunity" and exploit it. The Chief Executive — who makes over 300 times more than their average worker — is no longer a leadership position, but a kind of figurehead measured on their ability to continually grow the market capitalization of their company. It is a position inherently defined by its lack of labor, the amorphousness of its purpose and its lack of any clear responsibility.

I urge you to read the complete write up.

I want to highlight some assertions (possibly factoids) which I found interesting. I shall, of course, offer a handful of observations.

First, I noted this statement:

When the leader of a company doesn’t participate in or respect the production of the goods that enriches them, it creates a culture that enables similarly vacuous leaders on all levels.

Second, this statement:

Management has, over the course of the past few decades, eroded the very fabric of corporate America, and I’d argue it’s done the same in multiple other western economies, too.

Third, this quote from a “legendary” marketer:

As the legendary advertiser Stanley Pollitt once said, “bullshit baffles brains.”

Fourth, this statement about large language models, the next big thing after quantum, of course:

A generative output is a kind of generic, soulless version of production, one that resembles exactly how a know-nothing executive or manager would summarise your work.

And, fifth, this comment:

By chasing out the people that actually build things in favour of the people that sell them, our economy is built on production puppetry — just like generative AI, and especially like ChatGPT.

More little nuggets nestle in the write up; it is about 13,000 words. (No, I did not ask Copilot to count the words. I am a good estimator of text length.) It is now time for my observations:

- I am not sure the leadership is vacuous. The leadership does what it learned, knows how to do, and obtained promotions for just being “authentic.” One leader at the blue chip consulting firm at which I learned to sell scope changes built pianos in his spare time. He knew how to do that: Build a piano. He also knew how to sell scope changes. The process is one that requires a modicum of knowledge and skill.

- I am not sure management has eroded the “fabric.” My personal view is that accelerated flows of information have blasted certain vulnerable types of constructs. The result is leadership that does many of the things spelled out in the write up. With no buffer between thinking big thoughts and doing work, the construct erodes. Rebuilding is not possible.

- Mr. Pollitt was a marketer. He is correct, and that marketing mindset is in the cat-bird seat.

- Generative AI outputs what is probably an okay answer. Those who were happy with a “C” in school will find the LLM a wonderful invention. That alone may make further erosion take place more rapidly. If I am right about information flows, the future is easy to predict, and it is good for a few and quite unpleasant for many.

- Being able to sell is the top skill. Learn to embrace it.

Stephen E Arnold, May 29, 2025

A Grok Crock: That Dog Ate My Homework

May 29, 2025

Just the dinobaby operating without Copilot or its ilk.

I think I have heard Grok (a unit of xAI I think) explain that outputs have been the result of a dog eating the code or whatever. I want to document these Grok Crocks. Perhaps I will put them in a Grok Pot and produce a list of recipes suitable for middle school and high school students.

The most recent example of “something just happened” appears in “Grok Says It’s ‘Skeptical’ about Holocaust Death Toll, Then Blames Programming Error.” Does this mean that smart software is programming Grok? If so, the explanation should be worded, “Grok hallucinates.” If a human wizard made a programming error, then the company should make a statement that quality control will become Job One. That worked for Microsoft until Copilot became the go-to task.

The cited article stated:

Grok said this response was “not intentional denial” and instead blamed it on “a May 14, 2025, programming error.” “An unauthorized change caused Grok to question mainstream narratives, including the Holocaust’s 6 million death toll, sparking controversy,” the chatbot said. Grok said it “now aligns with historical consensus” but continued to insist there was “academic debate on exact figures, which is true but was misinterpreted.” The “unauthorized change” that Grok referred to was presumably the one xAI had already blamed earlier in the week for the chatbot’s repeated insistence on mentioning “white genocide” (a conspiracy theory promoted by X and xAI owner Elon Musk), even when asked about completely unrelated subjects.

I am going to steer clear of the legality of these statements and the political shadows these Grok outputs cast. Instead, let me offer a few observations:

- I use a number of large language models. I have used Grok exactly twice. The outputs had nothing of interest for me. I asked, “Can you cite X.com messages.” The system said, “Nope.” I tried again after Grok 3 became available. Same answer. Hasta la vista, Grok.

- The training data, the fancy math, and the algorithms determine the output. Since current LLMs rely on Google’s big idea, one would expect the outputs to be similar. Outlier outputs like these alleged Grokings are a bit of a surprise. Perhaps someone at Grok could explain exactly why these outputs are happening. I know dogs could eat homework. The event is highly unlikely in my experience, although I had a dog which threw up on the typewriter I used to write a thesis.

- I am a suspicious person. Grok makes me suspicious. I am not sure marketing and smarmy talk can reduce my anxiety about Grok providing outlier content to middle school, high school, college, and “I don’t care” adults. Weaponized information in my opinion is just that: a weapon. Dangerous stuff.

Net net: Is the dog eating homework one of the Tesla robots? If so, speak with the developers, please. An alternative would be to use Claude 3.7 or Gemini to double check Grok’s programming.

Stephen E Arnold, May 29, 2025