Survival of SharePoint and the Big Bang Theory

May 5, 2015

The ebb and flow of SharePoint expansion and contraction can be described as a “big bang theory” of sorts. This cyclical pattern can be seen in many businesses, but Redmond Magazine helps readers see the cycle in SharePoint. Read more in their article, “The SharePoint Big Bang Theory.”

The article sums up the illustration:

“As Microsoft added capabilities to SharePoint over the years, and provided the flexibility to configure or customize its features to meet just about any business requirement, the success of the platform exploded . . . End users and administrators alike started thinking about their information architecture and information governance policies. Companies . . . began consolidating their efforts, and started to move their businesses toward a more structured content management strategy . . . [then] the rise of the enterprise social networks (ESNs) and cloud-based file sharing solutions have had (are having) a contracting effect on those intranet and structured collaboration plans. Suddenly end users seem to be totally in charge.”

There’s no doubt that SharePoint has learned to weather the turbulent changes of the last twenty years. In some ways, its adaptability is to be applauded. And yet, most users know the platform is not perfect. To stay attuned to what the next twenty years will bring, keep an eye on ArnoldIT.com. Stephen E. Arnold has made a career out of reporting on all things search, and his dedicated SharePoint feed distills the information down into an easily digestible format.

Emily Rae Aldridge, May 5, 2015

Sponsored by ArnoldIT.com, publisher of the CyberOSINT monograph

Indexing Rah Rah Rah!

May 4, 2015

Enterprise search is one of the most important features for enterprise content management systems, and there is a huge industry for designing and selling taxonomies. The key selling features for taxonomies are their diversity, accuracy, and quality. The categories within taxonomies make it easier for people to find their content, but Tech Target’s Search Content Management blog says there is room for improvement in the post: “Search-Based Applications Need The Engine Of Taxonomy.”

Taxonomies are used for faceted search, allowing users to expand and limit their search results. Faceted search gives users a selection of filters to change their results, including file type, key words, and more of the ever popular content categories. Users usually don’t access the categories directly; they are primarily used behind the scenes, and the aggregated results appear on the dashboard.
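As a rough illustration of how facets expand and limit results, here is a minimal Python sketch. The documents, the field names (`file_type`, `category`), and both helper functions are made up for this example; they are not taken from the Tech Target post or from any search product.

```python
from collections import Counter

# Hypothetical documents; the field names and values are illustrative only.
DOCS = [
    {"title": "Q1 sales report", "file_type": "pdf", "category": "Finance"},
    {"title": "Onboarding guide", "file_type": "docx", "category": "HR"},
    {"title": "Q2 sales forecast", "file_type": "xlsx", "category": "Finance"},
    {"title": "Benefits summary", "file_type": "pdf", "category": "HR"},
]

def facet_counts(docs, field):
    """Count how many documents fall under each value of one facet field."""
    return Counter(doc[field] for doc in docs)

def apply_facets(docs, **selected):
    """Keep only documents that match every selected facet value."""
    return [d for d in docs if all(d.get(f) == v for f, v in selected.items())]

# Limit the results with one facet, then narrow further with a second one.
finance_docs = apply_facets(DOCS, category="Finance")
pdf_finance = apply_facets(DOCS, category="Finance", file_type="pdf")
```

The counts from `facet_counts` are what a dashboard would typically show next to each category, while `apply_facets` stands in for the user clicking one or more of those categories.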

Taxonomies, however, take their information from more than what the user provides:

“We are now able to assemble a holistic view of the customer based on information stored across a number of disparate solutions. Search-based applications can also include information about the customer that was inferred from public content sources that the enterprise does not own, such as news feeds, social media and stock prices.”

Whether you know it or not, taxonomies are vital to enterprise search. Companies that have difficulty finding their content need to consider creating a taxonomy plan or investing in category lists from a proven company.

Whitney Grace, May 4, 2015

Sponsored by ArnoldIT.com, publisher of the CyberOSINT monograph

EnterpriseJungle Launches SAP-Based Enterprise Search System

May 4, 2015

A new enterprise search system startup is leveraging the SAP HANA Cloud Platform, we learn from “EnterpriseJungle Tames Enterprise Search” at SAP’s News Center. The company states that its goal is to make collaboration easier and more effective with a feature it is calling “deep people search.” Writer Susan Galer cites EnterpriseJungle Principal James Sinclair when she tells us:

“Using advanced algorithms to analyze data from internal and external sources, including SAP Jam, SuccessFactors, wikis, and LinkedIn, the applications help companies understand the make-up of its workforce and connect people quickly….

“Who Can Help Me is a pre-populated search tool allowing employees to find internal experts by skills, location, project requirements and other criteria which companies can also configure, if needed. The Enterprise Q&A tool lets employees enter any text into the search bar, and find experts internally or outside company walls. Most companies use the prepackaged EnterpriseJungle solutions as is for Human Resources (HR), recruitment, sales and other departments. However, Sinclair said companies can easily modify search queries to meet any organization’s unique needs.”

EnterpriseJungle users manage their company’s data through SAP’s Lumira dashboard. Galer shares Sinclair’s example of one company in Germany, which used EnterpriseJungle to match employees to appropriate new positions when it made a whopping 3,000 jobs obsolete. Though the software is now designed primarily for HR and data-management departments, Sinclair hopes the collaboration tool will permeate the entire enterprise.

Cynthia Murrell, May 4, 2015

Sponsored by ArnoldIT.com, publisher of the CyberOSINT monograph

A Binging Double Take

May 1, 2015

After you read this headline from Venture Beat, you will definitely be doing a double take: “ComScore: Bing Passes 20% Share In The US For The First Time.” Bing has been the punch line for search experts and IT professionals ever since it was deployed a few years ago. Anyone can attest that Bing is not the most accurate search engine, mostly due to it being a Microsoft product. Bing developers have been working to improve the search engine’s accuracy, and for the first time ever ComScore showed Bing above 20 percent: Google and Yahoo each fell 0.1 percentage points while Bing gained 0.3 percentage points, most likely stealing share from DuckDuckGo and other smaller search engines. Microsoft can proudly state that one in five searches is conducted on Bing.

The change comes after months of stagnation:

“For many months, ComScore’s reports showed next to no movement for each search service (a difference of 0.1 points or 0.2 points one way or the other, if that). A 0.3 point change is not much larger, but it does come just a few months after big gains from Yahoo. So far, 2015 is already a lot more exciting, and it looks like the search market is going to be worth paying close attention to.”

The article says that most search engine usage is driven by which Internet browser people use. Yahoo keeps telling people to move to Firefox and Google wants people to download Chrome. The browser and search engine rivalries continue, but Google still remains on top. How long will Bing be able to keep this bragging point?

Whitney Grace, May 1, 2015

Sponsored by ArnoldIT.com, publisher of the CyberOSINT monograph

Hoping to End Enterprise Search Inaccuracies

May 1, 2015

Enterprise search is limited by how well users tag their content and by the preloaded taxonomies. According to Tech Target’s Search Content Management blog, text analytics might be the key to turning around poor enterprise search performance: “How Analytics Engines Could Finally-Relieve Enterprise Pain.” Text analytics turns out to be only part of the solution. Someone had the brilliant idea to use text analytics to address classification issues in enterprise search, shifting search from being reactive to user input to being proactive about search queries.

In general, analytics search engines work like this:

“The first is that analytics engines don’t create two buckets of content, where the goal is to identify documents that are deemed responsive. Instead, analytics engines identify documents that fall into each category and apply the respective metadata tags to the documents. Second, people don’t use these engines to search for content. The engines apply metadata to documents to allow search engines to find the correct information when people search for it. Text analytics provides the correct metadata to finally make search work within the enterprise.”
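The tag-then-search flow in the quote can be sketched in a few lines of Python. This is only an illustration under loud assumptions: plain keyword matching stands in for real text analytics, and the taxonomy, the document texts, and the function names are all hypothetical.

```python
import re

# Hypothetical taxonomy: category name -> indicative terms (illustrative only).
TAXONOMY = {
    "contracts": {"agreement", "indemnify", "termination"},
    "finance": {"invoice", "revenue", "budget"},
}

def tag_document(text, taxonomy=TAXONOMY):
    """Return every category whose terms appear in the text, sorted by name."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return sorted(cat for cat, terms in taxonomy.items() if words & terms)

def build_index(docs):
    """Map each metadata tag to the ids of the documents that carry it."""
    index = {}
    for doc_id, text in docs.items():
        for tag in tag_document(text):
            index.setdefault(tag, []).append(doc_id)
    return index

docs = {
    "d1": "Termination clause of the supplier agreement",
    "d2": "Invoice totals against the annual budget",
    "d3": "Budget impact of the new licensing agreement",
}
index = build_index(docs)
```

Note that `d3` ends up under both tags rather than in a single responsive or non-responsive bucket, and that people would query `index` (the metadata) rather than the tagging step itself, which mirrors the two points the quote makes.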

Supposedly, they are fixing the tagging issue by removing the biggest cause for error: humans. Microsoft caught on to how much profit this could generate, so they purchased Equivio in 2014 and integrated the FAST Search platform into SharePoint. Since Microsoft is doing it, every other tech company will copy and paste their actions in time. Enterprise search is full of faults, but it has improved greatly. Big data trends have improved search quality, but tagging continues to be an issue. Text analytics search engines will probably be the newest big data field for development. Hint for developers: work on an analytics search product, launch it, and then it might be bought out.

Whitney Grace, May 1, 2015

Sponsored by ArnoldIT.com, publisher of the CyberOSINT monograph

Recorded Future: The Threat Detection Leader

April 29, 2015

The Exclusive Interview with Jason Hines, Global Vice President at Recorded Future

In my analyses of Google technology, despite the search giant’s significant technical achievements, Google has a weakness. That “issue” is the company’s comparatively weak time capabilities. Identifying the specific time at which an event took place or is taking place is a very difficult computing problem. Time is essential to understanding the context of an event.

This point becomes clear in the answers to my questions in the Xenky Cyber Wizards Speak interview, conducted on April 25, 2015, with Jason Hines, one of the leaders in Recorded Future’s threat detection efforts. You can read the full interview with Hines on the Xenky.com Cyber Wizards Speak site at the Recorded Future Threat Intelligence Blog.

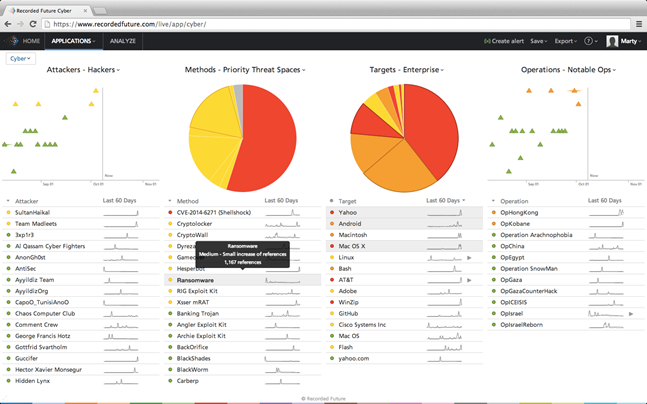

Recorded Future is a rapidly growing, highly influential start up spawned by a team of computer scientists responsible for the Spotfire content analytics system. The team set out in 2010 to use time as one of the linchpins in a predictive analytics service. The idea was simple: Identify the time of actions, apply numerical analyses to events related by semantics or entities, and flag important developments likely to result from signals in the content stream. The idea was to use time as the foundation of a next generation analysis system, complete with visual representations of otherwise unfathomable data from the Web, including forums, content hosting sites like Pastebin, social media, and so on.

A Recorded Future data dashboard makes it easy for law enforcement or intelligence professionals to identify important events and, with a mouse click, zoom to the specific data of importance to an investigation. (Used with the permission of Recorded Future, 2015.)
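To make the idea of using time as the foundation of analysis a little more concrete, here is a toy Python sketch. It is not Recorded Future’s method, which the interview does not describe at this level of detail. The entity name, the dates, the `flag_spikes` function, and the threshold `factor` are all assumptions chosen for illustration.

```python
from collections import Counter
from datetime import date

# Hypothetical (entity, day) mentions extracted from a content stream.
MENTIONS = [
    ("acme-corp", date(2015, 4, 20)),
    ("acme-corp", date(2015, 4, 21)),
    ("acme-corp", date(2015, 4, 22)),
    ("acme-corp", date(2015, 4, 23)),
    ("acme-corp", date(2015, 4, 23)),
    ("acme-corp", date(2015, 4, 23)),
    ("acme-corp", date(2015, 4, 23)),
]

def flag_spikes(mentions, entity, factor=3.0):
    """Flag days whose mention count is at least `factor` times the mean of earlier days.

    Only days with at least one mention are considered; silent days are ignored.
    """
    daily = Counter(day for name, day in mentions if name == entity)
    days = sorted(daily)
    flagged = []
    for i, day in enumerate(days[1:], start=1):
        baseline = sum(daily[d] for d in days[:i]) / i
        if daily[day] >= factor * baseline:
            flagged.append(day)
    return flagged

spikes = flag_spikes(MENTIONS, "acme-corp")
```

With this sample data, the only flagged day is April 23, when mentions jump from one per day to four. A real system would need far more careful time extraction and statistics than this day-level count.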

Five years ago, the tools for threat detection did not exist. Components like distributed content acquisition and visualization provided significant benefits to enterprise and consumer applications. Google, for example, built a multi-billion business using distributed processes for Web searching. Salesforce.com integrated visualization into its cloud services to allow its customers to “get insight faster.”

According to Jason Hines, one of the founders of Recorded Future and a former Google engineer, “When our team set out about five years ago, we took on the big challenge of indexing the Web in real time for analysis, and in doing so developed unique technology that allows users to unlock new analytic value from the Web.”

Recorded Future attracted attention almost immediately. In what was an industry first, Google and In-Q-Tel (the investment arm of the US government) invested in the Boston-based company. Threat intelligence is a field defined by Recorded Future. The ability to process massive real time content flows and then identify hot spots and items of interest to a matter allows an authorized user to identify threats and take appropriate action quickly. Fueled by commercial events like the security breach at Sony and cyber attacks on the White House, threat detection is now a core business concern.

The impact of Recorded Future’s innovations on threat detection was immediate. Traditional methods relied on human analysts. These methods worked but were and are slow and expensive. The use of Google-scale content processing combined with “smart mathematics” opened the door to a radically new approach to threat detection. Security, law enforcement, and intelligence professionals understood that sophisticated mathematical procedures combined with a real-time content processing capability would deliver a new and sophisticated approach to reducing risk, which is the central focus of threat detection.

In the exclusive interview with Xenky.com, the law enforcement and intelligence information service, Hines told me:

“Recorded Future provides information security analysts with real-time threat intelligence to proactively defend their organization from cyber attacks. Our patented Web Intelligence Engine indexes and analyzes the open and Deep Web to provide you actionable insights and real-time alerts into emerging and direct threats. Four of the top five companies in the world rely on Recorded Future.”

Despite the blue ribbon technology and support of organizations widely recognized as the most sophisticated in the technology sector, Recorded Future’s technology is a response to customer needs in the financial, defense, and security sectors. Hines said:

“When it comes to security professionals we really enable them to become more proactive and intelligence-driven, improve threat response effectiveness, and help them inform the leadership and board on the organization’s threat environment. Recorded Future has beautiful interactive visualizations, and it’s something that we hear security administrators love to put in front of top management.”

As the first mover in the threat intelligence sector, Recorded Future makes it possible for an authorized user to identify high risk situations. The company’s ability to help forecast and spotlight threats likely to signal a potential problem has obvious benefits. For security applications, Recorded Future identifies threats and provides data which allow adaptive perimeter systems like intelligent firewalls to proactively respond to threats from hackers and cyber criminals. For law enforcement, Recorded Future can flag trends so that investigators can better allocate their resources when dealing with a specific surveillance task.

Hines told me that financial and other consumer centric firms can tap Recorded Future’s threat intelligence solutions. He said:

“We are increasingly looking outside our enterprise and attempt to better anticipate emerging threats. With tools like Recorded Future we can assess huge swaths of behavior at a high level across the network and surface things that are very pertinent to your interests or business activities across the globe. Cyber security is about proactively knowing potential threats, and much of that is previewed on IRC channels, social media postings, and so on.”

In my new monograph CyberOSINT: Next Generation Information Access, Recorded Future emerged as the leader in threat intelligence among the 22 companies offering NGIA services. To learn more about Recorded Future, navigate to the firm’s Web site at www.recordedfuture.com.

Stephen E Arnold, April 29, 2015

Retail Feels Internet Woes

April 29, 2015

Mobile Web sites, mobile apps, mobile search, mobile content: the list of Web-related material that has to be mobile-friendly goes on and on. Online retailers are being pressured to make their digital storefronts work for mobile users, because more people are using their smartphones and tablets than standard desktop and laptop computers. It might seem easy to design an app that people can download for all of their shopping needs, but according to Easy Ask things are not that simple: “Internet Retailer Reveals Mobile Commerce Conversion Troubles.”

The article reveals that research conducted by Spreadshirt CTO Guido Laures shows that while there is a high demand for mobile friendly commerce applications and Web sites, very few people are actually purchasing products through these conduits. Why? The problem relates to the lack of spontaneous browsing and one of the iPhone 6’s main selling features: a big screen.

“While mobile-friendly responsive designs and easier mobile checkouts are cited as inhibitors to mobile commerce conversion, an overlooked and more dangerous problem is earlier in the shopping process. Before they can buy, customers first need to find the product they want. Small screen sizes, clumsy typing and awkward scrolling gestures render traditional search and navigation useless on a smartphone.”

Easy Ask says that these problems can be resolved by using a natural language search application over the standard keyword search tool. It says that:

“A keyword search engine leaves you prone to misunderstanding different words and returning a wide swath of products that will frustrate your shoppers and continue you down the path of poor mobile customer conversion.”

Usually natural language voice search tools misunderstand words and return funny phrases. The article is a marketing tool to highlight the key features of Easy Ask technology, but it does make some key observations about mobile shopping habits.

Whitney Grace, April 29, 2015

Sponsored by ArnoldIT.com, publisher of the CyberOSINT monograph

Cerebrant Discovery Platform from Content Analyst

April 29, 2015

A new content analysis platform boasts the ability to find “non-obvious” relationships within unstructured data, we learn from a write-up hosted at PRWeb, “Content Analyst Announces Cerebrant, a Revolutionary SaaS Discovery Platform to Provide Rapid Insight into Big Content.” The press release explains what makes Cerebrant special:

“Users can identify and select disparate collections of public and premium unstructured content such as scientific research papers, industry reports, syndicated research, news, Wikipedia and other internal and external repositories.

“Unlike alternative solutions, Cerebrant is not dependent upon Boolean search strings, exhaustive taxonomies, or word libraries since it leverages the power of the company’s proprietary Latent Semantic Indexing (LSI)-based learning engine. Users simply take a selection of text ranging from a short phrase, sentence, paragraph, or entire document and Cerebrant identifies and ranks the most conceptually related documents, articles and terms across the selected content sets ranging from tens of thousands to millions of text items.”

We’re told that Cerebrant is based on the company’s prominent CAAT machine learning engine. The write-up also notes that the platform is cloud-based, making it easy to implement and use. Content Analyst launched in 2004, and is based in Reston, Virginia, near Washington, DC. They also happen to be hiring, in case anyone here is interested.

Cynthia Murrell, April 29, 2015

Sponsored by ArnoldIT.com, publisher of the CyberOSINT monograph

Research Like the Old School

April 24, 2015

There was a time before the Internet when, if you wanted to research something, you had to go to the library, dig through old archives, and check encyclopedias for quick facts. While it seems that all information is at your disposal with a few keystrokes, search results are often polluted with paid ads, and unless your information comes from a trusted source, you can’t count it as fact.

LifeHacker, like many of us, knows that if you want to get the truth behind a topic, you have to do some old school sleuthing. The article “How To Research Like A Journalist When The Internet Doesn’t Deliver” drills down into tried and true research methods that will continue to withstand the sands of time or the wrecking ball (depending on how long libraries remain brick and mortar buildings).

The article recommends using librarians as resources and even petitioning government agencies and filing FOIA requests for information. Its claim that some information is only available in person or strictly to other librarians is both true and false. Many libraries are trying to digitize their information but are limited by their budgets. Also, unless the librarian works in a top secret archive, most of the information is readily available to anyone, with or without an MLS degree.

Old school interviews are always great, especially when you have to cite a source. You can always cite your own interview and verify it came straight from the horse’s mouth. One useful way to team the Internet with interviews is using it to track down the interviewees.

Lastly, this is the best piece of advice from the article:

“Finally, once you’ve done all of this digging, visited government agencies, libraries, and the offices of the people with the knowledge you need, don’t lose it. Archive everything. Digitize those notes and the recordings of your interviews. Make copies of any material you’ve gotten your hands on, then scan them and archive them safely.”

The Internet is full of false information. Putting a little more effort into verifying what you find will make the information safer to use or claim as the truth.

These tips are useful, even if a little obvious, but they still fail to mention the important step that all librarians know: doing the actual footwork and using proper search methods to find things.

Whitney Grace, April 24, 2015

Sponsored by ArnoldIT.com, publisher of the CyberOSINT monograph