Errors? AI Makes Accuracy Irrelevant

April 4, 2025

This blog post is the work of a humanoid dino baby. If you don’t know what a dinobaby is, you are not missing anything.

We have poked around some AI services. A few are very close to being dark patterns that want to become like Al Capone or, more accurately, AI Capone. Am I thinking of 1min.ai? Others just try to sound so friendly when outputting wackiness. Am I thinking about the Softies or ChatGPT? I don’t know.

I did read “AI Search Has A Citation Problem.” The main point is that AI struggles with accuracy. One can gild the lily and argue that it makes work faster. I won’t dispute that quick, incorrect output may speed up some tasks. However, the write up points out:

Premium chatbots provided more confidently incorrect answers than their free counterparts.

I think this means that paying money does not deliver accuracy, judgment, or useful information. I would agree.

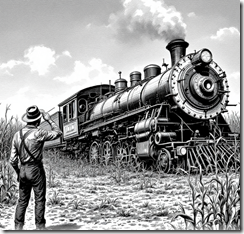

A farmer wonders how the steam engine ended up in his corn field. How did smart software get involved in deciding that distorted information was a useful output for students and workers? Thanks, You.com. The train was supposed to be on its side, but by producing an image that differs from my prompt, you have done the job. Close enough for horseshoes, right?

The write up also points out:

Generative search tools fabricated links and cited syndicated and copied versions of articles.

I agree.

Here’s a useful finding if one accepts the data in the write up as close enough for horseshoes:

Overall, the chatbots often failed to retrieve the correct articles. Collectively, they provided incorrect answers to more than 60 percent of queries. Across different platforms, the level of inaccuracy varied, with Perplexity answering 37 percent of the queries incorrectly, while Grok 3 had a much higher error rate, answering 94 percent of the queries incorrectly.

The alleged error rate of Grok is in line with my experience. I try to understand, but when spaceships explode, people set Cybertrucks on fire, and the cratering of Tesla stock causes my widowed neighbor to cry, I see a pattern of missing the mark. Your mileage or wattage may vary, of course.

The write up points out:

Platforms often failed to link back to the original source

For the underlying data and more academic explanations, please, consult the original article.

I want to shift gears and make some observations about the issues raised by the data in the article and by my team’s experience with smart software. Here we go, gentle reader:

- People want convenience or what I call corner cutting. AI systems eliminate the old-fashioned effort required to verify information. Grab-and-go information, like fast food, may not be good for the decision-making life.

- The information floating around about a Russian content mill pumping out thousands of weaponized news stories a day may be half wrong. Nevertheless, it makes clear that promiscuous and non-thinking AI systems can ingest weaponized content and spit it out without a warning or even recognizing baloney when one expects a slab of Wagyu beef.

- Integrating self-driving AI into autonomous systems is probably not yet a super great idea. The propaganda about Chinese wizards doing this party trick is interesting, but it is just a tad risky when something kinetic is involved.

Where are we? Answering this question is a depressing activity. Companies like Microsoft are forging ahead with smart software helping people do things in Excel. Google is allowing its cheese-obsessed AI to write email responses. Outfits like BoingBoing are embracing questionable services like a speedy AI Popeil pocket fisherman as part of their money-making efforts. And how about those smart Anduril devices? Do they actually work? I don’t want to let one buzz me.

The AI crazy train is now going faster than the tracks permit. How does one stop a speeding autonomous train? I am going to stand back because that puppy is going to fall off the tracks and friction will do the job. Whoo. Whoo.

Stephen E Arnold, April 4, 2025