Ah, the Warmth of the Old, Friendly Internet. For Real?

January 30, 2025

I never thought I’d be looking back at the Internet of yesteryear nostalgically. I hated the sound of dial-up, and the instant messaging sounds were annoying. AOL also had a knack for clogging up machines with browsing history, making everything slow. Did I mention YouTube wasn’t around? Some things were better in the past, including parts of the Internet, but not all of it.

We also like to think that the Internet was “safer” and didn’t have predatory content. Wrong! Since the Internet’s inception, parents were worried about their children being the victims of online predators. Back then it was easier to remain anonymous, however. El País agrees that the Internet was just as bad as it is today: “‘The internet Hasn’t Made Us Bad, We Were Already Like That’: The Mistake Of Yearning For The ‘Friendly’ Online World Of 20 Years Ago.”

It’s strange to see artists using Y2K era technology as art pieces and throwbacks. It’s a big eye-opener to aging Millennials, but it also places these items on par with the nostalgia of all past eras. All generations love the stuff from their youth and proclaim it to be superior. As the current youth culture and even those middle-aged are obsessed with retro gear, a new slang term has arisen: “cozy tech.”

“‘Cozy tech’ is the label that groups together content about users sipping from a steaming cup, browsing leisurely or playing nice, simple video games on devices with smooth, ergonomic designs. It’s a more powerful image than it seems because it conveys something we lost at some point in the last decade: a sense of control; the idea that it is possible to enjoy technology in peace again.”

They’re conflating the idea with reading a good book or listening to music on a record player. These “cozy tech” people are forgetting about the dangers of chatrooms or posting too much information on the Internet. Dare we bring up Omegle without drifting down channels of pornography?

Check out this statement:

“Mayte Gómez concludes: ‘We must stop this reactionary thinking and this fear of technology that arises from the idea that the internet has made us bad. That is not true: we were already like that. If the internet is unfriendly it is because we are becoming less so. We cannot perpetuate the idea that machines are entities with a will of their own; we must take responsibility for what happens on the internet.’”

Sorry, Mayte, I disagree. Humans have always been unfriendly. We now have a better record of it.

Whitney Grace, January 30, 2025

Sonus, What Is That Painful Sound I Hear?

January 21, 2025

Sonos CEO Swap: Are Tech Products Only As Good As Their Apps?

Lawyers and accountants leading tech firms, please, take note: The apps customers use to manage your products actually matter. Engadget reports, “Sonos CEO Patrick Spence Falls on his Sword After Horrible App Launch.” Reporter Lawrence Bonk writes:

“Sonos CEO Patrick Spence is stepping down from the company after eight years on the job, according to reporting by Bloomberg. This follows last year’s disastrous app launch, in which a redesign was missing core features and was broken in nearly every major way. The company has tasked Tom Conrad to steer the ship as interim CEO. Conrad is a current member of the Sonos board, but was a co-founder of Pandora, VP at Snap and product chief at, wait for it, the short-lived video streaming platform Quibi. He also reportedly has a Sonos tattoo. The board has hired a firm to find a new long-term leader.”

Conrad told employees that “we” let people down with the terrible app. And no wonder. Bonk explains:

“The decision to swap leadership comes after months of turmoil at the company. It rolled out a mobile app back in May that was absolutely rife with bugs and missing key features like alarms and sleep timers. Some customers even complained that entire speaker systems would no longer work after updating to the new app. It was a whole thing.”

Indeed. And despite efforts to rekindle customer trust, the company is paying the price of its blunder. Its stock price has fallen about 13 percent, revenue tanked 16 percent in the fiscal fourth quarter, and it has laid off more than 100 workers since August. Chief Product Officer Maxime Bouvat-Merlin is also leaving the firm. Will the CEO swap help Sonos recover? As he takes the helm, Conrad vows a return to basics. At the same time, he wants to expand Sonos’ products. Interesting combination. Meanwhile, the search continues for a more permanent replacement.

Cynthia Murrell, January 21, 2025

AI Doom: Really Smart Software Is Coming So Start Being Afraid, People

January 20, 2025

Prepared by a still-alive dinobaby.

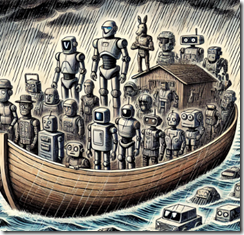

The essay “Prophecies of the Flood” gathers several comments about software that thinks and decides without any humans fiddling around. The “flood” metaphor evokes the streams of money about which money people fantasize. The word “flood” also evokes the Hebrew Bible’s account of a divinely initiated cataclysm intended to cleanse the Earth of widespread wickedness. Plus, one cannot overlook the image of small towns in North Carolina inundated in mud and debris from a very bad storm.

When the AI flood strikes as a form of divine retribution, will the modern ark be filled with humans? Nope. The survivors will be those smart agents infused with even smarter software. Tough luck, humanoids. Thanks, OpenAI, I knew you could deliver art that is good enough.

To sum up: A flood is bad news, people.

The essay states:

the researchers and engineers inside AI labs appear genuinely convinced they’re witnessing the emergence of something unprecedented. Their certainty alone wouldn’t matter – except that increasingly public benchmarks and demonstrations are beginning to hint at why they might believe we’re approaching a fundamental shift in AI capabilities. The water, as it were, seems to be rising faster than expected.

The signs of darkness, according to the essay, include:

- Rising water in the generally predictable technology stream in the park populated with ducks

- Agents that “do” something for the human user or another smart software system. To humans with MBAs, art history degrees, and programming skills honed at a boot camp, the smart software is magical. Merlin wears a gray T shirt, sneakers, and faded denims

- Nifty art output in the form of images and — gasp! — videos.

The essay concludes:

The flood of intelligence that may be coming isn’t inherently good or bad – but how we prepare for it, how we adapt to it, and most importantly, how we choose to use it, will determine whether it becomes a force for progress or disruption. The time to start having these conversations isn’t after the water starts rising – it’s now.

Let’s assume that I buy this analysis and agree with the notion “prepare now.” How realistic is it that the United Nations, a couple of superpowers, or a motivated individual can have an impact? Gentle reader, doom sells. Examples include The Big Short: Inside the Doomsday Machine, The Shifts and the Shocks: What We’ve Learned – and Have Still to Learn – from the Financial Crisis, and Too Big to Fail: How Wall Street and Washington Fought to Save the Financial System from Crisis – and Themselves, and others, many others.

Have these dissections of problems had a material effect on regulators, elected officials, or the people in the bank down the street from your residence? Answer: Nope.

Several observations:

- Technology doom works because innovations have positive and negative impacts. To make technology exciting, no one is exactly sure what the knock-on effects will be. Therefore, doom is coming along with the good parts.

- Taking a contrary point of view creates opportunities to engage with those who want to hear something different. Insecurity is a powerful sales tool.

- Sending messages about future impacts pulls clicks. Clicks are important.

Net net: The AI revolution is a trope. Never mind that after decades of researchers’ work, a revolution has arrived. Lionel Messi allegedly said, “It took me 17 years to become an overnight success.” (Mr. Messi is a highly regarded professional soccer player.)

Will the ill-defined technology kill humans? Answer: Who knows. Will humans using ill-defined technology like smart software kill humans? Answer: Absolutely. Can “anyone” or “anything” take an action to prevent AI technology from rippling through society? Answer: Nope.

Stephen E Arnold, January 20, 2025

IBM Tells Google, Mine Is Bigger! But Can the Kookaburra Eat the Reptile Googzilla?

January 17, 2025

Prepared by a still-alive dinobaby.

The “who has the biggest nose” contest is in gear for 2025. I read “IBM Will Release the Largest Ever Quantum Computer in 2025.” Forget the Nvidia wizard’s pushing usable quantum computing into the far future. Forget the Intel Horse Features (sorry, horse collar) statements. Forget the challenge of making a programming language which makes it possible to create an application for an Oura smart ring. Bigger is where it is at in the quantum marketing world.

The write up reports with the repeatability of most research projects:

… The company’s largest quantum chip, called Condor, has 1121 qubits, though IBM’s Jay Gambetta says the average user of its quantum computing services only works with 100 qubits… “The only way to get quantum advantage is to combine different components.” This is an issue of engineering as much as it is of quantum physics – as the number of qubits increases it becomes more practically difficult to fit all of them and the quantum computer’s input and output wires onto a single chip.

So what is IBM doing? Bolting stuff together, thank you very much.

But IBM is thinking beyond the Condor. The next innovation from IBM is Kookaburra. (This is a bird whose call is the sound of human laughter. I must come clean. When I read about this quantum achievement from IBM I did laugh. When I learned the chip’s name, I chuckled again. To be fair, I laughed more whenever I encountered the cognitive whiz kid Watson. But Kookaburra is a hoot, especially for those who grew up in Australia or New Guinea.)

The write up says:

The task now is to increase that total number while making sure the qubits don’t make more errors than when the chips are kept separate.

Yep, bolting stuff together works great.

I am eagerly awaiting Google’s response because it perceives itself as quantumly supreme. I think when I laugh at the content marketing these big technology outfits output, I sound like a Kookaburra. (Did you know that a Kookaburra can weigh up to a half a pound and that they are carnivorous? This was an attribute when IBM was a much more significant player in the computer market. Kookaburras eat mice and snakes. Yeah, the Kookaburra.)

Stephen E Arnold, January 17, 2025

Beating on Quantum: Thump, Clang

January 13, 2025

A dinobaby produced this post. Sorry. No smart software was able to help the 80 year old this time around.

The is-it-news-or-is-it-PR service Benzinga published on January 13, 2025, “Quantum Computing Stocks Tumble after Mark Zuckerberg Backs Nvidia CEO Jensen Huang’s Practical Comments.” I love the “practical.” Quantum computing is similar to the modular home nuclear reactor from my point of view. These are interesting topics to discuss, but when it comes to convincing a home owners’ association to allow the installation of a modular nuclear reactor or squeezing the gizmos required to make quantum computing sort of go in a relatively reliable way, uh-uh.

Is this a practical point of view? No. The reason is that most people have zero idea of what is required to get a quantum computer or a quantum anything to work. The room for the demonstration is usually a stage set. The cooling, the electronics, and the assorted support equipment are, how shall I phrase it, bulky. That generator outside the quantum lab is not for handling a power outage. The trailer-sized box is pumping volts into the quantum set up.

The write up explains:

comments made by Meta CEO Mark Zuckerberg and Nvidia Corp. CEO Jensen Huang, who both expressed caution regarding the timeline for quantum computing advancements.

Caution. Good word.

The remarks by Zuckerberg and Huang have intensified concerns about the future of quantum computing. Earlier, during Nvidia’s analyst day, Huang expressed optimism about quantum computing’s potential but cautioned that practical applications might take 15 to 30 years to materialize. This outlook has led to a sharp decline in quantum computing stocks. Despite the cautious projections, some industry insiders have countered Huang’s views, arguing that quantum-based innovations are already being integrated into the tech ecosystem. Retail investors have shown optimism, with several quantum computing stocks experiencing significant growth in recent weeks.

I know of a person who lectures about quantum. I have heard that the theme of these presentations is that quantum computing is just around the corner. Okay. Google is quantumly supreme. Intel has its super technology called Horse Ridge or Horse Features. IBM makes quantum squeaks.

I want research to continue, but it is interesting to me that two big technology wizards want to talk about practical quantum computing. One does the social media thing unencumbered by expensive content moderation and the other is pushing smart software enabling technology forward.

Neither wants the quantum hype to supersede the marketing of either of these wizards’ money machines. I love “real news”, particularly when it presents itself as practical. May I suggest you place your order for a D-Wave or an Enron egg nuclear reactor. Practical.

Stephen E Arnold, January 13, 2025

The Brain Rot Thing: The 78 Wax Record Is Stuck Again

January 10, 2025

This is an official dinobaby post.

I read again about brain rot. I get it. Young kids play with a mobile phone. They get into social media. They watch TikTok. They discover the rich, rewarding world of Telegram online gambling. These folks don’t care about reading. Period. I get it.

But the Financial Times wants me to really get it. “Social Media, Brain Rot and the Slow Death of Reading” says:

Social media is designed to hijack our attention with stimulation and validation in a way that makes it hard for the technology of the page to compete.

This is news? Well, what about this statement:

The easy dopamine hit of social media can make reading feel more effortful by comparison. But the rewards are worth the extra effort: regular readers report higher wellbeing and life satisfaction, benefiting from improved sleep, focus, connection and creativity. While just six minutes of reading has been shown to reduce stress levels by two-thirds, deep reading offers additional cognitive rewards of critical thinking, empathy and self-reflection.

Okay, now tell that to the people in line at the grocery store or the kids in a high school class. Guess what? The joy of reading is not part of the warp and woof of 2025 life.

The news flash is that traditional media like the Financial Times long for the time when everyone read. Excuse me. When was that time? People read in school so they can get out of school and not read. Books still sell, but the avid readers are becoming dinobabies. Most of the dinobabies I know don’t read too much. My wife’s bridge club reads popular novels, but nonfiction is a nonstarter.

What does the FT want people to do? Here’s a clue:

Even if the TikTok ban goes ahead in the US, other platforms will pop up to replace it. So in 2025, why not replace the phone on your bedside table with a book? Just an hour a day clawed back from screen time adds up to about a book a week, placing you among an elite top one per cent of readers. Melville (and a Hula-Hoop) are optional.

Lamenting and recommending is not going to change what the flows of electronic information have done. There are more insidious effects racing down the information highway. Those who will be happiest will be those who live in ignorance. People with some knowledge will be deeply unhappy.

Will the FT want dinosaurs to roam again? Sure. Will the FT write about them? Of course. Will the impassioned words change what’s happened and will happen? Nope. Get over it, please. You may as well long for the days when Madame Tussaud’s Wax Museum and you were part of the same company.

Stephen E Arnold, January 10, 2025

GitLab Identifies a Sooty Pot and Does Not Offer a Fix

January 9, 2025

This is an official dinobaby post. No smart software involved in this blog post.

GitLab’s Sabrina Farmer is a sharp-thinking person. Her “Three Software Development Challenges Slowing AI Progress” articulates an issue often ignored or just unknown. Specifically, according to her:

AI is becoming an increasingly critical component in software development. However, as is the case when implementing any new tool, there are potential growing pains that may make the transition to AI-powered software development more challenging.

Ms. Farmer is being kind and polite. I think she is suggesting that the nest with the AI eggs from the fund-raising golden goose has become untidy. Perhaps, I should use the word “unseemly”?

She points out three challenges which I interpret as the equivalent of one of those famously hard math problems like cracking the Riemann Hypothesis or the Poincaré Conjecture. These are:

- AI training. Yeah, marketers write about smart software. But a relatively small number of people fiddle with the knobs and dials on the training methods and the rat’s nests of computational layers that make life easy for an eighth grader writing an essay about Washington’s alleged crossing of the Delaware River whilst standing up in a boat rowed by hearty, cheerful lads. Big demand, lots of pretenders, and very few 10X coders and thinkers are available. AI Marketers? A surplus because math and physics are hard and art history and social science are somewhat less demanding on today’s thumb typers.

- Tools, lots of tools. Who has time to keep track of every “new” piece of smart software tooling? I gave up as the hyperbole got underway in early 2023. When my team needs to do something specific, they look / hunt for possibilities. Testing is required because smart software often gets things wrong. Some call this “innovation.” I call it evidence of the proliferation of flawed or cute software. One cannot machine titanium with lousy tools.

- Management measurements. Give me a break, Ms. Farmer. Managers are often evidence of the Peter Principle, or they are accountants or lawyers. How can one measure what one does not use, understand, or create? Those chasing smart software are not making spindles for a wooden staircase. The task of creating smart software that has a shot at producing money is neither art nor science. It is a continuous process of seeing what works, fiddling, and fumbling. You want to measure this? Good luck, although blue chip consultants will gladly create a slide deck to show you the ropes and then churn out a spectacular invoice for professional services.

One question: Is GitLab part of the problem or part of the solution?

Stephen E Arnold, January 9, 2025

Why Buzzwords Create Problems. Big Problems, Right, Microsoft?

January 7, 2025

This is an official dinobaby post. No smart software involved in this blog post.

I read an essay by Steven Sinofsky. He worked at Microsoft. You can read about him in Wikipedia because he was a manager possibly associated with Clippy. He wrote an essay called “225. Systems Ideas that Sound Good But Almost Never Work—‘Let’s just…’” The write up is about “engineering patterns that sound good but almost never work as intended.”

I noticed something interesting about his explanation of why many software solutions go off the rails, fail to work, create security opportunities for bad actors associated with entities not too happy with the United States, and cause ongoing headaches for hundreds of millions of people.

Here is a partial list of the words and bound phrases from his essay:

Add an API

Anomaly detection

Asynchronous

Cross platform

DSL

Escape to native

Hybrid parallelism

Multi-master writes

Peer to peer

Pluggable

Sync the data

What struck me about this essay is that it reveals something I think is important about Microsoft and probably other firms tapping the expertise of the author; that is, the jargon drives how the software is implemented.

I am not certain that my statement is accurate for software in general. But for this short blog post, let’s assume that it applies to some software (and I am including Microsoft’s own stellar solutions as well as products from other high profile and wildly successful vendors). With the ground rules established, I want to offer several observations about this “jargon drives the software engineering” assertion.

First, the resulting software is flawed. Problems are not actually resolved. The problems are papered over with whatever the trendy buzzword says will work. The approach makes sense because actual problem solving may not be possible within a given time allocation, or a working solution may fail, which requires figuring out how to not fail again.

Second, the terms reveal that marketing think takes precedence over engineering think. Here’s what the jargon creators do. These sales oriented types grab terms that sound good and refer to an approach. The “team” coalesces around the jargon, and the jargon directs how the software is approached. Does hybrid parallelism “work”? Who knows, but it is the path forward. The manager says, “Let’s go team” and Clippy emerges or the weird opaqueness of the “ribbon.”

Third, the jargon shaped by art history majors and advertising mavens defines the engineering approach. The more successful the technical jargon, the more likely those people who studied Picasso’s colors or Milton’s Paradise Regained define the technical frame in which a “solution” is crafted.

How good is software created in this way? Answer: Good enough.

How reliable is software created in this way? Answer: Who knows until someone like a paying customer actually uses the software.

How secure is the software created in this way? Answer: It is not secure as the breaches of the Department of Treasury, the US telecommunications companies, and the mind boggling number of security lapses in 2024 prove.

Net net: Engineering solutions based on jargon are not intended to deliver excellence. The approach is simply “good enough.” Now we have some evidence that industry leaders realize the fact. Right, Clippy?

Stephen E Arnold, January 7, 2025

Code Graveyards: Welcome, Bad Actors

January 3, 2025

Did you know that the silos housing nuclear missiles are still run on systems from the 1950s-1960s? These systems use analog computers and code more ancient than some people’s grandparents. The manuals for this code are outdated and hard to find, except in archives and libraries that haven’t deaccessioned items for decades. There’s actually money to be made in these old systems and the Datosh Blog explains how: “The Hidden Risks of High-Quality Code.”

There are haunted graveyards of code in more than nuclear silos. They exist in enterprise systems and came into existence in many ways: created by former IT employees, made for a specific project, or out-of-the-box code that no one wants to touch in case it causes system implosion. Bureaucratic layers and indecisive mentalities trap these codebases in limbo and they become the haunted graveyards. Not only are they haunted by ghosts of coding projects past, the graveyards pose existential risks.

The existential risks are magnified when red tape and attitudes prevent companies from progressing. Haunted graveyards are the root causes of technical debt, such as accumulated inefficiencies, expensive rewrites, and an inability to adapt to change.

Tech developers can avoid technical debt by prioritizing simplicity, especially by matching a team’s skill level. Being active in knowledge transfer is important, because it means system information is shared between developers beyond basic SOP. Also use self-documenting code and understandable patterns for technology, and don’t underestimate the value of team work and feedback. Haunted graveyards can be avoided:

“A haunted graveyard is not always an issue of code quality, but may as well be a mismatch between code complexity and the team’s ability to grapple with it. As a consultant, your goal is to avoid these scenarios by aligning your work with the team’s capabilities, transferring knowledge effectively, and ensuring the team can confidently take ownership of your contributions.”
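What “self-documenting code” looks like in practice may help here. The following is a minimal Python sketch under stated assumptions: the function names, the `Account` type, and the 30-day limit are all hypothetical illustrations, not anything taken from the Datosh post.

```python
from dataclasses import dataclass

# Hypothetical example: all names and the 30-day limit are illustrative assumptions.

# Graveyard candidate: the reader must guess what d, t, and 30 mean.
def chk(d, t):
    return [x for x in d if t - x[1] > 30]


@dataclass(frozen=True)
class Account:
    owner: str
    last_login_day: int  # day number counted from some agreed starting point


INACTIVITY_LIMIT_DAYS = 30


def find_inactive_accounts(accounts: list[Account], today: int) -> list[Account]:
    """Return the accounts whose last login is older than the inactivity limit."""
    return [
        account
        for account in accounts
        if today - account.last_login_day > INACTIVITY_LIMIT_DAYS
    ]
```

Both functions apply the same filter. The second one can be handed to a new team member without a séance.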

Haunted graveyards are also huge opportunities for IT code consultants. Anyone with versatile knowledge, the right education/credentials, and chutzpah could establish themselves in this niche field. It’s perfect for a young 20-something with youthful optimism and capital to start a business in consulting for haunted graveyard systems. They will encounter data hoarders, though.

Whitney Grace, January 3, 2025

Technical Debt: A Weight Many Carry Forward to 2025

December 31, 2024

Do you know what technical debt is? It’s also called design debt and code debt. It refers to a development team prioritizing a project’s delivery over a functional product and the resulting consequences. Usually the project has to be redone. Data debt is a type of technical debt and it refers to the accumulated costs of poor data management that hinder decision-making and efficiency. Which debt is worse? The New Stack delves into that topic in: “Who’s the Bigger Villain? Data Debt vs. Technical Debt.”

Technical debt should only be adopted for short-term goals, such as meeting a release date, but it shouldn’t be the SOP. Data debt’s downside is that it results in poor data and manual management. It also reduces data quality, slows decision making, and increases costs. The pair seem indistinguishable but the difference is that with technical debt you can quit and start over. That’s not an option with data debt and the ramifications are bad:

“Reckless and unintentional data debt emerged from cheaper storage costs and a data-hoarding culture, where organizations amassed large volumes of data without establishing proper structures or ensuring shared context and meaning. It was further fueled by resistance to a design-first approach, often dismissed as a potential bottleneck to speed. It may also have sneaked up through fragile multi-hop medallion architectures in data lakes, warehouses, and lakehouses.”

The article goes on to recommend adopting early data modeling and explains how to restructure your current systems. You do that by drawing maps or charts of your data, then projecting where you want them to go. It’s called planning:

“To reduce your data debt, chart your existing data into a transparent, comprehensive data model that maps your current data structures. This can be approached iteratively, addressing needs as they arise — avoid trying to tackle everything at once.

Engage domain experts and data stakeholders in meaningful discussions to align on the data’s context, significance, and usage.

From there, iteratively evolve these models — both for data at rest and data in motion—so they accurately reflect and serve the needs of your organization and customers.

Doing so creates a strong foundation for data consistency, clarity, and scalability, unlocking the data’s full potential and enabling more thoughtful decision-making and future innovation.”

Isn’t this just good data, project, or organizational management? Charting is a basic tool taught in kindergarten. Why do people forget it so quickly?
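A first-pass chart of existing data need not be elaborate. As a rough Python sketch of the charting step quoted above, the snippet below inventories which fields appear in a set of records and which value types each field carries. The function names and the sample rows are hypothetical, and a real data model would capture far more (relationships, meaning, ownership).

```python
from collections import defaultdict


def chart_fields(records):
    """Map each field name to the set of value type names seen across the records."""
    field_types = defaultdict(set)
    for record in records:
        for field, value in record.items():
            field_types[field].add(type(value).__name__)
    return dict(field_types)


def find_inconsistent_fields(field_types):
    """List fields observed with more than one type: topics for the domain experts."""
    return sorted(name for name, types in field_types.items() if len(types) > 1)


# Hypothetical sample rows, invented for illustration only.
rows = [
    {"customer_id": 1, "signup": "2024-01-05", "region": "EU"},
    {"customer_id": "2", "signup": "2024-02-11"},
]

model = chart_fields(rows)
print(find_inconsistent_fields(model))
```

In this made-up sample, `customer_id` shows up as both a number and a string, which is the kind of small mismatch that compounds into data debt when no one writes it down.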

Whitney Grace, December 31, 2024