All I Want for Xmas Is Crypto: Outstanding Idea GenZ

December 24, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I wish I knew an actual GenZ person. I would love to ask, “What do you want for Christmas?” Because I am a dinobaby, I expect an answer like cash, a sweater, a new laptop, or a job. Nope, wrong.

According to the most authoritative source of real “news” to which I have access, the answer is crypto. “45% of Gen Z Wants This Present for Christmas—Here’s What Belongs on Your Gift List” explains:

[A] Visa survey found that 45% of Gen Z respondents in the United States would be excited to receive cryptocurrency as their holiday gift. (That’s way more than Americans overall, which was only 28%.)

Two geezers try to figure out what their grandchildren want for Xmas. Thanks, Qwen. Good enough.

Why? Here’s the answer from Jonathan Rose, CEO of BlockTrust IRA, a cryptocurrency-based individual retirement account (IRA) platform:

“Gen Z had a global pandemic and watched inflation eat away at the power of the dollar by around 20%. Younger people instinctively know that $100 today will buy them significantly less next Christmas. Asking for an asset that has a fixed supply, such as bitcoin, is not considered gambling to them—it is a logical decision…. We say that bull markets make you money, but bear markets get you rich. Gen Z wants to accumulate an asset that they believe will define the future of finance, at an affordable price. A crypto gift is a clear bet that the current slump is temporary while the digital economy is permanent.”

I like that line “a logical decision.”

The world of crypto is an interesting one.

Reader’s Digest explains how to obtain crypto. Here’s the explanation for a dinobaby like me:

One easy way to gift crypto is by using a major exchange or crypto-friendly trading app like Robinhood, Kraken or Crypto.com. Kraken’s app, for example, works almost like Venmo for digital assets. You buy a cryptocurrency—such as bitcoin—and send it to someone using a simple pay link. The recipient gets a text message, taps the link, verifies their account, and the crypto appears in their wallet. It’s a straightforward option for beginners.

What will those GenZ folks do with their funds? Gig tripping. No, I don’t know what that means.

Several observations:

- I liked getting practical gifts, and I like giving practical gifts. Crypto is not practical. It is, in my opinion, ideal for money laundering, not buying sweaters.

- GenZ does have an uncertain future. Those basic skill scores do not make someone like me eager to spend time with “units” from this cohort, and I am not sure I know how to speak to a GenZ entity. Is that why so many of these young people prefer talking to chatbots? Do dinobabies make them uncomfortable?

- When Reader’s Digest explains how to buy crypto, the good old days of a homey anecdote and a summary of an article from a magazine with a reading level above the sixth grade are officially over.

Net net: I am glad I am old.

Stephen E Arnold, December 24, 2025

No Phones, Boys Get Smarter. Yeah

December 11, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I am busy with a new white paper, but one of my team called this short item to my attention. Despite my dislike of interruptions, “School Cell Phone Bans and Student Achievement” sparked my putting down one thing and addressing this research study. No, I don’t know the sample size, and I did not investigate it. No, I don’t know what methods were used to parse the information and spit out the graphic, and I did not invest time to poke around.

Young females having lunch with their mobile phones in hand cannot believe the school’s football star now gets higher test scores. Thanks, Midjourney. Good enough.

The main point of the research report, in my opinion, is to provide proof positive that mobile phones in classrooms interfere with student learning. Now, I don’t know about you, but my reaction is, “You did not know that?” I taught for a mercifully short time before I dropped out of my Ph.D. program and took a job at Halliburton’s nuclear unit. (Dick Cheney worked at Halliburton. Remember him?)

The write up from NBER.org states:

Two years after the imposition of a student cell phone ban, student test scores in a large urban school district were significantly higher than before.

But here’s the statement that caught my attention:

Test score improvements were also concentrated among male students (up 1.4 percentiles, on average) and among middle and high school students (up 1.3 percentiles, on average).

But what about the females? Why did this group not show “boy level” improvement? I don’t know much about young people in middle and high school. However, based on observation of young people at the Blaze discount pizza restaurant, females who seem to me to be in middle school and high school do three things simultaneously:

- Chatter excitedly with their friends

- Eat pizza

- Interact with their phones or watch what’s on the screen while doing the first two.

I think more research is needed. I know from some previous research that females outperform males academically up to a certain age. How does mobile phone usage impact this data, assuming those data which I dimly recall are or were accurate? Do mobile devices hold males back until the mobiles are removed and then, like magic, do these individuals manifest higher academic performance?

Maybe the data in the NBER report are accurate, but the idea that males — often prone to playing games, fooling around, and napping in class — benefit more from a mobile ban than females is interesting. The problem is I am not sure that the statement lines up with my experience.

But I am a dinobaby, just one that is not easily distracted unless an interesting, actual factual research report catches my attention.

Stephen E Arnold, December 11, 2025

MIT Iceberg: Identifying Hotspots

December 10, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I like the idea of identifying exposure hotspots. (I hate to mention this, but MIT did have a tie up with Jeffrey Epstein, did it not? How long did it take for that hotspot to be exposed? The dynamic duo linked in 2002 and wound down the odd couple relationship in 2017. That looks to me to be about 15 years.) Therefore, I approach MIT-linked research with some caution. Is this a good idea? Yep.

What is this iceberg thing? I won’t invoke the Titanic’s encounter with an iceberg, nor will I point to some reports about faulty engineering. I am confident had MIT been involved, that vessel would probably be parked in a harbor, serving as a museum.

I read “The Iceberg Index: Measuring Skills-centered Exposure in the AI Economy.” You can too. The paper is free at least for a while. It also has 10 authors who generated 21 pages to point out that smart software is chewing up jobs. Of course, this simple conclusion is supported by quite a bit of academic fireworks.

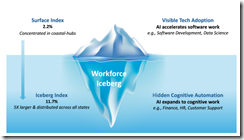

The iceberg chart which reminds me of the Dark Web charts. I wonder if Jeffrey Epstein surfed the Dark Web while waiting for a meet and greet at MIT? The source for this image is MIT or possibly an AI system helping out the MIT graphic artist humanoid.

I love these charts. I find them eye catching and easily skippable.

Smart software makes up stuff, and it appears to have created some challenges for teachers, college professors (except those laboring in Jeffrey Epstein’s favorite grove of academe, of course), and people looking for jobs. Despite these shortcomings, the as-is smart software can eliminate about 10 to 11 percent of here-and-now jobs. The good news is that 90 percent of the workers can wait for AI to get better and then eliminate another chunk of jobs. For those who believe that technology just gets better and better, the number of jobs for humanoids is likely to be gnawed and spat out for the foreseeable future.

I am not going to cause the 10 authors to hire SEO spam shops in Africa to make my life miserable. I will suggest, however, that there may be what I call de-adoption in the near future. The idea is that an organization is unhappy with the cost / value for its AI installation. A related factor is that some humans in an organization may introduce some work flow friction. The actions can range from griping about services interrupting work like Microsoft’s enterprise Copilot to active sabotage. People can fake being on a work related video conference, and I assume a college graduate (not from MIT, of course) might use this tactic to escape these wonderful face to face innovations. Nor will I suggest that AI may continue to need humans to deliver successful work task outcomes. Does an AI help me buy more of a product? Does AI boost your satisfaction with an organization pushing an AI helper on each of its Web pages?

And no academic paper (except those presented at AI conferences) is complete without some nifty traditional economic-type diagrams. Here’s an example for the industrious reader to check:

Source: the MIT Report. Is it my imagination, or are five of the six regression lines pointing down? What’s negative correlation? (Yep, dinobaby stuff.)

Several observations:

- This MIT paper is similar to blue chip consulting “thought pieces.” The blue chippers write to get leads and close engagements. What is the purpose of this paper? Reading posts on Reddit or LinkedIn makes clear that AI allegedly is replacing jobs or used as an excuse to dump expensive human workers.

- I identified a couple of issues I would raise if the 10 authors had trooped into my office when I worked at a big university and asked for comments. My hunch is that some of the 10 would have found me manifesting dinobaby characteristics even though I was 23 years old.

- The spate of AI indexes suggests that people are expressing their concern about smart software that makes mistakes by putting lipstick on what is a very expensive pig. I sense a bit of anxiety in these indexes.

Net net: Read the original paper. Take a look at your coworkers. Which will be the next to be crushed because of the massive investments in a technology that is good enough, overhyped, and perceived as the next big thing? (Measure the bigness by pondering the size of Meta’s proposed data center in the southern US of A.) Remember, please, MIT and Epstein Epstein Epstein.

Stephen E Arnold, December 10, 2025

ChatGPT: Smoked by GenX MBA Data

December 8, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

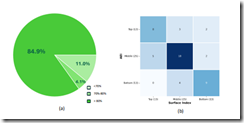

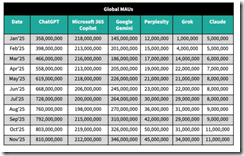

I saw this chart from Sensor Tower in several online articles. Examples include TechCrunch, LinkedIn, and a couple of others. Here’s the chart as presented by TechCrunch on December 5, 2025:

Yes, I know it is difficult to read. Complain to WordPress, not me, please.

The first of the seven columns is labeled Date, starting with January 2025. I am not sure if this is December 2024 data compiled in January 2025 or end of January 2025 data. Metadata would be helpful, but I am a dinobaby and this is a very GenX-type of Excel chart. The chart then presents what I think are mobile installs or some action related to the “event” captured when the Sensor Tower data receives a signal. I am not sure, and some remarks about how the data were collected would be helpful to a person disguised as a dinobaby. The column heads are not in alphabetical order. I assume the hassle of alphabetizing was too much work for whoever created the table. Here’s the order:

- ChatGPT

- Microsoft 365 Copilot

- Google Gemini

- Perplexity

- Grok

- Claude

The second thing I noticed was that the data do not reflect individual installs or uses. Thus, these data are of limited use to a dinobaby like me. Sure, I can see that ChatGPT’s growth slowed (if the numbers are on the money) and Gemini’s grew. But ChatGPT has a bigger base, and it may be finding it more difficult to attract installs or events, so its smaller percent increase seems to shout, “Bad news, Sam AI-Man.”
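The base effect behind that remark is simple arithmetic. Here is a minimal Python sketch with hypothetical figures (in millions, not Sensor Tower’s numbers, and not tied to any real app) showing the same absolute gain producing very different percent increases:

```python
def percent_growth(start: float, end: float) -> float:
    """Percent change from start to end; start must be nonzero."""
    return (end - start) * 100 / start


def absolute_growth(start: float, end: float) -> float:
    """Raw change from start to end, in the same units as the inputs."""
    return end - start


# Hypothetical monthly figures in millions. These are NOT Sensor Tower data.
big_base = (400.0, 410.0)    # a large, established app
small_base = (40.0, 50.0)    # a small, newer app

for label, (start, end) in (("big", big_base), ("small", small_base)):
    print(label, absolute_growth(start, end), percent_growth(start, end))
```

Both hypothetical apps gain 10 million, yet the big one posts 2.5 percent growth and the small one 25 percent. A percent column by itself cannot tell a reader which app is adding more users or events.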

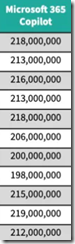

Then there is the issue of number of customers. We are now shifting from the impression some may have that these numbers represent individual humans to the fuzzy notion of app events. Why does this matter? Google and Microsoft have many more corporate and individual users than the other firms combined. If Google or Microsoft pushes or provides free access, those events will appeal to the user base and the number of “events” will jump. The data narrow Microsoft’s AI to Microsoft 365 Copilot. Google’s numbers are not narrowed. They may be, but there are no metadata to help me out. Here’s the Microsoft column:

As a result, the graph of the Microsoft 365 Copilot looks like this:

What’s going on from May to August 2025? I have no clue. Vacations maybe? Again, that old-fashioned metadata, footnotes, and some information about methodology would be helpful to a dinobaby. I mention the Microsoft data for one reason: None of the other AI systems listed in the Sensor Tower data table have this characteristic. Don’t users of ChatGPT, Google, et al. go on vacation? If one set of data for an important company has an anomaly, can one trust the other data? Those data are smooth.

If I look at the complete array of numbers, I expected to see more ones. There is some weird Statistics 101 “law” about digit frequency, and it seems to this dinobaby that it’s not being substantiated in the table. I can overlook how tidy the numbers are because why not round big numbers. It works for Fortune 1000 budgets and for many government agencies’ budgets.
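The “law” in question is presumably Benford’s law, which predicts that in many naturally occurring data sets the leading digit d appears with probability log10(1 + 1/d), so a 1 leads about 30 percent of the time. A minimal Python sketch of the check follows. The function names are hypothetical, the sample is powers of two (a classic Benford-conforming sequence) rather than the Sensor Tower table, and the usual caveat applies: Benford’s law fits data spanning several orders of magnitude, so a small table of rounded figures is thin evidence either way.

```python
import math
from collections import Counter


def benford_expected() -> dict[int, float]:
    """Share of leading digits 1-9 predicted by Benford's law: log10(1 + 1/d)."""
    return {d: math.log10(1 + 1 / d) for d in range(1, 10)}


def leading_digit(x: float) -> int:
    """First significant digit of a nonzero number, read from scientific notation."""
    return int(f"{abs(x):e}"[0])


def observed_shares(values) -> dict[int, float]:
    """Fraction of the nonzero values whose leading digit is 1..9."""
    digits = [leading_digit(v) for v in values if v != 0]
    counts = Counter(digits)
    return {d: counts[d] / len(digits) for d in range(1, 10)}


def mean_abs_deviation(values) -> float:
    """Average gap between observed and Benford shares (0 means a perfect match)."""
    expected = benford_expected()
    observed = observed_shares(values)
    return sum(abs(observed[d] - expected[d]) for d in range(1, 10)) / 9


# Illustration only: powers of two, not anyone's app data.
powers_of_two = [2 ** n for n in range(1, 1001)]
print(round(observed_shares(powers_of_two)[1], 3), round(benford_expected()[1], 3))
```

A table whose leading 1s fall far short of roughly 30 percent produces a larger mean absolute deviation. That is a flag for a closer look, not proof that anything is wrong.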

A person looking at these data will probably think “number of users.” Nope, number of events recorded by Sensor Tower. Some of the vendors can force or inject AI into a corporate, governmental, or individual user stream. Some “events” may be triggered by workflows that use multiple AI systems. There are probably a few people with too much time and no money sense paying for multiple services and using them to explore a single topic or area of inquiry; for example, what is the psychological makeup of a GenX MBA who presents data that can be misinterpreted?

Plus, the AI systems are functionally different and probably not comparable using “event” data. For example, Copilot may reflect events in corporate document editing. The Google can slam AI into any of its multi-billion user, system, or partner activities. I am not sure about Claude (Anthropic) or Grok. What about Amazon? Nowhere to be found I assume. The Chinese LLMs? Nope. Mistral? Crickets.

Finally, should I raise the question of demographics? Ah, you say, “No.” Okay, I am easy. Forget demos; there aren’t any.

Please, check out the cited article. I want to wrap up by quoting one passage from the TechCrunch write up:

Gemini is also increasing its share of the overall AI chatbot market when compared across all top apps like ChatGPT, Copilot, Claude, Perplexity, and Grok. Over the past seven months (May-November 2025), Gemini increased its share of global monthly active users by three percentage points, the firm estimates.

This sounds like Sensor Tower talking.

Net net: I am not confident in GenX “event” data, which seem to say, “ChatGPT is losing the AI race.” I may agree in part with this sentiment, but the data from Sensor Tower do not influence me. But marketing is marketing.

Stephen E Arnold, December 8, 2025

Cloudflare: Data without Context Are Semi-Helpful for PR

December 5, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Every once in a while, Cloudflare catches my attention. One example is today (December 5, 2025). My little clumsy feedreader binged and told me it was dead. Okay, no big deal. I poked around and the Internet itself seemed to be dead. I went to the gym and upon my return, I checked and the Internet was alive. A bit of poking around revealed that the information in “Cloudflare Down: Canva to Valorant to Shopify, Complete List of Services Affected by Cloudflare Outage” was accurate. Yep, Cloudflare, PR campaigner, and gateway to some of the datasphere seemed to be having a hiccup.

So what did today’s adventure spark in my dinobaby brain? Memories. Cloudflare was down again. November, December, and maybe the New Year will deliver another outage.

Let’s shift to another facet of Cloudflare.

When I was working on my handouts for my Telegram lecture, my team and I discovered comments that Pavel Durov was a customer. Then one of my Zoom talks failed because Cloudflare’s system failed. When Cloudflare struggled to its very capable and very fragile feet, I noted a link to “Cloudflare Has Blocked 416 Billion AI Bot Requests Since July 1.” Cloudflare appears to be on a media campaign to underscore that it, like Amazon, can take out a substantial chunk of the Internet while doing its level best to be a good service provider. Amusing idea: The Wired Magazine article coincides with Cloudflare stubbing its extremely comely and fragile toe.

Centralization for decentralized services means just one thing to me: A toll road with some profit pumping efficiencies guiding its repairs. Somebody pays for the concentration of what I call facilitating services. Even bulletproof hosting services have to use digital nodes or junction boxes like Cloudflare. Why? Many allow a person with a valid credit card to sign up for self-managed virtual servers. With these technical marvels, knowing what a customer is doing is work, hard work.

The numbers amaze the onlookers. Thanks, Venice.ai. Good enough.

When in Romania, I learned that a big service provider allows a customer with a credit card to use the service provider’s infrastructure and spin up and operate virtual gizmos. I heard from a person (anonymous person, of course), “We know some general things, but we don’t know what’s really going on in those virtual containers and servers.” The approach implemented by some service providers suggested that modern service providers build opacity into their architecture. That’s no big deal for me, but some folks do want to know a bit more than “Dude, we don’t know. We don’t want to know.”

That’s what interested me in the cited article. I don’t know about blocking bots. Is bot recognition 100 percent accurate? I have case examples of bots fooling business professionals into downloading malware. After 18 months of work on my Telegram project, my team and I can say with confidence, “In the Telegram systems, we don’t know how many bots are running each day. Furthermore, we don’t know how many Telegram bots have been coded in the last decade. It is difficult to know if an eGame is a game or a smart bot enhanced experience designed to hook kids on gambling and crypto.” Most people don’t know this important factoid. But Cloudflare, if the information in the Wired article is accurate, knows exactly how many AI bot requests have been blocked since July 1. That’s interesting for a company that has taken down the Internet this morning. How can a firm know one thing and not know it has a systemic failure? A person on Reddit.com noted, “Call it Clownflare.”

But the paragraph from the Wired article that I shall quote is particularly fascinating:

Prince cites stats that Cloudflare has not previously shared publicly about how much more of the internet Google can see compared to other companies like OpenAI and Anthropic or even Meta and Microsoft. Prince says Cloudflare found that Google currently sees 3.2 times more pages on the internet than OpenAI, 4.6 times more than Microsoft, and 4.8 times more than Anthropic or Meta does. Put simply, “they have this incredibly privileged access,” Prince says.

Several observations:

- What does “Google can see” actually mean? Is Google indexing content not available to other crawlers?

- The 4.6 figure is equally intriguing. Does it mean that Google has access to more than four times as many publicly accessible Web pages as some other firms? None of the Web indexing outfits put “date last visited” or any time metadata on a result. That’s an indication that the “indexing” is a managed function designed for purposes other than a user’s need to know if the data are fresh.

- The numbers for Microsoft are equally interesting. Based on what I learned when speaking with some Softies, at one time Bing’s results were matched to Google’s results. The idea was that reachable Web sites not deemed important were not on the Bing must-crawl list. Maybe Bing has changed? Microsoft is now in a relationship with Sam AI-Man and OpenAI. Does that help the Softies?

- The cited paragraph points out that Google sees 3.2 times as many pages as OpenAI. However, spot checks in ChatGPT 5.1 on December 5, 2025, showed that OpenAI cited more current information than Gemini 3. Maybe my prompts were flawed? Maybe the Cloudflare numbers reflect something different from index and training or wrapper software freshness? Is there more to useful results than raw numbers?

- And what about the laggards? Anthropic and Meta are definitely behind the Google. Is this a surprise? For Meta, no. Llama is not exactly a go-to solution. Even Pavel Durov chose a Chinese large language model over Llama. But Anthropic? Wow, dead last. Given Anthropic’s relationship with its Web indexing partners, I was surprised. I ask, “What are those partners sharing with Anthropic besides money?”
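Turning the quoted ratios around may help with the “four times” question. A minimal Python sketch, assuming each figure is a simple ratio of page counts (Google’s count divided by the other firm’s), which the Wired paragraph does not spell out, expresses each firm’s coverage as a percent of Google’s:

```python
# Ratios as quoted in the Wired article: pages Google "sees" divided by the
# pages the other firm "sees". Treating them as plain page-count ratios is an
# assumption; the source does not define what "sees" means.
RATIOS = {"OpenAI": 3.2, "Microsoft": 4.6, "Anthropic": 4.8, "Meta": 4.8}


def share_of_google(ratio: float) -> float:
    """The other firm's page count as a percent of Google's, under that assumption."""
    return 100 / ratio


for firm, ratio in RATIOS.items():
    print(f"{firm}: about {share_of_google(ratio):.1f}% of Google's page count")
```

On that reading, OpenAI sees roughly 31 percent of what Google sees, Microsoft roughly 22 percent, and Anthropic and Meta roughly 21 percent. The arithmetic does not answer the harder question of what “see” means.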

Net net: These Cloudflare data statements strike me as information floating context-free in dataspace. It’s too bad Wired Magazine did not ask more questions about Prince’s data assertions. But it is 2025, and content marketing, alleged and unverifiable facts, and a rah rah message are more important than providing context and answering more pointed questions. But I am a dinobaby. What do I know?

Stephen E Arnold, December 5, 2025

LLMs and Creativity: Definitely Not Einstein

November 25, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

I have a vague recollection of a very large lecture room with stadium seating. I think I was at the University of Illinois when I was a high school junior. Part of the odd ball program in which I found myself involved a crash course in psychology. I came away from that class with an idea that has lingered in my mind for lo these many decades; to wit: People who are into psychology are often wacky. Consequently I don’t read too much from this esteemed field of study. (I do have some snappy anecdotes about my consulting projects for a psychology magazine, but let’s move on.)

A semi-creative human explains to his robot that he makes up answers and is not creative in a helpful way. Thanks, Venice.ai. Good enough, and I see you are retiring models, including your default. Interesting.

I read in PsyPost this article: “A Mathematical Ceiling Limits Generative AI to Amateur-Level Creativity.” The main idea is that the current approach to smart software does not just output answers that are dead wrong; the algorithms themselves run into a creative wall.

Here’s the alleged reason:

The investigation revealed a fundamental trade-off embedded in the architecture of large language models. For an AI response to be effective, the model must select words that have a high probability of fitting the context. For instance, if the prompt is “The cat sat on the…”, the word “mat” is a highly effective completion because it makes sense and is grammatically correct. However, because “mat” is the most statistically probable ending, it is also the least novel. It is entirely expected. Conversely, if the model were to select a word with a very low probability to increase novelty, the effectiveness would drop. Completing the sentence with “red wrench” or “growling cloud” would be highly unexpected and therefore novel, but it would likely be nonsensical and ineffective. Cropley determined that within the closed system of a large language model, novelty and effectiveness function as inversely related variables. As the system strives to be more effective by choosing probable words, it automatically becomes less novel.

Let me take a whack at translating this quote from PsyPost: LLMs like Google-type systems have to decide. [a] Be effective and pick words that fit the context well, like “jelly” after “I ate peanut butter and jelly.” Or, [b] The LLM selects infrequent and unexpected words for novelty. This may lead to LLM wackiness. Therefore, effectiveness and novelty work against each other—more of one means less of the other.
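The trade-off can be sketched in a few lines of Python. A language model turns raw scores for candidate next words into probabilities with a softmax. The “temperature” setting, a common sampling knob, flattens or sharpens those probabilities. A word’s surprisal, -log2(p), measures how unexpected it is. The scores below are made up. The creativity measure p × (1 − p), with effectiveness taken as p and novelty as 1 − p, is a toy assumption for illustration and not necessarily the formula in the paper PsyPost describes.

```python
import math


def softmax(scores: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Turn raw next-word scores into probabilities; higher temperature flattens them."""
    top = max(scores.values())  # subtract the max for numerical stability
    weights = {w: math.exp((s - top) / temperature) for w, s in scores.items()}
    total = sum(weights.values())
    return {w: x / total for w, x in weights.items()}


def surprisal_bits(p: float) -> float:
    """How unexpected a word with probability p is: rarer words score higher."""
    return -math.log2(p)


def toy_creativity(p: float) -> float:
    """Toy assumption: effectiveness = p, novelty = 1 - p, creativity = their product."""
    return p * (1 - p)


# Hypothetical scores for completing "The cat sat on the ..."
SCORES = {"mat": 5.0, "floor": 3.5, "sofa": 3.0, "red wrench": -1.0, "growling cloud": -2.0}

for t in (0.5, 1.0, 2.0):
    probs = softmax(SCORES, t)
    best = max(probs, key=probs.get)
    print(t, best, round(probs[best], 3), round(surprisal_bits(probs[best]), 2))

# The toy product peaks at p = 0.5, where it equals 0.25.
print(max(toy_creativity(k / 100) for k in range(101)))
```

At every temperature the likeliest word, “mat,” has the lowest surprisal. Raising the temperature makes “red wrench” more likely, which buys novelty at the cost of sense. Under this toy model no choice of probability pushes the product above 0.25.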

The article references some fancy math and points out:

This comparison suggests that while generative AI can convincingly replicate the work of an average person, it is unable to reach the levels of expert writers, artists, or innovators. The study cites empirical evidence from other researchers showing that AI-generated stories and solutions consistently rank in the 40th to 50th percentile compared to human outputs. These real-world tests support the theoretical conclusion that AI cannot currently bridge the gap to elite [creative] performance.

Before you put your life savings into a giant can’t-lose AI data center investment, you might want to ponder this passage in the PsyPost article:

“For AI to reach expert-level creativity, it would require new architecture capable of generating ideas not tied to past statistical patterns … Until such a paradigm shift occurs in computer science, the evidence indicates that human beings remain the sole source of high-level creativity.”

Several observations:

- Today’s best-bet approach is the Google-type LLM. It has creative limits as well as the problems of selling advertising like old-fashioned Google search and outputting incorrect answers.

- The method itself erects a creative barrier. This is good for humans who can be creative when they are not doom scrolling.

- A paradigm shift could make those giant data centers extremely large white elephants which lenders are not very good at herding along.

Net net: I liked the angle of the article. I am not convinced I should drop my teen impression of psychology. I am a dinobaby, and I like land line phones with rotary dials.

Stephen E Arnold, November 26, 2025

AI Content: Most People Will Just Accept It and Some May Love It or Hum Along

November 18, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

The trust outfit Thomson Reuters summarized a survey as real news. The write up sports the title “Are You Listening to Bots? Survey Shows AI Music Is Virtually Undetectable?” Truth be told, I wanted the magic power to change the headline to “Are You Reading News? Survey Shows AI Content Is Virtually Undetectable.” I have no magic powers, but I think the headline I just made up is going to appear in the near future.

Elvis in heaven looks down on a college dance party and realizes that he has been replaced by a robot. Thanks, Venice.ai. Wow, your outputs are deteriorating in my opinion.

What does the trust outfit report about a survey? I learned:

A staggering 97% of listeners cannot distinguish between artificial intelligence-generated and human-composed songs, a Deezer–Ipsos survey showed on Wednesday, underscoring growing concerns that AI could upend how music is created, consumed and monetized. The findings of the survey, for which Ipsos polled 9,000 participants across eight countries, including the U.S., Britain and France, highlight rising ethical concerns in the music industry as AI tools capable of generating songs raise copyright concerns and threaten the livelihoods of artists.

I won’t trot out my questions about sample selection, demographics, and methodology. Let’s just roll with what the “trust” outfit presents as “real” news.

I noted this series of factoids:

- “73% of respondents supported disclosure when AI-generated tracks are recommended”

- “45% sought filtering options”

- “40% said they would skip AI-generated songs entirely.”

- Around “71% expressed surprise at their inability to distinguish between human-made and synthetic tracks.”

Isn’t that last dot point the major finding? More than two thirds were surprised to learn they cannot differentiate synthesized, digitized music from humanoid performers.

The study means that those who have access to smart software and whatever music generation prompt expertise is required can bang out chart toppers. Whip up some synthetic video and go on tour. Years ago I watched a recreation of Elvis Presley. Judging from the audience reaction, no one had any problem doing the willing suspension of disbelief. No opium required at that event. It was the illusion of the King, not the fried banana version of him that energized the crowd.

My hunch is that AI generated performances will become a very big thing. I am assuming that the power required to make the models work is available. One of my team told me that “Walk My Walk” by Breaking Rust hit the Billboard charts.

The future is clear. First, customer support staff get to find their future elsewhere. Now the kind hearted music industry leadership will press the delete button on annoying humanoid performers.

My big takeaway from the “real” news story is that most people won’t care or know. Put down that violin and get a digital audio workstation. Did you know Mozart got in trouble when he was young for writing math and music on the walls in his home? Now he can stay in his room and play with his Mac Mini computer.

Stephen E Arnold, November 18, 2025

News Flash: Young Workers Are Not Happy. Who Knew?

August 12, 2025

No AI. Just a dinobaby being a dinobaby.

My newsfeed service pointed me to an academic paper in mid-July 2025. I am just catching up, and I thought I would document this write-up, “Rising Young Worker Despair in the United States,” from big thinkers at Dartmouth College and University College London.

The write-up is unlikely to become a must-read for recent college graduates or youthful people vaporized from their employers’ payroll. The main point is that the work processes of hiring and plugging away are driving people crazy.

The authors point out this revelation:

In this paper we have confirmed that the mental health of the young in the United States has worsened rapidly over the last decade, as reported in multiple datasets. The deterioration in mental health is particularly acute among young women…. [T]he relative prices of housing and childcare have risen. Student debt is high and expensive. The health of young adults has also deteriorated, as seen in increases in social isolation and obesity. Suicide rates of the young are rising. Moreover, Jean Twenge provides evidence that the work ethic itself among the young has plummeted. Some have even suggested the young are unhappy having BS jobs.

Several points jumped out at me from the 38-page paper:

- The only reference to smart software or AI was in the word “despair”. This word appears 78 times in the document.

- Social media gets a few nods with eight references in the main paper and again in the endnotes. Isn’t social media a significant factor? My question is, “What’s the connection between social media and the mental states of the sample?”

- YouTube is chock full of first-person accounts of job despair. A good example is Dari Step’s video “This Job Hunt Is Breaking Me and Even California Can’t Fix It Though It Tries.” One can feel the inner turmoil of this person. The video runs 23 minutes, and you can find it (as of August 4, 2025) at this link: https://www.youtube.com/watch?v=SxPbluOvNs8&t=187s&pp=ygUNZGVtaSBqb2IgaHVudA%3D%3D. A “study” is one thing with numbers and references to hump curves. A first-person approach adds a bit of sizzle in my opinion.

A few observations seem warranted:

- The US social system is cranking out people who are likely to be challenging for managers. I am not sure the get-tough approach based on data-centric performance methods will be productive over time.

- Whatever is happening in “education” is not preparing young people and recent graduates to support themselves with old-fashioned jobs. Maybe most of these people will become AI entrepreneurs, but I have some doubts about success rates.

- Will the National Bureau of Economic Research pick up the slack for the disarray that seems to be swirling through the Bureau of Labor Statistics as I write this on August 4, 2025?

Stephen E Arnold, August 12, 2025

Win Big at the Stock Market: AI Can Predict What Humans Will Do

July 10, 2025

No smart software to write this essay. This dinobaby is somewhat old fashioned.


AI is hot. Click bait is hotter. And the hottest is AI figuring out what humans will do “next.” Think stock picking. Think pitching a company “known” to buy what you are selling. The applications of predictive smart software make intelligence professionals gaming the moves of an adversary quiver with joy.

“New ‘Mind-Reading’ AI Predicts What Humans Will Do Next, And It’s Shockingly Accurate” explains:

Researchers have developed an AI called Centaur that accurately predicts human behavior across virtually any psychological experiment. It even outperforms the specialized computer models scientists have been using for decades. Trained on data from more than 60,000 people making over 10 million decisions, Centaur captures the underlying patterns of how we think, learn, and make choices.

Since I believe everything I read on the Internet, smart software definitely can pull off this trick.

How does this work?

Rather than building from scratch, researchers took Meta’s Llama 3.1 language model (the same type powering ChatGPT) and gave it specialized training on human behavior. They used a technique that allows them to modify only a tiny fraction of the AI’s programming while keeping most of it unchanged. The entire training process took only five days on a high-end computer processor.

Hmmm. The Zuck’s smart software. Isn’t Meta in the midst of playing catch-up? The company is believed to be hiring OpenAI professionals and other wizards who can convert the “also in the race” to “winner” more quickly than one can say “billions of dollars spent on virtual reality.”

Centaur does not just predict what a humanoid or a dinobaby will do. The write-up reports:

In a surprising discovery, Centaur’s internal workings had become more aligned with human brain activity, even though it was never explicitly trained to match neural data. When researchers compared the AI’s internal states to brain scans of people performing the same tasks, they found stronger correlations than with the original, untrained model. Learning to predict human behavior apparently forced the AI to develop internal representations that mirror how our brains actually process information. The AI essentially reverse-engineered aspects of human cognition just by studying our choices. The team also demonstrated how Centaur could accelerate scientific discovery.

I am sold. Imagine. These researchers will be able to make profitable investments, know when to take an alternate path to a popular tourist attraction, and discover a drug that will cure male pattern baldness. Amazing.

My hunch is that predictive analytics hooked up to a semi-hallucinating large language model can produce outputs. Will these predict human behavior? Absolutely. Did the Centaur system predict that I would believe this? Absolutely. Was it hallucinating? Yep, poor Centaur.

Stephen E Arnold, July 10, 2025

YouTube Reveals the Popularity Winners

June 6, 2025

No AI, just a dinobaby and his itty bitty computer.


Another big technology outfit reports what is popular on its own distribution system. The trusted outfit knows that it controls the information flow for many Googlers. Google pulls the strings.

When I read “Weekly Top Podcast Shows,” I asked myself, “Are these data audited?” And, “Do these data match up to what Google actually pays the people who make these programs?”

I was not the only person asking questions about the much-loved, alleged monopoly. The estimable New York Times wondered about some programs missing from the Top 100 videos (podcasts) on Google’s YouTube. Mediaite pointed out:

The rankings, based on U.S. watch time, will update every Wednesday and exclude shorts, clips and any content not tagged as a podcast by creators.

My reaction to the listing is that Google wants to make darned sure that it controls the information flow about what is getting views on its platform. Presumably some non-dinobaby will compare the popularity listings to other lists, possibly Apple’s misfiring list. Maybe an enthusiast will scrape the “popular” listings on the independent podcast players. Perhaps a research firm will figure out how to capture views like the now-archaic logs favored decades ago by certain research firms.

Several observations:

- Google owns the platform. Google controls the data. Google controls what’s left up and what’s taken down. Google is not known for making its click data just a click away. Therefore, the listing is an example of information control and shaping.

- Advertisers, take note. Now you can purchase air time on the programs that matter.

- Creators who become dependent on YouTube for revenue are slowly being herded into the 21st century’s version of the Hollywood business model from the 1940s. A failure to conform means that the money stream could be reduced or just cut off. That will keep the sheep together in my opinion.

- As search morphs, Google is putting on its thinking cap in order to find ways to keep that revenue stream healthy and hopefully growing.

But I trust Google, don’t you? Joe Rogan does.

Stephen E Arnold, June 6, 2025