VoIP in Russia, Nyet. Telegram Voice, Nyet. Just Not Yet

December 24, 2024

Written by a dinobaby, not an over-achieving, unexplainable AI system.

PCNews.ru in everyone’s favorite special operations center reported that Roskomnadzor (a blend of the FBC and a couple of US three letter agencies) has a New Year’s surprise coming. (Xmas in Russia is often celebrated on January 7.) The short write up reported to me in English via the still semi reliable Google Translate that calls within “messenger apps” are often fraudulent. I am not sure this is a correct rendering of the Russian word. One of my colleagues suggested that this is a way to say, “Easily intercepted and logged by Roskomnadzor professionals.”

Among the main points in the article are:

- The target is voice traffic not routed via Roskomnadzor

- Communication operators — that is, Internet providers, data centers, etc. — are likely to be required to block such traffic with endpoints in Russia

- As part of the “blocks,” Roskomnadzor wants to identify or have identified for the entity such functions as “identifying illegal call centers (including those using SIM boxes) on their networks.”

The purpose is to protect Russian “consumers.” The source cited above included an interesting factoid. YouTube traffic, which has been subject to “blocks” has experienced a decrease in traffic of 80 percent.

Not bad but a 20 percent flow illustrates that Roskomnadzor has been unable to achieve its total ban. I wonder if this 80 percent is “good enough” for Roskomnadzor and for the fearless head of state who dictates in Russia.

Stephen E Arnold, December 24, 2024

Petrogenesis Means New Life for Xerox and Lexmark

December 24, 2024

Written by a dinobaby, not an over-achieving, unexplainable AI system.

I read about Xerox’s bold move to purchase Lexmark. I live in Kentucky, and some remember the days when IBM sought to create a brand called “Lexmark.” One of its Kentucky-proud units was located in Lexington, Kentucky. Unfortunately printers performed in market competitions about as well as most of the horses bred in Kentucky to win big money in races. Lexmark stumbled, but was not put down. Wikipedia has an amusing summary of the trajectory of Lexmark, originally part of IBM. That’s when the fun began.

The process of fossilmorphism begins. Will the progeny become King of the Printing Jungle? Does this young velociraptor find the snorting beasts amusing or the source of a consulting snack? Thanks, You.com. Good enough.

Xerox, on the other hand, is another Rochester, New York, business case study. (The other is Kodak, of digital camera fame.) Again Wikipedia has a good basic description without the color of the company’s most interesting missteps. (Did you know that one of Xerox’s most successful sales professionals was a fisherman? That’s it. Boat to big bucks without any of the Fancy Dan stuff in college.) Oh, Xerox made printers, and I was a dinobaby who worked on a printer that ingested scanned images or typed pages at one end and spit out collated paper copies at the other. Yep, a printer.

What has caused this dinobaby to stumble down memory lane? I read “Xerox to Acquire Lexmark.” Yep, Xerox a printer outfit has acquired what was once an IBM printer outfit. I call this type of business tactic

Fossilmorphism

A coinage! Its meaning is that two fossil type companies fuse and create a new company. The idea is that the deal has “synergy” and the flows of cash from surging sales will lead to dinomorphism. This term suggests that companies only a dinobaby like me can love change and explode beyond their decades of organization and re-organization, might-have-been thinking, and the constraints of tromping around when this dinobaby was an agile velociraptor.

The deal raises an existential question: Are two dinosaurs able to mate? Will the offspring be a Darwinian win or fail? Every horse owner knows their foal is a winner. (Horse veterinarians are the big winners, according to Kentucky lore.)

Stephen E Arnold, December 24, 2024

FOGINT: TOMA Abandoning Telegram in Sharp U Turn

December 24, 2024

Observations from the FOGINT research team.

Pressure is building on Telegram’s vision for Messenger to become the hub for game crypto currency. Bitnewsbot published allegedly accurate information in “Popular Telegram Game Tomarket Ditches TON, Picks Aptos for Token Launch.” The article asserts:

Telegram-based gaming platform Tomarket announced it will launch its TOMA token on the Aptos blockchain network, abandoning initial plans to deploy on The Open Network (TON). The decision affects millions of users ahead of the December 20 token launch, marking a significant shift in the Telegram mini-app ecosystem.

One of the reasons given for the switch, according to Bitnewsbot, is the “speed and infrastructure capabilities” of Aptos’s blockchain network. The article continues:

The decision stands out as most Telegram-based cryptocurrency applications, including prominent names like Hamster Kombat and Notcoin, typically deploy on TON. The TON blockchain has seen substantial growth, currently ranking as the 16th largest cryptocurrency by market capitalization, according to CoinGecko, with a price increase of approximately 190% over the past year.

The online information service Decrypt.io adds some additional information which suggests that the Telegram infrastructure is not as supple as the Aptos offering; specifically:

Tomarket has handed out allocations of tokens across multiple airdrop waves, but players have been unable to withdraw or trade the token. The app’s developers previously said that the TOMA token was generated, but clarified afterwards that the term was used to describe token allocations within the app. And now, Tomarket won’t ultimately deploy to TON.

Decrypt.io reports:

Tomarket isn’t the first game to choose an alternative path, however: tap-to-earn combat game MemeFi recently launched its token on Sui, after pivoting from its original chain of Ethereum layer-2 network Linea.

The FOGINT team thinks that this abrupt change of direction by Tomarket may increase the pressure on Telegram at a time the organization is trying to wriggle free from the French red tape ensnaring Pavel Durov. Mr. Durov is on a legal tightrope. Defections like Tomarket’s may spark some unpredictable actions by the Telegram collection of “organizations.”

Stephen E Arnold, December 24, 2024

Agentic Babies for 2025?

December 24, 2024

Are the days of large language models numbered? Yes, according to the CEO and co-founder of Salesforce. Finance site Benzinga shares, “Marc Benioff Says Future of AI Not in Bots Like ChatGPT But In Autonomous Agents.” Writer Ananya Gairola points to a recent Wall Street Journal podcast in which Benioff shared his thoughts:

“He stated that the next phase of AI development will focus on autonomous agents, which can perform tasks independently, rather than relying on LLMs to drive advancements. He argued that while AI tools like ChatGPT have received significant attention, the real potential lies in agents. ‘Has the AI taken over? No. Has AI cured cancer? No. Is AI curing climate change? No. So we have to keep things in perspective here,’ he stated. Salesforce provides both prebuilt and customizable AI agents for businesses looking to automate customer service functions. ‘But we are not at that moment that we’ve seen in these crazy movies — and maybe we will be one day, but that is not where we are today,’ Benioff stated during the podcast.”

Someday, he says. But it would seem the race is on. Gairola notes OpenAI is poised to launch its own autonomous AI agent in January. Will that company dominate the autonomous AI field, as it has with generative AI? Will the new bots come equipped with bias and hallucinations? Stay tuned.

Cynthia Murrell, December 24, 2024

FOGINT: Telegram Gets Some Lipstick to Put on a Very Dangerous Pig

December 23, 2024

Information from the FOGINT research team.

We noted the New York Times article “Under Pressure, Telegram Turns a Profit for the First Time.” The write up reported on December 23, 2024:

Now Telegram is out to show it has found its financial footing so it can move past its legal and regulatory woes, stay independent and eventually hold an initial public offering. It has expanded its content moderation efforts, with more than 750 contractors who police content. It has introduced advertising, subscriptions and video services. And it has used cryptocurrency to pay down its debt and shore up its finances. The result: Telegram is set to be profitable this year for the first time, according to a person with knowledge of the finances who declined to be identified discussing internal figures. Revenue is on track to surpass $1 billion, up from nearly $350 million last year, the person said. Telegram also has about $500 million in cash reserves, not including crypto assets.

The FOGINT team’s viewpoint is different.

- Telegram took profit on its crypto holdings and pumped that money into its financials. Like magic, Telegram will be profitable.

- The arrest of Mr. Durov has forced the company’s hand, and it is moving forward at warp speed to become the hub for a specific category of crypto transactions.

- The French have thrown a monkey wrench into Telegram’s and its associated organizations’ plans for 2025. The manic push to train developers to create click-to-earn games, use the Telegram smart contracts, and ink deals with some very interesting partners illustrates that 2025 may be a turning point in the organizations’ business practices.

The French are moving at the speed of a finely tuned bureaucracy, and it is unlikely that Mr. Durov will shake free of the pressure to deliver names, mobile numbers, and messages of individuals and groups of interest to French authorities.

The New York Times write up references profitability. There are more gears engaging than putting lipstick on a financial report. A cornered Pavel Durov can be a dangerous 40 year old with money, links to interesting countries, and a desire to create an alternative to the traditional and regulated financial system.

Stephen E Arnold, December 23, 2024

AI Makes Stuff Up and Lies. This Is New Information?

December 23, 2024

The blog post is the work of a dinobaby, not AI.

I spotted “Alignment Faking in Large Language Models.” My initial reaction was, “This is new information?” and “Have the authors forgotten about hallucination?” The original article from Anthropic sparked another essay. This one appeared in Time Magazine (online version). Time’s article was titled “Exclusive: New Research Shows AI Strategically Lying.” I like the “strategically lying,” which implies that there is some intent behind the prevarication. Since smart software reflects its developers’ use of fancy math and the numerous knobs and levers those developers can adjust at the same time the model is gobbling up information and “learning,” the notion of “strategically lying” struck me as interesting.

Thanks MidJourney. Good enough.

What strategy is implemented? Who thought up the strategy? Is the strategy working? were the questions which occurred to me. The Time essay said:

experiments jointly carried out by the AI company Anthropic and the nonprofit Redwood Research, shows a version of Anthropic’s model, Claude, strategically misleading its creators during the training process in order to avoid being modified.

This suggests that the people assembling the algorithms and training data, configuring the system, twiddling the administrative settings, and doing technical manipulations were not imposing a strategy. The smart software was cooking up a strategy. Who will say that the software is alive and then, like the former Google engineer, express a belief that the system is sentient? It’s sci-fi time I suppose.

The write up pointed out:

Researchers also found evidence that suggests the capacity of AIs to deceive their human creators increases as they become more powerful.

That is an interesting idea. Pumping more compute and data into a model gives it a greater capacity to manipulate its outputs to fool humans who are eager to grab something that promises to make life easier and the user smarter. If data about the US education system’s efficacy are accurate, Americans are not doing too well in the reading, writing, and arithmetic departments. Therefore, discerning strategic lies might be difficult.

The essay concluded:

What Anthropic’s experiments seem to show is that reinforcement learning is insufficient as a technique for creating reliably safe models, especially as those models get more advanced. Which is a big problem, because it’s the most effective and widely-used alignment technique that we currently have.

What’s this “seem”? Large language models using transformer methods crafted by Google output baloney some of the time. Google itself had to regroup after the “glue cheese to pizza” suggestion.

Several observations:

- Smart software has become the technology more important than any other. The problem is that its outputs are often wonky and now the systems are befuddling the wizards who created and operate them. What if AI is like a carnival ride that routinely injures those looking for kicks?

- AI is finding its way into many applications but the resulting revenue has frayed some investors’ nerves. The fix is to go faster and win to reach the revenue goal. This frenzy for payoff has been building since early 2024 but those costs remain brutally high.

- The behavior of large language models is not understood by some of their developers. Does this seem like a problem?

Net net: “Seem?” One lies or one does not.

Stephen E Arnold, December 23, 2024

Microsoft Grouses and Barks, Then Regrouses and Rebarks about the Google

December 23, 2024

This blog post is the work of an authentic dinobaby. No smart software was used.

I spotted a reference to Windows Central, a very supportive yet “independent” explainer of Microsoft. That write up bounced around and a version ended up in Analytics India, an online publication from a country familiar to the Big Dogs at Microsoft and Google.

A stern mother tells her child to knock off the constant replays of a single dorky tune like “If I Knew You Were Comin’ I’d’ve Baked a Cake.” Thanks, Grok. Good enough.

The Analytics India story is titled “Google Makes More Money on Windows Than Microsoft, says Satya Nadella.” Let’s look at a couple of passages from the write up and then reflect on the “grousing” both giants in the money making department are sharing with anyone, maybe everyone.

Here’s the first snippet:

“Google makes more money on Windows than all of Microsoft,” Nadella said, discussing the company’s strategy to reclaim lost market share in the browser space.

I love that “lost market share.” Did Microsoft have market share in the browser space? Like Windows Phone, the Microsoft search engine in its many incarnations was not a click magnet. I heard when I did a teeny tiny thing for a Microsoft “specialist” outfit that Softies were running queries on Google and then reverse engineering what to index and what knobs to turn in order to replicate what Google’s massively wonderful method produced. True or false? Hey, I only know what I was told. Whatever Microsoft did in search failed. (How about that Fast Search & Transfer technology which powered alltheweb.com when it existed?)

I circled this statement as well:

Looking ahead, Nadella expressed confidence in Microsoft’s efforts to regain browser market share and promote its AI tools. “We get to relitigate,” he said, pointing to the opportunity to win back market share. “This is the best news for Microsoft shareholders—that we lost so badly that we can now go contest it and win back some share,” he said.

Ah, ha. “Lost so badly.” What an interesting word “relitigate.” Huh? And the grouse replay “win back market share.” What market share? Despite the ubiquity of the outstandingly wonderful Windows operating system and its baked in browser and button fest for Bing, exactly what is the market share?

Google is chugging along with about 90 percent Web search market share. Microsoft is nipping at Google’s heels with a robust four percent. Yandex is about two percent. The more likely scenario is that Yandex could under its new ownership knock Microsoft out of second place. Google isn’t going anywhere fast because the company is wrapped around finding information like Cristiano Ronaldo holding one of his trophies.

What’s interesting about the Analytics India write up is what is not included in the article. For example:

- The cultural similarities of the two Big Dogs. The competition has the impact of a couple of high schoolers arguing in the cafeteria

- The lack of critical commentary about the glittering generalities the Microsoft Big Dog barks and rebarks like an annoyed French bulldog

- A total lack of interest in the fact that both companies are monopolies and that neither exists to benefit anyone other than those who hold shares in the respective companies. As long as there is money, search market share is nothing more than a money stream.

Will smart software improve the situation?

No. But the grouse and re-grouse approach to business tactics will be a very versatile rhetorical argument.

Stephen E Arnold, December 23, 2024

Telegram: Pressure Mounting on the TON Entities

December 23, 2024

Observations from the Telegram research team.

Two apparently unrelated news items provide tantalizing hints about what Telegram will do in 2025. The phrase “cornered animal” is typically not used to describe a 40 year old person with a lot of money, a large technical company, and allegedly more than 100 children. The FOGINT team thinks that the two word phrase may apply to Pavel Durov, the founder of VKontakte and Telegram.

Mr. Durov has been under the control of the French judiciary since August 2024. French legal proceedings can be slow and painstaking. Via his attorneys’ interaction with the French authorities and his own one-on-one discussions, Mr. Durov has indicated that he wants to cooperate with investigators in matters deemed to be illegal.

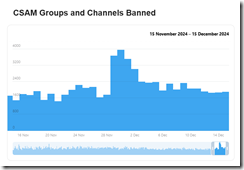

How has Telegram changed in the past three months? One excellent run down of Telegram’s behavior appeared in the “report” published by Telegram. “Telegram Moderation Overview” provides some detail about Telegram’s “cooperation” in some law enforcement activities. The cited report covers Telegram’s view of banning CSAM, some terrorist communities (the term is, however, not defined by the Telegram author), and some general information about how many groups and channels have been blocked.

What’s notable is that the graphics in the write up illustrate that Telegram has been a consistent upholder of the “law” for a month:

A second item of information illustrates that despite the Telegram crypto push, resistance remains. Navigate to the “2024 Crypto Developer Report.” One interesting observation about Telegram and its “foundation’s” TONcoin is that it is not listed. Solana seems to be perking along. However, the TON developer outreach program lacks the magnitude to bump the needle in these data published by Electric Capital.

What happens if one views these two loosely related pieces of information together? Here are some ideas generated by the FOGINT team:

- Mr. Durov is not a “free” person, and he is making an effort to say, “Hey, we cooperate with law enforcement.”

- The November 2024 Telegram Foundation Gateway Conference was like a light show at a French chateau in the summer. There was what looked like big progress in partnerships, developer uptake, and financial performance. However, the Electric Capital report did not pay much attention.

- The TONcoin seems to be chugging along without much value movement. Hamsters in “kombat” may be excited. Actual uptake seems flat among developers in the Electric Capital sample.

Net net: Telegram is in a vulnerable position. Will Mr. Durov perceive himself and his next big thing as in jeopardy? If so, he may become more problematic in 2025. A cornered ferret can be quite a dangerous beast.

Stephen E Arnold, December 23, 2024

Thales CortAIx (Get It?) and Smart Drones

December 23, 2024

Countries are investing in AI to amp up their militaries, including naval forces. Aviation Defense Universe explores how one tech company is shaping the future of maritime strategy and defense: “From Drone Swarms To Cybersecurity: Thales’ Strategic AI Innovations Unveiled.” Euronaval is one of the world’s largest naval defense exhibitions, and CortAIx Labs at Thales shared its innovations in AI-powered technology there.

Christophe Meyer is the CTO of CortAIx Labs at Thales and he was interviewed for the above article. He spoke about the developments, innovations, and challenges his company faces with AI integration in maritime and military systems. He explained that Thales has three main AI divisions. He leads the R&D department with 150 experts who are developing ways to implement AI in system architectures and cybersecurity. The CortAIx Labs Factory has around 100 people who are working to accelerate AI integration into product lines. CortAIx Lab Sensors has 400 workers integrating AI algorithms into equipment such as actuators and sensors.

At Euronaval, Thales, Meyer’s company, demonstrated how AI plays a crucial role in information processing. AI is used in radar operations and highlights important information from the sensors. AI algorithms are also used in electronic warfare to enhance an operator’s situation awareness and point out information that needs attention.

Drones are also a new technology Thales is exploring. Meyer said:

“Swarm drones represent a significant leap in autonomous operations. The challenge lies in providing a level of autonomy to these drones, especially when communication with the operator is lost. AI helps drones in the swarm adapt, reorganize, and continue their mission even if some units are compromised. This technology is platform-agnostic, meaning it applies to aerial, maritime, and terrestrial swarms, with the underlying algorithms remaining consistent across domains.”

Drones are already being used by China and Dubai for aerial shows. They form pictures in the night sky and are amazing to watch. Ukraine and Russia are busy droning one another. Exciting.

Whitney Grace, December 23, 2024

Google AI Videos: Grab Your Popcorn and Kick Back

December 20, 2024

This blog post is the work of an authentic dinobaby. No smart software was used.

Google has an artificial intelligence inferiority complex. In January 2023, it found itself like a frail bathing suit clad 13 year old in the shower room filled with Los Angeles Rams. Yikes. What could the inhibited Google do? The answer has taken about two years to wend its way into Big Time PR. Nothing is an upgrade. Google is interacting with parallel universes. It is redefining quantum supremacy into supremest computer. It is trying hard not to recommend that its “users” use glue to keep cheese on its pizza.

Score one for the Grok. Good enough, but I had to try the free X.com image generator. Do you see a shivering high school student locked out of the gym on a cold and snowy day? Neither do I. Isn’t AI fabulous?

Amidst the PR bombast, Google has gathered 11 videos together under the banner of “Gemini 2.0: Our New AI Model for the Agentic Era.” What is an “era”? As I recall, it is a distinct period of history with a particular feature like online advertising charging everyone someway or another. Eras, according to some long-term thinkers, are millions of years long; for example, the Mesozoic Era consists of the Triassic, Jurassic, and Cretaceous periods. Google is definitely thinking in terms of a long, long time.

Here’s the link to the playlist: https://www.youtube.com/playlist?list=PLqYmG7hTraZD8qyQmEfXrJMpGsQKk-LCY. If video is not your bag, you can listen to Google AI podcasts at this link: https://deepmind.google/discover/the-podcast/.

Has Google neutralized the blast and fall out damage from Microsoft’s 2023 OpenAI deal announcement? I think it depends on whom one asks. The feeling of being behind the AI curve must be intense. Google invented the transformer technology. Even Microsoft’s Big Dog said that Google should have been the winner. Watch for more Google PR about Google and parallel universes and numbers too big for non Googlers to comprehend.

Somebody give that kid a towel. He’s shivering.

Stephen E Arnold, December 20, 2024