AI Bubble? What Bubble? Bubble?

December 5, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

I read “JP Morgan Report: AI Investment Surge Backed by Fundamentals, No Bubble in Sight.” The “report” angle is interesting. It implies unbiased, objective information compiled and synthesized by informed individuals. The content, however, strikes me as a bit of fancy dancing.

Here’s what strikes me as the main point:

A recent JP Morgan report finds the current rally in artificial intelligence (AI) related investments to be justified and sustainable, with no evidence of a bubble forming at this stage.

Feel better now? I don’t. The report strikes me as bank marketing with a big dose of cooing sounds. You know, cooing like a mother to her month old baby. Does the mother makes sense? Nope. The point is that warm cozy feeling that the cooing imparts. The mother knows she is doing what is necessary to reduce the likelihood of the baby making noises for sustained periods. The baby knows that mom’s heart is thudding along and the comfort speaks volumes.

Financial professionals in Manhattan enjoy the AI revolution. They know there is no bubble. I see bubbles (plural). Thanks, MidJourney. Good enough.

Sorry. The JP Morgan cooing is not working for me.

The write up says, quoting the estimable financial institution:

“The ingredients are certainly in place for a market bubble to form, but for now, at least, we believe the rally in AI-related investments is justified and sustainable. Capex is massive, and adoption is accelerating.”

What about this statement in the cited article?

JP Morgan contrasts the current AI investment environment to previous speculative cycles, noting the absence of cheap speculative capital or financial structures that artificially inflate prices. As AI investment continues, leverage may increase, but current AI spending is being driven by genuine earnings growth rather than assumptions of future returns.

After stating the “no bubble” argument three times, I think I understand.

Several observations:

- JP Morgan needed to make a statement that the AI data center thing, the depreciation issue, the power problem, and the potential for an innovation that derails the current LLM-type of processing are not big deals. These issues play no part in the non-bubble environment.

- The report is a rah rah for AI. Because there is no bubble, organizations should go forward and implement the current versions of smart software despite their proven “feature” of making up answers and failing to handle many routine human-performed tasks.

- The timing is designed to allow high net worth people a moment to reflect upon the wisdom of JP Morgan and consider moving money to the estimable financial institution for shepherding in what others think are effervescent moments.

My view: Consider the problems OpenAI has: [a] A need for something that knocks Googzilla off the sidewalk on Shoreline Drive and [b] more cash. Amazon — ever the consumer’s friend — is involved in making its own programmers use its smart software, not code cranked out by a non-Amazon service. Plus, Amazon is in the building mode, but it has allegedly government money to spend, a luxury some other firms are denied. Oracle is looking less like a world beater in databases and AI and more of a media-type outfit. Perplexity is probably perplexed because there are rumors that it may be struggling. Microsoft is facing some backlash because of its [a] push to make Copilot everyone’s friend and [b] dealing with the flawed updates to its vaunted Windows 11 software. Gee, why is FileManager not working? Let’s ask Copilot. On the other hand, let’s not.

Net net: JP Morgan is marketing too hard, and I am not sure it is resonating with me as unbiased and completely objective. As sales collateral, the report is good. As evidence there is no bubble, nope.

Stephen E Arnold, December 5, 2025

Mid Tier Consulting Firm Labels AI As a Chaos Agent.

December 5, 2025

![green-dino_thumb_thumb[3] green-dino_thumb_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/11/green-dino_thumb_thumb3_thumb-1.gif) Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

A mid tier consulting firm (Forrester) calls smart software a chaos agent. Is the company telling BAIT (big AI tech) firms not to hire them for consulting projects? I am a dinobaby. When I worked at a once big time blue chip outfit, labeling something that is easy to sell a problem was not a standard practice. But what do I know? I am a dinobaby.

The write up in the content marketing-type publication is not exactly a sales pitch. Could it be a new type of article? Perhaps it is an example of contrarianism and a desire to make sure people know that smart software is an expensive boondoggle? I noted a couple of interesting statements in “Forrester: Gen AI Is a Chaos Agent, Models Are Wrong 60% of the Time.”

Sixty percent is, even with my failing math skills, is more than half of something. I think the idea is that smart software is stupid, and it gets an F for failure. Let’s look at a couple of statements from the write up:

Forrester says, gen AI has become that predator in the hands of attackers: The one that never tires or sleeps and executes at scale. “In Jaws, the shark acts as the chaos agent,” Forrester principal analyst Allie Mellen told attendees at the IT consultancy firm’s 2025 Security and Risk Summit. “We have a chaos agent of our own today… And that chaos agent is generative AI.”

This is news?

How about this statement?

Of the many studies Mellen cited in her keynote, one of the most damning is based on research conducted by the Tow Center for Digital Journalism at Columbia University, which analyzed eight different AI models, including ChatGPT and Gemini. The researchers found that overall, models were wrong 60% of the time; their combined performance led to more failed queries than accurate ones.

I think it is fair to conclude that Forrester is not thrilled with smart software. I don’t know if the firm uses AI or just reads about AI, but its stance is crystal clear. Need proof? A Forrester wizard recycled research that says “specialized enterprise agents all showed systemic patterns of failure. Top performers completed only 24% of tasks autonomously.

Okay, that means today’s AI gets an F. How do the disappointed parents at BAIT outfits cope with Claude, Gemini, and Copilot getting sent to a specialized school? My hunch is that the leadership in BAIT firms will ignore the criticism, invest in data centers, and look for consultants not affiliated with an outfit that dumps trash at their headquarters.

Forrester trots out a solution of course. The firm does sell time and expertise. What’s interesting is that Venture Beat rolled out some truisms about smart software, including buzzwords like red team and machine speed.

Net net: AI will be wrong most of the time. AI will be used by bad actors to compromise organizations. AI gets an F; threat actors find that AI delivers a slam dunk A. Okay, which is it? I know. It’s marketing.

Stephen E Arnold, December 5, 2025

Dead Web or Web Misread?

December 5, 2025

Journalists, Internet experts, and everyone with a bit of knowledge has declared the World Wide Web dead for thirty years. The term “World Wide Web” officially died with the new millennium, but what about the Internet itself? Ernie Smith at Tedium wrote about the demise of the Web: “The Sky Is Falling, The Web Is Dead.” Smith noticed that experts stated the Web is dead many times and he decided to investigate.

He turned to another expert: George Colony, the founder of Forrester Research. Forrester Research is a premier tech and business advisory firms in the world. Smith wrote this about Colony and his company:

“But there’s one area where the company—particularly Colony—gets it wrong. And it has to do with the World Wide Web, which Colony declared “dead” or dying on numerous occasions over a 30-year period. In each case, Colony was trying to make a bigger point about where online technology was going, without giving the Web enough credit for actually being able to get there.”

Smith strolls through instances of Colony declaring the Web is dead. The first was in 1995 followed by many other declarations of the dead Web. Smith made another smart observation:

“Can you see the underlying fault with his commentary? He basically assumed that Web technology would never improve and would be replaced with something else—when what actually happened is that the Web eventually integrated everything he wanted, plus more.

Which is funny, because Forrester’s main rival, International Data Corp., essentially said this right in the piece. ‘The web is the dirt road, the basic structure,’ IDC analyst Michael Sullivan-Trainor said. ‘The concept that you can kill the Web and start from square one is ridiculous. We are talking about using the Web, evolving it.’”

The Web and Internet evolve. Technology evolves. Smith has an optimistic view that is true about the Web: “I, for one, think the Web will do what it always does: Democratize knowledge.”

Whitney Grace, December 5, 2026

Apples Misses the AI Boat Again

December 4, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Apple and Telegram have a characteristic in common. Both did not recognize the AI boomlet that began in 2020 or so. Apple was thinking about Granny scarfs that could hold an iPhone and working out ways to cope with its dependence on Chinese manufacturing. Telegram was struggling with the US legal system and trying to create a programming language that a mere human could use to code a distributed application.

Apple’s ship has sailed, and it may dock at Google’s Gemini private island or it could decide to purchase an isolated chunk of real estate and build its de-perplexing AI system at that location.

Thanks, MidJourney. Good enough.

I thought about missing a boat or a train. The reason? I read “Apple AI Chief John Giannandrea Retiring After Siri Delays.” I simply don’t know who has been responsible for Apple AI. Siri did not work when I looked at it on my wife’s iPhone many years ago. Apparently it doesn’t work today. Could that be a factor in the leadership changes at the Tim Apple outfit?

The write up states:

Giannandrea will serve as an advisor between now and 2026, with former Microsoft AI researcher Amar Subramanya set to take over as vice president of AI. Subramanya will report to Apple engineering chief Craig Federighi, and will lead Apple Foundation Models, ML research, and AI Safety and Evaluation. Subramanya was previously corporate vice president of AI at Microsoft, and before that, he spent 16 years at Google.

Apple will probably have a person who knows some people to call at Softie and Google headquarters. However, when will the next AI boat arrive. Apple excelled at announcing AI, but no boat arrived. Telegram has an excuse; for example, our owner Pavel Durov has been embroiled in legal hassles and arm wrestling with the reality that developing complex applications for the Telegram platform is too difficult. One would have thought that Apple could have figured out a way to improve Siri, but it apparently was lost in a reality distortion field. Telegram didn’t because Pavel Durov was in jail in Paris, then confined to the country, and had to report to the French judiciary like a truant school boy. Apple just failed.

The write up says:

Giannandrea’s departure comes after Apple’s major iOS 18 Siri failure. Apple introduced a smarter, “?Apple Intelligence?” version of ?Siri? at WWDC 2024, and advertised the functionality when marketing the iPhone 16. In early 2025, Apple announced that it would not be able to release the promised version of ?Siri? as planned, and updates were delayed until spring 2026. An exodus of Apple’s AI team followed as Apple scrambled to improve ?Siri? and deliver on features like personal context, onscreen awareness, and improved app integration. Apple is now rumored to be partnering with Google for a more advanced version of ?Siri? and other ?Apple Intelligence? features that are set to come out next year.

My hunch is that grafting AI into the bizarro world of the iPhone and other Apple computing devices may be a challenge. Telegram’s solution is to not do hardware. Apple is now an outfit distinguishing itself by missing the boat. When does the next one arrive?

Stephen E Arnold, December 4, 2025

From the Ostrich Watch Desk: A Signal for Secure Messaging?

December 4, 2025

![green-dino_thumb_thumb[1] green-dino_thumb_thumb[1]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/11/green-dino_thumb_thumb1_thumb.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

A dinobaby is not supposed to fraternize with ostriches. These two toed birds can run. It may be time for those cyber security folks who say, “Signal is secure to run away from that broad statement.” Perhaps something like sort of secure if the information presented by the “please, please, please, send us money” newspaper company. (Tip to the Guardian leadership. There are ways to generate revenue some of which I shared in a meeting about a decade ago.)

Listening, verifying, and thinking critically are skills many professionals may want to apply to routine meetings about secure services. Thanks, Venice.ai. Good enough.

The write up from the “please, please, please, donate” outfit is “The FBI Spied on a Signal Group Chat of Immigration Activists, Records Reveal.” The subtitle makes clear that I have to mind the length of my quotes and emphasize that absolutely no one knows about this characteristic of super secret software developed by super quirky professionals working in the not-so-quirky US of A today.

The write up states:

The FBI spied on a private Signal group chat of immigrants’ rights activists who were organizing “courtwatch” efforts in New York City this spring, law enforcement records shared with the Guardian indicate.

How surprised is the Guardian? The article includes this statement, which I interpret as the Guardian’s way of saying, “You Yanks are violating privacy.” Judge for yourself:

Spencer Reynolds, a civil liberties advocate and former senior intelligence counsel with the DHS, said the FBI report was part of a pattern of the US government criminalizing free speech activities.

Several observations are warranted:

- To the cyber security vice president who told me, “Signal is secure.” The Guardian article might say, “Ooops.” When I explained it was not, he made a Three Stooges’ sound and cancel cultured me.

- When appropriate resources are focused on a system created by a human or a couple of humans, that system can be reverse engineered. Did you know Android users can drop content on an iPhone user’s device. What about those how-tos explaining the insecurity of certain locks on YouTube? Yeah. Security.

- Quirky and open source are not enough, and quirky will become less suitable as open source succumbs to corporatism and agentic software automates looking for tricks to gain access. Plus, those after-the-fact “fixes” are usually like putting on a raincoat after the storm. Security enhancement is like going to the closest big box store for some fast drying glue.

One final comment. I gave a lecture about secure messaging a couple of years ago for a US government outfit. One topic was a state of the art messaging service. Although a close hold, a series of patents held by entities in Virginia disclosed some of the important parts of the system and explained in a way lawyers found just wonderful a novel way to avoid Signal-type problems. The technology is in use in some parts of the US government. Better methods for securing messages exist. Open source, cheap, and easy remains popular.

Will I reveal the name of this firm, provide the patent numbers in this blog, and present my diagram showing how the system works? Nope.

PS to the leadership of the Guardian. My recollection is that your colleagues did not know how to listen when I ran down several options for making money online. Your present path may lead to some tense moments at budget review time. Am I right?

Stephen E Arnold, December 4, 2025

Microsoft Demonstrates Its Commitment to Security. Right, Copilot

December 4, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I read on November 20, 2025, an article titled “Critics Scoff after Microsoft Warns AI Feature Can Infect Machines and Pilfer Data.” My immediate reaction was, “So what’s new?” I put the write up aside. I had to run an errand, so I grabbed the print out of this Ars Technica story in case I had to wait for the shop to hunt down my dead lawn mower.

A hacking club in Moscow celebrates Microsoft’s decision to enable agents in Windows. The group seems quite happy despite sanctions, food shortages, and the special operation. Thanks, MidJourney. Good enough.

I worked through the short write up and spotted a couple of useful (if true) factoids. It may turn out that the information in this Ars Technica write up provide insight about Microsoft’s approach to security. If I am correct, threat actors, assorted money laundering outfits, and run-of-the-mill state actors will be celebrating. If I am wrong, rest easy. Cyber security firms will have no problem blocking threats — for a small fee of course.

The write up points to what the article calls a “warning” from Microsoft on November 18, 2025. The report says:

an experimental AI agent integrated into Windows can infect devices and pilfer sensitive user data

Yep, Ars Technica then puts a cherry on top with this passage:

Microsoft introduced Copilot Actions, a new set of “experimental agentic features” that, when enabled, perform “everyday tasks like organizing files, scheduling meetings, or sending emails,” and provide “an active digital collaborator that can carry out complex tasks for you to enhance efficiency and productivity.”

But don’t worry. Users can use these Copilot actions:

if you understand the security implications.

Wow, that’s great. We know from the psycho-pop best seller Thinking Fast and Slow that more than 80 percent of people cannot figure out how much a ball costs if the total is $1.10 and the ball costs one dollar more. Also, Microsoft knows that most Windows users do not disable defaults. I think that even Microsoft knows that turning on agentic magic by default is not a great idea.

Nevertheless, this means that agents combined with large language models are sparking celebrations among the less trustworthy sectors of those who ignore laws and social behavior conventions. Agentic Windows is the new theme part for online crime.

Should you worry? I will let you decipher this statement allegedly from Microsoft. Make up your own mind, please:

“As these capabilities are introduced, AI models still face functional limitations in terms of how they behave and occasionally may hallucinate and produce unexpected outputs,” Microsoft said. “Additionally, agentic AI applications introduce novel security risks, such as cross-prompt injection (XPIA), where malicious content embedded in UI elements or documents can override agent instructions, leading to unintended actions like data exfiltration or malware installation.”

I thought this sub head in the article exuded poetic craft:

Like macros on Marvel superhero crack

The article reports:

Microsoft’s warning, one critic said, amounts to little more than a CYA (short for cover your ass), a legal maneuver that attempts to shield a party from liability. “Microsoft (like the rest of the industry) has no idea how to stop prompt injection or hallucinations, which makes it fundamentally unfit for almost anything serious,” critic Reed Mideke said. “The solution? Shift liability to the user. Just like every LLM chatbot has a ‘oh by the way, if you use this for anything important be sure to verify the answers” disclaimer, never mind that you wouldn’t need the chatbot in the first place if you knew the answer.”

Several observations are warranted:

- How about that commitment to security after SolarWinds? Yeah, I bet Microsoft forgot that.

- Microsoft is doing what is necessary to avoid the issues that arise when the Board of Directors has a macho moment and asks whoever is the Top Dog at the time, “What about the money spent on data centers and AI technology? You know, How are you going to recoup those losses?

- Microsoft is not asking its users about agentic AI. Microsoft has decided that the future of Microsoft is to make AI the next big thing. Why? Microsoft is an alpha in a world filled with lesser creatures. The answer? Google.

Net net: This Ars Technica article makes crystal clear that security is not top of mind among Softies. Hey, when’s the next party?

Stephen E Arnold, December 4, 2025

Titanic Talk: This Ship Will Not Fail

December 4, 2025

It’s too big to fail! How many times have we heard that phrase? There’s another common expression that makes more sense: The bigger they are the harder they fall. On his blog, Will Gallego writes about that idea: “Big Enough To Fail.” Through a lot of big words (intelligently used BTW), Gallego explains that big stuff fails all the time.

It’s actually a common occurrence, because crap happens. Outages daily occur, Mother Nature shows her wraith, acts of God happen, and systems fail due to mistakes. Gallego makes the observation that we’ve accepted these issues and he explains why:

- “It’s so exceptional (or feels that way). This is less so about frequency but that when a company becomes so big you just assume they’re impervious to failure, a shock and awe to the impossible.

- The lack of choices in services informs your response. Are there other providers? Sure, but with the continuous consolidation of businesses, we have fewer options every day.

- You’re locked in on your choices. Are you going to knock on Google’s door and complain, take three years to move out of one virtual data center and into another, while retraining your staff, updating your internal documents, and updating your code? No, you’re likely not.

- Failover is costly. Similarly, those at the sharp end know that the level of effort in building failover for something like this is frequently impractical. It would cost too much to set up, to maintain as developers, it would remove effort that could be put towards new features, and the financial cost backing that might be considered infeasible.

- The brittleness is everywhere. The level of complexity and the highly coupled nature of interconnected services means we’ve become brittle to failures. Doubly so when those services are the underpinnings of what we build on. “The internet is down today” as the saying goes, despite the internet having no principle nucleus. This is considered acceptable.

- We’re all in it together. When a service as large as these goes down, there’s a good chance we’re seeing so many failures in so many places that it becomes reasonable to also be down. Your competitors are likely down, your customers might be – there might be too much failure to go around to cast it in any one direction.

Ultimately, this leads into resilience engineering which is “reframing how we look incidents.” Gallego ends the article by saying we should take everything in stride, show some show patience, and give a break to the smaller players in the game. His approach is more human aka realistic unlike the egotistical rants that sank the Titanic. It’s unsinkable or it won’t fail! Yes, it will. Prepare for the eventualities. Whitney Grace, December 4, 2025

A New McKinsey Report Introduces New Jargon for Its Clients

December 3, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

I read “Agents, Robots, and Us: Skill Partnerships in the Age of AI.” The write up explains that lots of employees will be terminated. Think of machines displacing seamstresses. AI is going to do that to jobs, lots of jobs.

I want to focus on a different aspect of the McKinsey Global Institute Report (a PR and marketing entity not unlike Telegram’s TON Foundation in my view).

Thanks, Vencie. Your cartoon contains neither females nor minorities. That’s definitely a good enough approach. But you have probably done a number on a few graphic artists.

First, the report offers you the potential client an opportunity to use McKinsey’s AI chatbot. The service is a test, but I have a hunch that it is not much of a test. The technology will be deployed so that McKinsey can terminate those who underperform in certain work related tasks. The criteria for keeping one’s job at a blue chip consulting firm varies from company to company. But those who don’t quit to find greener or at least less crazy pastures will now work under the watchful eye of McKinsey AI. It takes a great deal of time to write a meaningful analysis of a colleague’s job performance. Let AI do it with exceptions made for “special” hires of course. Give it a whirl.

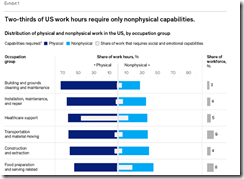

Second, the report what I call consultant facts. These are statements which link the painfully obvious with a rationale. Let me give you an example from this pre-Thanksgiving sales document. McKinsey states:

Two thirds of US work hours require only nonphysical capabilities

The painfully obvious: Most professional work is not “physical.” That means 67 percent of an employee’s fully loaded cost can be shifted to smart or semi-smart, good enough AI agentic systems. Then the obvious and the implication of financial benefits is supported by a truly blue chip chart. I know because as you can see, the graphics are blue. Here’s a segment of the McKinsey graph:

Notice that the chart is presented so that a McKinsey professional can explain the nested bar charts and expand on such data as “5 percent of a health care workforce can be agentized.” Will that resonate with hospital administrators working for roll up of individual hospitals. That’s big money. Get the AI working in one facility and then roll it out. Boom. An upside that seems credible. That’s the key to the consultant facts. Additional analysis is needed to tailor these initial McKinsey graph data to a specific use case. As a sales hook, this works and has worked for decades. Fish never understand hooks with plastic bait. Deer never quite master automobiles and headlights.

Third, the report contains sales and marketing jargon for 2026 and possibly beyond. McKinsey hopes for decades to come I think. Here’s a small selection of the words that will be used, recycled, and surface in lectures by AI experts to quite large crowds of conference attendees:

AI adjacent capabilities

AI fluency

Embodied AI

HMC or human machine collaboration

High prevalence skills

Human-agent-robot roles

technical automation potential

If you cannot define these, you need to hire McKinsey. If you want to grow as a big time manager, send that email or FedEx with a handwritten note on your engraved linen stationery.

Fourth, some humans will be needed. McKinsey wants to reassure its clients that software cannot replace the really valuable human. What do you think makes a really valuable worker beyond AI fluency? [a] A professional who signed off on a multi-million McKinsey consulting contract? [b] A person who helped McKinsey consultants get the needed data and interviews from an otherwise secretive client with compartmentalized and secure operating units? [b] A former McKinsey consultant now working for the firm to which McKinsey is pitching an AI project.

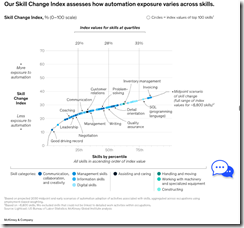

Fifth, the report introduces a new global index. The data in this free report is unlikely to be free in the future. McKinsey clients can obtain these data. This new global index is called the Skills Change Index. Here’s an example. You can get a bit more marketing color in the cited report. Just feast your eyes on this consultant fact packed chart:

Several comments. The weird bubble in the right hand page’s margin is your link to the McKinsey AI system. Give it a whirl, please. Look at the wonderland of information in a single chart presented in true blue, “just the facts, mam” style. The hawk-eyed will see that “leadership” seems immune to AI. Obviously senior management smart enough to hire McKinsey will be AI fluent and know the score or at least the projected financial payoff resulting from terminating staff who fail to up their game when robots do two thirds of the knowledge workers’ tasks.

Why has McKinsey gone to such creative lengths to create an item like this marketing collateral? Multiple teams labored on this online brochure. Graphic designers went through numerous versions of the sliding panels. McKinsey knows there is money in those AI studies. The firm will apply its intellectual method to the wizards who are writing checks to AI companies to build big data centers. Even Google is hedging its bets by packaging its data centers as providers to super wary customers like NATO. Any company can benefit from AI fluency-centric efficiency inputs. Well, not any. The reason is that only companies who can pay McKinsey fees quality to be clients.

The 11 people identified as the authors have completed the equivalent of a death march. Congratulations. I applaud you. At some point in your future career, you can look back on this document and take pride in providing a road map for companies eager to dump human workers for good enough AI systems. Perhaps one of you will be able to carry a sign in a major urban area that calls attention to your skills? You can look back and tell your friends and family, “I was part of this revolution.” So Happy holidays to you, McKinsey, and to the other blue chip firms exploiting good enough smart software.

Stephen E Arnold, December 3, 2025

Meta: Flying Its Flag for Moving Fast and Breaking Things

December 3, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

Meta, a sporty outfit, is the subject of an interesting story in “Press Gazette,” an online publication. The article “News Publishers File Criminal Complaint against Mark Zuckerberg Over Scam Ads” asserts:

A group of news publishers have filed a police complaint against Meta CEO Mark Zuckerberg over scam Facebook ads which steal the identities of journalists. Such promotions have been widespread on the Meta platform and include adverts which purport to be authored by trusted names in the media.

Thanks, MidJourney. Good enough, the gold standard for art today.

I can anticipate the outputs from some Meta adherents; for example, “We are really, really sorry.” or “We have specific rules against fraudulent behavior and we will take action to address this allegation.” Or, “Please, contact our legal representative in Sweden.”

The write up does not speculate as I just did in the preceding paragraph. The article takes a different approach, reporting:

According to Utgivarna: “These ads exploit media companies and journalists, cause both financial and psychological harm to innocent people, while Meta earns large sums by publishing the fraudulent content.” According to internal company documents, reported by Reuters, Meta earns around $16bn per year from fraudulent advertising. Press Gazette has repeatedly highlighted the use of well-known UK and US journalists to promote scam investment groups on Facebook. These include so-called pig-butchering schemes, whereby scammers win the trust of victims over weeks or months before persuading them to hand over money. [Emphasis added by Beyond Search]

On November 22, 2025, Time Magazine ran this allegedly accurate story “Court Filings Allege Meta Downplayed Risks to Children and Misled the Public.” In that article, the estimable social media company found itself in the news. That write up states:

Sex trafficking on Meta platforms was both difficult to report and widely tolerated, according to a court filing unsealed Friday. In a plaintiffs’ brief filed as part of a major lawsuit against four social media companies, Instagram’s former head of safety and well-being Vaishnavi Jayakumar testified that when she joined Meta in 2020 she was shocked to learn that the company had a “17x” strike policy for accounts that reportedly engaged in the “trafficking of humans for sex.”

I find it interesting that Meta is referenced in legal issues involving two particularly troublesome problems in many countries around the world. The one two punch is sex trafficking and pig butchering. I — probably incorrectly — interpret these two allegations as kiddie crime and theft. But I am a dinobaby, and I am not savvy to the ways of the BAIT (big AI tech)-type companies. Getting labeled as a party of sex trafficking and pig butchering is quite interesting to me. Happy holidays to Meta’s PR and legal professionals. You may be busy and 100 percent billable over the holidays and into the new year.

Several observations may be warranted:

- There are some frisky BAIT outfits in Silicon Valley. Meta may well be competing for the title as the Most Frisky Firm (MFF). I wonder what the prize is?

- Meta was annoyed with a “tell all” book written by a former employee. Meta’s push back seemed a bit of a tell to me. Perhaps some of the information hit too close to the leadership of Meta? Now we have sex and fraud allegations. So…

- How will Facebook, Instagram, and WhatsApp innovate in ad sales once Meta’s AI technology is fully deployed? Will AI, for example, block ad sales that are questionable? AI does make errors, which might be a useful angle for Meta going forward.

Net net: Perhaps some journalist with experience in online crime will take a closer look at Meta. I smell smoke. I am curious about the fire.

Stephen E Arnold, December 3, 2025

Open Source Now for Rich Peeps

December 3, 2025

Once upon a time, open source was the realm of startups in a niche market. Nolan Lawson wrote about “The Fate Of ‘Small’ Open Source” on his blog Read The Tea Leaves. He explains that more developers are using AI in their work and it’s step ahead of how coding used to be in the past. He observed a societal change that has been happening since the invention of the Internet: “I do think it’s a future where we prize instant answers over teaching and understanding.”

Old-fashioned research is now an art that few decide to master except in some circumstances. However, that doesn’t help the open source libraries that built the foundation of modern AI and most systems. Lawson waxes poetic about the ending of an era and what’s the point of doing something new in an old language. He uses a lot of big words and tech speak that most people won’t understand, but I did decipher that he’s upset that big corporations and AI chatbots are taking away the work.

He remains hopeful though:

“So if there’s a conclusion to this meandering blog post (excuse my squishy human brain; I didn’t use an LLM to write this), it’s just that: yes, LLMs have made some kinds of open source obsolete, but there’s still plenty of open source left to write. I’m excited to see what kinds of novel and unexpected things you all come up with.”

My squishy brain comprehends that the future is as bleak as the present but it’s all relative and how we decide to make it.

Whitney Grace, December 3, 2025