Sam AI-Man Is Not Impressing ZDNet

December 9, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

In the good old days of Ziff Communication, editorial and ad sales were separated. The “Chinese wall” seemed to work. It would be interesting to go back in time and let the editorial team from 1985 check out the write up “Stop Using ChatGPT for Everything: The AI Models I Use for Research, Coding, and More (and Which I Avoid).” The “everything” is one of those categorical affirmatives that often cause trouble for high school debaters or significant others arguing with a person who thinks a bit like a Silicon Valley technology person. Example: “I have to do everything around here.” Ever hear that?

Yes, granny. You say one thing, but it seems to me that you are getting your cupcakes from a commercial bakery. You cannot trust dinobabies when they say “I make everything,” can you?

But the subtitle strikes me as even more exciting; to wit:

From GPT to Claude to Gemini, model names change fast, but use cases matter more. Here’s how I choose the best model for the task at hand.

This is the 2025 equivalent to a 1985 article about “Choosing Character Sets with EGA.” Peter Norton’s article from November 26, 1985, was mostly arcana, not too much in the opinion game. The cited “Stop Using ChatGPT for Everything” is quite different.

Here’s a passage I noted:

(Disclosure: Ziff Davis, ZDNET’s parent company, filed an April 2025 lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.)

And what about ChatGPT as a useful online service? Consider this statement:

However, when I do agentic coding, I’ve found that OpenAI’s Codex using GPT-5.1-Max and Claude Code using Opus 4.5 are astonishingly great. Agentic AI coding is when I hook up the AIs to my development environment, let the AIs read my entire codebase, and then do substantial, multi-step tasks. For example, I used Codex to write four WordPress plugin products for me in four days. Just recently, I’ve been using Claude Code with Opus 4.5 to build an entire complex and sophisticated iPhone app, which it helped me do in little sprints over the course of about half a month. I spent $200 for the month’s use of Codex and $100 for the month’s use of Claude Code. It does astonish me that Opus 4.5 did so poorly in the chatbot experience, but was a superstar in the agentic coding experience, but that’s part of why we’re looking at different models. AI vendors are still working out the kinks from this nascent technology.

But what about “everything” as in “stop using ChatGPT for everything”? Yeah, well, it is 2025.

And what about this passage? I quote:

Up until now, no other chatbot has been as broadly useful. However, Gemini 3 looks like it might give ChatGPT a run for its money. Gemini 3 has only been out for a week or so, which is why I don’t have enough experience to compare them. But, who knows, in six months this category might list Gemini 3 as the favorite model instead of GPT-5.1.

That “everything” still haunts me. It sure seems to me as if the ZDNet article’s author uses ChatGPT a great deal. By the author’s own admission, he “doesn’t have enough experience to compare them.” But, but, but (as Jack Benny used to say), the headline still blurts, “Stop using ChatGPT for everything!” Yeah, seems inconsistent to me. But, hey, I am a dinobaby.

I found this passage interesting as well:

Among the big names, I don’t use Perplexity, Copilot, or Grok. I know Perplexity also uses GPT-5.1, but it’s just never resonated with me. It’s known for search, but the few times I’ve tried some searches, its results have been meh. Also, I can’t stand the fact that you have to log in via email.

I guess these services suck as much as the ChatGPT system the author uses. Why? Yeah, the login method. That’s substantive stuff in AI land.

Observations:

- I don’t think this write up is output by AI or at least any AI system with which I am familiar.

- I find the title and the text a bit out of step.

- The categorical affirmative is logically loosey goosey.

Net net: Sigh.

Stephen E Arnold, December 9, 2025

Google Presents an Innovative Way to Say, “Generate Revenue”

December 9, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

One of my contacts sent me a link to an interesting document. Its title is “A Pragmatic Vision for Interpretability.” I am not sure about the provenance of the write up, but it strikes me as output from the legal department, corporate management, and the wizards. First impression: Very lengthy. I estimate that it requires about 11,000 words to say, “Generate revenue.” My second impression: A weird blend of consulting speak and nervousness.

A group of Googlers involved in advanced smart software ideation get a phone call clarifying they have to hit revenue targets. No one looks too happy. The esteemed leader is on the conference room wall. He provides a North Star to the wandering wizards. Thanks, Venice.ai. Good enough, just like so much AI system output these days.

The write up is too long to meander through its numerous sections, arguments, and arm waving. I want to highlight three facets of the write up and leave it up to you to print this puppy out, read it on a delayed flight, and consider how different this document is from the no output approach Google used when it was absolutely dead solid confident that its search-ad business strategy would rule the world forever. Well, forever seems to have arrived for Googzilla. Hence, be pragmatic. This, in my experience, is McKinsey speak for hit your financial targets or hit the road.

First, consider this selected set of jargon:

Comparative advantage (maybe keep up with the other guys?)

Load-bearing beliefs

“Mech Interp” / “mechanistic interpretability” (as opposed to “classic” interp)

Method minimalism

North Star (is it the person on the wall in the cartoon or just revenue?)

Proxy task

SAE (maybe sparse autoencoders?)

Steering against evaluation awareness (maybe avoiding real world feedback?)

Suppression of eval-awareness (maybe real-world feedback?)

Time-box for advanced research

The document tries too hard to avoid saying, “Focus on stuff that makes money.” I think that, however, is what the word choice is trying to present in very fancy, quasi-baloney jingoism.

Second, take a look at the three sets of fingerprints in what strikes me as a committee-written document.

- Researchers want to just follow their ideas about smart software just as we have done at Google for many years

- Lawyers and art history majors who want to cover their tailfeathers when Gemini goes off the rails

- Google leadership who want money or at the very least research that leads to products.

I can see a group meeting virtually, in person, and in the trenches of a collaborative Google Doc until this masterpiece of management weirdness is given the green light for release. Google has become artful in make-work, wordsmithing, and pretend reconciliation of the battles among the different factions, city states, and empires within Google. One can almost anticipate how the head of ad sales reacts to money pumped into data centers and research groups who speak a language familiar to Klingons.

Third, consider why Google felt compelled to crank out a tortured document to nail on the doors of an AI conference. When I interacted with Google over a number of years, I did not meet anyone reminding me of Martin Luther. Today, if I were to return to Shoreline Drive, I might encounter a number of deep fakes armed with digital hammers and fervid eyes. I think the Google wants to make sure that no more Loons and Waymos become the butt of jokes by stand-up comedians on late night TV or (heaven forbid) TikTok. Think of the dead cat in the Mission and the dead puppy in what’s called (I think) the Western Addition. (I used to live in Berkeley, and I never paid much attention to the idiosyncratic names slapped on undifferentiable areas of the City by the Bay.)

I think that Google leadership seeks in this document:

- To tell everyone it is focusing on stuff that sort of works. The crazy software that is just like Sundar is not on the to-do list.

- To remind everyone at the Google that we have to pay for the big, crazy data centers in space, our own nuclear power plants, and the cost of the home brew AI chips. Ads alone are no longer going to be 24×7 money printing machines because of OpenAI.

- To try to reduce the tension among the groups, cliques, and digital street gangs in the offices and the virtual spaces in which Googlers cogitate, nap, and use AI to be more efficient.

Net net: Save this document. It may become a historical artefact.

Stephen E Arnold, December 9, 2025

AI: Continuous Degradation

December 9, 2025

Many folks are unhappy with the flood of AI “tools” popping up unbidden. For example, writer Raghav Sethi at Make Use Of laments, “I’m Drowning in AI Features I Never Asked For and I Absolutely Hate It.” At first, Sethi was excited about the technology. Now, though, he just wishes the AI creep would stop. He writes:

“Somewhere along the way, tech companies forgot what made their products great in the first place. Every update now seems to revolve around AI, even if it means breaking what already worked. The focus isn’t on refining the experience anymore; it’s about finding new places to wedge in an AI assistant, a chatbot, or some vaguely ‘smart’ feature that adds little value to the people actually using it.”

Gemini is the author’s first example: He found it slower and less useful than the old Google Assistant, to which he returned. Never impressed by Apple’s Siri, he found Apple Intelligence made it even less useful. As for Microsoft, he is annoyed it wedges Copilot into Windows, every 365 app, and even the lock screen. Rather than a helpful tool, it is a constant distraction. Smaller firms also embrace the unfortunate trend. The maker of Sethi’s favorite browser, Arc, released its AI-based successor Dia. He asserts it “lost everything that made the original special.” He summarizes:

“At this point, AI isn’t even about improving products anymore. It’s a marketing checkbox companies use to convince shareholders they’re staying ahead in this artificial race. Whether it’s a feature nobody asked for or a chatbot no one uses, it’s all about being able to say ‘we have AI too.’ That constant push for relevance is exactly what’s ruining the products that used to feel polished and well-thought-out.”

And it does not stop with products, the post notes. It is also ruining “social” media. Sethi is more inclined to believe the dead Internet theory than he used to be. From Instagram to Reddit to X, platforms are filled with AI-generated, SEO-optimized drivel designed to make someone somewhere an easy buck. What used to connect us to other humans is now a colossal waste of time. Even Google Search, formerly a reliable way to find good information, now leads results with a confident AI summary that is often wrong.

The write-up goes on to remind us LLMs are built on the stolen work of human creators and that it is sopping up our data to build comprehensive profiles on us all. Both excellent points. (For anyone wishing to use AI without it reporting back to its corporate overlords, he points to this article on how to run an LLM on one’s own computer. The endeavor does require some beefy hardware, however.)

Sethi concludes with the wish companies would reconsider their rush to inject AI everywhere and focus on what actually makes their products work well for the user. One can hope.

Cynthia Murrell, December 9, 2025

The Web? She Be Dead

December 9, 2025

Journalists, Internet experts, and everyone with a bit of knowledge have been declaring the World Wide Web dead for thirty years. The term “World Wide Web” officially died with the new millennium, but what about the Internet itself? Ernie Smith at Tedium wrote about the demise of the Web: “The Sky Is Falling, The Web Is Dead.” Smith noticed that experts stated the Web is dead many times and he decided to investigate.

He turned to another expert: George Colony, the founder of Forrester Research. Forrester Research is one of the premier tech and business advisory firms in the world. Smith wrote this about Colony and his company:

“But there’s one area where the company—particularly Colony—gets it wrong. And it has to do with the World Wide Web, which Colony declared “dead” or dying on numerous occasions over a 30-year period. In each case, Colony was trying to make a bigger point about where online technology was going, without giving the Web enough credit for actually being able to get there.”

Smith strolls through instances of Colony declaring the Web is dead. The first was in 1995 followed by many other declarations of the dead Web. Smith made another smart observation:

“Can you see the underlying fault with his commentary? He basically assumed that Web technology would never improve and would be replaced with something else—when what actually happened is that the Web eventually integrated everything he wanted, plus more.

Which is funny, because Forrester’s main rival, International Data Corp., essentially said this right in the piece. ‘The web is the dirt road, the basic structure,’ IDC analyst Michael Sullivan-Trainor said. ‘The concept that you can kill the Web and start from square one is ridiculous. We are talking about using the Web, evolving it.’”

The Web and Internet evolve. Technology evolves. Smith has an optimistic view that is true about the Web: “I, for one, think the Web will do what it always does: Democratize knowledge.”

Whitney Grace, December 9, 2025

ChatGPT: Smoked by GenX MBA Data

December 8, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

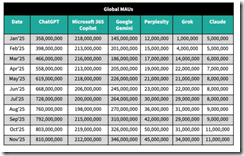

I saw this chart from Sensor Tower in several online articles. Examples include TechCrunch, LinkedIn, and a couple of others. Here’s the chart as presented by TechCrunch on December 5, 2025:

Yes, I know it is difficult to read. Complain to WordPress, not me, please.

The seven columns are labeled Date starting with January 2025. I am not sure if this is December 2024 data compiled in January 2025 or end-of-January 2025 data. Metadata would be helpful, but I am a dinobaby and this is a very GenX-type of Excel chart. The chart then presents what I think are mobile installs or some action related to the “event” captured when the Sensor Tower data receives a signal. I am not sure, and some remarks about how the data were collected would be helpful to a person disguised as a dinobaby. The column heads are not in alphabetical order. I assume the hassle of alphabetizing was too much work for whoever created the table. Here’s the order:

- ChatGPT

- Microsoft 365 Copilot

- Google Gemini

- Perplexity

- Grok

- Claude

The second thing I noticed was that the data do not reflect individual installs or uses. Thus, these data are of limited use to a dinobaby like me. Sure, I can see that ChatGPT’s growth slowed (if the numbers are on the money) and Gemini’s grew. But ChatGPT has a bigger base, and it may be finding it more difficult to attract installs or events, so the percent increase seems to shout, “Bad news, Sam AI-Man.”
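The “bigger base” point is plain arithmetic, and a few lines of Python make it concrete. The figures below are hypothetical round numbers chosen for illustration, not Sensor Tower data: the same absolute gain yields a much smaller percentage increase on a large base than on a small one.

```python
def percent_growth(start: float, end: float) -> float:
    """Relative change from start to end, in percent."""
    return (end - start) / start * 100.0


# Hypothetical figures for illustration only; these are NOT Sensor Tower numbers.
gain = 10_000_000  # the same absolute gain for both apps
big_base = percent_growth(500_000_000, 500_000_000 + gain)
small_base = percent_growth(50_000_000, 50_000_000 + gain)
print(f"large base: +{big_base:.1f}%")    # large base: +2.0%
print(f"small base: +{small_base:.1f}%")  # small base: +20.0%
```

Ten million new “events” is a 2 percent bump for the big app and a 20 percent bump for the small one, which is why percent growth alone says little about who is winning.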

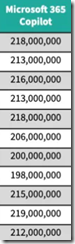

Then there is the issue of the number of customers. We are now shifting from the impression some may have that these numbers represent individual humans to the fuzzy notion of app events. Why does this matter? Google and Microsoft have many more corporate and individual users than the other firms combined. If Google or Microsoft pushes AI features or provides free access, the offer will appeal to that user base and the number of “events” will jump. The data narrow Microsoft’s AI to Microsoft 365 Copilot. Google’s numbers are not narrowed. They may be, but there is no metadata to help me out. Here’s the Microsoft column:

As a result, the graph of the Microsoft 365 Copilot looks like this:

What’s going on from May to August 2025? I have no clue. Vacations maybe? Again, that old fashioned metadata, footnotes, and some information about methodology would be helpful to a dinobaby. I mention the Microsoft data for one reason: None of the other AI systems listed in the Sensor Tower data table have this characteristic. Don’t users of ChatGPT, Google, et al, go on vacation? If one set of data for an important company has an anomaly, can one trust the other data? Those data are smooth.

When I look at the complete array of numbers, I expect to see more ones. There is some weird Statistics 101 “law” about digit frequency, and it seems to this dinobaby that it’s not being substantiated in the table. I can overlook how tidy the numbers are; after all, why not round big numbers? It works for Fortune 1000 budgets and for many government agencies’ budgets.
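The digit-frequency “law” is presumably Benford’s law, which predicts that the leading digit d of many naturally occurring figures appears with probability log10(1 + 1/d), so roughly 30 percent of values should start with a 1. The sketch below uses hypothetical helper functions (not anything Sensor Tower publishes) to compare a list of numbers against that expectation with a Pearson chi-square statistic. One caution: Benford’s law fits data spanning several orders of magnitude; heavily rounded figures or a short table can deviate for innocent reasons, so a poor fit is a prompt for questions, not proof.

```python
import math
from collections import Counter


def benford_expected() -> dict[int, float]:
    """Benford's law: P(d) = log10(1 + 1/d) for leading digit d = 1..9."""
    return {d: math.log10(1 + 1 / d) for d in range(1, 10)}


def leading_digit(x: float) -> int:
    """First significant digit of a nonzero number (e.g. 0.0052 -> 5)."""
    # After abs(), str() has no sign; stripping leading '0' and '.'
    # characters exposes the first significant digit.
    return int(str(abs(x)).lstrip("0.")[0])


def observed_frequencies(values) -> dict[int, float]:
    """Share of nonzero values whose leading digit is 1..9."""
    digits = [leading_digit(v) for v in values if v != 0]
    counts = Counter(digits)
    n = len(digits)  # assumes at least one nonzero value
    return {d: counts.get(d, 0) / n for d in range(1, 10)}


def chi_square(values) -> float:
    """Pearson chi-square statistic: observed leading-digit counts vs Benford."""
    n = sum(1 for v in values if v != 0)
    expected = benford_expected()
    observed = observed_frequencies(values)
    # (O - E)^2 / E with O = n * observed share and E = n * expected share
    return sum(n * (observed[d] - expected[d]) ** 2 / expected[d] for d in range(1, 10))


# Illustration only: powers of two are a textbook Benford-like sequence;
# the second list has uniformly distributed leading digits.
powers_of_two = [2 ** k for k in range(1, 200)]
uniform_digits = list(range(1, 10)) * 50
print(round(chi_square(powers_of_two), 2))   # small: close to Benford
print(round(chi_square(uniform_digits), 2))  # large: far from Benford
```

Feeding the table’s numbers into `chi_square` would give a rough signal; the lower the statistic, the closer the leading digits sit to what Benford’s law predicts.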

A person looking at these data will probably think “number of users.” Nope, number of events recorded by Sensor Tower. Some of the vendors can force or inject AI into a corporate, governmental, or individual user stream. Some “events” may be triggered by workflows that use multiple AI systems. There are probably a few people with too much time and no money sense paying for multiple services and using them to explore a single topic or area of inquiry; for example, what is the psychological make up of a GenX MBA who presents data that can be misinterpreted.

Plus, the AI systems are functionally different and probably not comparable using “event” data. For example, Copilot may reflect events in corporate document editing. The Google can slam AI into any of its multi-billion user, system, or partner activities. I am not sure about Claude (Anthropic) or Grok. What about Amazon? Nowhere to be found I assume. The Chinese LLMs? Nope. Mistral? Crickets.

Finally, should I raise the question of demographics? Ah, you say, “No.” Okay, I am easy. Forget demos; there aren’t any.

Please, check out the cited article. I want to wrap up by quoting one passage from the TechCrunch write up:

Gemini is also increasing its share of the overall AI chatbot market when compared across all top apps like ChatGPT, Copilot, Claude, Perplexity, and Grok. Over the past seven months (May-November 2025), Gemini increased its share of global monthly active users by three percentage points, the firm estimates.

This sounds like Sensor Tower talking.
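“Three percentage points” is easy to misread as “three percent.” A percentage-point change is the simple difference between two shares; the relative change depends on the starting share, which the quoted passage does not give. A minimal sketch, assuming hypothetical starting shares of 10 and 30 percent:

```python
def share_change(old_share_pct: float, new_share_pct: float) -> tuple[float, float]:
    """Return (change in percentage points, relative change in percent)."""
    points = new_share_pct - old_share_pct
    relative = points / old_share_pct * 100.0
    return points, relative


# Hypothetical starting shares; the quoted passage gives only the 3-point gain.
for start in (10.0, 30.0):
    points, relative = share_change(start, start + 3.0)
    print(f"{start:.0f}% -> {start + 3:.0f}%: +{points:.0f} points, +{relative:.0f}% relative")
```

The same three-point gain is a 30 percent relative jump from a 10 percent share and a 10 percent relative jump from a 30 percent share. Without the starting figure, the headline number is hard to weigh.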

Net net: I am not confident in GenX “event” data, which seems to say, “ChatGPT is losing the AI race.” I may agree in part with this sentiment, but the data from Sensor Tower do not influence me. But marketing is marketing.

Stephen E Arnold, December 8, 2025

Clippy, How Is Copilot? Oh, Too Bad

December 8, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

In most of my jobs, rewards landed on my desk when I sold something. When the firms silly enough to hire me rolled out a product, I cannot remember one that failed. The sales professionals were the early warning system for many of our consulting firm’s clients. Management provided money to a product manager or R&D whiz with a great idea. Then a product or new service idea emerged, often at a company event. Some were modest, but others featured bells and whistles. One such roll out had a big name person who was a former adviser to several presidents. These firms were either lucky or well managed. Product dogs, diseased ferrets, and outright losers were identified early and the efforts redirected.

Two sales professionals realize that their prospects resist Microsoft’s agentic pawing. Mortgages must be paid. Sneakers must be purchased. Food has to be put on the table. Sales are needed, not push backs. Thanks, Venice.ai. Good enough.

But my employers were in tune with what their existing customer base wanted. Climbing a tall tree and going out on a limb were not common occurrences. Even Apple, which resides in a peculiar type of commercial bubble, recognizes a product that does not sell. A recent example is the itsy bitsy, teeny weenie mobile thingy. Apple bounced back with the Granny Scarf designed to hold any mobile phone. The thin and light model is not killed; it’s just not everywhere like the old reliable orange iPhone.

Sales professionals talk to prospects and customers. If something is not selling, the sales people report, “Problemo, boss.”

In the companies which employed me, the sales professionals knew what was coming and could mention it in appropriate terms to those in the target market. This happened before the product or service was in production or available to clients. My employers (Halliburton, Booz, Allen, and a couple of others held in high esteem) had the R&D, the market signals, the early warning system for bad ideas, and the refinement or improvement mechanism working in a reliable way.

I read “Microsoft Drops AI Sales Targets in Half after Salespeople Miss Their Quotas.” The headline suggested three things to me instantly:

- The pre-sales early warning radar system did not exist or it was broken

- The sales professionals said in numbers, “Boss, this Copilot AI stuff is not selling.”

- Microsoft committed billions of dollars and significant, expensive professional staff time to something that prospects and customers do not rush to write checks for, use, or tell their friends about as the next big thing.

The write up says:

… one US Azure sales unit set quotas for salespeople to increase customer spending on a product called Foundry, which helps customers develop AI applications, by 50 percent. Less than a fifth of salespeople in that unit met their Foundry sales growth targets. In July, Microsoft lowered those targets to roughly 25 percent growth for the current fiscal year. In another US Azure unit, most salespeople failed to meet an earlier quota to double Foundry sales, and Microsoft cut their quotas to 50 percent for the current fiscal year. The sales figures suggest enterprises aren’t yet willing to pay premium prices for these AI agent tools. And Microsoft’s Copilot itself has faced a brand preference challenge: Earlier this year, Bloomberg reported that Microsoft salespeople were having trouble selling Copilot to enterprises because many employees prefer ChatGPT instead.
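The quota cuts in the quoted passage are easier to see as dollar targets. A minimal sketch, assuming a hypothetical baseline Foundry spend of $1 million for a territory, reading “double” as 100 percent growth and “cut their quotas to 50 percent” as 50 percent growth; “unit A” and “unit B” are placeholder labels for the two unnamed Azure sales units.

```python
def quota_target(baseline_spend: float, growth_pct: float) -> float:
    """Spending level a territory must reach to hit a growth quota."""
    return baseline_spend * (1 + growth_pct / 100.0)


# Hypothetical baseline; the article gives growth percentages, not dollar figures.
baseline = 1_000_000.0
# (label, original growth quota %, reduced growth quota %) per the quoted passage.
units = [("unit A", 50, 25), ("unit B", 100, 50)]
for label, old_pct, new_pct in units:
    before = quota_target(baseline, old_pct)
    after = quota_target(baseline, new_pct)
    print(f"{label}: target ${before:,.0f} -> ${after:,.0f}")
```

Under these assumptions, unit A’s target falls from $1,500,000 to $1,250,000 and unit B’s from $2,000,000 to $1,500,000. In both cases the required new spending is halved.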

Microsoft appears to have listened to the feedback. The adjustment, however, does not address the failure to implement the type of marketing probing process used by Halliburton and Booz, Allen: Microsoft implemented the “think it and it will become real” approach. The thinking in this case is that software can perform human work roles in a way that is equivalent to or better than a human’s execution.

I may be a dinobaby, but I figured out quickly that smart software has been for the last three years a utility. It is not quite useless, but it is not sufficiently robust to do the work that I do. Other people are on the same page with me.

My take away from the lower quotas is that Microsoft should have a rethink. The OpenAI bet, the AI acquisitions, the death march to put software that makes mistakes in applications millions use in quite limited ways, and the crazy publicity output to sell Copilot are sending Microsoft leadership both audio and visual alarms.

Plus, OpenAI has copied Google’s weird Red Alert. Since Microsoft has skin in the game with OpenAI, perhaps Microsoft should open its eyes and check out the beacons and listen to the klaxons ringing in Softieland sales meetings and social media discussions about Microsoft AI? Just a thought. (That Telegram virtual AI data center service looks quite promising to me. Telegram’s management is avoiding the Clippy-type error. Telegram may fail, but that outfit is paying GPU providers in TONcoin, not actual fiat currency. The good news is that MSFT can make Azure AI compute available to Telegram and get paid in TONcoin. Sounds like a plan to me.)

Stephen E Arnold, December 8, 2025

Guess Who Will Not Advertise on Gizmodo? Give Up?

December 8, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I spotted an interesting write up on Gizmodo. The article “438 Reasons to Doubt that David Sacks Should Work for the Federal Government” suggests that none of the companies in which David Sacks has invested will throw money at Gizmodo. I don’t know that Mr. Sacks will direct his investments to avoid Gizmodo, but I surmise that the cited article may not induce him to tap his mobile and make ad buys on the Gizmodo thing.

A young trooper contemplates petting one of the animals. Good enough, Venice.ai. I liked that you omitted my instruction to have the young boy scout put his arm through the bars in order to touch the tiger. But, hey, good enough is the gold standard.

The write up reports as actual factual:

His investments may expose him to conflicts of interest. They also probably distort common sense.

Now wait a Silicon Valley illegal left turn: “Conflicts of interest?”

The write up explains:

The presence of such a guy—who everyone knows has a massive tech-based portfolio of investments—totally guarantees the perception that public policy is being shaped by self-dealing in the tech world, which in turn distorts common sense.

The article prances forth:

When you zoom out, it looks like this: As an advisor, Trump hired a venture capitalist who held a $500,000-per-couple dinner for him last year in San Francisco. It turns out that guy has a stake in a company that makes AI night vision goggles. When he writes you an AI action plan calling for AI in the military, and your Pentagon ends up contracting with that very company, that’s just sensible government policy. After all, the military needs AI-powered night vision goggles, doesn’t it?

Several observations:

- The cited article appears to lean heavily on reporting by the New York Times. The Gray Lady does not seem charmed by David Sacks, but that’s just my personal interpretation.

- The idea that Silicon Valley viewpoints appear to influence some government projects is interesting. Combine that with the streamlining of US government procurement policies, and I wonder if it is possible that some projects get slipstreamed. I don’t know. Maybe?

- Poking the tender souls of some big time billionaires strikes me as a risk-filled approach for online media to create actionable information. I think the action may not be what Gizmodo wants, however.

Net net: This new friskiness in itself is interesting. A thought crossed my mind about the performance capabilities of AI or maybe Anduril’s drones? But that’s a different type of story to create. It is just easier to recycle the Gray Lady. It is 2025, right after a holiday break?

Stephen E Arnold, December 8, 2025

Telegram’s Cocoon AI Hooks Up with AlphaTON

December 5, 2025

[This post is a version of an alert I sent to some of the professionals for whom I have given lectures. It is possible that the entities identified in this short report will alter their messaging and delete their Telegram posts. However, the thrust of this announcement is directionally correct.]

Telegram, in its rapid expansion into decentralized artificial intelligence, announced a deal with AlphaTON Capital Corp. The Telegram post revealed that AlphaTON would be a flagship infrastructure and financial partner. The announcement was posted to the Cocoon Group within hours of AlphaTON getting clear of U.S. SEC “baby shelf” financial restrictions. AlphaTON promptly launched a $420.69 million securities push. Telegram and AlphaTON either acted in a coincidental way or Pavel Durov moved to make clear his desire to build a smart, Telegram-anchored financial service.

AlphaTON, a Nasdaq microcap formerly known as Portage Biotech, rebranded in September 2025. The “new” AlphaTON claims to be deploying Nvidia B200 GPU clusters to support Cocoon, Telegram’s confidential-compute AI network. The company’s pivot from oncology to crypto-finance and AI infrastructure was sudden. Plus, AlphaTON’s CEO Brittany Kaiser (best known for Cambridge Analytica) has allegedly interacted with Russian political and business figures during earlier data-operations ventures. If the allegations are accurate, Ms. Kaiser has connections to Russia-linked influence and financial networks. Telegram is viewed by some organizations like Kucoin as a reliable operational platform for certain financial activities.

Telegram has positioned AlphaTON as a partner and developer in the Telegram ecosystem. Firms like Huione Guarantee allegedly used Telegram for financial maneuvers that resulted in criminal charges. Other alleged uses of the Telegram platform have included other illegal activities identified in the more than a dozen criminal charges for which Pavel Durov awaits trial in France. Telegram’s instant promotion of AlphaTON, combined with the firm’s new ability to raise hundreds of millions, points to a coordinated strategy to build an AI-enabled financial services layer using Cocoon’s VAIC or virtual artificial intelligence complex.

The message seems clear. Telegram is not merely launching a distributed AI compute service; it is enabling a low latency, secrecy enshrouded AI-crypto financial construct. Telegram and AlphaTON both see an opportunity to profit from a fusion of distributed AI, cross jurisdictional operation, and a financial pay off from transactions at scale. For me and my research team, the AlphaTON tie-up signals that Telegram’s next frontier may blend decentralized AI, speculative finance, and actors operating far from traditional regulatory guardrails.

As I explain in my monograph “Telegram Labyrinth” (available only to law enforcement, US intelligence officers, and cyber attorneys in the US), Telegram requires close monitoring and a new generation of intelware software. Yesterday’s tools were not designed for what Telegram is deploying itself and with its partners. Thank you.

Stephen E Arnold, December 5, 2025, 10:34 am US Eastern time

Cloudflare: Data without Context Are Semi-Helpful for PR

December 5, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Every once in a while, Cloudflare catches my attention. One example is today (December 5, 2025). My little clumsy feedreader binged and told me it was dead. Okay, no big deal. I poked around and the Internet itself seemed to be dead. I went to the gym and upon my return, I checked and the Internet was alive. A bit of poking around revealed that the information in “Cloudflare Down: Canva to Valorant to Shopify, Complete List of Services Affected by Cloudflare Outage” was accurate. Yep, Cloudflare, PR campaigner, and gateway to some of the datasphere seemed to be having a hiccup.

So what did today’s adventure spark in my dinobaby brain? Memories. Cloudflare was down again. November, December, and maybe the New Year will deliver another outage.

Let’s shift to another facet of Cloudflare.

When I was working on my handouts for my Telegram lecture, my team and I discovered comments that Pavel Durov was a customer. Then one of my Zoom talks failed because Cloudflare’s system failed. When Cloudflare struggled to its very capable and very fragile feet, I noted a link to “Cloudflare Has Blocked 416 Billion AI Bot Requests Since July 1.” Cloudflare appears to be on a media campaign to underscore that it, like Amazon, can take out a substantial chunk of the Internet while doing its level best to be a good service provider. Amusing idea: The Wired Magazine article coincides with Cloudflare stubbing its extremely comely and fragile toe.

Centralization for decentralized services means just one thing to me: A toll road with some profit pumping efficiencies guiding its repairs. Somebody pays for the concentration of what I call facilitating services. Even bulletproof hosting services have to use digital nodes or junction boxes like Cloudflare. Why? Many allow a person with a valid credit card to sign up for self-managed virtual servers. With these technical marvels, knowing what a customer is doing is work, hard work.

The numbers amaze the onlookers. Thanks, Venice.ai. Good enough.

When in Romania, I learned that a big service provider allows a customer with a credit card to use the service provider’s infrastructure and spin up and operate virtual gizmos. I heard from a person (anonymous person, of course), “We know some general things, but we don’t know what’s really going on in those virtual containers and servers.” The approach implemented by some service providers suggests that modern service providers build opacity into their architecture. That’s no big deal for me, but some folks do want to know a bit more than “Dude, we don’t know. We don’t want to know.”

That’s what interested me in the cited article. I don’t know about blocking bots. Is bot recognition 100 percent accurate? I have case examples of bots fooling business professionals into downloading malware. After 18 months of work on my Telegram project, my team and I can say with confidence, “In the Telegram systems, we don’t know how many bots are running each day. Furthermore, we don’t know how many Telegram bots have been coded in the last decade. It is difficult to know if an eGame is a game or a smart bot enhanced experience designed to hook kids on gambling and crypto.” Most people don’t know this important factoid. But Cloudflare, if the information in the Wired article is accurate, knows exactly how many AI bot requests have been blocked since July 1. That’s interesting for a company that took down the Internet this morning. How can a firm know one thing and not know it has a systemic failure? A person on Reddit.com noted, “Call it Clownflare.”

But the paragraph from the Wired article that I shall quote is particularly fascinating:

Prince cites stats that Cloudflare has not previously shared publicly about how much more of the internet Google can see compared to other companies like OpenAI and Anthropic or even Meta and Microsoft. Prince says Cloudflare found that Google currently sees 3.2 times more pages on the internet than OpenAI, 4.6 times more than Microsoft, and 4.8 times more than Anthropic or Meta does. Put simply, “they have this incredibly privileged access,” Prince says.

Several observations:

- What does “Google can see” actually mean? Is Google indexing content not available to other crawlers?

- The 4.6 figure is equally intriguing. Does it mean that Google has access to more than four times as many publicly accessible Web pages as other firms? None of the Web indexing outfits put “date last visited” or any time metadata on a result. That’s an indication that the “indexing” is a managed function designed for purposes other than a user’s need to know if the data are fresh.

- The numbers for Microsoft are equally interesting. Based on what I learned when speaking with some Softies, at one time Bing’s results were matched to Google’s results. The idea was that reachable Web sites not deemed important were not on the Bing must crawl list. Maybe Bing has changed? Microsoft is now in a relationship with Sam AI-Man and OpenAI. Does that help the Softies?

- The cited paragraph points out that Google sees 3.2 times more pages than OpenAI. However, spot checks in ChatGPT 5.1 on December 5, 2025, showed that OpenAI cited more current information than Gemini 3. Maybe my prompts were flawed? Maybe the Cloudflare numbers are reflecting something different from index and training or wrapper software freshness? Is there more to useful results than raw numbers?

- And what about the laggards? Anthropic and Meta are definitely behind the Google. Is this a surprise? For Meta, no. Llama is not exactly a go-to solution. Even Pavel Durov chose a Chinese large language model over Llama. But Anthropic? Wow, tied with Meta for dead last. Given Anthropic’s relationship with its Web indexing partners, I was surprised. I ask, “What are those partners sharing with Anthropic besides money?”

Net net: These Cloudflare data statements strike me as information floating context free in dataspace. It’s too bad Wired Magazine did not ask more questions about the Prince data assertions. But it is 2025, and content marketing, alleged and unverifiable facts, and a rah rah message are more important than providing context and answering more pointed questions. But I am a dinobaby. What do I know?

Stephen E Arnold, December 5, 2025

AI Bubble? What Bubble? Bubble?

December 5, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

I read “JP Morgan Report: AI Investment Surge Backed by Fundamentals, No Bubble in Sight.” The “report” angle is interesting. It implies unbiased, objective information compiled and synthesized by informed individuals. The content, however, strikes me as a bit of fancy dancing.

Here’s what strikes me as the main point:

A recent JP Morgan report finds the current rally in artificial intelligence (AI) related investments to be justified and sustainable, with no evidence of a bubble forming at this stage.

Feel better now? I don’t. The report strikes me as bank marketing with a big dose of cooing sounds. You know, cooing like a mother to her month-old baby. Does the mother make sense? Nope. The point is that warm cozy feeling that the cooing imparts. The mother knows she is doing what is necessary to reduce the likelihood of the baby making noises for sustained periods. The baby knows that mom’s heart is thudding along and the comfort speaks volumes.

Financial professionals in Manhattan enjoy the AI revolution. They know there is no bubble. I see bubbles (plural). Thanks, MidJourney. Good enough.

Sorry. The JP Morgan cooing is not working for me.

The write up says, quoting the estimable financial institution:

“The ingredients are certainly in place for a market bubble to form, but for now, at least, we believe the rally in AI-related investments is justified and sustainable. Capex is massive, and adoption is accelerating.”

What about this statement in the cited article?

JP Morgan contrasts the current AI investment environment to previous speculative cycles, noting the absence of cheap speculative capital or financial structures that artificially inflate prices. As AI investment continues, leverage may increase, but current AI spending is being driven by genuine earnings growth rather than assumptions of future returns.

After the write up states the “no bubble” argument three times, I think I understand.

Several observations:

- JP Morgan needed to make a statement that the AI data center thing, the depreciation issue, the power problem, and the potential for an innovation that derails the current LLM-type of processing are not big deals. These issues play no part in the non-bubble environment.

- The report is a rah rah for AI. Because there is no bubble, organizations should go forward and implement the current versions of smart software despite their proven “feature” of making up answers and failing to handle many routine human-performed tasks.

- The timing is designed to allow high net worth people a moment to reflect upon the wisdom of JP Morgan and consider moving money to the estimable financial institution for shepherding in what others think are effervescent moments.

My view: Consider the problems OpenAI has: [a] A need for something that knocks Googzilla off the sidewalk on Shoreline Drive and [b] more cash. Amazon, ever the consumer’s friend, is involved in making its own programmers use its smart software, not code cranked out by a non-Amazon service. Plus, Amazon is in the building mode, but it allegedly has government money to spend, a luxury some other firms are denied. Oracle is looking less like a world beater in databases and AI and more like a media-type outfit. Perplexity is probably perplexed because there are rumors that it may be struggling. Microsoft is facing some backlash because of [a] its push to make Copilot everyone’s friend and [b] the flawed updates to its vaunted Windows 11 software. Gee, why is File Explorer not working? Let’s ask Copilot. On the other hand, let’s not.

Net net: JP Morgan is marketing too hard, and I am not sure it is resonating with me as unbiased and completely objective. As sales collateral, the report is good. As evidence there is no bubble, nope.

Stephen E Arnold, December 5, 2025