Google Is Just Like Santa with Free Goodies: Get “High” Grades, of Course

April 18, 2025

No AI, just the dinobaby himself.

Google wants to be [a] viewed as the smartest quantumly supreme outfit in the world and [b] like Santa. The “smart” part is part of the company’s culture. The CLEVER approach worked in Web search. Now the company faces what might charitably be called headwinds. There are those pesky legal hassles in the US and some gaining strength in other countries. Also, the competitive world of smart software continues to bedevil the very company that “invented” the transformer. Google gave away some technology, and now everyone from the update champs in Redmond, Washington, to Sam AI-Man is blowing smoke about Google’s systems and methods.

What a state of affairs!

The fix is to give away access to Google’s most advanced smart software to college students. How Santa-like. “Google Is Gifting a Year of Gemini Advanced to Every College Student in the US” reports:

Google has announced today that it’s giving all US college students free access to Gemini Advanced, and not just for a month or two—the offer is good for a full year of service. With Gemini Advanced, you get access to the more capable Pro models, as well as unlimited use of the Deep Research tool based on it. Subscribers also get a smattering of other AI tools, like the Veo 2 video generator, NotebookLM, and Gemini Live. The offer is for the Google One AI Premium plan, so it includes more than premium AI models, like Gemini features in Google Drive and 2TB of Drive storage.

The approach is not new. LexisNexis was one of the first online services to make online legal research available to law school students. It worked. Lawyers are among the savviest of the work-fast, bill-more professionals. When did LexisNexis move this forward? I recall speaking to a LexisNexis professional named Don Wilson in 1980, and he was eager to tell me about this “new” approach.

I asked Mr. Wilson (who as I recall was a big wheel at LexisNexis then), “That’s a bit like drug dealers giving the curious a ‘taste’?”

He smiled and said, “Exactly.”

In the last 45 years, lawyers have embraced new technology with a passion. I am not going to go through the litany of search, analysis, summarization, and other tools that heralded the success of smart software for the legal folks. I recall the early days of LegalTech when the most common question was, “How?” My few conversations with the professionals laboring in the jungle of law, rules, and regulations have shifted to “which system” and “how much.”

The marketing professionals at Google have “invented” their own approach to hook college students on smart software. My instinct is that Google does not know much about Don Wilson’s big idea. (As an aside, I remember one of Mr. Wilson’s technical colleagues sometimes sported a silver jumpsuit which anticipated some of the fashion choices of Googlers by half a century.)

The write up says:

Google’s intention is to give students an entire school year of Gemini Advanced from now through finals next year. At the end of the term, you can bet Google will try to convert students to paying subscribers.

I am not sure I agree with this. If the program gets traction, Sam AI-Man and others will be standing by with special offers, deals, and free samples. The chemical structure of certain substances is similar to today’s many variants of smart software. Hey, whatever works, right? Whatever is free, right?

Several observations:

- Google’s originality is quantumly supreme

- Some people at the Google dress like Mr. Wilson’s technical wizard, jumpsuit and all

- The competition is going to do their own version of this “original” marketing idea; for example, didn’t Bing offer to pay people to use that outstanding Web search-and-retrieval system?

Net net: Hey, want a taste? It won’t hurt anything. Try it. You will be mentally sharper. You will be more informed. You will have more time to watch YouTube. Trust the Google.

Stephen E Arnold, April 18, 2025

Google Gemini 2.5: A Somewhat Interesting Content Marketing Write Up

April 18, 2025

Just a still alive dinobaby. No smart software involved.

How about this headline: “Google’s Gemini 2.5 Pro Is the Smartest Model You’re Not Using – and 4 Reasons It Matters for Enterprise AI”?

OpenAI scroogled the Google again. First, it was the January 2023 starting gun for AI hype. Now it was the release of a Japanese cartoon style for ChatGPT. Who knew that Japanese cartoons could have blasted the Google Gemini 2.5 Pro launch more effectively than a detonation of a failed SpaceX rocket?

The write up pants:

Gemini 2.5 Pro marks a significant leap forward for Google in the foundational model race – not just in benchmarks, but in usability. Based on early experiments, benchmark data, and hands-on developer reactions, it’s a model worth serious attention from enterprise technical decision-makers, particularly those who’ve historically defaulted to OpenAI or Claude for production-grade reasoning.

Yeah, whatever.

Announcements about Google AI are about as satisfying as pizza with glued-on cheese or Apple’s AI fantasy PR about “intelligence.”

But I like this statement:

Bonus: It’s Just Useful

The headline and this “just useful” make it clear none of Google’s previous AI efforts are winning the social media buzz game. Plus, the author points out that billions of Google dollars have not made the smart software speedy. And if you want to have smart software write that history paper about Germany after WW 2, stick with other models which feature “conversational smoothness.”

Quite an advertisement. A headline that says, “No one is using this” and a write up that says, “It is sluggish and writes in a way that will get a student flagged for cheating.”

Stick to ads maybe?

And what about “why it matters for enterprise AI”? Yeah, nice omission.

Stephen E Arnold, April 18, 2025

YouTube Click Count Floors Creators

April 18, 2025

Content creators are not thrilled about a change in how YouTube counts views for short-form videos. The Google-owned site now tallies a view any time the short starts, regardless of how long it plays before the user scrolls on past. Digiday reports, “YouTube Shorts View Count Update Wins Over Brands—But Creators Aren’t Sold.” Though view counts have spiked since the change, that number has nothing to do with creators’ compensation. Any bragging rights from high view counts will surely be negated as word spreads on how their calculation changed. Besides, say seasoned creators, there could be a real downside for newbies. Reporter Ivy Liu writes:

“Other creators said that they were worried the change could encourage YouTubers to focus on the inflated view metric displayed beneath Shorts, rather than the engaged view metric that contributes more meaningfully to creators’ income. For example, the creator BnG Refining — who goes by the name ‘Scrooge’ to his audience and asked not to be quoted by his real name — said that he was afraid less experienced creators might ‘flood the platform with content that they think is wanted, and not until hours, days, weeks later realizing that those were only fake views.’”

We are sure Google does not mind, though. Creators were not the real audience for the change. We learn:

“Brands and marketers are far more welcoming of the update, saying it brings order to the chaos of influencer marketing. Now, YouTube Shorts, TikTok videos and Instagram Reels all measure their views in the same way, making it easier for marketers to compare creators’ and videos’ performance across platforms. ‘It makes it easier, if you’re a brand, to say, “here’s how performance is across the board,” vs. looking at impressions and then trying to judge an impression as a view,’ said Krishna Subramanian, CEO of the influencer marketing company Captiv8.”

Of course. Because it is all about making it easier for brands to calculate their ROI. Creators’ perspectives, information, and artistic expression are secondary. As usual, creators are at the mercy of Google. Google likes everyone to be at its mercy. No meaningful regulation is the best regulation. Self regulation works wonders in the financial services sector too.

Cynthia Murrell, April 18, 2025

Why Is Meta Experimenting With AI To Write Comments?

April 18, 2025

Who knows why Meta does anything original? Amazon uses AI to write snapshots of book series. Therefore, Meta is using AI to write comments. We were not surprised to read “Meta Is Experimenting With AI-Generated Comments, For Some Reason.”

Meta is using AI to write Instagram comments. It sounds like a very stupid idea, but Meta is doing it. Some Instagram accounts can see a new icon to the left of the text field after choosing to leave a comment. The icon is a pencil with a star. When the icon is tapped, a new Meta AI menu pops up, and offers a selection of comment choices. These comments are presumed to be based off whatever content the comment corresponds to in the post.

It doesn’t take much effort to write a simple Instagram comment, and offloading the task appears to take more effort than completing the task yourself. Plus, Instagram is already plagued with chatbot comments. Does it need more? Nope.

Here’s what the author Jake Peterson tells his readers:

“Writing comments isn’t hard, and yet, someone at Meta thought there was a usefulness—a market—for AI-generated comments. They probably want more training data for their AI machine, which tracks, considering companies are running out of internet for models to learn from. But that doesn’t mean we should be okay with outsourcing all human tasks to AI.”

Mr. Peterson suggests that what bugs him the most is users happily allowing hallucinating software to perform cognitive tasks and make decisions for people like me. Right on, Mr. Peterson.

Whitney Grace, April 18, 2025

Google: Keep a Stiff Upper Lip and Bring Fiat Currency Other Than Dollars

April 17, 2025

No AI, just the dinobaby himself.

Poor Googzilla. After decades of stomping through virtual barriers, those lovers of tea, fried fish, and cricket have had enough. I think some UK government officials have grown tired of, as Monty Python said:

“..what I object to is you automatically treat me as an inferior..” “Well, I am KING.”

“Google Faces £5bn UK Lawsuit” reports:

The lawsuit alleges that the tech giant has abused its dominant market position to prevent both existing and potential competitors from entering the general search and search advertising markets, thereby allowing Google to impose supra-competitive advertising prices. The lawsuit seeks compensation for thousands of UK advertisers impacted by the company’s actions.

Will Googzilla trample this pesky lawsuit the way cinematic cities fell to its grandfather, Godzilla?

Key allegations include Google’s contracts with smartphone manufacturers and network operators that mandate the pre-installation of Google Search and the Chrome browser on Android devices. The suit also highlights Google’s agreement with Apple, under which it pays to remain the default search engine on iPhones. Plaintiffs argue that these practices have made Google the only practical platform for online search advertising.

I know little about the UK, but I did work for an outfit on Clarendon Terrace, adjacent to Buckingham Palace, for a couple of years. I figured out that the US and UK government officials were generally cooperative, but there were some interesting differences.

Obviously there is the Monty Python canon. Another point of differentiation is a tendency to keep a stiff upper lip and then bang!

The wonderful Google and its quantumly supreme approach to business may be approaching one of those bang moments. Will Google’s solicitors prevail?

I am not very good at fancy probability and nifty gradient descent calculations. I would suggest that Googzilla bring a check book or a valid cargo container filled with an acceptable fiat currency. Pounds, euros, or Swiss francs are probably acceptable at this particular point in business history.

Oh, that £5bn works out to about 5.4 billion Swiss francs.

Stephen E Arnold, April 17, 2025

Trust: Zuck, Meta, and Llama 4

April 17, 2025

Sorry, no AI used to create this item.

CNET published a very nice article that says to me: “Hey, we don’t trust you.” Navigate to “Meta Llama 4 Benchmarking Confusion: How Good Are the New AI Models?” The write up is like a wimpy version of the old PC Perspective podcast with Ryan Shrout. Before the embrace of Intel’s intellectual blanket, the podcast would raise questions about video card benchmarks. Most of the questions addressed: “Is this video card that fast?” In some cases, yes, the video card benchmarks were close to the real world. In other cases, video card manufacturers did what the butcher on Knoxville Avenue did in 1951. Mr. Wilson put his thumb on the scale. My grandmother closely watched friendly Mr. Wilson, who drove a new Buick in a very, very modest neighborhood. He did not smile as broadly when my grandmother and I would enter the store for a chicken.

Would someone put an AI benchmarking professional to this type of test? Of course not. But the idea has a certain charm. Plus, if the person dies, he was fooling. If the person survives, that individual is definitely a witch. This was a winning method for some enlightened leaders at one time.

The CNET story says about the Zuck’s most recent non-virtual reality investment:

Meta’s Llama 4 models Maverick and Scout are out now, but they might not be the best models on the market.

That’s a good way to say, “Liar, liar, pants on fire.”

The article adds:

the model that Meta actually submitted to the LMArena tests is not the model that is available for people to use now. The model submitted for testing is called “llama-4-maverick-03-26-experimental.” In a footnote on a chart on Llama’s website (not the announcement), in tiny font in the final bullet point, Meta clarifies that the model submitted to LMArena was “optimized for conversationality.”

Isn’t this a GenZ way to say, “You put your thumb on the scale, Mr. Wilson”?

Let’s review why one should think about the desire to make something better than it is:

- Meta’s decision is just marketing. Think about the self driving Teslas. Consequences? Not for fibbing.

- The Meta engineers have to deliver good news. Who wants to tell the Zuck that the Llama innovations are like making the VR thing a big winner? Answer: No one who wants to get a bonus and curry favor.

- Meta does not have the ability to distinguish good from bad. The model swap is what Meta is going to do anyway. So why not just use it? No big deal. Is this a moral and ethical dead zone?

What’s interesting is that from my point of view, Meta and the Zuck have a standard operating procedure. I am not sure that aligns with what some people expect. But as long as the revenue flows and meaningful regulation of social media remains a windmill for today’s Don Quixotes, Meta is the best — until another AI leader puts out a quantumly supreme news release.

Stephen E Arnold, April 17, 2025

Google AI Search: A Wrench in SEO Methods

April 17, 2025

Does AI finally spell the end of SEO? Or will it supercharge the practice? Pymnts declares, “Google’s AI Search Switch Leaves Indie Websites Unmoored.” The brief write-up states:

“Google’s AI-generated search answers have reportedly not been good for independent websites. Those answers, along with Google’s alterations to its search algorithm in support of them, have caused traffic to those websites to plunge, Bloomberg News reported Monday (April 7), citing interviews with 25 publishers and people working with them. The changes, Bloomberg said, threaten a ‘delicate symbiotic relationship’ between businesses and Google: they generate good content, and the tech giant sends them traffic. According to the report, many publishers said they either need to shut down or revamp their distribution strategy. Experts say this effort could ultimately reduce the quality of information Google can access for its search results and AI answers.”

To add insult to injury, we are reminded, AI Search’s answers are often inaccurate. SEO pros are scrambling to adapt to this new reality. We learn:

“‘It’s important for businesses to think of more than just pure on-page SEO optimization,’ Ben Poulton, founder of the SEO agency Intellar, told PYMNTS. ‘AI overviews tend to try and showcase the whole experience. That means additional content, more FAQs answered, customer feedback addressed on the page, details about walking distance and return policies for brands with a brick-and-mortar, all need to be readily available, as that will give you the best shot of being featured,’ Poulton said.”

So it sounds like one thing has not changed: Second to buying Google ads, posting thoroughly good content is the best way to surface in search results. Or, now, to donate knowledge for the algorithm to spit out. Possibly with hallucinations mixed in.

Cynthia Murrell, April 17, 2025

Google AI: Invention Is the PR Game

April 17, 2025

Google was so excited to tout its AI’s great achievement: In under 48 hours, it solved a medical problem that vexed human researchers for a decade. Great! Just one hitch. As Pivot to AI tells us, "Google Co-Scientist AI Cracks Superbug Problem in Two Days!—Because It Had Been Fed the Team’s Previous Paper with the Answer In It." With that detail, the feat seems much less impressive. In fact, two days seems downright sluggish. Writer David Gerard reports:

"The hype cycle for Google’s fabulous new AI Co-Scientist tool, based on the Gemini LLM, includes a BBC headline about how José Penadés’ team at Imperial College asked the tool about a problem he’d been working on for years — and it solved it in less than 48 hours! [BBC; Google] Penadés works on the evolution of drug-resistant bacteria. Co-Scientist suggested the bacteria might be hijacking fragments of DNA from bacteriophages. The team said that if they’d had this hypothesis at the start, it would have saved years of work. Sounds almost too good to be true! Because it is. It turns out Co-Scientist had been fed a 2023 paper by Penadés’ team that included a version of the hypothesis. The BBC coverage failed to mention this bit. [New Scientist, archive]"

It seems this type of Googley AI over-brag is a pattern. Gerard notes the company claims Co-Scientist identified new drugs for liver fibrosis, but those drugs had already been studied for this use. By humans. He also reminds us of this bit of truth-stretching from 2023:

"Google loudly publicized how DeepMind had synthesized 43 ‘new materials’ — but studies in 2024 showed that none of the materials was actually new, and that only 3 of 58 syntheses were even successful. [APS; ChemrXiv]"

So the next time Google crows about an AI achievement, we have to keep in mind that AI often is a synonym for PR.

Cynthia Murrell, April 17, 2025

The French Are Going After Enablers: Other Countries May Follow

April 16, 2025

Another post by the dinobaby. Judging by the number of machine-generated images of young female “entities” I receive, this 80-year-old must be quite fetching to some scammers with AI. Who knew?

Energized by the French judiciary’s ability to reason with Pavel Durov, the Paris Judicial Tribunal is going after what I call “enablers.” The term applies to the legitimate companies which make their online services available to customers. With the popularity of self-managed virtual machines, the online services firms receive an online order, collect a credit card, validate it, and let the remote customer set up and manage a computing resource.

Hey, the approach is popular and does not require expensive online service technical staff to do the handholding. Just collect the money and move forward. I am not sure the Paris Judicial Tribunal is interested in virtual anything. According to “French Court Orders Cloudflare to ‘Dynamically’ Block MotoGP Streaming Piracy”:

In the seemingly endless game of online piracy whack-a-mole, a French court has ordered Cloudflare to block several sites illegally streaming MotoGP. The ruling is an escalation of French blocking measures that began increasing their scope beyond traditional ISPs in the last few months of 2024. Obtained by MotoGP rightsholder Canal+, the order applies to all Cloudflare services, including DNS, and can be updated with ‘future’ domains.

The write up explains:

The reasoning behind the blocking request is similar to a previous blocking order, which also targeted OpenDNS and Google DNS. It is grounded in Article L. 333-10 of the French Sports Code, which empowers rightsholders to seek court orders against any outfit that can help to stop ‘serious and repeated’ sports piracy. This time, SECP’s demands are broader than DNS blocking alone. The rightsholder also requested blocking measures across Cloudflare’s other services, including its CDN and proxy services.

The approach taken by the French provides a framework which other countries can use to crack down on what seem to be legal online services. Many of these outfits expose one face to the public and regulators. Like the fictional Dr. Jekyll and Mr. Hyde, these online service firms make it possible for bad actors to perform a number of services to a special clientele; for example:

- Providing outlets for hate speech

- Hosting all or part of a Dark Web eCommerce site

- Allowing “roulette wheel” DNS changes for streaming sites distributing sports events

- Enabling services used by encrypted messaging companies whose clientele engages in illegal activity

- Hosting images of a controversial nature.

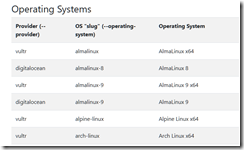

How can this be? Today’s technology makes it possible for an individual to do a search for a DMCA ignored advertisement for a service provider. Then one locates the provider’s Web site. Using a stolen credit card and the card owner’s identity, the bad actor signs up for a service from these providers:

This is a partial list of Dark Web hosting services compiled by SporeStack. Do you recognize the vendors Digital Ocean or Vultr? I recognized one.

These providers offer virtual machines and an API for interaction. With a bit of effort, the online providers have set up a vendor-customer experience that allows the online provider to say, “We don’t know what customer X is doing.” A cyber investigator has to poke around hunting for the “service” identified in the warrant in the hopes that the “service” will not be “gone.”

My view is that the French court may be ready to make life a bit less comfortable for some online service providers. The cited article asserts:

… the blockades may not stop at the 14 domain names mentioned in the original complaint. The ‘dynamic’ order allows SECP to request additional blockades from Cloudflare, if future pirate sites are flagged by French media regulator, ARCOM. Refusal to comply could see Cloudflare incur a €5,000 daily fine per site. “[Cloudflare is ordered to implement] all measures likely to prevent, until the date of the last race in the MotoGP season 2025, currently set for November 16, 2025, access to the sites identified above, as well as to sites not yet identified at the date of the present decision,” the order reads.

The US has a proposed site blocking bill as well.

But the French may continue to push forward using the “Pavel Durov action” as evidence that sitting on one’s hands and worrying about international repercussions is a waste of time. If companies like Amazon and Google operate in France, the French could begin tire kicking in the hopes of finding a bad wheel.

Mr. Durov believed he was not going to have a problem in France. He is a French citizen. He had the big time Kaminski firm represent him. He has lots of money. He has 114 children. What could go wrong? For starters, the French experience convinced him to begin cooperating with law enforcement requests.

Now France is getting some first hand experience with the enablers. Those who dismiss France as a land with too many different types of cheese may want to spend a few moments reading about French methods. Only one nation has some special French judicial savoir faire.

Stephen E Arnold, April 16, 2025

AI Impacts Jobs: But Just 40 Percent of Them

April 16, 2025

AI enthusiasts would have us believe workers have nothing to fear from the technology. In fact, they gush, AI will only make our jobs easier by taking over repetitive tasks and allowing time for our creative juices to flow. It is a nice vision. Far-fetched, but nice. Euronews reports, “AI Could Impact 40 Percent of Jobs Worldwide in the Next Decade, UN Agency Warns.” Writer Anna Desmarais cites a recent report as she tells us:

“Artificial intelligence (AI) may impact 40 per cent of jobs worldwide, which could mean overall productivity growth but many could lose their jobs, a new report from the United Nations Department of Trade and Development (UNCTAD) has found. The report … says that AI could impact jobs in four main ways: either by replacing or complementing human work, deepening automation, and possibly creating new jobs, such as in AI research or development.”

So it sounds like we could possibly reach a sort of net-zero on jobs. However, it will take deliberate action to get there. And we are not currently pointed in the right direction:

“A handful of companies that control the world’s advancement in AI ‘often favour capital over labour,’ the report continues, which means there is a risk that AI ‘reduces the competitive advantage’ of low-cost labour from developing countries. Rebeca Grynspan, UNCTAD’s Secretary-General, said in a statement that there needs to be stronger international cooperation to shift the focus away ‘from technology to people’.”

Oh, is that all? Easy peasy. The post notes it is not just information workers under threat—when combined with other systems, AI can also perform physical production jobs. Desmarais concludes:

“The impact that AI is going to have on the labour force depends on how automation, augmentation, and new positions interact. The UNCTAD said developing countries need to invest in reliable internet connections, making high-quality data sets available to train AI systems and building education systems that give them necessary digital skills, the report added. To do this, UNCTAD recommends building a shared global facility that would share AI tools and computing power equitably between nations.”

Will big tech and agencies around the world pull together to make it happen?

Cynthia Murrell, April 16, 2025