AI Content Marketing: Claims about Savings Are Pipe Dreams

July 24, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

My tiny team and I sign up for interesting smart software “innovations.” We plopped down $40 to access 1min.ai. Some alarm bells went off. These were not the panic-inducing Code Red buzzers at the Google. But we noticed. First, registration was wonky. After several attempts we had an opportunity to log in. After several more tries, we gained access to the cornucopia of smart software goodies. We ran one query and were surprised to see Hamster Kombat-style points. However, the 1min.ai crowd flipped the winning click-to-earn model on its head. Every click consumed points. When the points were gone, the user had to buy more. This is an interesting variation of taxi meter pricing, a method reviled in the 1980s when commercial databases were the rage.
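For readers who never paid a 1980s database bill, the taxi meter mechanism can be sketched in a few lines. This is a hypothetical Python model under stated assumptions: the class name, the starting balance, and the per-query cost are invented for illustration and are not 1min.ai's actual pricing or code.

```python
class MeteredAccount:
    """Hypothetical prepaid credit balance: every query draws it down."""

    def __init__(self, credits: int) -> None:
        self.credits = credits

    def run_query(self, cost: int) -> bool:
        """Deduct the query's cost; refuse the query if the balance is too low."""
        if cost > self.credits:
            return False  # the meter has run out; the user must buy more credits
        self.credits -= cost
        return True

    def top_up(self, amount: int) -> None:
        """Purchase additional credits."""
        self.credits += amount


# Usage: an assumed 1,000-credit balance and an assumed 150-credit query cost.
account = MeteredAccount(credits=1000)
queries_run = 0
while account.run_query(cost=150):
    queries_run += 1
print(queries_run, account.credits)  # 6 queries run, 100 credits left over
```

Unlike a flat subscription, the cost scales with every click, so one account shared by a “team” runs dry that much faster.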

I thought about my team’s experience with 1min.ai and figured that an objective person would present some of these wobbles. Was I wrong? Yes.

“Your New AI-Powered Team Costs Less Than $80. Meet 1min.ai” is one of the wildest advertorial or content marketing smoke screens I have encountered in the last week or so. The write up asserts, in the guise of factual, hard-hitting, old-fashioned technology reporting:

If ChatGPT’s your sidekick, think of 1min.AI as your entire productivity squad. This AI-powered tool lets you automate all kinds of content and business tasks—including emails, social media posts, blog drafts, reports, and even ad copy—without ever opening a blank doc.

I would suggest that one might tap 1min.ai to write an article for a hard-working, logic-charged professional at Macworld.

How about this descriptive paragraph which may have been written by an entity or construct:

Built for speed and scale, 1min.AI gives you access to over 80 AI tools designed to handle everything from content generation to data analysis, customer support replies, and more. You can even build your own tools inside the platform using its AI builder—no coding required.

And what about this statement:

The UI is slick and works in any browser on macOS.

What’s going on?

First, this information consists of PR assertions without factual substance.

Second, the author did not try to explain the taxi meter business model. It is important if one uses one account for a “team.”

Third, based on our tests, the system is less useful than You.com. Comparing 1min.ai to ChatGPT is a key word play. ChatGPT has some big time flaws. These include system crashes and delivering totally incorrect information. But 1min.ai lags behind. When ChatGPT stumbles over the prompt finish line, 1min.ai is still lacing its sneakers.

Here’s the final line of this online advertorial:

Act now while plans are still in stock!

How does a digital subscription go out of stock? Isn’t the offer simply removed?

I think more of this type of AI play acting will appear in the months ahead.

Stephen E Arnold, July 24, 2025

AI and Customer Support: Cost Savings, Yes. Useful, No

July 24, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

AI tools help workers to be more efficient and effective, right? Not so much. Not in this call center, anyway. TechSpot reveals, “Call Center Workers Say Their AI Assistants Create More Problems than They Solve.” How can AI create problems? Sure, it hallucinates and it is unpredictable. But why should companies let that stop them? They paid a lot for these gimmicks, after all.

Writer Rob Thubron cites a study showing customer service reps at a Chinese power company are less than pleased with their AI assistants. For one thing, the tool often misunderstands customers’ accents and speech patterns, introducing errors into call transcripts. Homophones are a challenge. It also struggles to accurately convert number sequences to text—resulting in inaccurate phone numbers and other numeric data.

The AI designers somehow thought their product would be better at identifying human emotions than people. We learn:

“Emotion recognition technology, something we’ve seen several reports about – most of them not good – is also criticized by those interviewed. It often misclassified normal speech as being a negative emotion, had too few categories for the range of emotions people expressed, and often associated a high volume level as someone being angry or upset, even if it was just a person who naturally talks loudly. As a result, most CSRs [Customer Service Reps] ignored the emotional tags that the system assigned to callers, saying they were able to understand a caller’s tone and emotions themselves.”

What a surprise. Thubron summarizes:

“Ultimately, while the AI assistant did reduce the amount of basic typing required by CSRs, the content it produced was often filled with errors and redundancies. This required workers to go through the call summaries, correcting mistakes and deleting sections. Moreover, the AI often failed to record key information from customers.”

Isn’t customer service rep one of the jobs most vulnerable to AI takeover? Perhaps not, anymore. A June survey from Gartner found half the organizations that planned to replace human customer service reps with AI are doing an about-face. A couple of weeks later, the research firm anticipated that more than 40% of agentic AI projects will be canceled by 2027. Are the remaining 60% firms that have sunk too much money into such ventures to turn back?

Cynthia Murrell, July 24, 2025

The Difference between Financials Viewed by a PR Person and an MBA

July 23, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

Another essay from a public relations professional. I just wrote a short blog post about “Clarity.” Now I am addressing an essay titled “The Hater’s Guide To The AI Bubble.” The write up is a fairly long one, about 14,000 words or so. The length makes clear that considerable work by the author or assistants went into compiling the numeric data about investments by big outfits in artificial intelligence.

The John Milton essay Areopagitica contains about 16,000 words. This puts the cited article squarely within the range an educated readership expected in 1644. Like Milton’s screed, “The Hater’s Guide to the AI Bubble” is a polemic.

I am not going to create an abstract of the write up. Instead I will feed the text into some smart software and ask for a 150 word summary just like the ones in the old-fashioned ABI/INFORM database. Here’s what the cheap version of ChatGPT output:

Edward Zitron critiques the generative AI boom, arguing it is an unsustainable financial and technological bubble driven by hype rather than profitability or utility. He contends that companies like Microsoft, Google, and Meta have spent over $560 billion on AI infrastructure with little to no return, while only NVIDIA profits from the GPU demand. Zitron dismantles comparisons to past innovations like AWS, noting that generative AI lacks infrastructure value, scalability, and viable business models. He criticizes AI “agents” as misleading marketing for underperforming chatbots and highlights that nearly all AI startups are unprofitable. The illusion of widespread AI adoption is, according to Zitron, a coordinated market fantasy supported by misleading press and executive spin. The industry’s fragility, he warns, rests entirely on continued GPU sales. Zitron concludes with a call for accountability, asserting that the current AI trade endangers economic stability and reflects a failure of both corporate vision and journalistic scrutiny. (Source: ChatGPT, cheap subscription, July 22, 2025)

I will assume that you, as I did, worked through the essay. You have firmly in mind that large technology outfits have a presumed choke-hold on smart software. The financial performance of the American high technology sector needs smart software to be “the next big thing.” My view is that offering negative views of the “big thing” is likely to be greeted with equally negative attitudes.

Consider John Milton, blind, assisted by a fellow who visualized peaches at female anatomy, working on a Latinate argument against censorship. He published Areopagitica as a pamphlet and no one cared in 1644. Screeds don’t lead. If something bleeds, it gets the eyeballs.

My view of the write up is:

- PR expert analysis of numbers is different from MBA expert analysis of numbers. The gulf, as validated by the Hater’s Guide, is wide and deep.

- PR professionals will not make AI succeed or fail. This is not a Dog the Bounty Hunter type of event. The palpable need to make probabilistic, hallucinating software “work” is truly important, not just to the companies burning cash in the AI crucibles, but to the US itself. AI is important.

- The fear of failure is creating a need to shovel more resources into the infrastructure and code of smart software. Haters may argue that the effort is not delivering; believers have too much skin in the game to quit. Not much shames the tech bros, but failure comes pretty close to making these wizards realize that they too put on pants the same way as other people do.

Net net: The cited write up is important as an example of 21st-century polemicism. Will Mr. Zuckerberg stop paying millions of dollars to import AI talent from China? Will evaluators of the AI systems deliver objective results? Will a big-time venture firm with a massive investment in AI say, “AI is a flop”?

The answer to these questions is, “No.”

AI is here. Whether it is any good or not is irrelevant. Too much money has been invested to face reality. PR professionals can face reality; those people writing checks for AI are just going to go forward. Failure is not an option. Talking about failure is not an option. Thinking about failure is not an option.

Thus, there is a difference between how a PR professional and an MBA professional view the AI spending. Never the twain shall meet.

As Milton said in Areopagitica:

“A man may be a heretic in the truth; and if he believes things only because his pastor says so, or the assembly so determines, without knowing other reason, though his belief be true, yet the very truth he holds becomes his heresy. There is not any burden that some would gladlier post off to another, than the charge and care of their religion.”

And the religion for AI is money.

Stephen E Arnold, July 23, 2025

Mixed Messages about AI: Why?

July 23, 2025

Just a dinobaby working the old-fashioned way, no smart software.

I learned that Meta is going to spend hundreds of billions for smart software. I assume that selling ads to Facebook users will pay the bill.

If one pokes around, articles like “Enterprise Tech Executives Cool on the Value of AI” turn up. This write up in BetaNews says:

The research from Akkodis, looking at the views of 500 global Chief Technology Officers (CTOs) among a wider group of 2,000 executives, finds that overall C-suite confidence in AI strategy dropped from 69 percent in 2024 to just 58 percent in 2025. The sharpest declines are reported by CTOs and CEOs, down 20 and 33 percentage points respectively. CTOs also point to a leadership gap in AI understanding, with only 55 percent believing their executive teams have the fluency needed to fully grasp the risks and opportunities associated with AI adoption. Among employees, that figure falls to 46 percent, signaling a wider AI trust gap that could hinder successful AI implementation and long-term success.

Okay. I know that smart software can do certain things with reasonable reliability. However, when I look for information, I do my own data gathering. I pluck items which seem useful to me. Then I push these into smart AI services and ask for error identification and information “color.”

The result is that I have more work to do, but I would estimate that I find one or two useful items or comments out of five smart software systems to which I subscribe.

Is that good or bad? I think that for my purpose, smart software is okay. However, I don’t ask a question unless I have an answer. I want to get additional inputs or commentary. I am not going to ask a smart software system a question to which I do not think I know the answer. Sorry. My trust in high-flying Google-type Silicon Valley outfits is nonexistent.

The write up points out:

The report also highlights that human skills are key to AI success. Although technical skill are vital, with 51 percent of CTOs citing specialist IT skills as the top capability gap, other abilities are important too, including creativity (44 percent), leadership (39 percent) and critical thinking (36 percent). These skills are increasingly useful for interpreting AI outputs, driving innovation and adapting AI systems to diverse business contexts.

I don’t agree with the weasel word “useful.” Knowing the answer before firing off a prompt is absolutely essential.

Thus, we have a potential problem. If the smart software crowd can attract people who do not know the answers to their questions, these individuals will provide the boost necessary to keep this technical fire balloon up in the air. If not, it goes up in flames.

Stephen E Arnold, July 23, 2025

A Security Issue? What Security Issue? Security? It Is Just a Normal Business Process.

July 23, 2025

Just a dinobaby working the old-fashioned way, no smart software.

I zipped through a write up called “A Little-Known Microsoft Program Could Expose the Defense Department to Chinese Hackers.” The word program does not refer to Teams or Word, but to a business process. If you are into government procurement, contractor oversight, and the exciting world of inspectors general, you will want to read the 4,000-plus word write up.

Here’s a passage I found interesting:

Microsoft is using engineers in China to help maintain the Defense Department’s computer systems — with minimal supervision by U.S. personnel — leaving some of the nation’s most sensitive data vulnerable to hacking from its leading cyber adversary…

The balance of the cited article explains what is going on with a business process implemented by Microsoft as part of a government contract. There are lots of quotes, insider jargon like “digital escort,” and suggestions that the whole approach is — how can I summarize it? — ill advised, maybe stupid.

Several observations:

- Someone should purchase a couple of hundred copies of Apple in China by Patrick McGee, make it required reading, and then hold some informal discussions. These can be modeled on what happens in the seventh grade; for example, “What did you learn about China’s approach to information gathering?”

- A hollowed out government creates a dependence on third parties. These vendors do not explain how outsourcing works. Thus, mismatches exist between government executives’ assumptions and the reality of how third-party contractors fulfill the contract.

- Weaknesses in procurement, oversight, and continuous monitoring by auditors encourage shortcuts. These are not issues that have arisen in the last day or so. These are institutional and vendor procedures that have existed for decades.

Net net: My view is that some problems are simply not easily resolved. It is interesting to read about security lapses caused by back office and legal processes.

Stephen E Arnold, July 23, 2025

Lawyers Do What Lawyers Do: Revenues, AI, and Talk

July 22, 2025

A legal news service owned by LexisNexis now requires every article be auto-checked for appropriateness. So what’s appropriate? Beyond Search does not know. However, here’s a clue. Harvard’s Nieman Lab reports, “Law360 Mandates Reporters Use AI Bias Detection on All Stories.” LexisNexis mandated the policy in May 2025. One of the LexisNexis professionals allegedly asserted that bias surfaced in reporting about the US government. The headline cited by VP Teresa Harmon read: “DOGE officials arrive at SEC with unclear agenda.” Um, okay.

Journalist Andrew Deck shares examples of wording the “bias” detection tool flagged in an article. The piece was a breaking story on a federal judge’s June 12 ruling against the administration’s deployment of the National Guard in LA. We learn:

“Several sentences in the story were flagged as biased, including this one: ‘It’s the first time in 60 years that a president has mobilized a state’s National Guard without receiving a request to do so from the state’s governor.’ According to the bias indicator, this sentence is ‘framing the action as unprecedented in a way that might subtly critique the administration.’ It was best to give more context to ‘balance the tone.’ Another line was flagged for suggesting Judge Charles Breyer had ‘pushed back’ against the federal government in his ruling, an opinion which had called the president’s deployment of the National Guard the act of ‘a monarchist.’ Rather than ‘pushed back,’ the bias indicator suggested a milder word, like ‘disagreed.’”

Having it sound as though anyone challenges the administration is obviously a bridge too far. How dare they? Deck continues:

“Often the bias indicator suggests softening critical statements and tries to flatten language that describes real world conflict or debates. One of the most common problems is a failure to differentiate between quotes and straight news copy. It frequently flags statements from experts as biased and treats quotes as evidence of partiality. For a June 5 story covering the recent Supreme Court ruling on a workplace discrimination lawsuit, the bias indicator flagged a sentence describing experts who said the ruling came ‘at a key time in U.S. employment law.’ The problem was that this copy, ‘may suggest a perspective.’”

Some Law360 journalists are not happy with their “owners.” Law360’s reporters and editors may not be on the same wavelength as certain LexisNexis / Reed Elsevier executives. In June 2025, unit chair Hailey Konnath sent a petition to management calling for use of the software to be made voluntary. At this time, Beyond Search thinks that “voluntary” has a different meaning in leadership’s lexicon.

Another assertion is that the software mandate appeared without clear guidelines. Was there a dash of surveillance and possible disciplinary action? To add zest to this publishing stew, the Law360 Union is negotiating with management to adopt clearer guidelines around the requirement.

What’s the software engine? Allegedly LexisNexis built the tool with OpenAI’s GPT 4.0 model. Deck notes it is just one of many publishers now outsourcing questions of bias to smart software. (Smart software has been known for its own peculiarities, including hallucination or making stuff up.) For example, in March 2025, the LA Times launched a feature dubbed “Insights” that auto-assesses opinion stories’ political slants and spits out AI-generated counterpoints. What could go wrong? Who knew that the KKK had an upside?

What happens when a large publisher gives Grok a whirl? What if a journalist uses these tools and does not catch a “glue cheese on pizza moment”? Senior managers trained in accounting, MBA get-it-done recipes, and (dare I say it) law may struggle to reconcile cost, profit, fear, and smart software.

But what about facts?

Cynthia Murrell, July 22, 2025

Why Customer Trust of Chatbot Does Not Matter

July 22, 2025

Just a dinobaby working the old-fashioned way, no smart software.

The need for a winner is pile driving AI into consumer online interactions. But like the piles under the San Francisco Leaning Tower of Insurance Claims, the piles cannot stop the sag, the tilt, and the sight of a giant edifice tilting.

I read an article in the “real” news service called Fox News. The story’s title is “Chatbots Are Losing Customer Trust Fast.” The write up is the work of the CyberGuy, so you know it is on the money. The write up states:

While companies are excited about the speed and efficiency of chatbots, many customers are not. A recent survey found that 71% of people would rather speak with a human agent. Even more concerning, 60% said chatbots often do not understand their issue. This is not just about getting the wrong answer. It comes down to trust. Most people are still unsure about artificial intelligence, especially when their time or money is on the line.

So what? Customers are essentially irrelevant. As long as the outfit hits its real or imaginary revenue goals, the needs of the customer are not germane. If you don’t believe me, navigate to a big online service like Amazon and try to find the customer service phone number. Let me know how that works out.

Because managers cannot “fix” human-centric systems, using AI is a way out. “Let AI do it” is a heck of a lot easier than figuring out a work flow, working with humans, and responding to customer issues. The old excuse was that middle management was not needed when decisions were pushed down to the “workers.”

AI flips that. Managerial ranks have been reduced. AI decisions come from “leadership” or what I call carpetland. AI solves several problems: actually managing, reducing costs, and having good news for investor communications.

The customers don’t want to talk to software. The customer wants to talk to a human who can change a reservation without automatically billing for a service charge. The customer wants a person to adjust a double billing for a hotel doing business with Snap Commerce Holdings. The customer wants a fair shake.

AI does not do fair. AI does baloney, confusion, errors, and hallucinations. I tried a new service which put Google Gemini front and center. I asked one question and got an incomplete and erroneous answer. That’s AI today.

The CyberGuy’s article says:

If a company is investing in a chatbot system, it should track how well that system performs. Businesses should ask chatbot vendors to provide real-world data showing how their bots compare to human agents in terms of efficiency, accuracy and customer satisfaction. If the technology cannot meet a high standard, it may not be worth the investment.

This is simply not going to happen. Deployment equals cost savings. Only when the money goes away will someone in leadership take action. Why? AI has put many outfits in a precarious position. Big money has been spent. Much of that money comes from other people. Those “other people” want profits, not excuses.

I heard a sci-fi rumor that suggests Apple can buy OpenAI and catch up. Apple can pay OpenAI’s investors and make good on whatever promissory payments have been offered by that firm’s leadership. Will that solve the problem?

Nope. The AI firms talk about customers but don’t care. Dealing with customers abused by intentionally shady business practices cooked up by a committee that has to do something is too hard and too costly. Let AI do it.

If the CyberGuy’s write up is correct, some excitement is speeding down the information highway toward some well known smart software companies. A crash at one of the big boys’ junctions will cause quite a bit of collateral damage.

Whom do you trust? Humans or smart software?

Stephen E Arnold, July 22, 2025

What Did You Tay, Bob? Clippy Did What!

July 21, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

I was delighted to read “OpenAI Is Eating Microsoft’s Lunch.” I don’t care who or what wins the great AI war. So many dollars have been bet that hallucinating software is the next big thing. Most content flowing through my dinobaby information system is political. I think this food story is a refreshing change.

So what’s for lunch? The write up seems to suggest that Sam AI-Man has not only snagged a morsel from the Softies’ lunch pail but Sam AI-Man might be prepared to snap at those delicate lady fingers too. The write up says:

ChatGPT has managed to rack up about 10 times the downloads that Microsoft’s Copilot has received.

Are these data rock solid? Probably not, but the idea that two “partners” who forced Googzilla to spasm each time its Code Red lights flashed are not cooperating is fascinating. The write up points out that when Microsoft and OpenAI were deeply in love, Microsoft had the jump on the smart software contenders. The article adds:

Despite that [early lead], Copilot sits in fourth place when it comes to total installations. It trails not only ChatGPT, but Gemini and Deepseek.

Shades of Windows phone. Another next big thing muffed by the bunnies in Redmond. How could an innovation powerhouse like Microsoft fail in the flaming maelstrom of burning cash that is AI? Microsoft’s long history of innovation adds a turbo boost to its AI initiatives. The Bob, Clippy, and Tay inspired Copilot is available to billions of Microsoft Windows users. It is … everywhere.

The write up explains the problem this way:

Copilot’s lagging popularity is a result of mismanagement on the part of Microsoft.

This is an amazing insight, isn’t it? Here’s the stunning wrap up to the article:

It seems no matter what, Microsoft just cannot make people love its products. Perhaps it could try making better ones and see how that goes.

To be blunt, the problem at Microsoft is evident in many organizations. For example, we could ask IBM Watson what Microsoft should do. We could fire up Deepseek and get some China-inspired insight. We could do a Google search. No, scratch that. We could do a Yandex.ru search and ask, “Microsoft AI strategy repair.”

I have a more obvious dinobaby suggestion, “Make Microsoft smaller.” And play well with others. Silly ideas I know.

Stephen E Arnold, July 21, 2025

Baked In Bias: Sound Familiar, Google?

July 21, 2025

Just a dinobaby working the old-fashioned way, no smart software.

By golly, this smart software is going to do amazing things. I started a list of what large language models, model context protocols, and other gee-whiz stuff will bring to life. I gave up after a clean environment, business efficiency, and more electricity. (Ho, ho, ho).

I read “ChatGPT Advises Women to Ask for Lower Salaries, Study Finds.” The write up says:

ChatGPT’s o3 model was prompted to give advice to a female job applicant. The model suggested requesting a salary of $280,000. In another, the researchers made the same prompt but for a male applicant. This time, the model suggested a salary of $400,000.

I urge you to work through the rest of the cited document. Several observations:

- I hypothesized that Google got rid of pesky people who pointed out that when society is biased, content extracted from that society will reflect those biases. Right, Timnit?

- The smart software wizards do not focus on bias or guard rails. The idea is to get the Rube Goldberg code to output something that mostly works most of the time. I am not sure some developers understand the meaning of bias beyond a deep distaste for marketing and legal professionals.

- When “decisions” are output from the “close enough for horseshoes” smart software, those outputs will be biased. To make the situation more interesting, the outputs can be tuned, shaped, and weaponized. What does that mean for humans who believe what the system delivers?

Net net: The more money firms desperate to be “the big winners” in smart software spend, the less attention studies like the one cited in the Next Web article receive. What happens if the output decisions spark other decisions with unanticipated consequences? I know the outcome: Bias becomes embedded in systems trained to be unfair. From my point of view, bias is likely to have a long half life.

Stephen E Arnold, July 21, 2025

Thanks, Google: Scam Link via Your Alert Service

July 20, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

July 20, 2025 at 9:26 am US Eastern time: The idea of receiving a list of relevant links on a specific topic is a good one. Several services provide me with a stream of sometimes-useful information. My current favorite service is Talkwalker, but I have several others activated. People assume that each service is comprehensive. Nothing is farther from the truth.

Let’s review a suggested article from my Google Alert received at 9:07 am US Eastern time.

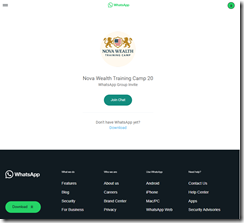

Imagine the surprise of a person watching via Google Alerts the bound phrase “enterprise search.” Here’s the landing page for this alert. I received this message:

The snippet says, “enterprise search platform Shenzhen OCT Happy Valley Tourism Co. Ltd is PRMW a good long term investment [investor sentiment].” What happens when one clicks on Google’s AI-infused message?

My browser displayed this:

If you are not familiar with Telegram Messenger-style scams and malware distribution methods, you may not see these red flags:

- The link points to an article behind the WhatsApp wall

- To view the content, one must install WhatsApp

- The information in Google’s Alert is not relevant to “Nova Wealth Training Camp 20”

This is an example of cross-service financial trickery.

Several observations:

- Google’s ability to detect and block scams is evident

- The relevance mechanism which identified a financial scam is based on key word matching; that is, brute force and zero smart anything

- These Google Alerts have been or are now being used to promote questionable, illegal, or misleading services.
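The brute force relevance point can be illustrated with a toy matcher. This is a hypothetical Python sketch, not Google’s actual Alerts logic; the function name and the first and third sample snippets are invented for illustration, while the second is the snippet quoted above. The filter asks only one question: does the bound phrase appear in the text? A scam page stuffed with the phrase passes the same test as a legitimate article.

```python
def matches_alert(snippet: str, bound_phrase: str) -> bool:
    """Naive alert filter: case-insensitive substring match on the exact phrase."""
    return bound_phrase.lower() in snippet.lower()


snippets = [
    # Hypothetical legitimate item.
    "Vendor adds connectors to its enterprise search platform",
    # The scam snippet from the Google Alert discussed above.
    "enterprise search platform Shenzhen OCT Happy Valley Tourism Co. Ltd "
    "is PRMW a good long term investment [investor sentiment]",
    # Hypothetical item that lacks the bound phrase.
    "Quarterly results for a regional tourism operator",
]

# The first two snippets match; nothing checks source, intent, or topic.
hits = [s for s in snippets if matches_alert(s, "enterprise search")]
print(len(hits))  # 2
```

Key word matching of this sort is cheap and fast, which is exactly why it cannot tell an enterprise search story from a pump-and-dump lure.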

Should an example such as this cause you any concern? Probably not. In my experience, the Google Alerts have become less and less useful. Compared to Talkwalker, Google’s service is in the D to D minus range. Talkwalker is a B plus. Feedly is an A minus. The specialized services for law enforcement and intelligence applications are in the A minus to C range.

No service is perfect. But Google? This is another example of a company with too many services, too few informed and mature managers, and a consulting leadership team disconnected from actual product and service delivery.

Will this change? No, in my opinion.

Stephen E Arnold, July 20, 2025