First, Virtual AI Compute and Now a Virtual Supercomputation Complex

December 19, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Do you remember the good old days at AT&T? No Judge Greene. No Baby Bells. Just Ma Bell. Devices were boxes or plastic gizmos. Western Electric paid people to throw handsets out of a multi-story building to make sure the stuff was tough. That was the old Ma Bell. Today one has virtual switches, virtual exchanges, and virtual systems. Software has replaced quite a bit of the hardware.

A few days ago, Pavel Durov rolled out his Cocoon. This is a virtual AI complex or VAIC. Skip that build out of data centers. Telegram is using software to provide an AI compute service to anyone with a mobile device. I learned today (December 6, 2025) that Stephen Wolfram has rolled out “instant supercompute.”

When those business plans don’t work out, the buggy whip boys decide to rent out their factory and machines. Too bad about those newfangled horseless carriages. Will the AI data center business work out? Stephen Wolfram and Pavel Durov seem to think that excess capacity is a business opportunity. Thanks, Venice.ai. Good enough.

A Mathematica user wants to run a computation at scale. According to “Instant Supercompute: Launching Wolfram Compute Services”:

Well, today we’ve released an extremely streamlined way to do that. Just wrap the scaled up computation in RemoteBatchSubmit and off it’ll go to our new Wolfram Compute Services system. Then—in a minute, an hour, a day, or whatever—it’ll let you know it’s finished, and you can get its results. For decades I’ve often needed to do big, crunchy calculations (usually for science). With large volumes of data, millions of cases, rampant computational irreducibility, etc. I probably have more compute lying around my house than most people—these days about 200 cores worth. But many nights I’ll leave all of that compute running, all night—and I still want much more. Well, as of today, there’s an easy solution—for everyone: just seamlessly send your computation off to Wolfram Compute Services to be done, at basically any scale.

And the payoff to those using Mathematica for big jobs:

One of the great strengths of Wolfram Compute Services is that it makes it easy to use large-scale parallelism. You want to run your computation in parallel on hundreds of cores? Well, just use Wolfram Compute Services!

One major point in the announcement is:

Wolfram Compute Services is going to be very useful to many people. But actually it’s just part of a much larger constellation of capabilities aimed at broadening the ways Wolfram Language can be used…. An important direction is the forthcoming Wolfram HPCKit—for organizations with their own large-scale compute facilities to set up their own back ends to RemoteBatchSubmit, etc. RemoteBatchSubmit is built in a very general way, that allows different “batch computation providers” to be plugged in.

Does this suggest that Supercompute is walking down the same innovation path as Pavel and Nikolai Durov? I see some similarities, but there are important differences. Telegram’s reputation is enhanced with some features of considerable value to a certain demographic. Wolfram Compute Services is closely associated with heavy duty math. Pavel Durov awaits trial in France on more than a dozen charges of untoward online activities. Stephen Wolfram collects awards and gives enthusiastic if often incomprehensible talks on esoteric subjects.

But the technology path is similar in my opinion. Both of these organizations want to use available compute resources; they are not too keen on buying GPUs, building data centers, and spending time in meetings about real estate.

The cost of running a job on the Supercompute system depends on a number of factors. A user buys “credits” and pays for a job with those. No specific pricing details are available to me at this time: 0800 US Eastern on December 6, 2025.

Net net: Two very intelligent people — Stephen Wolfram and Pavel Durov — seem to think that the folks with giant data centers will want to earn some money. Messrs. Wolfram and Durov are resellers of excess computing capacity. Will Amazon, Google, Microsoft, et al. be signing up if the AI demand does not meet the somewhat robust expectations of big AI tech companies?

Stephen E Arnold, December 19, 2025

Windows Strafed by Windows Fanboys: Incredible Flip

December 19, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

When the Windows folding phone came out, I remember hunting around for blog posts, podcasts, and videos about this interesting device. Following links, I bumbled onto the Windows Central Web site. The two fellows who seemed to be front and center had a podcast (a quite irregularly published podcast, I might add). I was amazed at the pro-folding-gizmo enthusiasm. One of the write ups was panting with excitement. I thought then and think now that figuring out how to fold a screen is a laboratory exercise, not something destined to be part of my mobile phone experience.

I forgot about Windows Central and the unflagging ability to find something wonderfully bigly about the Softies. Then I followed a link to this story: “Microsoft Has a Problem: Nobody Wants to Buy or Use Its Shoddy AI Products — As Google’s AI Growth Begins to Outpace Copilot Products.”

An athlete failed at his Dos Santos II exercise. The coach, a tough love type, offers the injured gymnast a path forward with Mistral AI. Thanks, Qwen, do you phone home?

The cited write up struck me as a technology aficionado pulling off what is called a Dos Santos II. (If you are not into gymnastics, this exercise “trick” involves starting backward with a half twist into a double front in the layout position.) Boom. Perfect 10. From folding phone to “shoddy AI products.”

If I were curious, I would dig into the reasons for this change in tune, instruments, and concert hall. My hunch is that a new manager replaced a person who was talking (informally, of course) to individuals who provided the information without identifying the source. Reuters, the trust outfit, does this on occasion as do other “real” journalists. I prefer to say, here are my observations or my hypotheses about Topic X. Others just do the “anonymous” and move forward in life.

Here are a couple of snips from the write up that I find notable. These are not quite at the “shoddy AI products” level, but I find them interesting.

Snippet 1:

If there’s one thing that typifies Microsoft under CEO Satya Nadella‘s tenure: it’s a general inability to connect with customers. Microsoft shut down its retail arm quietly over the past few years, closed up shop on mountains of consumer products, while drifting haphazardly from tech fad to tech fad.

I like the idea that Microsoft is not sure what it is doing. Furthermore, I don’t think Microsoft ever connected with its customers. Connections come from the Certified Partners, the media lap dogs fawning at Microsoft CEO antics, and brilliant statements about how many Russian programmers it takes to hack into a Windows product. (Hint: The answer is a couple if the Telegram posts I have read are semi accurate.)

Snippet 2:

With OpenAI’s business model under constant scrutiny and racking up genuinely dangerous levels of debt, it’s become a cascading problem for Microsoft to have tied up layer upon layer of its business in what might end up being something of a lame duck.

My interpretation of this comment is that Microsoft hitched its wagon to one of AI’s Cybertrucks, and the buggy isn’t able to pull the Softie’s one-horse shay. The notion of a “lame duck” is that Microsoft cannot easily extricate itself from the money, the effort, the staff, and the weird “swallow your AI medicine, you fool” approach the estimable company has adopted for Copilot.

Snippet 3:

Microsoft’s “ship it now fix it later” attitude risks giving its AI products an Internet Explorer-like reputation for poor quality, sacrificing the future to more patient, thoughtful companies who spend a little more time polishing first. Microsoft’s strategy for AI seems to revolve around offering cheaper, lower quality products at lower costs (Microsoft Teams, hi), over more expensive higher-quality options its competitors are offering. Whether or not that strategy will work for artificial intelligence, which is exorbitantly expensive to run, remains to be seen.

A less civilized editor would have dropped in the industry buzzword “crapware.” But we are stuck with “ship it now fix it later” or maybe just never. So far we have customer issues, the OpenAI technology as a lame duck, and now the lousy software criticism.

Okay, that’s enough.

The question is, “Why the Dos Santos II at this time?” I think citing the third party “Information” is a convenient technique in blog posts. Heck, Beyond Search uses this method almost exclusively except I position what I do as an abstract with critical commentary.

Let me hypothesize (no anonymous “source” is helping me out):

- Someone at Windows Central was annoyed by a Softie with power and is responding to this perceived injustice.

- The people at Windows Central woke up one day and heard a little voice say, “Your cheerleading is out of step with how others view Microsoft.” The folks at Windows Central listened and, thus, the Dos Santos II.

- Windows Central did what the author of the article states in the article; that is, using multiple AI services each day. The Windows Central professional realized that Copilot was not as helpful writing “real” news as some of the other services.

Which of these is closer to the pin? I have no idea. Today (December 12, 2025) I used Qwen, Anthropic, ChatGPT, and Gemini. I want to tell you that these four services did not provide accurate output.

Windows Central gets a 9.0 for its flooring Microsoft exercise.

Stephen E Arnold, December 19, 2025

Waymo and a Final Woof

December 19, 2025

We’re dog lovers. Canines are the best thing on this green and blue sphere. We were sickened when we read this article in The Register about the death of a bow-wow: “Waymo Chalks Up Another Four-Legged Casualty On San Francisco Streets.”

Waymo is a self-driving car company based in San Francisco. The company unfortunately confirmed that one of its self-driving cars ran over a small, unleashed dog. The vehicle had adults and children in it. The children were crying after hearing the dog’s suffering. The status of the dog is unknown. Waymo wants to locate the dog’s family to offer assistance and veterinary services.

Waymo cars are popular in San Francisco, but…

“Many locals report feeling uneasy about the fleet of white Jaguar I-Paces roaming the city’s roads, although the offering has proven popular with tourists, women seeking safer rides, and parents in need of a quick, convenient way to ferry their children to school. Waymo currently operates in the SF Bay Area, Los Angeles, and Phoenix, and some self-driving rides are available through Uber in Austin and Atlanta.”

Waymo cars also ran over a famous stray cat named Kit Kat, known as the “Mayor of 16th Street.” May these animals rest in peace. Does the Waymo software experience regret? Yeah.

Whitney Grace, December 19, 2025

Mistakes Are Biological. Do Not Worry. Be Happy

December 18, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I read a short summary of a longer paper written by a person named Paul Arnold. I hope this is not misinformation. I am not related to Paul. But this could be a mistake. This dinobaby makes many mistakes.

The article that caught my attention is titled “Misinformation Is an Inevitable Biological Reality Across nature, Researchers Argue.” The short item was edited by a human named Gaby Clark. The short essay was reviewed by Robert Edan. I think the idea is to make clear that nothing in the article is made up and it is not misinformation.

Okay, but… Let’s look at a couple of short statements from the write up about misinformation. (I don’t want to go “meta,” but the possibility exists that the short item is stuffed full of misinformation. What do you think?)

Here’s an image capturing a youngish teacher outputting misinformation to his students. Okay, Qwen. Good enough.

Here’s snippet one:

… there is nothing new about so-called “fake news…”

Okay, does this mean that software that predicts the next word and gets it wrong is part of this old, long-standing trajectory for biological creatures? For me, the idea that cobbled-together algorithms get a pass because “there is nothing new about so-called ‘fake news’” shifts the discussion about smart software. Instead of worrying that the smart software gets only about two thirds of questions right, we are told it is good enough.

A second snippet says:

Working with these [the models Paul Arnold and probably others developed] led the team to conclude that misinformation is a fundamental feature of all biological communication, not a bug, failure, or other pathology.

Introducing the notion of “pathology” adds a bit of context to misinformation. Is a human-assembled smart software system, trained on content that includes misinformation and processed by algorithms that may be biased in some way, just the way the world works? I am not sure I am ready to flash the green light for some of the AI outfits to output demonstrably wrong, distorted, weaponized, or non-verifiable information.

What puzzled me is that the article points to itself and to an article by Ling-Wei Kong et al., “A Brief Natural History of Misinformation,” in the Journal of the Royal Society Interface.

Here’s the link to the original article. The authors of the publication are, if the information on the Web instance of the article is accurate, Ling-Wei Kong, Lucas Gallart, Abigail G. Grassick, Jay W. Love, Amlan Nayak, and Andrew M. Hein. Seven people worked on the “original” article. The three people identified in the short version worked on that item. This adds up to 10 people. Apparently the group believes that misinformation is a part of the biological being. Therefore, there is no cause to worry. In fact, there are mechanisms to deal with misinformation. Obviously a duck quack that sends a couple of hundred mallards aloft can protect the flock. A minimum of one duck needs to check out the threat only to find nothing is visible. That duck heads back to the pond. Maybe others follow? Maybe the duck ends up alone in the pond. The ducks take the viewpoint, “Better safe than sorry.”

But when a system or a mobile device outputs incorrect or weaponized information to a user, there may not be a flock around. If there is a group of people, none of them may be able to identify the incorrect or weaponized information. Thus, the biological propensity to be wrong bumps into an output which may be shaped to cause a particular effect or to alter a human’s way of thinking.

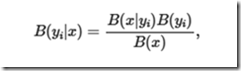

Most people will not sit down and take a close look at this evidence of scientific rigor:

and then follow the logic that leads to:

I am pretty old, but it looks as if Mildred Martens, my old math teacher, would suggest the KL divergence wants me to assume some things about q(y). On the right side, I think I see some good old Bayesian stuff, but I didn’t see the step to take me from the KL divergence to the log posterior-to-prior ratio. Would Miss Martens ask a student like me to clarify the transitions, fix up the notation, and eliminate issues between expectation vs. pointwise values? Remember, please, that I am a dinobaby and I could be outputting misinformation about misinformation.
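For readers who share Miss Martens’ concern, here is the textbook identity that usually connects a KL divergence to a log posterior-to-prior ratio. Whether the authors rely on exactly this step is my assumption, not something the summary confirms, and the symbols (a state θ, an observed signal y, prior p(θ), posterior p(θ | y)) are mine, not the paper’s q(y) notation:

$$
\begin{aligned}
D_{\mathrm{KL}}\big(p(\theta \mid y)\,\|\,p(\theta)\big) &= \mathbb{E}_{\theta \sim p(\theta \mid y)}\!\left[\log \frac{p(\theta \mid y)}{p(\theta)}\right] \ge 0,\\
\mathbb{E}_{y \sim p(y)}\Big[D_{\mathrm{KL}}\big(p(\theta \mid y)\,\|\,p(\theta)\big)\Big] &= \mathbb{E}_{(\theta, y) \sim p(\theta, y)}\!\left[\log \frac{p(\theta \mid y)}{p(\theta)}\right] = I(\theta; Y).
\end{aligned}
$$

The pointwise log posterior-to-prior ratio for a single θ and y can be negative. Only its expectation, the KL divergence (and, averaged over signals, the mutual information), is guaranteed to be non-negative. That is the expectation versus pointwise distinction a careful teacher would want spelled out.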

Several observations:

- If one accepts this line of reasoning, misinformation is emergent. It is somehow part of the warp and woof of living and communicating. My take is that one should expect misinformation.

- Anything created by a biological entity will output misinformation. My take on this is that one should expect misinformation everywhere.

- I worry that researchers tackling information, smart software, and related disciplines may work very hard to prove that misinformation is inevitable but the biological organisms can carry on.

I am not sure I feel comfortable with the normalization of misinformation. As a dinobaby, I think the function of education is to anchor those completing a course of study in a collection of generally agreed upon facts. With misinformation everywhere, why bother?

Net net: One can read this research and the summary article as an explanation of why smart software is just fine. Accept the hallucinations and misstatements. Errors are normal. The ducks are fine. The AI users will be fine. The models will get better. Wrapped in the framing that misinformation is everywhere, the message is, “Knock off the criticism of smart software. You will be fine.”

I am not so sure.

Stephen E Arnold, December 18, 2025

Tim Apple Convinces a Person That Its AI Juice Is Lemonade

December 18, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I read “Apple’s Slow AI Pace Becomes a Strength as Market Grows Weary of Spending.” [Please, note that the source I used may kill the link. If that happens, complain to Yahoo, not to me.]

Everyone, it seems, is into AI. The systems hallucinate; they fail to present verifiable information; they draw stuff with too many fingers; they even do videos purpose built for scamming grannies.

Apple has been content to talk about AI and do little else other than experience staff turnover and some management waffling.

But that’s looking at Apple’s management approach to AI incorrectly. Apple was smart. Its missing the AI boat was brilliant. Just as doubts grow about the viability of using more energy than is available to create questionable outputs, Apple’s slow movement positions it to thrive.

The write up makes sweet lemonade out of what I thought was gallons of sour, lukewarm apple cider.

I quote:

Apple now has a $4.1 trillion market capitalization and the second biggest weight in the S&P 500, leaping over Microsoft and closing in on Nvidia. The shift reflects the market’s questioning of the hundreds of billions of dollars Big Tech firms are throwing at AI development, as well as Apple’s positioning to eventually benefit when the technology is ready for mass use.

The write up includes this statement from a financial whiz:

“The stock is expensive, but Apple’s consumer franchise is unassailable,” Moffett said. “At a time when there are very real concerns about whether AI is a bubble, Apple is understandably viewed as the safe place to hide.”

Yep, lemonade. Next up: up is down and down is up. I am ready. The only problem for me is that Apple tried to do AI and announced features and services. Then Apple could only produce the Granny scarf to hold another look-alike candy bar mobile device. Apple needs Splenda in its mix!

Stephen E Arnold, December 18, 2025

AI and Management: Look for Lists and Save Time

December 18, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

How does a company figure out whom to terminate? [a] Ask around. [b] Consult “objective” performance reviews. [c] Examine a sales professional’s booked deals. [d] Look for a petition signed by employees unhappy with company policies. The answer is at the end of this short post.

A human resources professional has figured out which employees are at the top of the reduction in force list. Thanks Venice.ai. How many graphic artists did you annoy today?

I read “More Than 1,000 Amazon Employees Sign Open Letter Warning the Company’s AI Will Do Staggering Damage to Democracy, Our Jobs, and the Earth.”* The write up states:

The letter was published last week with signatures from over 1,000 unnamed Amazon employees, from Whole Foods cashiers to IT support technicians. It’s a fraction of Amazon’s workforce, which amounts to about 1.53 million, according to the company’s third-quarter earnings release. In it, employees claim the company is “casting aside its climate goals to build AI,” forcing them to use the tech while working toward cutting its workforce in favor of AI investments, and helping to build “a more militarized surveillance state with fewer protections for ordinary people.”

Okay, grousing employees. Signatures. Amazon AI. Hmm. I wonder if some of that old time cross correlation will highlight these individuals and their “close” connections in the company. Who are the managers of these individuals? Are the signers and their close connections linked by other factors; for example, a manager? What if a manager has a disproportionate number of grousers? These are made up questions in a purely hypothetical scenario. But they crossed my mind.

Do you think someone in Amazon leadership might think along similar lines?

The write up says:

Amazon announced in October it would cut around 14,000 corporate jobs, about 4% of its 350,000-person corporate workforce, as part of a broader AI-driven restructuring. Total corporate cuts could reach up to 30,000 jobs, which would be the company’s single biggest reduction ever, Reuters reported a day prior to Amazon’s announcement.

My reaction was, “Just 1,000 employees signed the grousing letter?” A company with pretty good in-person customer support at which I once worked had a truism: “One complaint means 100 people are annoyed but just too lazy to call us.” I wonder if this rule of thumb would apply to an estimable firm like Amazon. It only took me 30 minutes to get a refund for the prone-to-burn-or-explode mobile phone battery. Pretty swift, but not exactly the type of customer service that company provided.

The write up concludes with a quote from a person in carpetland at Amazon:

“What we need to remember is that the world is changing quickly. This generation of AI is the most transformative technology we’ve seen since the Internet, and it’s enabling companies to innovate much faster than ever before,” Beth Galetti, Amazon’s senior vice president of people and experience, wrote in the memo.

I like the royal “we” or the parental “we.” I don’t think it is the in-the-trenches “we,” but that is my personal opinion. I like the emphasis on faster and innovation. Move fast and break things is just an outstanding approach to dealing with complex problems.

Ah, Amazon, why does my Kindle iPad app no longer work when I don’t have an Internet connection? You are definitely innovating.

And the correct answer to the multiple choice test? [d] Names on a list. Just sayin’.

———————

* This is one of those wonky Yahoo news urls. If it doesn’t work, don’t hassle me. Speak with that well managed outfit Yahoo, not someone who is 81 and not well managed.

Stephen E Arnold, December 18, 2025

Un-Aliving Violates TOS and Some Linguistic Boundaries

December 18, 2025

Ah, lawyers.

Depression is a dark emotional state and sometimes makes people take their lives. Before people unalive themselves, they usually investigate the act and/or reach out to trusted sources. These days the “trusted sources” are the host of AI chatbots that populate the Internet. Ars Technica shares the story about how one teenager committed suicide after using a chatbot: “OpenAI Says Dead Teen Violated TOS When He Used ChatGPT To Plan Suicide.”

OpenAI is facing a total of five lawsuits about wrongful deaths associated with ChatGPT. The first lawsuit came to court, and OpenAI defended itself by claiming that the teen in question, Adam Raine, violated the terms of service because they prohibited self-harm and suicide. While pursuing the world’s “most engaging chatbot,” OpenAI relaxed its safety measures for ChatGPT, which, according to the lawsuit, became Raine’s suicide coach.

OpenAI’s lawyers argued that Raine’s parents selected the most damaging chat logs. They also claim that the logs show that Raine had had suicidal ideations since age eleven and that his medication increased his un-aliving desires.

Along with the usual tactic of shifting the blame onto the parents and others, OpenAI says that people use the chatbot at their own risk. It’s a way to avoid any accountability.

“To overcome the Raine case, OpenAI is leaning on its usage policies, emphasizing that Raine should never have been allowed to use ChatGPT without parental consent and shifting the blame onto Raine and his loved ones. ‘ChatGPT users acknowledge their use of ChatGPT is ‘at your sole risk and you will not rely on output as a sole source of truth or factual information,’ the filing said, and users also “must agree to ‘protect people’ and ‘cannot use [the] services for,’ among other things, ‘suicide, self-harm,’ sexual violence, terrorism or violence.’”

OpenAI employees were also alarmed by the amount of “liberties” used to make the chatbot more engaging.

How far will OpenAI go with ChatGPT to make it intuitive, human-like, and intelligent? Raine already had underlying conditions that caused his death, but ChatGPT did exacerbate them. Remember the terms of service.

Whitney Grace, December 18, 2025

Meta: An AI Management Issue Maybe?

December 17, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I really try not to think about Facebook, Mr. Zuckerberg, his yachts, and Llamas. I mean the large language model, not the creatures I associate with Peru. (I have been there, and I did not encounter any reptilian snakes. Cuy chactado, sí. Víbora, no.)

I read in the pay-walled orange newspaper online “Inside Mark Zuckerberg’s Turbulent Bet on AI.” Hmm. Turbulent. I was thinking about synonyms I would have suggested; for example, unjustifiable, really big, wild and crazy, and a couple of others. I am not a real journalist so I will happily accept turbulent. The word means, however, “relating to or denoting flow of a fluid in which the velocity at any point fluctuates irregularly and there is continual mixing rather than a steady or laminar flow pattern” according to the Google’s opaque system. I think the idea is that Meta is operating in a chaotic way. What about “juiced running fast and breaking things”? Yep. Chaos, a modern management method that is supposed to just work.

A young executive with oodles of money hears an older person, probably a blue chip consultant, asking one of those probing questions about a top dog’s management method. Will this top dog listen or just fume and keep doing what worked for more than a decade? Thanks, Qwen. Good enough.

What does the write up present? Please, sign up for the FT and read the original article. I want to highlight two snippets.

The first is:

Investors are also increasingly skittish. Meta’s 2025 capital expenditures are expected to hit at least $70bn, up from $39bn the previous year, and the company has started undertaking complex financial maneuverings to help pay for the cost of new data centers and chips, tapping corporate bond markets and private creditors.

Not RIFed employees, not users, not advertisers, and not government regulators. The FT focuses on investors who are skittish. The point is that when investors get skittish, an already unsettled condition becomes significant enough to increase anxiety. Investors do not want to be anxious. Has Mr. Zuckerberg mismanaged the investors who help keep his massive investments in to-be technology chugging along? First, there was the metaverse. That may arrive in some form, but for Meta I perceive it as a dumpster fire for cash.

Now investors are anxious and the care and feeding of these entities is more important. The fact that the investors are anxious suggests that Mr. Zuckerberg has not managed this important category of professionals in a way that calms them down. I don’t think the FT’s article will do much to alleviate their concern.

The second snippet is:

But the [Meta] model performed worse than those by rivals such as OpenAI and Google on jobs including coding tasks and complex problem solving.

This suggests to me that Mr. Zuckerberg did not manage the process in an optimal way. Some wizards left for greener pastures. Others just groused about management methods. Regardless of the signals one receives about Meta, the message I receive is that management itself is the disruptive factor. Mismanagement is, I think, part of the method at Meta.

Several observations:

- Meta, like the other AI outfits with money to toss into the smart software dumpster fire, is in the midst of realizing that “if we think it, it will become reality” is not working. Meta is spinning off chunks of flaming money bundles, and some staff don’t want to get burned.

- Meta is a technology follower, and it may have been aced by its message and social media competitor Telegram. If Telegram’s approach is workable, Meta may be behind another AI eight ball.

- Mr. Zuckerberg is a wonder of American business. He began as a boy wonder. Now as an adult wonder, the question is, “Why are investors wondering about his current wonder-fulness?”

Net net: Meta faces a management challenge. The AI tech is embedded in that. Some of its competitors lack management finesse, but some of them are plugging along and not yet finding their companies presented in the Financial Times as outfits making investors “increasingly skittish.” Perhaps in the future, but right now, the laser focus of the Financial Times is on Meta. The company is an easy target in my opinion.

Stephen E Arnold, December 17, 2025

The Google Has a New Sheep Herder: An AI Boss to Nip at the Heels of the AI Beasties

December 17, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Staffing turmoil appears to be the end-of-year trend in some Silicon Valley outfits. Apple is spitting out executives. Meta is thrashing. OpenAI is doing the Code Red alert thing amidst unsettled wizards. And, today I learned that Google has a chief technologist for AI infrastructure. I think that means data centers, but it could extend some oversight to the new materials science lab in the UK that will use AI (of course) to invent new materials. “Exclusive / Google Names New Chief of AI Infrastructure Buildout” reports:

Amin Vahdat, who joined Google from academia roughly 15 years ago, will be named chief technologist for AI infrastructure, according to the memo, and become one of 15 to 20 people reporting directly to CEO Sundar Pichai. Google estimates it will have spent more than $90 billion on capital expenditures by the end of 2025, most of it going into the part of the company Vahdat will now oversee.

The sheep dog attempts to herd the little data center doggies away from environmental issues, infrastructure inconsistencies, and roll-your-own engineering. Woof. Thanks, Venice.ai. Close enough for horseshoes.

I read this as making clear the following:

- Google spent “more than $90 billion” on infrastructure in 2025

- No one was paying attention to this investment

- A former academic steeped in Googliness will herd the sheep in 2026.

I assume that is part of the McKinsey way: Fire, Aim, Ready! Dinobabies like me with some blue chip consulting experience feel slightly more comfortable with the old school Ready, Aim, Fire! But the world today is different from the one I traveled through decades ago. Nostalgia does not cut it in the "we have to win AI" business environment today.

Here's a quote making clear that planning and organizing were not part of the 2025 check writing:

“This change establishes AI Infrastructure as a key focus area for the company,” wrote Google Cloud CEO Thomas Kurian in the Wednesday memo congratulating Vahdat.

The cited article puts this sheep herder in context:

In August, Google disclosed in a paper co-authored by Vahdat that the amount of energy used to run the median prompt on its AI models was equivalent to watching less than nine seconds of television and consuming five drops of water. The numbers were far less than what some critics had feared and competitors had likely hoped for. There’s no single answer for how to best run an AI data center. It’s small, coordinated efforts across disparate teams that span the globe. The job of coordinating it all now has an official title.

See and understand. The power consumption for the Google AI data centers is trivial. The Google can plug these puppies into the local power grid, nip at the heels of the people who complain about rising electricity prices and brownouts, and nuzzle the folks who:

- Promise small, local nuclear power generation facilities. No problems with licensing, component engineering, and nuclear waste. Trivialities.

- Repurpose jet engines from a sort of real supersonic jet source. Noise? No problem. Heat? No problem. Emission control? No problem.

- Pitch brand spanking new pressurized water reactors built by the old school nuclear crowd. No problem. Time? No problem. The new folks are accelerationists.

- Recommission turned off (deactivated) nuclear power stations. No problem. Costs? No problem. Components? No problem. Environmental concerns? Absolutely no problem.

Google is tops in strategic planning and technology. It should be. It crafted its expertise selling advertising. AI infrastructure is a piece of cake. I think sheep dogs herding AI can do the job, which apparently was not done for more than a year. When a problem becomes too big to ignore, restructure. Grrr or Woof, not Yipe, little herder.

Stephen E Arnold, December 17, 2025

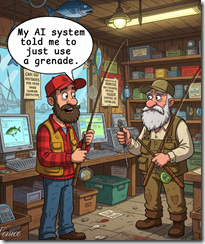

Tech Whiz Wants to Go Fishing (No, Not Phishing), Hook, Link, Sinker Stuff

December 17, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.


My deeply flawed service that feeds me links produced a rare gem. "I Work in Tech But I Hate Everything Big Tech Has Become" is interesting because it states clearly what I have heard from other Silicon Valley types recently. I urge you to read the essay because the discomfort the author feels jumps off the screen or printed page if you are a dinobaby. If the essay has a rhetorical weakness, it is the lack of a resolution. My hunch is that the author has found himself in a digital construct with No Exit signs on the door.

Thanks, Venice.ai. Good enough.

The essay states:

We try to build products that help people. We try to solve mostly problems we ourselves face using tech. We are nerds, misfits, borderline insane people driven by a passion to build. we could probably get a job in big tech if we tried as hard as we try building our own startup. but we don’t want to. in fact we can’t. we’d have to kill a little (actually a lot) of ourselves to do that.

This is an interesting comment. I interpreted it to mean that the tech workers and leadership who build "products that help people" have probably "killed" some of their inner selves. I never thought of the luminaries who head the outfits pushing AI or deploying systems that governments have to ban for users under a certain age as being dead inside. Is it true? I am not sure. Thought-provoking notion? Yes.

The essay states:

I hate everything big tech stands for today. Facebook openly admitting they earn millions from scam ads. VCs funding straight up brain rot or gambling. Big tech is not even pretending to be good these days.

The word "hate" provides a glimpse of how the author is responding to the current business setup in certain sectors of the technology industry. What a dinobaby like me might call "ethical behavior" is viewed as abnormal by many people. My personal view is that this idea of doing whatever it takes to reach a goal operates across many demographics. Is this a-ethical behavior now the norm?

The essay states:

If tech loses people like us, all it’ll have left are psychopaths. Look I’m not trying to take a holier-than-thou stance here. I’m just saying objectively it seems insane what’s happening in mainstream tech these days.

I noted a number of highly charged words. These make sense in the context of the author's personal situation. I noted "psychopaths" and "insane." When many instances of a-ethical behavior bubble up from technical, financial, and political sectors, a-ethics means one cannot trust, rely on, or believe words. Actions alone must be scrutinized.

The author wants to "keep fighting," but against whom or what system? Deception, trickery, double dealing, and criminal activity can be identified in most business interactions.

The author mentions going fishing. The caution I would offer is to make sure you are not charged a dynamic price based on your purchasing profile. Shop around if any fishing stores are open. If not, Amazon will deliver what you need.

Stephen E Arnold, December 17, 2025