YouTube: Behind the Scenes Cleverness?

September 17, 2025

No smart software involved. Just a dinobaby’s work.


I read “YouTube Is a Mysterious Monopoly.” The author tackles the subject of YouTube and how it seems to be making life interesting for some “creators.” In many countries, YouTube is television. I discovered this by accident in Bucharest, Cape Town, and Santiago, to name three locations where locals told me, “I watch YouTube.”

The write up offers some comments about this Google service. Let’s look at a couple of these.

First, the write up says:

…while views are down, likes and revenue have been mostly steady. He guesses that this might be caused by a change in how views are calculated, but it’s just a guess. YouTube hasn’t mentioned anything about a change, and the drop in views has been going on for about a month.

About five years ago, one of the companies with which I have worked for a while pointed out that its Web site traffic was drifting down. As we monitored traffic and ad revenues, we noticed initial stability and then a continuing decline in both traffic and ad revenue. I recall we checked some data about competitive sites and most were experiencing the same drift downwards. Several were steady or growing. My client told me that Google was not able to provide substantive information. Is this type of decline an accident or is it what I call traffic shaping for Google’s revenue? No one has provided information to make this decline clear. Today (September 10, 2025) the explanation is related to smart software. I have my doubts. I think it is Google cleverness.

Second, the write up states:

I pay for YouTube Premium. For my money, it’s the best bang-for-the-buck subscription service on the market. I also think that YouTube is a monopoly. There are some alternatives — I also pay for Nebula, for example — but they’re tiny in comparison. YouTube is effectively the place to watch video on the internet.

In the US, Google has been tagged with the term “monopoly.” I find it interesting that YouTube is allegedly wearing a T shirt that says, “The only game in town.” I think that YouTube has become today’s version of the Google online search service. We have people dependent on the service for money, and we have some signals that Google is putting its thumb on the revenue scale or is suffering from what users are able to view on the service. Also, we have similar opaqueness about who or what is fiddling the dials. If a video or a Web site does not appear in a search result, that site may as well not exist for some people. The write up comes out and uses the “monopoly” word for YouTube.

Finally, the essay offers this statement:

Creators are forced to share notes and read tea leaves as weird things happen to their traffic. I can only guess how demoralizing that must feel.

For me, this comment illustrates that the experience of my client’s declining traffic and ad revenue seems to be taking place in the YouTube “datasphere.” What is a person dependent on YouTube revenue supposed to do when views drop or the vaunted YouTube search service does not display a hit for a video directly relevant to a user’s search? OSINT experts have compiled information about “Google dorks.” These are hit-and-miss methods to dig a relevant item from the Google index. But finding a video is a bit tricky, and there are fewer Google dorks to unlock YouTube content than for other types of information in the Google index.

What do I make of this? Several preliminary observations are warranted. First, Google is hugely successful, but the costs of running the operation are substantial: the quite difficult task of controlling the costs of ping, pipes, and power; the cost of people; and the expense of dealing with pesky government regulators. The “steering” of traffic and revenue to creators is possibly a way to hit financial targets.

Second, I think Google’s size and its incentive programs allow certain “deciders” to make changes that have local and global implications. Another Googler has to figure out what changed, and that may be too much work. The result is that Googlers don’t have a clue what’s going on.

Third, Google appears to be focused on creating walled gardens for what it views as “Web content” and for creator-generated content. What happens when a creator quits YouTube? I have heard that Google’s nifty AI may be able to extract the magnetic points of the disappeared creator and let its AI crank out a satisfactory simulacrum. Hey, what are those YouTube viewers in Santiago going to watch on their Android mobile device?

My answer to this rhetorical question is the creator and Google “features” that generate the most traffic. What are these programs? A list of the alleged top 10 hits on YouTube is available at https://mashable.com/article/most-subscribed-youtube-channels. I want to point out that the Google holds down spots number four and number 10 in its own list. The four spot is Google Movies, a blend of free with ads, rent the video, “buy” the video (which sort of puzzles me), and subscribe to a stream. The number 10 spot is Google’s own music “channel.” I think that works out to YouTube’s hosting of 10 big draw streams and services. Of those 10, the Google is 20 percent of the action. What percentage will be “Google” properties in a year?

Net net: Monitoring YouTube policy, technical, and creator data may help convert these observations into concrete factoids. On the other hand, you are one click away from what exactly? Answer: Daily Motion or RuTube? Mysterious, right?

Stephen E Arnold, September 17, 2025

Professor Goes Against the AI Flow

September 17, 2025

One thing has Cornell professor Kate Manne dreading the upcoming school year: AI. On her Substack, “More to Hate,” the academic insists, “Yes, It Is Our Job as Professors to Stop our Students Using ChatGPT.” Good luck with that.

Manne knows even her students who genuinely love to learn may give in to temptation when faced with an unrelenting academic schedule. She cites the observations of sociologist Tressie McMillan Cottom as she asserts young, stressed-out students should not bear that burden. The responsibility belongs, she says, to her and her colleagues. How? For one thing, she plans to devote precious class time to having students hand-write essays. See the write-up for her other ideas. It will not be easy, she admits, but it is important. After all, writing assignments are about developing one’s thought processes, not the finished product. Turning to ChatGPT circumvents the important part. And it is sneaky. She writes:

“Again, McMillan Cottom crystallized this perfectly in the aforementioned conversation: learning is relational, and ChatGPT fools you into thinking that you have a relationship with the software. You ask it a question, and it answers; you ask it to summarize a text, and it offers to draft an essay; you request it respond to a prompt, using increasingly sophisticated constraints, and it spits out a response that can feel like your own achievement. But it’s a fake relationship, and a fake achievement, and a faulty simulacrum of learning. It’s not going to office hours, and having a meeting of the minds with your professor; it’s not asking a peer to help you work through a problem set, and realizing that if you do it this way it makes sense after all; it’s not consulting a librarian and having them help you find a resource you didn’t know you needed yet. Your mind does not come away more stimulated or enriched or nourished by the endeavor. You yourself are not forging new connections; and it makes a demonstrable difference to what we’ve come to call ‘learning outcomes.’”

Is it even possible to keep harried students from handing in AI-generated work? Manne knows she is embarking on an uphill battle. But to her, it is a fight worth having. Saddle up, Donna Quixote.

Cynthia Murrell, September 17, 2025

What Happens When Content Management Morphs into AI? A Jargon Blast

September 16, 2025

Sadly I am a dinobaby and too old and stupid to use smart software to create really wonderful short blog posts.


I did a small project for a killer outfit in Cleveland. The BMW-driving owner of the operation talked about CxO this and CxO that. The jargon meant that “x” was a placeholder for titles like “Chief People Officer” or “Chief Relationship Officer” or some similar GenX concept.

I suppose I have a built in force shield to some business jargon, but I did turn off my blocker to read CxO Today’s marketing article titled helpfully “Gartner: Optimize Enterprise Search to Equip AI Assistants and Agents.” I was puzzled by the advertising essay, but then I realized that almost anything goes in today’s world of sell stuff by using jargon.

The write up is by an “expert” who used to work in the content management field. I must admit that I have zero idea what content management means. Like knowledge management, the blending of an undefined noun with the word “management” creates jargon that mesmerizes certain types of “leadership” or “deciders.”

The article (ad in essay form) is chock full of interesting concepts and words. The intent is to cause a “leadership” or “decider” to “reach out” for the consulting firm Gartner and buy reports or sit-downs with “experts.”

I noticed the term “enterprise search” in the title. What is “enterprise search” other than the foundation for the HP Autonomy dust up and the FAST Search & Transfer legal hassle? Most organizations struggle to find information that someone knows exists within an organization. “Leadership” decrees that “enterprise search” must be upgraded, improved, or installed. Today one can download an open source search system, ring up a cloud service offering remote indexing and search of “content,” or tap one of the super-well-funded newcomers like Glean or other AI-enabled search and retrieval systems.

Here’s what the write up advertorial says:

The advent of semantic search through vectorization and generative AI has revolutionized the way information is retrieved and synthesized. Search is no longer just an experience. It powers the experience by augmenting AI assistants. With RAG-based AI assistants and agents, relevant information fragments can be retrieved and resynthesized into new insights, whether interactively or proactively. However, the synthesis of accurate information depends largely on retrieving relevant data from multiple repositories. These repositories and the data they contain are rarely managed to support retrieval and synthesis beyond their primary application.

My translation of this jargon blast is that content proliferation is taking place and AI may be able to help “leadership” or a regular employee find the information needed to complete work. I mean who doesn’t want “RAG-based AI assistants” when trying to find a purchase order or to check the last quality report about a part that is failing 75 percent of the time for a big customer?

The fix is to embrace “touchpoints.” The write up says:

Multiple touchpoints and therefore multiple search services mean overlap in terms of indexes and usage. This results in unnecessary costs. These costs are both direct, such as licenses, subscriptions, compute and storage, and indirect, such as staff time spent on maintaining search services, incorrect decisions due to inaccurate information, and missed opportunities from lack of information. Additionally, relying on diverse technologies and configurations means that query evaluations vary, requiring different skills and expertise for maintenance and optimization.

To remediate this problem — that is, to deliver a useful enterprise search and retrieval system — the organization needs to:

aim for optimum touchpoints to information provided through maximum applications with minimum services. The ideal scenario is a single underlying service catering to all touchpoints, whether delivered as applications or in applications. However, this is often impractical due to the vast number of applications from numerous vendors… so

hire Gartner to figure out who is responsible for what, reduce the number of search vendors, and cut costs “by rationalizing the underlying search and synthesis services and associated technologies.”

In short, start over with enterprise search.

Several observations:

- Enterprise search is arguably more difficult than some other enterprise information problems. There are very good reasons for this, and they boil down to the nature of what employees need to do a job or complete a task

- AI is not going to solve the problem because these “wrappers” will reflect the problems in the content pools to which the systems have access

- Cost cutting is difficult because those given the job to analyze the “costs” of search discover that certain costs cannot be eliminated; therefore, their attendant licensing and support fees continue to become “pay now” invoices.

What do I make of this advertorial or content marketing item in CxO Today? First, I think calling it “news” is problematic. The write up is a bundle of jargon presented as a sales pitch. Second, the information in the marketing collateral is jargon and provides zero concrete information. And, third, enterprise search in most organizational situations is usually a compromise forced on the organization because of work processes, legal snarls, secret government projects, corporate paranoia, and general turf battles inside the outfit itself.

The “fix” is not a study. The “fix” is not a search appliance as Google discovered. The “fix” is not smart software. If you want an answer that won’t work, I can identify whom not to call.

Stephen E Arnold, September 19, 2025

Who Needs Middle Managers? AI Outfits. MBAs Rejoice

September 16, 2025

No smart software involved. Just a dinobaby’s work.


I enjoy learning about new management trends. In most cases, these hip approaches to reaching a goal using people are better than old Saturday Night Live skits with John Belushi dressed as a bee. Here’s a good one if you enjoy the blindingly obvious insights of modern management thinkers.

Navigate to “Middle Managers Are Essential for AI Success.” That’s a title for you!

The write up reports without a trace of SNL snarkiness:

31% of employees say they’re actively working against their company’s AI initiatives. Middle managers can bridge the gap.

Whoa, Nellie. I thought companies were pushing forward with AI because AI is everywhere: in Microsoft Word, in Google “search” (I use the term as a reminder that relevance is long gone), and in services from cloud providers like Salesforce.com. (Yeah, I know Salesforce is working hard to get the AI thing to go, and it is doing what big companies like to do: Cut costs by terminating humanoids.)

But the guts of the modern management method is a list (possibly assisted by AI?). The article explains, without a bit of tongue in cheek élan, “ways managers can turn anxious employees into AI champions.”

Here’s the list:

- Communicate the AI vision. [My observation: Isn’t that what AI is supposed to deliver? Fewer employees, no health care costs, no retirement costs, and no excess personnel because AI is so darned effective?]

- Say, “I understand” and “Let’s talk about it.” [My observation: How long do psychological- and attitudinal-centric interactions take when there are fires to put out about an unhappy really big customer’s complaint about your firm’s product or service?]

- Explain to the employee how AI will pay off for the employee who fears AI won’t work or will cost the person his/her job. [My observation: A middle manager can definitely talk around, rationalize, and lie to make the person’s fear go away. Then the middle manager will write up the issue and forward it to HR or a superior. We don’t need a weak person on our team, right?]

- “Walk the talk.” [My observation: That’s a variant of fake it until you make it. The modern middle manager will use AI, probably realize that an AI system can output a good enough response so the “walk the talk” person can do the “walk the walk” to the parking lot to drive home after being replaced by an AI agent.]

- Give employees training and a test. [My observation: Adults love going to online training sessions and filling in the on-screen form to capture trainee responses. Get the answers wrong, and there is an automated agent pounding emails to the failing employee to report to security, turn in his/her badge, and get escorted out of the building.]

These five modern management tips or insights are LinkedIn-grade output. Who will be the first to implement these at an AI company or a firm working hard to AI-ify its operations? Millions I would wager.

Stephen E Arnold, September 16, 2025

Desperate Much? Buying Cyber Security Software Regularly

September 16, 2025

Bad actors have access to AI, and it is enabling them to increase both speed and volume at an alarming rate. Are cybersecurity teams able to cope? Maybe, if they can implement the latest software quickly enough. VentureBeat reports, “Software Commands 40% of Cybersecurity Budgets as Gen AI Attacks Execute in Milliseconds.” Citing IBM’s recent Cost of a Data Breach Report, writer Louis Columbus reports 40% of cybersecurity spending now goes to software. Compare that to just 15.8% spent on hardware, 15% on outsourcing, and 29% on personnel. Even so, AI-assisted hacks now attack in milliseconds while the Mean Time to Identify (MTTI) is 181 days. That is quite the disparity. Columbus observes:

“Three converging threats are flipping cybersecurity on its head: what once protected organizations is now working against them. Generative AI (gen AI) is enabling attackers to craft 10,000 personalized phishing emails per minute using scraped LinkedIn profiles and corporate communications. NIST’s 2030 quantum deadline threatens retroactive decryption of $425 billion in currently protected data. Deepfake fraud that surged 3,000% in 2024 now bypasses biometric authentication in 97% of attempts, forcing security leaders to reimagine defensive architectures fundamentally.”

Understandable. But all this scrambling for solutions may now be part of the problem. Some teams, we are told, manage 75 or more security tools. No wonder they capture so much of the budget. Simplification, however, is proving elusive. We learn:

“Security Service Edge (SSE) platforms that promised streamlined convergence now add to the complexity they intended to solve. Meanwhile, standalone risk-rating products flood security operations centers with alerts that lack actionable context, leading analysts to spend 67% of their time on false positives, according to IDC’s Security Operations Study. The operational math doesn’t work. Analysts require 90 seconds to evaluate each alert, but they receive 11,000 alerts daily. Each additional security tool deployed reduces visibility by 12% and increases attacker dwell time by 23 days, as reported in Mandiant’s 2024 M-Trends Report. Complexity itself has become the enterprise’s greatest cybersecurity vulnerability.”
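To see why “the operational math doesn’t work,” here is a minimal sketch in Python. The two inputs (90 seconds per alert, 11,000 alerts per day) come from the quoted passage; the eight-hour shift is an assumption for illustration, not a figure from the article.

```python
import math

ALERTS_PER_DAY = 11_000      # from the quoted passage
SECONDS_PER_ALERT = 90       # from the quoted passage
SHIFT_HOURS = 8              # assumption: one analyst works an eight-hour shift


def triage_hours(alerts: int, seconds_per_alert: int) -> float:
    """Return the analyst-hours needed to evaluate every alert once."""
    return alerts * seconds_per_alert / 3600


hours_needed = triage_hours(ALERTS_PER_DAY, SECONDS_PER_ALERT)
analysts_needed = math.ceil(hours_needed / SHIFT_HOURS)

print(f"Analyst-hours per day: {hours_needed}")
print(f"Analysts needed just to read the alerts: {analysts_needed}")
```

Under those assumptions, the daily alert queue alone eats 275 analyst-hours, or about 35 full-time analysts doing nothing but triage.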

See the writeup for more on efforts to improve cybersecurity’s speed and accuracy and the factors that thwart them. Do we have a crisis yet? Of course not. Marketing tells us cyber security just works. Sort of.

Cynthia Murrell, September 16, 2025

Google Is Going to Race Penske in Court!

September 15, 2025

Written by an unteachable dinobaby. Live with it.


How has smart software affected the Google? On the surface, we have the Code Red klaxons. Google presents big time financial results so the sirens are drowned out by the cheers for big bucks. We have Google dodging problems with the Android and Chrome snares, so the sounds are like little chicks peeping in the eventide.

—-

FYI: The Penske Outfits

- Penske Corporation itself focuses on transportation, truck leasing, automotive retail, logistics, and motorsports.

- Penske Media Corporation (PMC), a separate entity led by Jay Penske, owns major media brands like Rolling Stone and Billboard.

—-

What’s actually going on is different, if the information in “Rolling Stone Publisher Sues Google Over AI Overview Summaries” is accurate. [Editor’s note: I love the over over lingo, don’t you?] The write up states:

Google has insisted that its AI-generated search result overviews and summaries have not actually hurt traffic for publishers. The publishers disagree, and at least one is willing to go to court to prove the harm they claim Google has caused. Penske Media Corporation, the parent company of Rolling Stone and The Hollywood Reporter, sued Google on Friday over allegations that the search giant has used its work without permission to generate summaries and ultimately reduced traffic to its publications.

Site traffic metrics are an interesting discipline. What exactly are the log files counting? Automated pings, clicks, views, downloads, etc.? Google is the big gun in traffic, and it has legions of SEO people who are more like cheerleaders for making sites Googley, doing the things that Google wants, and pitching Google advertising to get sort of reliable traffic to a Web site.

The SEO crowd is busy inventing new types of SEO. Now one wants one’s weaponized content to turn up as a link, snippet, or footnote in an AI output. Heck, some outfits are pitching to put ads on the AI output page because money is the name of the game. Pay enough and the snippet or summary of the answer to the user’s prompt may contain a pitch for that item of clothing or electronic gadget one really wants to acquire. Psychographic ad matching is marvelous.

The write up points out that an outfit I thought was into auto racing and truck rentals but is now a triple threat in publishing has a different take on the traffic referral game. The write up says:

Penske claims that in recent years, Google has basically given publishers no choice but to give up access to its content. The lawsuit claims that Google now only indexes a website, making it available to appear in search, if the publisher agrees to give Google permission to use that content for other purposes, like its AI summaries. If you think you lose traffic by not getting clickthroughs on Google, just imagine how bad it would be to not appear at all.

Google takes a different position, probably baffled why a race car outfit is grousing. The write up reports:

A spokesperson for Google, unsurprisingly, said that the company doesn’t agree with the claims. “With AI Overviews, people find Search more helpful and use it more, creating new opportunities for content to be discovered. We will defend against these meritless claims.” Google Spokesperson Jose Castaneda told Reuters.

Gizmodo, the source for the cited article about the truck rental outfit, has done some original research into traffic. I quote from the cited article:

Just for kicks, if you ask Google Gemini if Google’s AI Overviews are resulting in less traffic for publishers, it says, “Yes, Google’s AI Overview in search results appears to be resulting in less traffic for many websites and publishers. While Google has stated that AI Overviews create new opportunities for content discovery, several studies and anecdotal reports from publishers suggest a negative impact on traffic.”

I have some views on this situation, and I herewith present them to you:

- Google is calm on the outside but in crazy mode internally. The Googlers are trying to figure out how to keep revenues growing as referral traffic and the online advertising are undergoing some modest change. Is the glacier calving? Yep, but it is modest because a glacier is big and the calf is small.

- The SEO intermediaries at the Google are communicating like Chatty Cathies to the SEO innovators. The result will be a series of shotgun marriages among the lucrative ménage à trois of Google’s ad machine, search engine optimization professionals, and advertising services firms in order to lure advertisers to a special private island.

- The bean counters at Google are looking at their MBA course materials, exam notes for CPAs, and reading books about forensic accounting in order to make the money furnaces at Google hot using less cash as fuel. This, gentle reader, is a very, very difficult task. At another time, a government agency might be curious about the financial engineering methods, but at this time, attention is directed elsewhere I presume.

Net net: This is a troublesome point. Google has lots of lawyers and probably more cash to spend on fighting the race car outfit and its news publications. Did you know that the race outfit owned the definitive publication about heavy metal as well as Billboard magazine?

Stephen E Arnold, September 15, 2025

Google: The EC Wants Cash, Lots of Cash

September 15, 2025

Sadly I am a dinobaby and too old and stupid to use smart software to create really wonderful short blog posts.


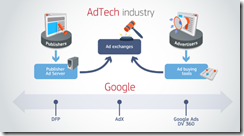

The European Commission is not on the same page as the judge involved in the Google case. Googzilla is having a bit of a vacay because Android and Chrome are still able to attend the big data party. But the EC? Not invited and definitely not welcome. “Commission Fines Google €2.95 Billion over Abusive Practices in Online Advertising Technology” states:

The European Commission has fined Google €2.95 billion for breaching EU antitrust rules by distorting competition in the advertising technology industry (‘adtech’). It did so by favouring its own online display advertising technology services to the detriment of competing providers of advertising technology services, advertisers and online publishers. The Commission has ordered Google (i) to bring these self-preferencing practices to an end; and (ii) to implement measures to cease its inherent conflicts of interest along the adtech supply chain. Google has now 60 days to inform the Commission about how it intends to do so.

The news release includes a third grade type diagram, presumably to make sure that American legal eagles who may not read at the same grade level as their European counterparts can figure out the scheme. Here it is:

For me, the main point is Google is in the middle. This racks up what crypto cronies call “gas fees” or service charges. Whatever happens Google gets some money and no other diners are allowed in the Mountain View giant’s dining hall.

There are explanations and other diagrams in the cited article. The main point is clear: The EC is not cowed by the Googlers nor their rationalizations and explanations about how much good the firm does.

Stephen E Arnold, September 15, 2025

Shame, Stress, and Longer Hours: AI’s Gifts to the Corporate Worker

September 15, 2025

Office workers from the executive suites to entry-level positions have a new reason to feel bad about themselves. Fortune reports, “ ‘AI Shame’ Is Running Rampant in the Corporate Sector—and C-Suite Leaders Are Most Worried About Getting Caught, Survey Says.” Writer Nick Lichtenberg cites a survey of over 1,000 workers by SAP subsidiary WalkMe. We learn almost half (48.8%) of the respondents said they hide their use of AI at work to avoid judgement. The number was higher at 53.4% for those at the top—even though they use AI most often. But what about the generation that has entered the job force amid AI hype? We learn:

“Gen Z approaches AI with both enthusiasm and anxiousness. A striking 62.6% have completed work using AI but pretended it was all their own effort—the highest rate among any generation. More than half (55.4%) have feigned understanding of AI in meetings. … But only 6.8% report receiving extensive, time-consuming AI training, and 13.5% received none at all. This is the lowest of any age group.”

In fact, the study found, only 3.7% of entry-level workers received substantial AI training, compared to 17.1% of C-suite executives. The write-up continues:

“Despite this, an overwhelming 89.2% [of Gen Z workers] use AI at work—and just as many (89.2%) use tools that weren’t provided or sanctioned by their employer. Only 7.5% reported receiving extensive training with AI tools.”

So younger employees use AI more but receive less training. And, apparently, are receiving little guidance on how and whether to use these tools in their work. What could go wrong?

From executives to fresh hires and those in between, the survey suggests everyone is feeling the impact of AI in the workplace. Lichtenberg writes:

“AI is changing work, and the survey suggests not always for the better. Most employees (80%) say AI has improved their productivity, but 59% confess to spending more time wrestling with AI tools than if they’d just done the work themselves. Gen Z again leads the struggle, with 65.3% saying AI slows them down (the highest amount of any group), and 68% feeling pressure to produce more work because of it.”

In addition, more than half the respondents said AI training initiatives amounted to a second, stressful job. But doesn’t all that hard work pay off? Um, no. At least, not according to this report from MIT that found 95% of AI pilot programs at large companies fail. So why are we doing this again? Ask the investor class.

Cynthia Murrell, September 15, 2025

How Much Is That AI in the Window? A Lot

September 15, 2025

AI technology is expensive. Big Tech companies are aware of the rising costs, but the average organization is unaware of how much AI will make their budgets skyrocket. The Kilo Code blog shares insights into AI’s soaring costs in, “Future AI Bills Of $100K/YR Per Dev.”

Kilo recently broke the 1 trillion tokens a month barrier on OpenRouter for the first time. Other open source AI coding tools experienced serious growth too. Claude and Cursor “throttled” their users and encouraged them to use open source tools. These AI algorithms needed to be throttled because their developers didn’t anticipate that application inference costs would rise. Why did this happen?

“Application inference costs increased for two reasons: the frontier model costs per token stayed constant and the token consumption per application grew a lot. We’ll first dive into the reasons for the constant token price for frontier models and end with explaining the token consumption per application. The price per token for the frontier model stayed constant because of the increasing size of models and more test-time scaling. Test time scaling, also called long thinking, is the third way to scale AI…While the pre- and post-training scaling influenced only the training costs of models. But this test-time scaling increases the cost of inference. Thinking models like OpenAI’s o1 series allocate massive computational effort during inference itself. These models can require over 100x compute for challenging queries compared to traditional single-pass inference.”
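The reasoning in the quote reduces to one multiplication: the bill equals price per token times tokens consumed. Here is a rough sketch. The per-token price and the baseline token count are hypothetical placeholders (neither figure appears in the Kilo Code post). The 100x multiplier is taken from the quote, and treating “100x compute” as “100x billed tokens” is a simplifying assumption.

```python
# Hypothetical illustration numbers; not taken from the Kilo Code post.
PRICE_PER_MILLION_TOKENS = 10.00   # dollars, assumed and held constant
SINGLE_PASS_TOKENS = 2_000         # tokens for one traditional query, assumed
THINKING_MULTIPLIER = 100          # "over 100x compute" per the quoted passage


def inference_cost(tokens: int, price_per_million: float) -> float:
    """Return the dollar cost of consuming `tokens` at a flat per-token price."""
    return tokens * price_per_million / 1_000_000


single_pass_cost = inference_cost(SINGLE_PASS_TOKENS, PRICE_PER_MILLION_TOKENS)
thinking_cost = inference_cost(
    SINGLE_PASS_TOKENS * THINKING_MULTIPLIER, PRICE_PER_MILLION_TOKENS
)

print(f"single pass:   ${single_pass_cost:.2f} per query")
print(f"long thinking: ${thinking_cost:.2f} per query")
```

The price never moves in this sketch, yet the per-query bill scales directly with token consumption. That is the mechanism the quote describes.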

If organizations don’t want to be hit with expensive AI costs they should consider using open source models. Open source models are designed to assist users instead of throttling them on the back end. That doesn’t even account for people expenses such as salaries and training.

Costs and customers’ willingness to pay escalating and unpredictable fees for AI may be a problem the AI wizards cannot explain away. Those free and heavily discounted deals may deflate some AI balloons.

Whitney Grace, September 15, 2025

Here Is a Happy Thought: The Web Is Dead

September 12, 2025

Just a dinobaby sharing observations. No AI involved. My apologies to those who rely on it for their wisdom, knowledge, and insights.


I read “One of the Last, Best Hopes for Saving the Open Web and a Free Press Is Dead.” If the headline is insufficiently ominous, how about the subtitle:

The Google ruling is a disaster. Let the AI slop flow and the writers, journalists and creators get squeezed.

The write up says:

Google, of course, was one of the worst actors. It controlled (and still controls) an astonishing 90% of the search engine market, and did so not by consistently offering the best product—most longtime users recognize the utility of Google Search has been in a prolonged state of decline—but by inking enormous payola deals with Apple and Android phone manufacturers to ensure Google is the default search engine on their products.

The subject is the US government court’s ruling that Google must share. Google’s other activities are just ducky. The write up presents this comment:

The only reason that OpenAI could even attempt to do anything that might remotely be considered competing with Google is that OpenAI managed to raise world-historic amounts of venture capital. OpenAI has raised $60 billion, a staggering figure, but also a sum that still very much might not be enough to compete in an absurdly capital intensive business against a decadal search monopoly. After all, Google drops $60 billion just to ensure its search engine is the default choice on a single web browser for three years. [Note: The SAT word “decadal” sort of means over 10 years. The Google has been doing search control for more than 20 years, but “more than 20 years” is not sufficiently erudite I guess.]

The point is that competition in the number scale means that elephants are fighting. Guess what loses? The grass, the ants, and earthworms.

The write up concludes:

The 2024 ruling that Google was an illegal monopoly was a glimmer of hope at a time when platforms were concentrating ever more power, Silicon Valley oligarchy was on the rise, and it was clear the big tech cartels that effectively control the public internet were more than fine with overrunning it with AI slop. That ruling suggested there was some institutional will to fight against the corporate consolidation that has come to dominate the modern web, and modern life. It proved to be an illusion.

Several observations are warranted:

- Money talks; common sense walks

- AI is having dinner at the White House; the legal eagles involved in this high-profile matter got the message

- I was not surprised; the author was surprised and somewhat annoyed that the Internet is dead.

The US mechanisms remind me of how my father described government institutions in Campinas, Brazil, in the 1950s: Carry contos and distribute them freely. [Note: A conto was 1,000 cruzeiros at the time. Today the word applies to 1,000 reais.]

Stephen E Arnold, September 12, 2025