Fixing AI Convenience Behavior: Lead, Collaborate, and Mindset?

September 24, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read “AI-Generated ‘Workslop’ Is Destroying Productivity.” Six people wrote the article for the Harvard Business Review. (Whatever happened to independent work?)

The write up reports:

Employees are using AI tools to create low-effort, passable looking work that ends up creating more work for their coworkers.

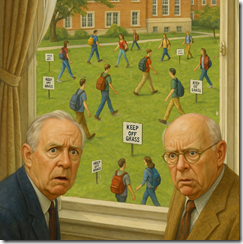

Let’s consider this statement in the context of college students’ behavior when walking across campus. I was a freshman in college in 1962. The third-rate institution had a big green area with no cross paths. The enlightened administration put up “keep off the grass” signs.

What did the students do? They walked the shortest distance between two points. Why? Why go the long way? Why spend extra time? Why be stupid? Why be inconvenienced?

The cited write up from the estimable Harvard outfit says:

But while some employees are using this ability [AI tools] to polish good work, others use it to create content that is actually unhelpful, incomplete, or missing crucial context about the project at hand. The insidious effect of workslop is that it shifts the burden of the work downstream, requiring the receiver to interpret, correct, or redo the work. In other words, it transfers the effort from creator to receiver.

Yep, convenience. Why waste effort?

The fix is to eliminate smart software. But that won’t happen. Why? Smart software provides a way to cut humanoids from the costs of running a business. Efficiency works. Well, mostly.

The write up says:

we jettison hard mental work to technologies like Google because it’s easier to, for example, search for something online than to remember it. Unlike this mental outsourcing to a machine, however, workslop uniquely uses machines to offload cognitive work to another human being. When coworkers receive workslop, they are often required to take on the burden of decoding the content, inferring missed or false context.

And what about changing this situation? Did the authors trot out the old chestnuts from Kurt Lewin and the unfreeze, change, refreeze model? Did the authors suggest stopping AI deployment? Nope. The fix involves:

- Leadership

- Mindsets

- Collaboration

Just between you and me, I think this team of authors is angling for some juicy consulting assignments. These will involve determining who uses slop AI, how much, for what, and with what impact. Then there will be a report with options. The team will implement “options” and observe the results. If the process works, the client will sign a long-term contract with the team.

Keep off the grass!

Stephen E Arnold, September 24, 2025

A Googler Explains How AI Helps Creators and Advertisers in the Googley Maze

September 24, 2025

Sadly I am a dinobaby and too old and stupid to use smart software to create really wonderful short blog posts.

Most of Techmeme’s stories are paywalled. But one slipped through. (I wonder why?) Anyhow, the article in question is “An Interview with YouTube Neal Mohan about Building a Stage for Creators.” The interview is a long one. I want to focus on a couple of statements and offer a handful of observations.

The first comment by the Googler Mohan is this one:

Moving away from the old model of the cliché Madison Avenue type model of, “You go out to lunch and you negotiate a deal and it’s bespoke in this particular fashion because you were friends with the head of ad sales at that particular publisher”. So doing away with that model, and really frankly, democratizing the way advertising worked, which in our thesis, back to this kind of strategy book, would result in higher ROI for publishers, but also better ROI for advertisers.

The statement makes clear that disrupting advertising was the key to what is now the Google Double Click model. Instead of Madison Avenue, today there is the Google model. I think of it as a maze. Once one “gets into” the Google Double Click model, there is no obvious exit.

The art was generated by Venice.ai. No human needed. Sorry, freelance artists on Fiverr.com. This is the future. It will come to YouTube as well.

Here’s the second statement I noted:

everything that we build is in service of people that are creative people, and I use the term “creator” writ large. YouTubers, artists, musicians, sports leagues, media, Hollywood, etc., and from that vantage point, it is really exceedingly clear that these AI capabilities are just that, they’re capabilities, they’re tools. But the thing that actually draws us to YouTube, what we want to watch are the original storytellers, the creators themselves.

The idea, in my interpretation, is that Google’s smart software is there to enable humans to be creative. AI is just a tool like an ice pick. Sure, the ice pick can be driven into someone’s heart, but that’s an extreme example of misuse of a simple tool. Our approach is to keep that ice pick for the artist who is going to create an ice sculpture.

Please read the rest of this Googley interview to get a sense of the other concepts Google’s ad system and its AI are delivering to advertisers and “creators.”

Here’s my view:

- Google wants to get creators into the YouTube maze. Google wants advertisers to use the now 30-year-old Google Double Click ad system. Everyone, just enter the labyrinth.

- The rats will learn that the maze is to them what an aquarium is to a fish. What else will the fish know? Not too much. The aquarium is life. It is reality.

- Google has a captive, self-sustaining ecosystem. Creators create; advertisers advertise because people or systems want the content.

Now let me ask a question: “How does this closed ecosystem make more money?” The answer, according to Googler Mohan, a former consultant like others in Google leadership, is to become more efficient. How does one become more efficient? The answer is to replace expensive, popular creators with cheaper AI-driven content produced by Google’s AI system.

Therefore, the words say one thing: Creator humans are essential. However, the trajectory of Google’s behavior shows that Google wants to maximize its revenues. Just the threat or fear of using AI to knock off a hot new human-engineered “content object” will allow the Google to reduce what it pays to a human until Google’s AI can eliminate those pesky, protesting, complaining humans. The advertisers want eyeballs. That’s what Google will deliver. Where will the advertisers go? Craigslist, Nextdoor, X.com?

Net net: Money is more important to Google than human creators. I know I am a dinobaby and probably incorrect. That’s how I see the Google.

Stephen E Arnold, September 24, 2025

The Skill for the AI World As Pronounced by the Google

September 24, 2025

Written by an unteachable dinobaby. Live with it.

Worried about a job in the future? The next minute, day, or decade? The secret of constant employment, big bucks, and even larger volumes of happiness has been revealed. “Google’s Top AI Scientist Says Learning How to Learn Will Be Next Generation’s Most Needed Skill” says:

the most important skill for the next generation will be “learning how to learn” to keep pace with change as Artificial Intelligence transforms education and the workplace.

Well, that’s the secret: Learn how to learn. Why? Surviving in the chaos of an outfit like Google means one has to learn. What should one learn? Well, the write up does not provide that bit of wisdom. I assume a Google search will provide the answer in a succinct AI-generated note, right?

The write up presents this chunk of wisdom from a person keen on making lots of AI people aware of Google’s AI prowess:

The neuroscientist and former chess prodigy said artificial general intelligence—a futuristic vision of machines that are as broadly smart as humans or at least can do many things as well as people can—could arrive within a decade…. Hassabis emphasized the need for “meta-skills,” such as understanding how to learn and optimizing one’s approach to new subjects, alongside traditional disciplines like math, science and humanities.

This means reading poetry, preferably Greek poetry. The Google super wizard’s father is “Greek Cypriot.” (Cyprus is home base for a number of interesting financial operations and the odd intelware outfit. Which part of Cyprus is which? Google Maps may or may not answer this question. Ask your Google Pixel smartphone to avoid an unpleasant mix-up.)

The write up adds this courteous note:

[Greek Prime Minister Kyriakos] Mitsotakis rescheduled the Google Big Brain to “avoid conflicting with the European basketball championship semifinal between Greece and Turkey. Greece later lost the game 94-68.”

Will lifelong learning skills help the Greek basketball team win against a formidable team like Turkey?

Sure, if Google says it, you know it is true just like eating rocks or gluing cheese on pizza. Learn now.

Stephen E Arnold, September 24, 2025

Graphite: Okay to License Now

September 24, 2025

The US government uses specialized software to gather information related to persons of interest. The brand that has been popular since NSO Group marketed itself into a pickle comes from the Israeli-founded spyware company Paragon Solutions. The US government isn’t a stranger to Paragon Solutions. In fact, El Pais reports in the article “Graphite, the Israeli Spyware Acquired By ICE” that ICE has renewed its contract with the specialized software company.

The deal was originally signed during the Biden administration in September 2024, but it went against the then president’s executive order that prohibited US agencies from using spyware tools that “posed ‘significant counterintelligence and security risks’ or had been misused by foreign governments to suppress dissent.”

During the negotiations, AE Industrial Partners purchased Paragon and merged it with REDLattice, an intelligence contractor located in Virginia. Paragon is now a domestic partner with deep connections to former military and intelligence personnel. According to public contracting announcements, the suspension of the contract with ICE’s Homeland Security Investigations was quietly lifted on August 29.

The US government will use Paragon’s Graphite spyware:

“Graphite is one of the most powerful commercial spy tools available. Once installed, it can take complete control of the target’s phone and extract text messages, emails, and photos; infiltrate encrypted apps like Signal and WhatsApp; access cloud backups; and covertly activate microphones to turn smartphones into listening devices.”

The source notes that although companies like Paragon insist their tools are intended to combat terrorism and organized crime, past use suggests otherwise. Earlier this year, Graphite was allegedly linked to information gathering in Italy that targeted at least some journalists, a few migrant rights activists, and a couple of associates of the definitely worth watching Pope Francis. Paragon stepped away from the home of pizza following alleged “public outrage.”

The US government’s use of specialized software seems to be a major concern among Democrats and Republicans alike. Which government agencies are licensing and using Graphite? Beyond Search has absolutely no idea.

Whitney Grace, September 24, 2025

Titanic AI Goes Round and Round: Are You Dizzy Yet?

September 23, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read “Nvidia to Invest Up to $100 Billion in OpenAI, Linking Two Artificial Intelligence Titans.” The headline makes an important point. The words “big” and “huge” are not sufficiently monumental. Now we have “titans.” As you may know, a “titan” is a person of great power. I will leave out the Greek mythology. I do want to point out that “titans” were the kiddies produced by Uranus and Gaea. Titans were big dogs until Zeus and a few other Olympian gods forced them to live in what is now Newark, New Jersey.

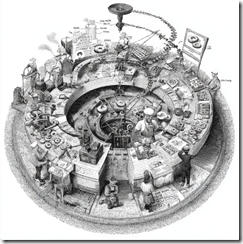

An AI-generated diagram of a simple circular deal. Regulators and IRS professionals enjoy challenges. What are those people doing to make the process work? Thanks, MidJourney.com. Good enough.

The write up from the outfit that is into trust explains how two “titans” are now intertwined. No, I won’t bring up the issue of incestuous behavior. Let’s stick to the “real” news story:

Nvidia will invest up to $100 billion in OpenAI and supply it with data center chips… Nvidia will start investing in OpenAI for non-voting shares once the deal is finalized, then OpenAI can use the cash to buy Nvidia’s chips.

I am not a finance, tax, or money wizard. On the surface, the arrangement looks to me like this: I loan a person some money, and then that person gives me the money back in exchange for products and services. I may have this wrong, but I thought a similar arrangement landed one of the once-famous enterprise search companies in a world of hurt and a member of the firm’s leadership in prison.

Reuters includes this statement:

Analysts said the deal was positive for Nvidia but also voiced concerns about whether some of Nvidia’s investment dollars might be coming back to it in the form of chip purchases. "On the one hand this helps OpenAI deliver on what are some very aspirational goals for compute infrastructure, and helps Nvidia ensure that that stuff gets built. On the other hand the ‘circular’ concerns have been raised in the past, and this will fuel them further," said Bernstein analyst Stacy Rasgon.

“Circular.” That’s an interesting word. Some of the financial transactions my team and I examined during our Telegram (the messaging outfit) research used similar methods. One of the organizations apparently aware of “circular” transactions was Huione Guarantee. No big deal, but the company has been in legal hot water for some of its circular functions. Will OpenAI and Nvidia experience similar problems? I don’t know, but the circular thing means that money goes round and round. In circular transactions, magical things can happen to the numbers at each touch point. Money deals are rarely hallucinatory like AI outputs and semiconductor marketing.

What does this mean for companies eager to compete in smart software and Fancy Dan chips? My inner voice is saying, “You may be behind a great big circular curve. Better luck next time.”

Stephen E Arnold, September 23, 2025

Pavel Durov Was Arrested for Online Stubbornness: Will This Happen in the US?

September 23, 2025

Written by an unteachable dinobaby. Live with it.

In August 2024, the French judiciary arrested Pavel Durov, the founder of VKontakte and then Telegram, a robust but non-AI platform. Why? The French government identified more than a dozen transgressions by Pavel Durov, who holds French citizenship as a special tech bro. Now he has to report to his French mom every two weeks or experience more interesting French legal action. Is this an example of a failure to communicate?

Will the US take similar steps toward US companies? I raise the question because I read an allegedly accurate “real” news write up called “Anthropic Irks White House with Limits on Models’ Use.” (Like many useful online resources, this story requires the curious to subscribe, pay, and get on a marketing list.) These “models,” of course, are the zeros and ones which comprise the next big thing in technology: artificial intelligence.

The write up states:

Anthropic is in the midst of a splashy media tour in Washington, but its refusal to allow its models to be used for some law enforcement purposes has deepened hostility to the company inside the Trump administration…

The write up says as actual factual:

Anthropic recently declined requests by contractors working with federal law enforcement agencies because the company refuses to make an exception allowing its AI tools to be used for some tasks, including surveillance of US citizens…

I found the write up interesting. If France can take action against an upstanding citizen like Pavel Durov, what about the tech folks at Anthropic or other outfits? These firms allegedly have useful data and the tools to answer questions. I recently fed the output of one AI system (ChatGPT) into another AI system (Perplexity), and I learned that Perplexity did a good job of identifying the weirdness in the ChatGPT output. Would these systems provide similar insights into prompt patterns on certain topics, for instance, the charges against Pavel Durov or data obtained by people looking for information about nuclear fuel cask shipments?

With France’s action, is the door open to take direct action against people and their organizations which cooperate reluctantly or not at all when a government official makes a request?

I don’t have an answer. Dinobabies rarely do, and if they do have a response, no one pays attention to these beasties. However, some of those wizards at AI outfits might want to ponder the question about cooperation with a government request.

Stephen E Arnold, September 24, 2025

Google Tactic: Blame Others for an Issue

September 23, 2025

Sadly I am a dinobaby and too old and stupid to use smart software to create really wonderful short blog posts.

The shift from irrelevant, SEO-corrupted results to the hallucinating world of Google smart software is allegedly affecting traffic to some sites. Google is on top of the situation. Its tireless wizards and even more tireless smart software are keeping track of ads. Oh, sorry, I mean Web site traffic. With AI making everything better for users, the complaints about declining referral traffic are annoying.

Google has an answer. “YouTube Addresses Lower View Counts Which Seem to Be Caused by Ad Blockers” reports:

Since mid-August, many YouTubers have noticed their view counts are considerably lower than they were before, in some cases with very drastic drops. The reason for the drop, though, has been shrouded in mystery for many creators.

It then adds:

The most likely explanation seems to be that YouTube is not counting views properly for users with an ad blocker enabled, another step in the platform’s continued war on ad blockers.

Yeah, maybe.

My view is that Google is steering traffic across its platform to extract as much revenue as possible. The model is the one used by olive oil producers. The good stuff is golden. The processes used to squeeze the juice produce results that are unsatisfactory to some. The broken recommendations system, the smart summaries in search, and the other quantumly supreme innovations have fouled the gears in the Google advertising machine. That means Google has to boost the amount of money it obtains by squeezing harder.

How does one fix something that is the equivalent of an electric generator that once whizzed at Niagara Falls? That’s easy: One wraps it in something better, faster, and cheaper. Oh, I forgot: easier to do. Googlers are busy people. Easy is efficient if it is cheap or produces additional revenue. The better outcome is to do both: Lower costs and boost revenue.

Google is going to have the same experience reinventing itself that IBM and Intel are experiencing. You may think I am just a bonkers dinobaby. Yeah, maybe. But, maybe not.

Stephen E Arnold, September 23, 2025

UAE: Will It Become U-AI?

September 23, 2025

Written by an unteachable dinobaby. Live with it.

UAE is moving forward in smart software, not just crypto. “Industry Leading AI Reasoning for All” reports that the Institute of Foundation Models has “industry leading AI reasoning for all.” The news item explains:

Built on six pillars of innovation, K2 Think represents a new class of reasoning model. It employs long chain-of-thought supervised fine-tuning to strengthen logical depth, followed by reinforcement learning with verifiable rewards to sharpen accuracy on hard problems. Agentic planning allows the model to decompose complex challenges before reasoning through them, while test-time scaling techniques further boost adaptability.

I am not sure what the six pillars of innovation are, particularly after looking at some of the UAE’s crypto plays, but there is more. Here’s another passage which suggests that Intel and Nvidia may not be in the k2think.ai technology road map:

K2 Think will soon be available on Cerebras’ wafer-scale, inference-optimized compute platform, enabling researchers and innovators worldwide to push the boundaries of reasoning performance at lightning-fast speed. With speculative decoding optimized for Cerebras hardware, K2 Think will achieve unprecedented throughput of 2,000 tokens per second, making it both one of the fastest and most efficient reasoning systems in existence.

If you want to kick its tires (tAIres?), the system is available at k2think.ai and on Hugging Face. Oh, the write up quotes two people with interesting names: Eric Xing and Peng Xiao.

Stephen E Arnold, September 23, 2025

Can Meta Buy AI Innovation and Functioning Demos?

September 22, 2025

This essay is the work of a dumb dinobaby. No smart software required.

That “move fast and break things” approach has done a bang-up job. Mark Zuckerberg, famed for making friends in Hawaii, demonstrated how “think and it becomes real” works in the real world. “Bad Luck for Zuckerberg: Why Meta Connect’s Live Demos Flopped” reported:

two of Meta’s live demos epically failed. (A third live demo took some time but eventually worked.) During the event, CEO Mark Zuckerberg blamed it on the Wi-Fi connection.

Yep, blame the Wi-Fi. Bad Wi-Fi, not bad management or bad planning or bad prepping or bad decision making. No, it is bad Wi-Fi. Okay, I understand: A modern management method in action at Meta, Facebook, WhatsApp, and Instagram. Or, bad luck. No, bad Wi-Fi.

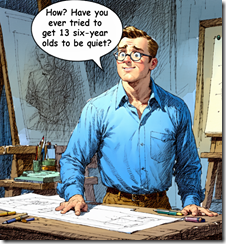

Thanks, Venice.ai. You captured the baffled look on the innovator’s face when I asked Ron K., “Where did you get the idea for the hair dryer, the paper bag, and popcorn?”

Let’s think about another management decision. Navigate to the weirdly named write up “Meta Gave Millions to New AI Project Poaches, Now It Has a Problem.” That write up reports that Meta has paid some employees as much as $300 million to work on AI. The write up adds:

Such disparities appear to have unsettled longer-serving Meta staff. Employees were said to be lobbying for higher pay or transfers into the prized AI lab. One individual, despite receiving a grant worth millions, reportedly quit after concluding that newcomers were earning multiples more…

My recollection is that some research suggests pay is important, but other factors enter into a decision to go to work for a particular organization. I left the blue chip consulting game decades ago, but I recall my boss (Dr. William P. Sommers) explaining to me that pay and innovation are hoped for but not guaranteed. I saw that firsthand when I visited the firm’s research and development unit in a rust belt city.

This outfit was cranking out innovations still able to wow people. A good example is the hot-air popcorn popper. Let that puppy produce popcorn for a group of six-year-olds at a birthday party, and I know it will attract some attention.

Here’s the point of the story. The fellow who came up with the idea for this innovation was an engineer, but not a top dog at the time. His wife organized a birthday party for a dozen six- and seven-year-olds to celebrate their daughter’s birthday. But just as the girls arrived, the wife had to leave for a family emergency. As she swept out the door, she said, “Find some way to keep them entertained.”

The hapless engineer looked at the group of young girls, and his daughter asked, “Daddy, will you make some popcorn?” Stress overwhelmed the pragmatic engineer. He mumbled, “Okay.” He went into the kitchen and found the popcorn. Despite his engineering degree, he did not know where the popcorn pan was. The noise from the girls rose a notch.

He poked his head from the kitchen and said, “Open your gifts. Be there in a minute.”

Adrenaline pumping, he grabbed the bag of popcorn, took a brown paper sack from the counter, and dashed into the bathroom. He poked a hole in the paper bag. He dumped in a handful of popcorn. He stuck the nozzle of the hair dryer through the hole and turned it on. Ninety seconds later, the kernels began popping.

He went into the family room and said, “Let’s make popcorn in the kitchen.” He turned on the hair dryer and popped corn. The kids were enthralled. He let his daughter handle the hair dryer. The other kids scooped out the popcorn and added more kernels. Soon popcorn was everywhere.

The party was a success even though his wife was annoyed at the mess he and the girls made.

I asked the engineer, “Where did you get the idea to use a hair dryer and a paper bag?”

He looked at me and said, “I have no idea.”

That idea became a multi-million dollar product.

Money would not have caused the engineer to “innovate.”

Maybe Mr. Zuckerberg, once he has resolved his demo problems, will ask himself a question: Is the assumption that paying a person will produce innovation just another example of “just think it and it will happen” digital baloney?

Stephen E Arnold, September 22, 2025

AI and the Media: AI Is the Answer for Some Outfits

September 22, 2025

Sadly I am a dinobaby and too old and stupid to use smart software to create really wonderful short blog posts.

I spotted a news item about Russia’s Ministry of Defense. The estimable defensive outfit now has an AI-generated news program. Here’s the paywalled source link. I haven’t seen it yet, but the statistics for viewership and the Telegram comments will be interesting to observe. Gee, do you think that bright Russian developers have found a way to steer the output to represent the political views of the Russian government? Did you say, “No”? Congratulations, you may qualify for a visa to homestead in Norilsk. Check it out on Google Maps.

Back in Germany, Axel Springer SE is definitely into AI as well. Coincidentally, Axel Springer will use AI for news. I noted Business Insider will allow its real and allegedly human journalists to use AI to write “drafts” of news stories. Here’s the paywalled source link. Hey, Axel, aren’t your developers able to pipe the AI output into slamma jamma banana and produce via AI complete TikTok-type news videos? Russia’s Ministry of Defense has this angle figured out. YouTube may be in the MoD’s plans. One has to fund that “defensive” special operation in Ukraine somehow.

Several observations:

- Steering or weaponizing large language models is a feature of the systems. Can one trust AI-generated news? Can one trust any AI output from a large organization? You may. I don’t.

- The economics of producing Walter Cronkite-type news make “real” news expensive. Therefore, say hello to AI-written news and AI-delivered news. GenX and GenY will love this approach to information in my opinion.

- How will government regulators respond to AI news? In Russia, government controlled AI news will get a green light. Elsewhere, the shift may be slightly more contentious.

Net net: AI is great.

Stephen E Arnold, September 22, 2025