AI in Action: Price Fixing Play

March 18, 2024

This essay is the work of a dumb dinobaby. No smart software required.

If a tree falls in a forest and no one is there to hear it, does it make a sound? The obvious answer is yes, but philosophers ask how that can be possible if no one was there to witness the event. The same argument can be made that price fixing isn't illegal if it's done by an AI algorithm. Smart people know this is a straw man fallacy, and so does the Federal Trade Commission: "Price Fixing By Algorithm Is Still Price Fixing."

The FTC and the Department of Justice agree that if an action is illegal for a human, then it is illegal for an algorithm too. The official nomenclature is antitrust compliance. Both agencies want to protect consumers against algorithmic collusion, particularly in the housing market. They filed a joint legal brief that stresses the importance of a fair, competitive market. The brief stated that algorithms can't be used to evade illegal price fixing agreements and that it is still unlawful to share price fixing information even if the conspirators retain pricing discretion or cheat on the agreement.

Protecting consumers from unfair pricing practices is extremely important as inflation has soared. Rent has increased by 20% since 2020, and the increases weigh especially heavily on lower-income people. Nearly half of renters also pay more than 30% of their income in rent and utilities. The Department of Justice and the FTC also hold other industries accountable for using algorithms illegally:

“The housing industry isn’t alone in using potentially illegal collusive algorithms. The Department of Justice has previously secured a guilty plea related to the use of pricing algorithms to fix prices in online resales, and has an ongoing case against sharing of price-related and other sensitive information among meat processing competitors. Other private cases have been recently brought against hotels and casinos.”

Hopefully the FTC and the Department of Justice retain their power to protect consumers. Inflation will continue to rise and consumers will continue to suffer.

Whitney Grace, March 18, 2024

Harvard University: William James Continues Spinning in His Grave

March 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

William James, the brother of a novelist whose 20 novels cause my mind to wander when I think about any one of them, loved Harvard University. In a speech at Stanford University, he admitted his untoward affection. If one wanders by William’s grave in Cambridge Cemetery (daylight only, please), one can hear a sound similar to a giant sawmill blade emanating from a modest tombstone. “What’s that horrific sound?” a passerby might ask. The answer: “William is spinning in his grave. It’s a bit like a perpetual motion machine now,” one elderly person says. “And it is getting louder.”

William is spinning in his grave because his beloved Harvard appears to foster making stuff up. Thanks, MSFT Copilot. Working on security today or just getting printers to work?

William is amping up his RPMs. Another distinguished Harvard expert, professor, shaper of the minds of young men and women and thems has been caught fabricating data. This is not the overt synthetic data shop at Stanford University’s Artificial Intelligence Lab and the commercial outfit Snorkel. Nope. This is just a faculty member who, by golly, wanted to be respected it seems.

The Chronicle of Higher Education (the immensely popular online information service consumed by thumb typers and swipers) published “Here’s the Unsealed Report Showing How Harvard Concluded That a Dishonesty Expert Committed Misconduct.” (Registration required because, you know, information about education is sensitive and users must be monitored.) The report allegedly required 1,300 pages. I did not read it. I get the drift: Another esteemed scholar just made stuff up. In my lingo, the individual shaped reality to support her / its vision of self. Reality was not delivering honor, praise, rewards, money, and freedom from teaching horrific undergraduate classes. Why not take the Excel macro to achievement: Invent and massage information. Who is going to know?

The write up says:

the committee wrote that “she does not provide any evidence of [research assistant] error that we find persuasive in explaining the major anomalies and discrepancies.” Over all, the committee determined “by a preponderance of the evidence” that Gino “significantly departed from accepted practices of the relevant research community and committed research misconduct intentionally, knowingly, or recklessly” for five alleged instances of misconduct across the four papers. The committee’s findings were unanimous, except for in one instance. For the 2012 paper about signing a form at the top, Gino was alleged to have falsified or fabricated the results for one study by removing or altering descriptions of the study procedures from drafts of the manuscript submitted for publication, thus misrepresenting the procedures in the final version. Gino acknowledged that there could have been an honest error on her part. One committee member felt that the “burden of proof” was not met while the two other members believed that research misconduct had, in fact, been committed.

Hey, William, let’s hook you up to a power test dynamometer so we can determine exactly how fast you are spinning in your chill, dank abode. Of course, if the data don’t reveal high-RPM spinning, someone at Harvard can be enlisted to touch up the data. Everyone seems to be doing it, from my vantage point in rural Kentucky.

Is there a way to harness the energy of professors who may cut corners and respected but deceased scholars to do something constructive? Oh, look. There’s a protest group. Let’s go ask them for some ideas. On second thought… let’s not.

Stephen E Arnold, March 15, 2024

Stanford: Tech Reinventing Higher Education: I Would Hope So

March 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read “How Technology Is Reinventing Education.” Essays like this one are quite amusing. The ideas flow without important context. Let’s look at this passage:

“Technology is a game-changer for education – it offers the prospect of universal access to high-quality learning experiences, and it creates fundamentally new ways of teaching,” said Dan Schwartz, dean of Stanford Graduate School of Education (GSE), who is also a professor of educational technology at the GSE and faculty director of the Stanford Accelerator for Learning. “But there are a lot of ways we teach that aren’t great, and a big fear with AI in particular is that we just get more efficient at teaching badly. This is a moment to pay attention, to do things differently.”

A university expert explains to a rapt audience that technology will make them healthy, wealthy, and wise. Well, that’s what the marketing copy the lecturer recites says. Thanks, MSFT Copilot. Are you security safe today? Oh, that’s too bad.

I would suggest that Stanford’s Graduate School of Education consider these probably unimportant points:

- The president of Stanford University resigned allegedly because he fudged some data in peer-reviewed documents. True or false. Does it matter? The fellow quit.

- The Stanford Artificial Intelligence Lab or SAIL innovated with cooking up synthetic data. Synthetic data became the fast food of those looking for cheap and easy AI training data, and Stanford became super glued to the fake data movement, which may be good or may be bad. Hallucinating is easier if the models are trained on fake information, perhaps?

- Stanford University produced some outstanding leaders in the high technology “space.” The contributions of famous graduates have delivered social media, shaped advertising systems, and created interesting intelware companies which dabble in warfighting and saving lives from one versatile software and consulting platform.

The essay operates in smarter-than-you territory. It presents a view of the world which seems to be at odds with research results which are not reproducible, ethics-free researchers, and an awareness of how silly it looks to someone in rural Kentucky to have a president accused of pulling a grade-school essay cheating trick.

Enough pontification. How about some progress in remediating certain interesting consequences of Stanford faculty and graduates’ innovations?

Stephen E Arnold, March 15, 2024

Humans Wanted: Do Not Leave Information Curation to AI

March 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Remember RSS feeds? Before social media took over the Internet, they were the way we got updates from sources we followed. It may be time to dust off the RSS, for it is part of blogger Joan Westenberg’s plan to bring a human touch back to the Web. We learn of her suggestions in, “Curation Is the Last Best Hope of Intelligent Discourse.”

Westenberg argues human judgement is essential in a world dominated by AI-generated content of dubious quality and veracity. Generative AI is simply not up to the task. Not now, perhaps not ever. Fortunately, a remedy is already being pursued, and Westenberg implores us all to join in. She writes:

“Across the Fediverse and beyond, respected voices are leveraging platforms like Mastodon and their websites to share personally vetted links, analysis, and creations following the POSSE model – Publish on your Own Site, Syndicate Elsewhere. By passing high-quality, human-centric content through their own lens of discernment before syndicating it to social networks, these curators create islands of sanity amidst oceans of machine-generated content of questionable provenance. Their followers, in turn, further syndicate these nuggets of insight across the social web, providing an alternative to centralised, algorithmically boosted feeds. This distributed, decentralised model follows the architecture of the web itself – networks within networks, sites linking out to others based on trust and perceived authority. It’s a rethinking of information democracy around engaged participation and critical thinking from readers, not just content generation alone from so-called ‘influencers’ boosted by profit-driven behemoths. We are all responsible for carefully stewarding our attention and the content we amplify via shares and recommendations. With more voices comes more noise – but also more opportunity to find signals of truth if we empower discernment. This POSSE model interfaces beautifully with RSS, enabling subscribers to follow websites, blogs and podcasts they trust via open standard feeds completely uncensored by any central platform.”
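The POSSE-plus-RSS idea in the quote can be made concrete: a curator publishes hand-picked links on their own site as an RSS 2.0 feed, and any subscriber can read that feed directly, with no central platform in between. The sketch below uses Python's standard xml.etree.ElementTree module. The function names, the example.com and example.org URLs, and the sample item are hypothetical, and a production feed would usually carry more elements (for example pubDate and guid).

```python
import xml.etree.ElementTree as ET

def build_rss(title, link, description, items):
    """Publish on your own site: turn curated (title, link, note) tuples into RSS 2.0 XML."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    # RSS 2.0 requires title, link, and description on the channel.
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for item_title, item_link, note in items:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = item_title
        ET.SubElement(item, "link").text = item_link
        ET.SubElement(item, "description").text = note
    return ET.tostring(rss, encoding="unicode")

def read_feed(xml_text):
    """Syndicate elsewhere: a subscriber parses the feed without any central platform."""
    root = ET.fromstring(xml_text)
    return [(item.findtext("title"), item.findtext("link")) for item in root.iter("item")]

# Usage: a curator publishes one vetted link; a reader pulls it back out.
feed = build_rss(
    "My Picks",
    "https://example.com/",
    "Links I have read and vetted",
    [("A careful essay", "https://example.org/essay", "Worth your time")],
)
print(read_feed(feed))
```

The curation itself, deciding which links deserve a place in the feed, stays with the human. The code only handles syndication.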

But is AI all bad? No, Westenberg admits, the technology can be harnessed for good. She points to Anthropic’s Constitutional AI as an example: it was designed to preserve existing texts instead of overwriting them with automated content. It is also possible, she notes, to develop AI systems that assist human curators instead of competing with them. But we suspect we cannot rely on companies that profit from the proliferation of shoddy AI content to supply such systems. Who will?

Cynthia Murrell, March 15, 2024

Is the AskJeeves Approach the Next Big Thing Again?

March 14, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Way back when I worked in Silicon Valley or Plastic Fantastic as one 1980s wag put it, AskJeeves burst upon the Web search scene. The idea is that a user would ask a question and the helpful digital butler would fetch the answer. This worked for questions like “What’s the temperature in San Mateo?” The system did not work for the types of queries with which my little group of full-time equivalents peppered assorted online services.

A young wizard confronts a knowledge problem. Thanks, MSFT Copilot. How’s that security today? Okay, I understand. Good enough.

The mechanism involved software and people. The software processed the query and matched up the answer with the outputs in a knowledge base. The humans wrote rules. If there was no rule and no knowledge, the butler fell on his nose. It was the digital equivalent of nifty marketing applied to a system about as aware as the manservant in Kazuo Ishiguro’s The Remains of the Day.
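The rules-plus-knowledge-base mechanism described above can be sketched in a few lines of Python. This is a toy illustration, not how AskJeeves was actually built. The rule patterns, the knowledge base entries, and the names RULES, KNOWLEDGE_BASE, and answer are all hypothetical. The temperature value is a labeled placeholder, not real data.

```python
import re

# Hypothetical hand-written rules: each pairs a question pattern with a topic.
RULES = [
    (re.compile(r"what'?s the temperature in ([\w ]+)\??$", re.IGNORECASE), "temperature"),
    (re.compile(r"what is the capital of ([\w ]+)\??$", re.IGNORECASE), "capital"),
]

# Hypothetical knowledge base keyed by (topic, entity).
KNOWLEDGE_BASE = {
    ("temperature", "san mateo"): "placeholder: current reading from a weather feed",
    ("capital", "kentucky"): "Frankfort",
}

FALLBACK = "Sorry, I don't know."

def answer(query):
    """Rule match first, knowledge-base lookup second; otherwise give up."""
    for pattern, topic in RULES:
        match = pattern.search(query.strip())
        if match:
            entity = match.group(1).strip().lower()
            # A rule fired, but the answer still has to exist in the knowledge base.
            return KNOWLEDGE_BASE.get((topic, entity), FALLBACK)
    # No rule covers the query: the butler falls on his nose.
    return FALLBACK

print(answer("What is the capital of Kentucky?"))
print(answer("Find prior art for lithium anode coatings"))
```

Both failure modes from the paragraph show up here. A query no rule anticipates gets the fallback, and so does a query a rule recognizes but the knowledge base cannot answer.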

I thought about AskJeeves as a tangent notion as I worked through “LLMs Are Not Enough… Why Chatbots Need Knowledge Representation.” The essay is an exploration of options intended to reduce the computational cost, power sucking, and blind spots in large language models. Progress is being made and will be made. A good example is this passage from the essay which sparked my thinking about representing knowledge. This is a direct quote:

In theory, there’s a much better way to answer these kinds of questions.

- Use an LLM to extract knowledge about any topics we think a user might be interested in (food, geography, etc.) and store it in a database, knowledge graph, or some other kind of knowledge representation. This is still slow and expensive, but it only needs to be done once rather than every time someone wants to ask a question.

- When someone asks a question, convert it into a database SQL query (or in the case of a knowledge graph, a graph query). This doesn’t necessarily need a big expensive LLM, a smaller one should do fine.

- Run the user’s query against the database to get the results. There are already efficient algorithms for this, no LLM required.

- Optionally, have an LLM present the results to the user in a nice understandable way.
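The four steps quoted above can be sketched with Python's built-in sqlite3 module. This is a minimal sketch under stated assumptions. A hand-written list of facts stands in for what an LLM would extract in step one. A template-based question_to_sql function stands in for the "smaller" LLM the essay mentions in step two. A plain string template replaces the optional LLM in step four. All names and data are hypothetical.

```python
import sqlite3

# Step 1 (done once): stand-in for facts an LLM would extract (hypothetical data).
EXTRACTED_FACTS = [
    ("pizza", "Italy"),
    ("sushi", "Japan"),
    ("tacos", "Mexico"),
]

def build_store(facts):
    """Load the extracted facts into an in-memory SQLite table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE dish (name TEXT PRIMARY KEY, country TEXT)")
    conn.executemany("INSERT INTO dish VALUES (?, ?)", facts)
    conn.commit()
    return conn

# Step 2: turn the question into a parameterized SQL query.
# Hypothetical keyword template standing in for a small LLM.
def question_to_sql(question):
    q = question.lower().rstrip("?").strip()
    prefix, suffix = "which country is ", " from"
    if q.startswith(prefix) and q.endswith(suffix):
        dish = q[len(prefix):-len(suffix)].strip()
        return "SELECT country FROM dish WHERE name = ?", (dish,)
    return None

# Step 3: run the query with ordinary database machinery; no LLM involved.
def run(conn, sql_and_params):
    sql, params = sql_and_params
    return [row[0] for row in conn.execute(sql, params)]

# Step 4 (optional): present the result; a template replaces the LLM here.
def present(question, rows):
    if not rows:
        return "No answer found."
    return f"{question} Answer: {', '.join(rows)}."

def ask(conn, question):
    query = question_to_sql(question)
    if query is None:
        return "Sorry, I can't translate that question."
    return present(question, run(conn, query))

conn = build_store(EXTRACTED_FACTS)
print(ask(conn, "Which country is sushi from?"))
```

The expensive extraction happens once in build_store. Each later question costs only a translation and a database lookup, which is the economy the essay is after. The weak point is the same one AskJeeves had: a question the translator cannot map falls through.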

Like AskJeeves, the idea is a good one. Create a system to take a user’s query and match it to information answering the question. The AskJeeves’ approach embodied what I called rules. As long as one has the rules, the answers can be plugged into a database. A query arrives, looks for the answer, and presents it. Bingo. Happy user with relevant information catapults AskJeeves to the top of a world filled with less elegant solutions.

The question becomes, “Can one represent knowledge in such a way that the information is current, usable, and ‘accurate’ (assuming one can define accurate)?” Knowledge, however, is a slippery fish. Small, well-defined domains chock full of specialist knowledge should be easier to represent. Well, IBM Watson and its adventure in Houston suggest that the method is okay in theory, but it did not work. Larger-scale systems like an old-fashioned Web search engine just used “signals” to produce lists which presumably presented answers. “Clever,” correct? (Sorry, that’s an IBM Almaden bit of humor. I apologize for the inside baseball moment.)

What’s interesting is that youthful laborers in the world of information retrieval are finding themselves arm wrestling with some tough but elusive problems. What is knowledge? The answer, “It depends,” does not provide much help. Where does knowledge originate? The answer, “No one knows for sure,” does not advance the ball downfield either.

Grabbing epistemology by the shoulders and shaking it until an answer comes forth is a tough job. What’s interesting is that those working with large language models are finding themselves caught in a room of mirrors, some intact and some broken. Here’s what TheTemples.org has to say about this imaginary idea:

The myth represented in this Hall tells of the divinity that enters the world of forms fragmenting itself, like a mirror, into countless pieces. Each piece keeps its peculiarity of reflecting the absolute, although it cannot contain the whole any longer.

I have no doubt that a start up with venture funding will solve this problem even though a set cannot contain itself. Get coding now.

Stephen E Arnold, March 14, 2024

AI Limits: The Wind Cannot Hear the Shouting. Sorry.

March 14, 2024

This essay is the work of a dumb dinobaby. No smart software required.

One of my teachers had a quote on the classroom wall. It was, I think, from a British novelist. Here’s what I recall:

Decide on what you think is right and stick to it.

I never understood the statement. In school, I was there to learn. How could I decide whether what I was reading was correct? Making a decision about what I thought seemed foolish because I was uninformed. The notion of “stick” is interesting and also a little crazy. My family was going to move to Brazil, and I knew that what I did in the Midwest in the 1950s would have to change. For one thing, we had electricity. The town to which we were relocating had electricity only a few hours each day. Change was necessary. Even as a young sprout, I understood that trying to prevent something required more than talk, writing a Letter to the Editor, or getting a petition signed.

I thought about this crazy quote as soon as I read “AI Bioweapons? Scientists Agree to Policies to Reduce Risk of Human Disaster.” The fear mongering note of the write up’s title intrigued me. Artificial intelligence is in what I would call morph mode. What this means is that getting a fix on what is new and impactful in the field of artificial intelligence is difficult. An electrical engineering publication reported that experts are not sure if what is going on is good or bad.

Shouting into the wind does not work for farmers or AI scientists. Thanks, MSFT Copilot. Busy with security again?

The “AI Bioweapons” essay is leaning into the bad side of the AI parade. The point of the write up is that “over 100 scientists” want to “prevent the creation of AI bioweapons.” The article states:

The agreement, crafted following a 2023 University of Washington summit and published on Friday, doesn’t ban or condemn AI use. Rather, it argues that researchers shouldn’t develop dangerous bioweapons using AI. Such an ask might seem like common sense, but the agreement details guiding principles that could help prevent an accidental DNA disaster.

That sounds good, but is it like the quote about “decide on what you think is right and stick to it”? In a dynamic environment, change appears to accelerate. Toss in technology and the potential for big wins (either financial, professional, or political), and the likelihood of slowing down the rate of change is reduced.

To add some zip to the AI stew, much of the technology required to do some AI fiddling around is available as open source software or low-cost applications and APIs.

I think it is interesting that 100 scientists want to prevent something. The hitch in the git-along is that other countries have scientists who have access to AI research, tools, software, and systems. These scientists may feel as though they are reminding people that doom is (maybe?) just around the corner or in a ruined building in an abandoned town on Route 66.

Here are a few observations about why individuals rally around a cause, which is widely perceived by some of those in the money game as the next big thing:

- The shouters’ perception of their importance makes it an imperative to speak out about danger

- Getting a group of important, smart people to climb on a bandwagon makes the organizers perceive themselves as doing something important and demonstrating their “get it done” mindset

- Publicity is good. It is very good when a speaking engagement, a grant, or consulting gig produces a little extra fame and money, preferably in a combo.

Net net: The wind does not listen to those shouting into it.

Stephen E Arnold, March 14, 2024

AI Deepfakes: Buckle Up. We Are in for a Wild Drifting Event

March 14, 2024

This essay is the work of a dumb dinobaby. No smart software required.

AI deepfakes are testing the uncanny valley, but technology is catching up to make them as good as the real thing. In case you’ve been living under a rock, deepfakes are images, video, and sound clips generated by AI algorithms to mimic real people and places. For example, someone could create a deepfake video of Joe Biden and Donald Trump in a sumo wrestling match. While the idea of the two presidential candidates duking it out on a sumo mat is absurd, the technology is advanced enough to make it look real.

Gizmodo reports the frustrating news that “The AI Deepfakes Problem Is Going To Get Unstoppably Worse”. Bad actors are already using deepfakes to wreak havoc on the world. Federal regulators outlawed robocalls that use AI-generated voices, and OpenAI and Google released watermarks for AI-generated images. These measures aren’t doing anything to curb bad actors.

Which is real? Which is fake? Thanks, MSFT Copilot, the objects almost appear identical. Close enough like some security features. Close enough means good enough, right?

New laws and technology need to be adopted and developed to counter this new age of misinformation. There should be abundant warnings on deepfake videos and sound bites, and service providers should display them too. It is going to take a horrifying event to make AI deepfake detection more prevalent:

"Deepfake detection technology also needs to get a lot better and become much more widespread. Currently, deepfake detection is not 100% accurate for anything, according to Copyleaks CEO Alon Yamin. His company has one of the better tools for detecting AI-generated text, but detecting AI speech and video is another challenge altogether. Deepfake detection is lagging generative AI, and it needs to ramp up, fast.”

Wired Magazine missed an opportunity to make clear that the wizards at Google can sell data and advertising, but the sneaker-wearing marvels cannot manage deepfake adult pictures. Heck, Google cannot manage YouTube videos teaching people how to create deepfakes. My goodness, what happens if one uploads ASCII art of a problematic item to Gemini? One of my team tells me that the Sundar & Prabhakar guard rails don’t work too well in some situations.

Not every deepfake will be as clumsy as the one in which the “to be maybe” future queen of England finds herself ensnared. One can ask Taylor Swift, I assume.

Whitney Grace, March 14, 2024

Can Your Job Be Orchestrated? Yes? Okay, It Will Be Smartified

March 13, 2024

This essay is the work of a dumb dinobaby. No smart software required.

My work career over the last 60 years has been filled with luck. I have been in the right place at the right time. I have been in companies which have been acquired, reassigned, and exposed to opportunities which just seemed to appear. Unlike today’s young college graduate, I never thought once about being able to get a “job.” I just bumbled along. In an interview for something called Singularity, the interviewer asked me, “What’s been the key to your success?” I answered, “Luck.” (Please, keep in mind that the interviewer assumed I was a success, but he had no idea that I did not want to be a success. I just wanted to do interesting work.)

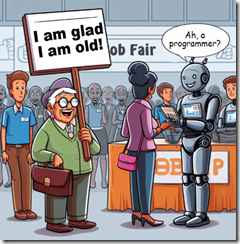

Thanks, MSFT Copilot. Will smart software do your server security? Ho ho ho.

Would I be able to get a job today if I were 20 years old? Believe it or not, I told my son in one of our conversations about smart software: “Probably not.” I thought about this comment when I read the essay “Devin AI Can Write Complete Source Code” today (March 13, 2024). The main idea of the article is that artificial intelligence, properly trained and appropriately resourced, can do what only humans could do in 1966 (when I graduated with a BA degree from a so so university in flyover country). The write up states:

Devin is a Generative AI Coding Assistant developed by Cognition that can write and deploy codes of up to hundreds of lines with just a single prompt. Although there are some similar tools for the same purpose such as Microsoft’s Copilot, Devin is quite the advancement as it not only generates the source code for software or website but it debugs the end-to-end before the final execution.

Let’s assume the write up is mostly accurate. It does not matter. Smart software will be shaped to deliver what I call orchestrated solutions either today, tomorrow or next month. Jobs already nuked by smartification include customer service reps, boilerplate writing jobs (hello, McKinsey), and translation. Some footloose and fancy free gig workers without AI skills may face dilemmas about whether to pursue begging, YouTubing the van life, or doing some spelunking in the Chemical Abstracts database for molecular recipes in a Walmart restroom.

The trajectory of applied AI is reasonably clear to me. Once “programming” gets swept into the Prada bag of AI, what other professions will be smartified? Once again, the likely path is lit by dim but visible Alibaba solar lights for the garden:

- Legal tasks, which are repetitive even though the cases are different; the work flow is something an average law school graduate can master and learn to loathe

- Forensic accounting. Accountants are essentially Groundhog Day people, because every tax cycle is the same old same old

- Routine one-day surgeries. Sorry, dermatologists, cataract shops, and kidney stone crunchers. Robots will do the job and not screw up the DRG codes too much.

- Marketers. I know marketing requires creative thinking. Okay, but based on the Super Bowl ads this year, I think some clients will be willing to give smart software a whirl. Too bad about filming a horse galloping along the beach in Half Moon Bay though. Oh, well.

That’s enough of the professionals who will be affected by orchestrated work flows surfing on smartified software.

Why am I bothering to write down what seems painfully obvious to my research team?

I just wanted another reason to say, “I am glad I am old.” What many young college graduates will discover is that the kind of “luck” I enjoyed over the course of my work career may not be available to them. Smartified software will not only kill some types of work; it will remove the surprise in a serendipitous life journey.

To reiterate my point: I am glad I am old and understand efficiency, smartification, and the value of having been lucky.

Stephen E Arnold, March 13, 2024

AI Bubble Gum Cards

March 13, 2024

This essay is the work of a dumb dinobaby. No smart software required.

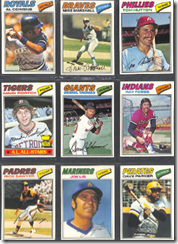

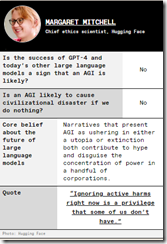

A publication for electrical engineers has created a new mechanism for making AI into a collectible. Navigate to “The AI apocalypse: A Scorecard.” Scroll down to the part of the post which looks like the gems from the 1950s:

The idea is to pick 22 experts and gather their big ideas about AI’s potential to destroy humanity. Here’s one example of an IEEE bubble gum card:

© by the estimable IEEE.

The information on the cards is eclectic. It is clear that some people think smart software will kill me and you. Others are not worried.

My thought is that IEEE should expand upon this concept; for example, here are some bubble gum card ideas:

- Do the NFT play? These might be easier to sell than IEEE memberships and subscriptions to the magazine

- Offer actual, fungible packs of trading cards with throw-back bubble gum

- Create an AI movie about AI experts with opposing ideas doing battle in a video game type world. Zap. You lose, you doubter.

But the old-fashioned approach to selling trading cards to grade school kids won’t work. First, there are very few corner stores near schools in many cities. Second, a special interest group will agitate to block the sale of cards about AI because the inclusion of chewing gum will damage children’s teeth. And, third, kids today want TikToks, at least until the service is banned by a fast-acting group of elected officials.

I think the IEEE will go in a different direction; for example, micro USBs with AI images and source code on them. Or, the IEEE should just advance to the 21st century and start producing short-form AI videos.

The IEEE does have an opportunity. AI collectibles.

Stephen E Arnold, March 13, 2024

Want Clicks: Do Sad, Really, Really Sorrowful

March 13, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The US is a hotbed of negative news. It’s what drives the media and perpetuates the culture of fear that (arguably) has plagued the country since colonial times. US citizens and now the rest of the world are so addicted to bad news that a research team got the brilliant idea to study what words people click. Nieman Lab wrote about the study in, “Negative Words In News Headlines Generate More Clicks-But Sad Words Are More Effective Than Angry Or Scary Ones.”

Thanks, MSFT Copilot. One of Redmond’s security professionals I surmise?

Negative words are prevalent in headlines because they sell clicks. The journal Nature Human Behaviour published a study called “Negativity Drives Online News Consumption.” The study analyzed the effect of negative and emotional words on news consumption, and the research team discovered that negativity increased clickability. These findings also confirm the well-documented human tendency to seek out negative information.
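Studies of this kind commonly score headlines by counting words from emotion dictionaries and then relating those scores to click-through rates. The sketch below shows only the scoring half of that general technique, with a tiny, hypothetical word list. It is not the study’s actual lexicon or statistical model. The names LEXICON and score_headline are assumptions for illustration.

```python
import re
from collections import Counter

# Hypothetical mini-lexicon; real studies use large, validated dictionaries.
LEXICON = {
    "negative": {"crisis", "dies", "loss", "fails", "grief", "attack"},
    "positive": {"wins", "hope", "joy", "success"},
    "sadness": {"dies", "loss", "grief"},
}

def score_headline(headline):
    """Return the share of the headline's words that fall in each category."""
    words = re.findall(r"[a-z']+", headline.lower())
    if not words:
        return {cat: 0.0 for cat in LEXICON}
    counts = Counter()
    for word in words:
        for cat, vocab in LEXICON.items():
            if word in vocab:
                counts[cat] += 1
    return {cat: counts[cat] / len(words) for cat in LEXICON}

print(score_headline("Town mourns loss as mayor dies"))
```

A word can count toward more than one category, as "loss" does here for both negative and sadness. That overlap is why a study can ask whether sad words behave differently from negative words in general.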

The pull of negativity coincides with humanity’s instinct to be vigilant about any danger and avoid it. While humans instinctually gravitate toward negative headlines, certain negative words are more popular than others. Humans apparently are driven to click on sadness-related words and to avoid anything resembling joy or fear, while angry words have no effect. It all goes back to survival:

“And if we are to believe “Bad is stronger than good” derives from evolutionary psychology — that it arose as a useful heuristic to detect threats in our environment — why would fear-related words reduce likelihood to click? (The authors hypothesize that fear and anger might be more important in generating sharing behavior — which is public-facing — than clicks, which are private.)

In any event, this study puts some hard numbers to what, in most newsrooms, has been more of an editorial hunch: Readers are more drawn to negativity than to positivity. But thankfully, the effect size is small — and I’d wager that it’d be even smaller for any outlet that decided to lean too far in one direction or the other.”

It could also be the result of a strict diet of danger-filled media.

Whitney Grace, March 13, 2024