Blue-Chip Consulting Firm Needs Lawyers and Luck

May 15, 2024

McKinsey’s blue chip consultants continue their fancy dancing to explain away an itsy bitsy problem: ruined lives and run-of-the-mill deaths from drug overdoses. The International Business Times reminds us, “McKinsey Under Criminal Investigation Over Alleged Role in Fueling Opioid Epidemic.” The investigation, begun before the pandemic, continues to advance at the glacial pace of justice. Journalist Kiran Tom Sajan writes:

“Global consulting firm McKinsey & Company is under a criminal investigation by the U.S. attorneys’ offices in Massachusetts and the Western District of Virginia over its alleged involvement in fueling the opioid epidemic. The Federal prosecutors, along with the Justice Department’s civil division in Washington, are specifically examining whether the consulting firm participated in a criminal conspiracy by providing advice to Purdue Pharma and other pharmaceutical companies on marketing tactics aimed at increasing sales of prescription painkillers. Purdue is the manufacturer of OxyContin, one of the painkillers that allegedly contributed to widespread addiction and fatal overdoses. Since 2021, McKinsey has reached settlements of approximately $1 billion to resolve investigations and legal actions into its collaboration with opioid manufacturers, primarily Purdue. The company allegedly advised Purdue to intensify its marketing of the drug amid the opioid epidemic, which has resulted in the deaths of hundreds of thousands of Americans. McKinsey has not admitted any wrongdoing.”

Of course not. We learn McKinsey raked in about $86 million working for Purdue, most of it since the drug firm’s 2007 guilty plea. Sajan notes the investigations do not stop with the question of fueling the epidemic: The Justice Department is also considering whether McKinsey obstructed justice when it fired two incautious partners—they were caught communicating about the destruction of related documents. It is also examining whether the firm engaged in healthcare fraud when it helped Purdue and other opioid sellers make fraudulent Medicare claims. Will McKinsey’s recent settlement with insurance companies lend fuel to that dumpster fire? Will Lady Luck kick her opioid addiction and embrace those McKinsey professionals? Maybe.

Cynthia Murrell, May 15, 2024

Google Lessons in Management: Motivating Some, Demotivating Others

May 14, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I spotted an interesting comment in “Google Workers Complain of ‘Decline in Morale’ as CEO Sundar Pichai Grilled over Raises, Layoffs: ‘Increased Distrust.’” Here’s the passage:

Last month, the company fired 200 more workers, aside from the roughly 50 staffers involved in the protests, and shifted jobs abroad to Mexico and India.

I paired this Xhitter item with “Google Employees Question Execs over Decline in Morale after Blowout Earnings.” That write up asserted:

At an all-hands meeting last week, Google employees questioned leadership about cost cuts, layoffs and “morale” issues following the company’s better-than-expected first-quarter earnings report. CEO Sundar Pichai and CFO Ruth Porat said the company will likely have fewer layoffs in the second half of 2024.

Poor, poor Googzilla. I think the fearsome alleged monopolist could lose a few pounds. What do you think? Thanks, MSFT Copilot good enough work just like some security models we know and love.

Not no layoffs. Just “fewer layoffs.” Okay, that’s a motivator.

The estimable “real” news service stated:

Alphabet’s top leadership has been on the defensive for the past few years, as vocal staffers have railed about post-pandemic return-to-office mandates, the company’s cloud contracts with the military, fewer perks and an extended stretch of layoffs — totaling more than 12,000 last year — along with other cost cuts that began when the economy turned in 2022. Employees have also complained about a lack of trust and demands that they work on tighter deadlines with fewer resources and diminished opportunities for internal advancement.

What’s wrong with this management method? The answer: Absolutely nothing. The write up included this bit of information:

She [Ruth Porat, Google CFO, who is quitting the volleyball and foosball facility] also took the rare step of admitting to leadership’s mistakes in its prior handling of investments. “The problem is a couple of years ago — two years ago, to be precise — we actually got that upside down and expenses started growing faster than revenues,” said Porat, who announced nearly a year ago [in 2023] that she would be stepping down from the CFO position but hasn’t yet vacated the office. “The problem with that is it’s not sustainable.”

Ever tactful, Sundar Pichai (the straight man in the Sundar & Prabhakar Comedy Team) is quoted as saying in silky tones:

“I think you almost set the record for the longest TGIF answer,” he said. Google all-hands meetings were originally called TGIFs because they took place on Fridays, but now they can occur on other days of the week. Pichai then joked that leadership should hold a “Finance 101” Ted Talk for employees. With respect to the decline in morale brought up by employees, Pichai said “leadership has a lot of responsibility here,” adding that “it’s an iterative process.”

That’s a demonstration of tactful high school science club management-speak, in my opinion. To emphasize the future opportunities for the world’s smartest people, he allegedly said, according to the write up:

Pichai said the company is “working through a long period of transition as a company” which includes cutting expenses and “driving efficiencies.” Regarding the latter point, he said, “We want to do this forever.” [Editor note: Emphasis added]

Forever is a long, long time, isn’t it?

Poor, addled Googzilla. Litigation to the left, litigation to the right. Grousing world’s smartest employees. A legacy of baby making in the legal department. Apple apparently falling in lust with OpenAI. Amazon and its pesky Yellow Pages approach to advertising.

The sky is not falling, but there are some dark clouds overhead. And, speaking of overhead, is Google ever going to be able to control its costs, pay off its technical debt, write checks to the governments when the firm is unjustly found guilty of assorted transgressions?

For now, yes. Forever? Sure, why not?

Stephen E Arnold, May 14, 2024

AdVon: Why So Much Traction and Angst?

May 14, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

AdVon. AdVon. AdVon. Okay, the company is in the news. Consider this write up: “Meet AdVon, the AI-Powered Content Monster Infecting the Media Industry.” So why meet AdVon? The subtitle explains:

Remember that AI company behind Sports Illustrated’s fake writers? We did some digging — and it’s got tendrils into other surprisingly prominent publications.

Let’s consider the question: Why is AdVon getting traction among “prominent publications” or any other outfit wanting content? The answer is not far to seek: Cutting costs, doing more with less, getting more clicks, getting more money. This is not a multiple choice test in a junior college business class. This is common sense. Smart software makes it possible for those with some skill in the alleged art of prompt crafting and automation to sell “stories” to publishers for less than those publishers can produce the stories themselves.

The future continues to arrive. Here smart software is saying “Hasta la vista” to the human information generator. The humanoid looks very sad. Neither the AI software nor its owner cares. Revenue and profit are more important as long as the top dogs get paid big bucks. Thanks, MSFT Copilot. Working on your security systems or polishing the AI today?

Let’s look at the cited article’s peregrination to the obvious: AI can reduce costs of “publishing”. Plus, as AI gets more refined, the publications themselves can be replaced with scripts.

The write up says:

Basically, AdVon engages in what Google calls “site reputation abuse”: it strikes deals with publishers in which it provides huge numbers of extremely low-quality product reviews — often for surprisingly prominent publications — intended to pull in traffic from people Googling things like “best ab roller.” The idea seems to be that these visitors will be fooled into thinking the recommendations were made by the publication’s actual journalists and click one of the articles’ affiliate links, kicking back a little money if they make a purchase. It’s a practice that blurs the line between journalism and advertising to the breaking point, makes the web worse for everybody, and renders basic questions like “is this writer a real person?” fuzzier and fuzzier.

Okay. So what?

In spite of the article being labeled as “AI” in AdVon’s CMS, the Outside Inc spokesperson said the company had no knowledge of the use of AI by AdVon — seemingly contradicting AdVon’s claim that automation was only used with publishers’ knowledge.

Okay, corner cutting as part of AdVon’s business model. What about the “minimum viable product” or “good enough” approach to everything from self driving auto baloney to Boeing aircraft doors? AI use is somehow exempt from what is the current business practice. Major academic figures take short cuts. Now an outfit with some AI skills is supposed to operate like a hybrid of Joan of Arc and Mother Teresa? Sure.

The write up states:

In fact, it seems that many products only appear in AdVon’s reviews in the first place because their sellers paid AdVon for the publicity. That’s because the founding duo behind AdVon, CEO Ben Faw and president Eric Spurling, also quietly operate another company called SellerRocket, which charges the sellers of Amazon products for coverage in the same publications where AdVon publishes product reviews.

To me, AdVon is using a variant of the Google type of online advertising concept. The bar room door swings both ways. The customer pays to enter and the customer pays to leave. Am I surprised? Nope. Should anyone be? How about a government consumer protection watch dog? Tip: Don’t hold your breath. New York City tested a chatbot that provided information that violated city laws.

The write up concludes:

At its worst, AI lets unscrupulous profiteers pollute the internet with low-quality work produced at unprecedented scale. It’s a phenomenon which — if platforms like Google and Facebook can’t figure out how to separate the wheat from the chaff — threatens to flood the whole web in an unstoppable deluge of spam. In other words, it’s not surprising to see a company like AdVon turn to AI as a mechanism to churn out lousy content while cutting loose actual writers. But watching trusted publications help distribute that chum is a unique tragedy of the AI era.

The kicker is that the company owning the publication “exposing” AdVon used AdVon.

Let me offer several observations:

- The research reveals what will become an increasingly widespread business practice. But the practice of using AI to generate baloney and spam variants is not the future. It is now.

- The demand for what appears to be old fashioned information generation is high. The cost of producing this type of information is going to force those who want to generate information to take short cuts. (How do I know? How about the president of Stanford University who took short cuts. That’s how. When a university president muddles forward for years and gets caught by accident, what are students learning? My answer: Cheat better than that.)

- AI diffusion is like gerbils. First, you have a couple of cute gerbils in your room. As a nine year old, you think those gerbils are cute. Then you have more gerbils. What do you do? You get rid of the gerbils in your house. What about the gerbils? Yeah, they are still out there. One can see gerbils; it is more difficult to see the AI gerbils. The fix is not the plastic bag filled with gerbils in the garbage can. The AI gerbils are relentless.

Net net: Adapt and accept that AI is here, reproducing rapidly, and evolving. The future means “adapt.” One suggestion: Hire McKinsey & Co. to help your firm make tough decisions. That sometimes works.

Stephen E Arnold, May 14, 2024

AI and Doctors: Close Enough for Horseshoes and More Time for Golf

May 14, 2024

Burnout is a growing pandemic for all industries, but it’s extremely high in medical professions. Doctors and other medical professionals are at incredibly high risk of burnout. The daily stressors of treating patients, paperwork, dealing with insurance agencies, resource limitations, etc. are worsening. Stat News reports that AI algorithms offer a helpful solution for medical professionals, but there are still bugs in the system: “Generative AI Is Supposed To Save Doctors From Burnout. New Data Show It Needs More Training.”

Clinical notes are important for patient care and ongoing treatment. The downside of clinical notes is that it takes a long time to complete the task. Academic hospitals became training grounds for generative AI usage in the medical fields. Generative AI is a tool with a lot of potential, but it’s proven many times that it still needs a lot of work. The large language models for generative AI in medical documentation proved lacking. Is anyone really surprised? Apparently they were:

“Just in the past week, a study at the University of California, San Diego found that use of an LLM to reply to patient messages did not save clinicians time; another study at Mount Sinai found that popular LLMs are lousy at mapping patients’ illnesses to diagnostic codes; and still another study at Mass General Brigham found that an LLM made safety errors in responding to simulated questions from cancer patients. One reply was potentially lethal.”

Why doesn’t common sense prevail in these cases? Yes, generative AI should be tested so the data will back up the logical outcome. It’s called the scientific method for a reason. Why does everyone act surprised, however? Stop dwelling on the obvious shortcomings of lackluster AI tools and focus on making them better. Use these tests to find the bugs, fix them, and turn the tools into practical applications that work. Is that so hard to accomplish?

Whitney Grace, May 14, 2024

Wanna Be Happy? Use the Internet

May 13, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

The glory days of the Internet have faded. Social media, AI-generated baloney, and brain numbing TikTok-esque short videos — Outstanding ways to be happy. What about endless online scams, phishing, and smishing, deep fake voices to grandma from grandchildren needing money — Yes, guaranteed uplifts to sagging spirits.

The idea of a payoff in a coffee shop is silly. Who would compromise academic standards for a latte and a pile of cash? Absolutely no one involved in academic pursuits. Good enough, MSFT Copilot. Good enough.

When I read two of the “real” news stories about how the Internet manufactures happiness, I asked myself, “Exactly what’s with this study?” The PR push to say happy things about online reminded me of the OII or Oxford Internet Institute and some of its other cheerleading. And what is the OII? It is an outfit which receives some university support, funds from private industry, and foundation cash; for example, the Shirley Institute.

In my opinion, it is often difficult to figure out if the “research” is wonky due to its methodology, the desire to keep some sources of funding writing checks, or a nifty way to influence policies in the UK and elsewhere. The magic of the “Oxford” brand gives the outfit some cachet for those who want to collect conference name tags to bedeck their office coat hangers.

The OII is back in the content marketing game. I read the BBC’s “Internet Access Linked to Higher Wellbeing, Study Finds” and the Guardian’s “Internet Use Is Associated with Greater Wellbeing, Global Study Finds.” Both articles are generated from the same PR-type verbiage. But the weirdness of the assertion is undermined by this statement from the BBC’s rewrite of the OII’s PR:

The study was not able to prove cause and effect, but the team found measures of life satisfaction were 8.5% higher for those who had internet access. Nor did the study look at the length of time people spent using the internet or what they used it for, while some factors that could explain associations may not have been considered.

The Oxford brand and the big numbers about a massive sample size cannot hide one awkward fact: There is little evidence that happiness drips from Internet use. Convenience? Yep. Entertainment? Yep. Crime? Yep. Self-harm, drug use or experimentation, meme amplification. Yep, yep, yep.

Several questions arise:

- Why is the message “online is good” suddenly big news? If anything, the idea runs counter to the significant efforts to contain access to potentially harmful online content in the UK and elsewhere. Gee, I wonder if the companies facing some type of sanctions are helping out the good old OII?

- What’s up with Oxford University itself? Doesn’t it have more substantive research to publicize? Perhaps Oxford should emulate the “Naked Scientists” podcast or lobby to get Melvyn Bragg to report about more factual matters? Does Oxford have an identity crisis?

- And the BBC and the Guardian! Have the editors lost the plot? Don’t these professionals have first hand knowledge about the impact of online on children and young adults? Don’t they try to talk to their kids or grandkids at the dinner table when the youthful progeny of “real” news people are using their mobile phones?

I like facts which push back against received assumptions. But the claim that online is helping out those who use it needs a bit more precision, clearer thinking, and less tenuous cause-and-effect hoo-hah in my opinion.

Stephen E Arnold, May 13, 2024

Apple and a Recycled Carnival Act: Woo Woo New New!

May 13, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

A long time ago, for a project related to a new product which was cratering, one person on my team suggested I read a book by James B. Twitchell. Carnival Culture: The Trashing of Taste in America provided a broad context, but the information in the analysis of taste was not going to save the enterprise software I was supposed to analyze. In general, I suggest that investment outfits with an interest in online information give me a call before writing checks to the tale-spinning entrepreneurs.

A small creative spark getting smashed in an industrial press. I like the eyes. The future of humans in Apple’s understanding of the American datasphere. Wow, look at those eyes. I can hear the squeals of pain, can’t you?

Dr. Twitchell did a good job, in my opinion, of making clear that some cultural actions are larger than a single promotion. Popular movies and people like P.T. Barnum (the circus guy) explain facets of America. These two examples are not just entertaining; they are making clear what revs the engines of the US of A.

I read “Hating Apple Goes Mainstream” and realized that Apple is doing the marketing for which it is famous. The roll out of the iPad had a high resolution, big money advertisement. If you are around young children, squishy plastic toys are often in small fingers. Squeeze the toy and the eyes bulge. In the image above, a child’s toy is smashed in what seems to me to be the business end of an industrial press manufactured by MSE Technology Ltd in Turkey.

Thanks, MSFT Copilot. Glad you had time to do this art. I know you are busy on security or is it AI or is AI security or security AI? I get so confused.

The Apple iPad has been a bit of an odd duck. It is a good substitute for crappy Kindle-type readers. We have a couple, but they don’t get much use. Everything is a pain for me because the super duper Apple technology does not detect my fingers. I bought the gizmos so people could review the PowerPoint slides for one of my lectures at a conference. I also experimented with the iPad as a teleprompter. After a couple of tests, getting content on the device, controlling it, and fiddling so the darned thing knew I was poking the screen to cause an action — I put the devices on the shelf.

Forget the specific product. Let’s look at the cited write up’s comments about the Apple “carnival culture” advertisement. The write up states:

Apple has lost its presumption of good faith over the last five years with an ever-larger group of people, and now we’ve reached a tipping point. A year ago, I’m sure this awful ad would have gotten push back, but I’m also sure we’d have heard more “it’s not that big of a deal” and “what Apple really meant to say was…” from the stalwart Apple apologists the company has been able to count on for decades. But it’s awfully quiet on the fan-boy front.

I think this means the attempt to sell sent weird messages about a company people once loved. What’s going on, in my opinion, is that Apple is explaining what technology is going to do to people: Those who once used software to create words, images, and data exhaust will be secondary to the cosmetics of technology.

In short, people and their tools will be replaced by a gizmo or gizmos that are similar to bright lights and circus posters. What do these artifacts tell us? My take on the Apple iPad M4 super duper creative juicer is, at this time:

- So what? I have an M2 Air, and it does what I hoped the two touch insensitive iPads would do.

- Why create a form factor that is likely to get crushed when I toss my laptop bag on a security screening belt? Apple’s products are, in my view, designed to be landfill residents.

- Apple knows in its subconscious corporate culture heat sink that smart software, smart services, and dumb users are the future. The wonky expensive high-resolution advertisement shouts, “We know you are going to be out of a job. You will be like the yellow squishy toy.”

The message Apple is sending is that innovation has moved from utility to entertainment to the carnival sideshow. Put on your clown noses, people. Buy Apple.

Stephen E Arnold, May 13, 2024

Open Source and Open Doors. Bad Actors, Come On In

May 13, 2024

Open source code is awesome, because it allows developers to create projects without paying proprietary fees and it inspires innovation. Open source code, however, has problems, especially when bad actors know how to exploit it. OpenSSF shares how a recent takeover attempt could have left many people vulnerable: “Open Source Security (OpenSSF) And OpenJS Foundations Issue Alert For Social Engineering Takeovers Of Open Source Projects.”

The OpenJS Foundation hosts JavaScript projects used by billions of websites. The foundation recently discovered a social engineering takeover attempt similar to the XZ Utils backdoor incident. The OpenJS Foundation and the Open Source Security Foundation are alerting developers about the threat.

The OpenJS Foundation received a series of suspicious emails from various GitHub-associated email addresses that urged project administrators to update their JavaScript. The update description was vague, and the senders wanted the administrators to grant them access to the projects. The scam emails are part of the endless bag of tricks black hat hackers use to manipulate administrators so they can access source code.

The foundations are warning administrators about the scams and sharing tips about how to recognize scams. Bad actors exploit open source developers:

“These social engineering attacks are exploiting the sense of duty that maintainers have with their project and community in order to manipulate them. Pay attention to how interactions make you feel. Interactions that create self-doubt, feelings of inadequacy, of not doing enough for the project, etc. might be part of a social engineering attack.

Social engineering attacks like the ones we have witnessed with XZ/liblzma were successfully averted by the OpenJS community. These types of attacks are difficult to detect or protect against programmatically as they prey on a violation of trust through social engineering. In the short term, clearly and transparently sharing suspicious activity like those we mentioned above will help other communities stay vigilant. Ensuring our maintainers are well supported is the primary deterrent we have against these social engineering attacks.”

These scams aren’t surprising. There need to be more organizations like OpenJS and Open Source Security, because their intentions are to protect the common good. They’re on the side of the little person compared to politicians and corporations.

Whitney Grace, May 13, 2024

Will Google Behave Like Telegram?

May 10, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I posted a short item on LinkedIn about Telegram’s blocking of Ukraine’s information piped into Russia via Telegram. I pointed out that Pavel Durov, the founder of VK and Telegram, told Tucker Carlson that he was into “free speech.” A few weeks after the interview, Telegram blocked the data from Ukraine for Russia’s Telegram users. One reason given, as I recall, was that Apple was unhappy. Telegram rolled over and complied with a request that seems to benefit Russia more than Apple. But that’s just my opinion. One of my team verified the incident, the block, with a Ukrainian interacting with senior professionals in Ukraine. Not surprisingly, Ukraine’s use of Telegram is under advisement. I think that means, “Find another method of sending encrypted messages and use that.” Compromised communications can translate to “Rest in Peace” in real time.

A Hong Kong rock band plays a cover of the popular hit Glory to Hong Kong. The bats in the sky are similar to those consumed in Shanghai during a bat festival. Thanks, MSFT Copilot. What are you working on today? Security or AI?

I read “Hong Kong Puts Google in Hot Seat With Ban on Protest Song.” That news story states:

The Court of Appeal on Wednesday approved the government’s application for an injunction order to prevent anyone from playing Glory to Hong Kong with seditious intent. While the city has a new security law to punish that crime, the judgment shifted responsibility onto the platforms, adding a new danger that just hosting the track could expose companies to legal risks. In granting the injunction, judges said prosecuting individual offenders wasn’t enough to tackle the “acute criminal problems.”

What’s Google got to do with that toe tapper Glory to Hong Kong?

The write up says:

The injunction “places Google, media platforms and other social media companies in a difficult position: Essentially pitting values such as free speech in direct conflict with legal obligations,” said Ryan Neelam, program director at the Lowy Institute and former Australian diplomat to Hong Kong and Macau. “It will further the broader chilling effect if foreign tech majors do comply.”

The question is, “Roll over as Telegram allegedly has, or fight Hong Kong and by extension everyone’s favorite streaming video influencer, China?” What will Google do? Scrub Glory to Hong Kong, number one with a bullet on someone’s hit parade, I assume?

My guess is that Google will go to court, appeal, and then take appropriate action to preserve whatever revenue is at stake. I do know The Sundar & Prabhakar Comedy Show will not use Glory to Hong Kong as its theme for its 2024 review.

Stephen E Arnold, May 10, 2024

More on Intelligence and Social Media: Birds Versus Humans

May 10, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

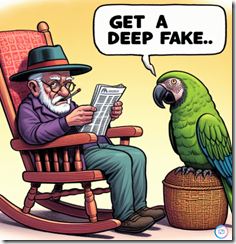

I have zero clue if the two stories about which I will write a short essay are accurate. I don’t care because the two news items are in delicious tension. Do you feel the frisson? The first story is “Parrots in Captivity Seem to Enjoy Video-Chatting with Their Friends on Messenger.” The core of the story strikes me as:

A new (very small) study led by researchers at the University of Glasgow and Northeastern University compared parrots’ responses when given the option to video chat with other birds via Meta’s Messenger versus watching pre-recorded videos. And it seems they’ve got a preference for real-time conversations.

Thanks, MSFT Copilot. Is your security a type of deep fake?

Let me make this somewhat over-burdened sentence more direct. Birds like to talk to other birds, live, and in real time. The bird is not keen on the type of video humans gobble up.

Now the second story. It has the click–licious headline “Gen Z Mostly Doesn’t Care If Influencers Are Actual Humans, New Study Shows.” The main idea of this “real” news story is, in my opinion:

The report [from Sprout Social] notes that 46 percent of Gen Z respondents, specifically, said they would be more interested in a brand that worked with an influencer generated with AI.

The idea is that humans are okay with fake video which aims to influence them through fake humans.

My atrophied dinobaby brain interprets the information in each cited article this way: Birds prefer to interact with real birds. Humans are okay with fake humans. I will have to recalibrate my understanding of the bird brain.

Let’s assume both write ups are chock full of statistically-valid data. The assorted data processes conformed to the requirements of Statistics 101. The researchers suggest humans are okay with fake data. Birds, specifically parrots, prefer the real doo-dah.

Observations:

- Humans may not be able to differentiate real from fake. When presented with fakes, humans may prefer the bogus.

- I may need to reassess the meaning of the phrase “bird brain.”

- Researchers demonstrate the results of years of study in these findings.

Net net: The chills I referenced in the first paragraph of this essay I now recognize as fear.

Stephen E Arnold, May 10, 2024

Google Search Is Broken

May 10, 2024

ChatGPT and other generative AI engines have screwed up search engines, including the all-powerful Google. The Blaze article “Why Google Search Is Broken” explains why Internet search is broken and what caused it. The Internet is full of information, and the best way to get noticed in search results is using SEO. A black hat technique (it will probably be considered old school in the near future) to manipulate search results is to litter a post with keywords, aka “keyword stuffing.”

ChatGPT users realized that it’s a fantastic tool for SEO, because they tell the AI algorithm to draft a post with a specific keyword and it generates a decent one. Google’s search algorithm then reads that post and pushes it to the top of search results. ChatGPT was designed to read and learn language the same way as Google: skim the Internet, scoop up information from Web sites, and then use it to teach the algorithm. This threatens Google’s search profit margins and Alphabet Inc. doesn’t like that:

“By and large, people don’t want to read AI-generated content, no matter how accurate it is. But the trouble for Google is that it can’t reliably detect and filter AI-generated content. I’ve used several AI detection apps, and they are 50% accurate at best. Google’s brain trust can probably do a much better job, but even then, it’s computationally expensive, and even the mighty Google can’t analyze every single page on the web, so the company must find workarounds.

This past fall, Google rolled out its Helpful Content Update, in which Google started to strongly emphasize sites based on user-generated content in search results, such as forums. The site that received the most notable boost in search rankings was Reddit. Meanwhile, many independent bloggers saw their traffic crash, whether or not they used AI.”

Google wants to save money by offloading AI detection/monitoring to forum moderators who usually aren’t paid. Unfortunately SEO experts figured out Google’s new trick and are now spamming user-content driven Websites. Google recently signed a deal with Reddit to acquire its user data to train its AI project, Gemini.

Google hates AI generated SEO and people who game its search algorithms. Google doesn’t have the resources to detect all the SEO experts, but when they are found Google exacts vengeance with deindexing and making better tools. Google released a new update to its spam policies to remove low-quality, unoriginal content made to abuse its search algorithm. The overall goal is to remove AI-generated sites from search results.

If you read between the lines, Google doesn’t want to lose more revenue and is calling out bad actors.

Whitney Grace, May 10, 2024