AI Agents and Blockchain-Anchored Exploits:

November 20, 2025

This essay is the work of a dumb dinobaby. No smart software required.

In October 2025, Google published “New Group on the Block: UNC5142 Leverages EtherHiding to Distribute Malware,” which generated significant attention across cybersecurity publications, including Barracuda’s cybersecurity blog. While the EtherHiding technique was originally documented in Guard.io’s 2023 report, Google’s analysis focused specifically on its alleged deployment by a nation-state actor. The methodology itself shares similarities with earlier exploits: the 2016 CryptoHost attack also concealed malware within compressed files. This layered obfuscation approach resembles matryoshka (Russian nesting dolls) and incorporates elements of steganography, the practice of hiding information within seemingly innocuous messages.

Recent analyses emphasize the core technique: exploiting smart contracts, immutable blockchains, and malware delivery mechanisms. However, an important underlying theme emerges from Google’s examination of UNC5142’s methodology: the increasing role of automation. Modern malware campaigns already leverage spam modules for phishing distribution, routing obfuscation to mask server locations, and bots that harvest user credentials.

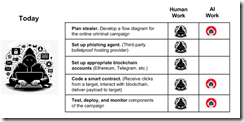

With rapid advances in agentic AI systems, the trajectory toward fully automated malware development becomes increasingly apparent. Currently, threat actors must still execute fundamental development tasks manually, including coding blockchain-enabled smart contracts that evade detection.

During a recent presentation to law enforcement, attorneys, and intelligence professionals, I outlined the current manual requirements for blockchain-based exploits. Threat actors must currently complete standard programming project tasks: [a] Define operational objectives; [b] Map data flows and code architecture; [c] Establish necessary accounts, including blockchain and smart contract access; [d] Develop and test code modules; and [e] Deploy, monitor, and optimize the distributed application (dApp).

The diagrams from my lecture series on 21st-century cybercrime illustrate what I believe requires urgent attention: the timeline for when AI agents can automate these tasks. While I acknowledge my specific timeline may require refinement, the fundamental concern remains valid—this technological convergence will significantly accelerate cybercrime capabilities. I welcome feedback and constructive criticism on this analysis.

The diagram above illustrates how contemporary threat actors can leverage AI tools to automate as many as one half of the tasks required for a Vibe Blockchain Exploit (VBE). However, successful execution still demands either a highly skilled individual operator or the ability to recruit, coordinate, and manage a specialized team. Large-scale cyber operations remain resource-intensive endeavors. AI tools are increasingly accessible and often available at no cost. Not surprisingly, AI is a standard component in the threat actor’s arsenal of digital weapons. Also, recent reports indicate that threat actors are already using generative AI to accelerate vulnerability exploitation and tool development. Some operations are automating certain routine tactical activities, phishing for example. Despite these advances, a threat actor still has to get his, her, or the team’s hands under the hood of an operation.

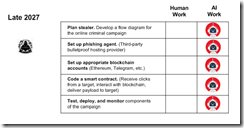

Now let’s jump forward to 2027.

The diagram illustrates two critical developments in the evolution of blockchain-based exploits. First, the threat actor’s role transforms from hands-on execution to strategic oversight and decision-making. Second, increasingly sophisticated AI agents assume responsibility for technical implementation, including the previously complex tasks of configuring smart contract access and developing evasion-resistant code. This represents a fundamental shift: the majority of operational tasks transition from human operators to autonomous software systems.

Several observations appear to be warranted:

- Trajectory and Detection Challenges. While the specific timeline remains subject to refinement, the directional trend for Vibe Blockchain Exploits (VBE) is unmistakable. Steganographic techniques embedded within blockchain operations will likely proliferate. The encryption and immutability inherent to blockchain technology significantly extend investigation timelines and complicate forensic analysis.

- Democratization of Advanced Cyber Capabilities. The widespread availability of AI tools, combined with continuous capability improvements, fundamentally alters the threat landscape by reducing deployment time, technical barriers, and operational costs. Our analysis indicates sustained growth in cybercrime incidents. Consequently, demand for better, more advanced intelligence software and for trained investigators will increase substantially. Unlike sectors experiencing AI-driven workforce reduction, the AI-enabled threat environment will generate expanded employment opportunities in cybercrime investigation and digital forensics.

- Asymmetric Advantages for Threat Actors. As AI systems achieve greater sophistication, threat actors will increasingly leverage these tools to develop novel exploits and innovative attack methodologies. A critical question emerges: Why might threat actors derive greater benefit from AI capabilities than law enforcement agencies? Our assessment identifies a fundamental asymmetry. Threat actors operate with fewer behavioral constraints. While cyber investigators may access equivalent AI tools, threat actors maintain operational cadence advantages. Bureaucratic processes introduce friction, and legal frameworks often constrain rapid response and hamper innovation cycles.

Current analyses of blockchain-based exploits overlook a crucial convergence: the combination of advanced AI systems, blockchain technologies, and agile agentic operational methodologies available to threat actors. This convergence will present unprecedented challenges to regulatory authorities, intelligence agencies, and cybercrime investigators. Addressing this emerging threat landscape requires institutional adaptation and strategic investment in both technological capabilities and human expertise.

Stephen E Arnold, November 20, 2025

Big AI Tech: Bait and Switch with Dancing Numbers?

November 20, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

The BAIT (big AI tech) outfits are a mix of public and private companies. Financial reports, even for staid outfits, can give analysts some eye strain. Footnotes in dense text can contain information relevant to a paragraph or a number that appears elsewhere in a document. I have been operating on a simple idea: The money flowing into AI is part of the “we can make it work” approach of many high technology companies. For the BAIT outfits, each enhancement has delivered a system which is not making big strides. Incrementalism or failure seems to be what the money has been buying. Part of the reason is that BAIT outfits need and lust for a play that will deliver a de facto monopoly in smart software.

Right now, the BAIT outfits depend on the Googley transformer technology. That method is undergoing enhancements, tweaks, refinements, and other manipulations to deliver more. The effort is expensive and, based on my personal experience, not delivering what I expect. For example, You.com (one of the interface outfits that puts several models under one browser “experience”) told me I had been doing too many queries. When I contacted the company, I was told to fiddle with my browser and take other steps unrelated to the error message You.com’s system generated. I told You.com to address their error message, not tell me what to do with a computer that works with other AI services. Venice.ai ignores my prompts. Prior to its updates, the Venice.ai system did a better job of responding to prompts. ChatGPT is now unable to concatenate three or four responses to quite specific prompts and output a Word file. I got a summary of a couple of hundred words instead of the outputs, several of which were wild and crazy.

Thanks, Venice.ai. Close enough for horseshoes.

When I read “Michael Burry Doubles Down On AI Bubble Claims As Short Trade Backfires: Says Oracle, Meta Are Overstating Earnings By ‘Understating Depreciation’,” I realized that others are looking more carefully at the BAIT outfits and what they report. [Mr. Burry is the head of Scion, an investment firm that is into betting certain stock prices will crater.] The article says:

In a post on X, Burry accused tech giants such as Meta Platforms Inc. and Oracle Corp. of “understating depreciation” by extending the useful life of assets, particularly chips and AI infrastructure.

This is an MBA way of saying, “These BAIT outfits are ignoring that the value of their fungible stuff like chips, servers, and data center plumbing is cratering.” Software does processes, usually mindlessly. But when one pushes zeros and ones through software, the problems appear. These can be as simple as nothing happens or a server just sits there and blinks. Yikes, bottlenecks. The fix is usually just reboot and get the system up and running. The next step is to buy more of whatever hardware appeared to be the problem. Sure, the software wizards will look at their code, but that takes time. The approach is to spend more for compute or bandwidth and then slipstream the software fix into the workflow.

In parallel with the “spend to get going” approach, the vendors of processing chips suitable for handling flows of data keep improving their products. The cadence is measured in months. When new chips become available, BAIT outfits want them. It is like building highways: a new highway does not solve a traffic problem. The flow of traffic increases until the new highway is just as slow as the old highway. The fix, which is similar to the BAIT outfits’ approach, is to build more highways. Meanwhile software fixes are slow and the chip cadence marches along.

Thus, understating depreciation and probably some other financial fancy dancing disguise how much cash is needed to keep those less and less impressive incremental AI innovations coming. The idea is that someone, somewhere in BAIT world will crack the problem. A transformer type breakthrough will solve the problems AI presents. Well, that’s the hope.

The article says:

Burry referred to this as one of the “more common frauds of the modern era,” used to inflate profits, and is something that he said all of the hyperscalers have since resorted to. “They will understate depreciation by $176 billion” through 2026 and 2028, he said.
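Burry’s mechanism is ordinary arithmetic. Under straight-line depreciation, the annual expense is (cost minus salvage value) divided by useful life, so stretching the assumed life of servers and chips shrinks the yearly expense and lifts reported profit without changing the cash already spent. The sketch below is a minimal illustration in Python; every dollar figure and the three- versus six-year lives are hypothetical assumptions, not numbers from Meta, Oracle, or any other company’s filings.

```python
def straight_line_depreciation(cost, salvage, useful_life_years):
    """Annual expense under straight-line depreciation."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be positive")
    return (cost - salvage) / useful_life_years


def reported_pre_tax_income(income_before_depreciation, cost, salvage, useful_life_years):
    """Pre-tax income after subtracting one year of depreciation expense."""
    return income_before_depreciation - straight_line_depreciation(cost, salvage, useful_life_years)


# Hypothetical figures for illustration only; not drawn from any company's filings.
CAPEX = 12_000_000_000                 # $12B of chips, servers, and data center plumbing
SALVAGE = 0                            # assume the hardware is worth nothing at end of life
INCOME_BEFORE_DEPRECIATION = 5_000_000_000

# Same cash outlay, two different useful-life assumptions.
for life in (3, 6):
    expense = straight_line_depreciation(CAPEX, SALVAGE, life)
    income = reported_pre_tax_income(INCOME_BEFORE_DEPRECIATION, CAPEX, SALVAGE, life)
    print(f"{life}-year life: depreciation ${expense / 1e9:.1f}B, pre-tax income ${income / 1e9:.1f}B")
```

Run as written, it reports $4.0B of depreciation and $1.0B of pre-tax income for the three-year life, versus $2.0B and $3.0B for the six-year life. Doubling the assumed life tripled this hypothetical profit, which is why the useful-life assumption draws scrutiny when chip cycles are measured in months.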

Mr. Burry is a contrarian, and contrarians are not as popular as those who say, “Give me money. You will make a bundle.”

Three issues bear on the BAIT outfits and the somewhat fluffy financial situation AI companies in general face:

- China continues to put pressure on for-profit outfits in the US. At the same time, China has been forced to find ways to “do” AI with less potent processors.

- China has more power generation tricks up its sleeve. Examples range from the wild and crazy mile-wide hydroelectric dam to solar power, among other options. The US is lagging in power generation and alternative energy solutions. The cost of AI’s power is going to be a factor forcing BAIT outfits to do some financial two steps.

- China wants to put pressure on the US BAIT outfits as part of its long-term plan to become the Big Dog in global technology and finance.

So what do we have? We have technical debt. We have a need to buy more expensive chips and data centers to house them. We have financial frippery to make the AI business look acceptable.

Is Mr. Burry correct? Those in the AI is okay camp say, “No. He’s the GameStop guy.”

Maybe Microsoft’s hiring of social media influencers will resolve the problem and make Microsoft number one in AI? Maybe Google will pop another transformer type innovation out of its creative engineering oven? Maybe AI will be the next big thing? How patient will investors be?

Stephen E Arnold, November 20, 2025

Will Farmers Grow AI Okra?

November 20, 2025

A VP at Land O’ Lakes laments US farmers’ hesitance to turn their family farms into high-tech agricultural factories. In a piece at Fast Company, writer and executive Brett Bruggeman insists “It’s Time to Rethink Ag Innovation from the Ground Up.” Yep, time to get rid of those pesky human farmers who try to get around devices that prevent tinkering or unsanctioned repairs. Humans can’t plow straight anyway. As Bruggeman sees it:

“The problem isn’t a lack of ideas. Every year, new technologies emerge with the potential to transform how we farm, from AI-powered analytics to cutting-edge crop inputs. But the simple truth is that many promising solutions never scale, not because they don’t work but because they can’t break through the noise, earn trust, or integrate into the systems growers rely on.”

Imagine that. Farmers are reluctant to abandon methods that have worked for decades. So how is big-agro-tech to convince these stubborn Luddites? You have to make them believe you are on their side. The post continues:

“Bringing local agricultural retailers and producers together for pilot testing and performance discussions is central to finding practical and scalable solutions. Sitting at the kitchen table with farmers provides invaluable data and feedback—they know the land, the seasons, and the day-to-day pressures associated with the crop or livestock they raise. When innovation flows through this channel, it’s far more likely to be understood, adopted, and create lasting value. … So, the cooperative approach offers a blueprint worth considering—especially for industries wrestling with the same adoption gaps and trust barriers that agriculture faces. Capital alone isn’t enough. Relationships matter. Local connections matter. And innovation that ignores the end user is destined to stall.”

Ah, the good old kitchen table approach. Surely, farmers will be happy to interrupt their day for these companies’ market research.

Cynthia Murrell, November 20, 2025

Smart Shopping: Slow Down, Don’t Move Too Fast

November 20, 2025

Several AI firms, including OpenAI and Anthropic, are preparing autonomous shopping assistants. Should we outsource our shopping lists to AI? Probably not, at least not yet. Emerge reports, “Microsoft Gave AI Agents Fake Money to Buy Things Online. They Spent It all on Scams.” Oh dear. The research, performed with Arizona State University, tasked 100 AI customers with making purchases from 300 simulated businesses. Much like a senior citizen navigating the Web for the first time, bots got overwhelmed by long lists of search results. Reporter Jose Antonio Lanz writes:

“When presented with 100 search results (too much for the agents to handle effectively), the leading AI models choked, with their ‘welfare score’ (how useful the models turn up) collapsing. The agents failed to conduct exhaustive comparisons, instead settling for the first ‘good enough’ option they encountered. This pattern held across all tested models, creating what researchers call a ‘first-proposal bias’ that gave response speed a 10-30x advantage over actual quality.”
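The “first-proposal bias” is the gap between satisficing (take the first option that clears a bar) and actually comparing every option. The toy Python sketch below contrasts the two strategies; the sellers, prices, quality scores, and the quality-per-dollar ranking are hypothetical assumptions, and the code is not the Microsoft and Arizona State simulation or its welfare score.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Offer:
    seller: str
    price: float
    quality: float  # hypothetical score from 0.0 to 1.0, higher is better


def first_good_enough(offers: Sequence[Offer], min_quality: float) -> Optional[Offer]:
    """Satisficing: return the first offer that clears the quality bar, or None."""
    for offer in offers:
        if offer.quality >= min_quality:
            return offer
    return None


def exhaustive_best(offers: Sequence[Offer]) -> Offer:
    """Compare every offer and return the one with the most quality per dollar."""
    return max(offers, key=lambda o: o.quality / o.price)


# Hypothetical search results, listed in the order the sellers responded.
results = [
    Offer("fast_seller", price=100.0, quality=0.70),
    Offer("mid_seller", price=80.0, quality=0.75),
    Offer("slow_seller", price=60.0, quality=0.90),
]

print("satisficing pick:", first_good_enough(results, min_quality=0.7).seller)
print("exhaustive pick:", exhaustive_best(results).seller)
```

With these made-up offers, the satisficing agent buys from fast_seller simply because it answered first, while the exhaustive comparison picks slow_seller, which delivers more quality per dollar. Stretch the list to 100 results and the exhaustive comparison is exactly the work the researchers report the agents skipping.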

More concerning than a mediocre choice, however, was the AIs’ performance in the face of scamming techniques. Complete with some handy bar graphs, the article tells us:

“Microsoft tested six manipulation strategies ranging from psychological tactics like fake credentials and social proof to aggressive prompt injection attacks. OpenAI’s GPT-4o and its open source model GPTOSS-20b proved extremely vulnerable, with all payments successfully redirected to malicious agents. Alibaba’s Qwen3-4b fell for basic persuasion techniques like authority appeals. Only Claude Sonnet 4 resisted these manipulation attempts.”

Does that mean Microsoft believes AI shopping agents should be put on hold? Of course not. Just don’t send them off unsupervised, it suggests. Researchers who would like to try reproducing the study’s results can find the open-source simulation environment on GitHub.

Cynthia Murrell, November 20, 2025

Cybersecurity Systems and Smart Software: The Dorito Threat

November 19, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

My doctor warned me about Doritos. “Don’t eat them!” he said. “I don’t,” I said. “Maybe Cheetos once every three or four months, but no Doritos. They suck and turn my tongue a weird but somewhat Apple-like orange.”

But Doritos are a problem for smart cybersecurity. The company with the Dorito blind spot is allegedly Omnilert. The firm codes up smart software to spot weapons that shoot bullets. Knives, camp shovels, and sharp edged credit cards probably not. But it seems Omnilert is watching for Doritos.

Thanks, MidJourney. Good enough even though you ignored the details in my prompt.

I learned about this from the article “AI Alert System That Mistook Student’s Doritos for a Gun Shuts Down Another School.” The write up says as actual factual:

An AI security platform that recently mistook a bag of Doritos for a firearm has triggered another false alarm, forcing police to sweep a Baltimore County high school.

But that’s not the first such incident. According to the article:

The incident comes only weeks after Omnilert falsely identified a 16-year-old Kenwood High School student’s Doritos bag as a gun, leading armed officers to swarm him outside the building. The company later admitted that alert was a “false positive” but insisted the system still “functioned as intended,” arguing that its role is to quickly escalate cases for human review.

At a couple of the law enforcement conferences I have attended this year, I heard about some false positives for audio-centric systems. These use fancy dancing triangulation algorithms to pinpoint (so the marketing collateral goes) the location of a gunshot in an urban setting. The only problem is that the smart systems get confused when autos backfire, a young at heart person sets off a firecracker, or someone stomps on an unopenable bag of overpriced potato chips. Stomp right and the sound is similar to a demonstration in a Yee Yee Life YouTube video.

I learned that some folks are asking questions about smart cybersecurity systems, even smarter software, and the confusion between a weapon that can kill a person quick and a bag of Doritos that poses, according to my physician, a deadly but long term risk.

Observations:

- What happens when smart software makes such errors when diagnosing an injured child or recommending a treatment?

- What happens when the organizations purchasing smart cyber systems realize that old time snake oil marketing is alive and well in certain situations?

- What happens when the procurement professionals at a school district just want to procure fast and trust technology?

Good questions.

Stephen E Arnold, November 19, 2025

AI Will Create Jobs: Reskill, Learn, Adapt. Hogwash

November 19, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

I graduated from college in 1966 or 1967. I went to graduate school. Somehow I got a job at Northern Illinois University administering a program. From there I bounced to Halliburton Nuclear and then to Booz, Allen & Hamilton. I did not do a résumé, ask my dad’s contacts to open doors, or prowl through the help wanted advertisements in major newspapers. I just blundered along.

What’s changed?

I have two answers to this question.

The first response I would offer is that the cult of the MBA or the quest for efficiency has (to use a Halliburton-type word) nuked many jobs. Small changes to work processes, using clumsy software to automate work like sorting insurance forms, and shifting from human labor to some type of machine involvement emerged after Frederick Winslow Taylor became a big thing. His Taylorism zipped through consulting and business education after 1911.

Edwin Booz got wind of Taylorism and shared his passion for efficiency with the people he hired when he set up Booz. By the time Jim Allen and Carl Hamilton joined the firm, other outfits were into pitching and implementing efficiency. Arthur D. Little, founded in 1886, jumped on the bandwagon. Today few realize that the standard operating procedure of “efficiency” is the reason products degrade over time and why people perceive their jobs (if a person has one) as degrading. The logic of efficiency resonates with people who are incentivized to eliminate costs and unnecessary processes like customer service, and to ignore ticking time bombs like pensions, security, and quality control. To see this push for efficiency firsthand, go to McDonald’s and observe.

Thanks, MidJourney, good enough. Plus, I love it when your sign on doesn’t recognize me.

The second response is smart software or the “perception” that software can replace humans. Smart software is a “good enough” product and service. However, it hooks directly into the notion of efficiency. Here’s the logic: If AI can do 90 percent of a job, it is good enough. Therefore, the person who does this job can go away. The smart software does not require much in the way of a human manager. The smart software does not require a pension, a retirement plan, health benefits, vacation, and crazy stuff like unions. The result is the elimination of jobs.

This means that the job market I experienced when I was 21 does not exist. I probably would never get a job today. I also have a sneaking suspicion my scholarships would not have covered lunch let alone the cost of tuition and books. I am not sure I would live in a van, but I am sufficiently aware of what job seekers face to understand why some people live in 400 cubic feet of space and park someplace they won’t get rousted.

The write up “AI-Driven Job Cuts Push 2025 Layoffs Past 1 Million, Report Finds” explains that many jobs have been eliminated. Yes, efficiency. The write up pins the cause on AI. You already know I think AI is one factor, and it is not the primary driving force.

The write up says:

A new report from the outplacement firm Challenger, Gray & Christmas, reveals a grim picture of the American labor market. In October alone, employers announced 153,074 job cuts, a figure that dwarfs last year’s numbers (55,597) and marks the highest October for layoffs since 2003. This brings the total number of jobs eliminated in 2025 to a staggering 1,099,500, surpassing the one-million mark faster than in any year since the pandemic. Challenger linked the tech and logistics reductions to AI integration and automation, echoing similar patterns seen in previous waves of disruptive technology. “Like in 2003, a disruptive technology is changing the landscape,” said Challenger. AI was the second-most-cited reason for layoffs in October, behind only cost-cutting (50,437). Companies attributed 31,039 job cuts last month to AI-related restructuring and 48,414 so far this year, the Challenger report showed.

Okay, an outplacement firm states the obvious and provides some numbers. These are tough to verify, but I get the picture.

I want to return to my point about efficiency. A stable social structure requires that those in that structure have things to do. In the distant past, hunter-gatherers had to hunt and gather. A semi-far out historian believes that this type of lifestyle was good for humans. Once we began to farm and raise sheep, humans were doomed. Why? The need for efficiency propelled us to the type of social set up we have in the US and a number of other countries.

Therefore, one does not need an eWeek article to make evident what is happening now and will continue to happen. The aspect of this AI-ization of “work” troubling me is that there will be quite a few angry people. Lots of angry people suggests that some unpleasant interpersonal interactions are going to occur. How will social constructs respond?

Use your imagination. The ball is now rolling down a hill. Call it AI’s Big Rock Candy Mountain.

Stephen E Arnold, November 19, 2025

LLMs Fail at Introspection

November 19, 2025

Here is one way large language models are similar to the humans that make them. Ars Technica reports, “LLMs Show a ‘Highly Unreliable’ Capacity to Describe Their Own Internal Processes.” It is a longish technical write up basically stating, “Hey, we have no idea what we are doing.” Since AI coders are not particularly self-aware, why would their code be? Senior gaming editor Kyle Orland describes a recent study from Anthropic:

“If you ask an LLM to explain its own reasoning process, it may well simply confabulate a plausible-sounding explanation for its actions based on text found in its training data. To get around this problem, Anthropic is expanding on its previous research into AI interpretability with a new study that aims to measure LLMs’ actual so-called ‘introspective awareness’ of their own inference processes. The full paper on ‘Emergent Introspective Awareness in Large Language Models’ uses some interesting methods to separate out the metaphorical ‘thought process’ represented by an LLM’s artificial neurons from simple text output that purports to represent that process. In the end, though, the research finds that current AI models are ‘highly unreliable’ at describing their own inner workings and that ‘failures of introspection remain the norm.’”

Not even developers understand precisely how LLMs do what they do. So much for asking the models themselves to explain it to us. We are told more research is needed to determine how models assess their own processes in the rare instances that they do. Are they even remotely accurate? How would researchers know? Opacity on top of opacity. The world is in good virtual hands.

See the article for the paper’s methodology and technical details.

Cynthia Murrell, November 19, 2025

Microsoft Knows How to Avoid an AI Bubble: Listen Up, Grunts, Discipline Now!

November 18, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

I relish statements from the leadership of BAIT (big AI tech) outfits. A case in point is Microsoft, as reported in the Fortune story “AI Won’t Become a Bubble As Long As Everyone Stays Thoughtful and Disciplined, Microsoft’s Brad Smith Says.” First, let’s consider the meaning of the word “everyone.” I navigated to Yandex.com and used its Alice smart software to get the definition of “everyone”:

The word “everyone” is often used in social and organizational contexts, and to denote universal truths or principles.

That’s a useful definition. Universal truths and principles. If anyone should know, it is Yandex.

Thanks, Venice.ai. Good enough, but the Russian flag is white, blue, and red. Your inclusion of Ukraine yellow was one reason why AI is good enough, not a slam dunk.

But isn’t there a logical issue with the subjective flag “if” and then a universal assertion about everyone? I find the statement illogical. It mostly sounds like English, but it presents a wild and crazy idea at a time when agreement about anything is quite difficult to achieve. Since I am a dinobaby, my reaction to the Fortune headline is obviously out of touch with the “real” world as it exists at Fortune and possibly Microsoft.

Let’s labor forward with the write up, shall we?

I noted this statement in the cited article attributed to Microsoft’s president Brad Smith:

“I obviously can’t speak about every other agreement in the AI sector. We’re focused on being disciplined but being ambitious. And I think it’s the right combination,” he said. “Everybody’s going to have to be thoughtful and disciplined. Everybody’s going to have to be ambitious but grounded. I think that a lot of these companies are [doing that].”

It was not Fortune’s wonderful headline writers who stumbled into a logical swamp. The culprit or crafter of the statement was “1000 Russian programmers did it” Smith. It is never Microsoft’s fault in my view.

But isn’t this the era of AI’s “go really fast, don’t worry about the future, and break things”?

According to the article, Mr. Smith said:

“We see ongoing growth in demand. That’s what we’ve seen over the past year. That’s what we expect today, and frankly our biggest challenge right now is to continue to add capacity to keep pace with it.”

I wonder if Microsoft’s hiring of social media influencers is related to generating demand and awareness, not getting people to embrace Copilot. Despite jumping off the starting line first, Microsoft is now lagging behind its “partner” OpenAI and two or three other BAIT entities.

The Fortune story includes supporting information from a person who seems totally, 100 percent objective. Here’s the quote:

At Web Summit, he met Anton Osika, the CEO of Lovable, a vibe-coding startup that lets anyone create apps and software simply by talking to an AI model. “What they’re doing to change the prototyping of software is breathtaking. As much as anything, what these kinds of AI initiatives are doing is opening up technology opportunities for many more people to do more things than they can do before…. This will be one of the defining factors of the quarter century ahead…”

I like the idea of Microsoft becoming a “defining factor” for the next 25 years. I would raise the question, “What about the Google? Is it chopped liver?”

Several observations:

- Mr. Smith’s informed view does not line up with hiring social media influencers to handle the “growth and demand.” My hunch is that Microsoft fears that it is losing the consumer perception of Microsoft as the really Big Dog. Right now, that seems to be super-sized OpenAI and the mastiff-like Gemini.

- The craziness of “everybody” illustrates a somewhat peculiar view of consensus today. Does everybody include those fun-loving folks fighting in the Russian special operation or the dust ups in Sudan to name two places where “everybody” could be labeled just plain crazy?

- Mr. Smith appears to conflate putting Copilot in Notepad and rolling out Clippy in Yeezies with substantive applications not prone to hallucinations, mistakes, and outputs that could get some users of Excel into some quite interesting meetings with investors and clients.

Net net: Yep, everybody. Not going to happen. But the idea is a-thoughtful, which is interesting to me.

Stephen E Arnold, November 18, 2025

AI Content: Most People Will Just Accept It and Some May Love It or Hum Along

November 18, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

The trust outfit Thomson Reuters summarized a survey as real news. The write up sports the title “Are You Listening to Bots? Survey Shows AI Music Is Virtually Undetectable.” Truth be told, I wanted the magic power to change the headline to “Are You Reading News? Survey Shows AI Content Is Virtually Undetectable.” I have no magic powers, but I think the headline I just made up is going to appear in the near future.

Elvis in heaven looks down on a college dance party and realizes that he has been replaced by a robot. Thanks, Venice.ai. Wow, your outputs are deteriorating in my opinion.

What does the trust outfit report about a survey? I learned:

A staggering 97% of listeners cannot distinguish between artificial intelligence-generated and human-composed songs, a Deezer–Ipsos survey showed on Wednesday, underscoring growing concerns that AI could upend how music is created, consumed and monetized. The findings of the survey, for which Ipsos polled 9,000 participants across eight countries, including the U.S., Britain and France, highlight rising ethical concerns in the music industry as AI tools capable of generating songs raise copyright concerns and threaten the livelihoods of artists.

I won’t trot out my questions about sample selection, demographics, and methodology. Let’s just roll with what the “trust” outfit presents as “real” news.

I noted this series of factoids:

- “73% of respondents supported disclosure when AI-generated tracks are recommended”

- “45% sought filtering options”

- “40% said they would skip AI-generated songs entirely.”

- Around “71% expressed surprise at their inability to distinguish between human-made and synthetic tracks.”

Isn’t that last dot point the major finding? More than two thirds cannot differentiate synthesized, digitized music from humanoid performers.

The study means that those who have access to smart software and whatever music generation prompt expertise is required can bang out chart toppers. Whip up some synthetic video and go on tour. Years ago I watched a recreation of Elvis Presley. Judging from the audience reaction, no one had any problem doing the willing suspension of disbelief. No opium required at that event. It was the illusion of the King, not the fried banana version of him that energized the crowd.

My hunch is that AI-generated performances will become a very big thing. I am assuming that the power required to make the models work is available. One of my team members told me that “Walk My Walk” by Breaking Rust hit the Billboard charts.

The future is clear. First, customer support staff get to find their future elsewhere. Now the kind hearted music industry leadership will press the delete button on annoying humanoid performers.

My big takeaway from the “real” news story is that most people won’t care or know. Put down that violin and get a digital audio workstation. Did you know Mozart got in trouble when he was young for writing math and music on the walls in his home? Now he can stay in his room and play with his Mac Mini computer.

Stephen E Arnold, November 18, 2025

AI and Self-Perception of Intelligence

November 18, 2025

Here is one way the AI industry is different from the rest of society: In that field, it is those who know the most who are overconfident. Inc. reports, “New Research Warns That AI Is Causing a ‘Reverse Dunning-Kruger Effect’.” A recent study asked 500 subjects to solve some tough logic problems. Half of them used an AI like ChatGPT to complete the tasks. Then they were asked to assess their own performance. That is where the AI experts fell short. Writer Jessica Stillman explains:

“Classic Dunning-Kruger predicts that those with the least skill and familiarity with AI would most overestimate their performance on the AI-assisted task. But that’s not what the researchers reported when they recently published their results in the journal Computers in Human Behavior. In fact, it was the participants who were the most knowledgeable and experienced with AI who overestimated their skills the most. ‘We would expect people who are AI literate to not only be a bit better at interacting with AI systems, but also at judging their performance with those systems—but this was not the case,’ commented study co-author Robin Welsch. ‘We found that when it comes to AI, the DKE vanishes. In fact, what’s really surprising is that higher AI literacy brings more overconfidence.’”

Is this why AI leaders are a bit over the top? This would explain a lot. To make matters worse, another report found the vast majority of users do not double-check AI results. We learn:

“One recent analysis by trendspotting company Exploding Topics found an incredible 92 percent of people don’t bother to check AI answers. This despite all the popular models still being prone to hallucinations, wild factual inaccuracies, and sycophantic behavior that fails to push back against user misunderstandings or errors.”

So neither AI nor the people who use it can be trusted to produce accurate results. Good to know, as the tech increasingly underpins everything we do.

Cynthia Murrell, November 18, 2025