Google Will Not Change; the EU Will Not Change; Writing Checks to the EU Will Not Change

January 28, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I suppose one could summarize the information in “Commission Opens Proceedings to Assist Google in Complying with Interoperability and Online Search Data Sharing Obligations under the Digital Markets Act” with a new mobile number for these types of complaints: +1 800 YOU WISH.

Two EU professionals look at a facility. Thanks, Venice.ai. Good enough.

My hunch is that Google will email the legal document to its legal eagles. Leadership at Google does not do legal paperwork. The company is busy inventing the future with its quantumly supreme technology. Let’s look at what the EU’s media office says about the online advertising outfit with smart software and even smarter people:

the European Commission has started two sets of specification proceedings to assist Google in complying with its obligations under the Digital Markets Act (‘DMA’). The specification proceedings formalize the Commission’s regulatory dialogue with Google on certain areas of its compliance with two DMA obligations.

Two violations for Googzilla. First, the company allegedly is not making life easy enough for Android developers. Second, the Google is not providing access to search data.

I am a dinobaby. I live in rural Kentucky. From my point of view, this is similar to the government of Lilliput demanding that France ship the Mona Lisa for an exhibition in the Lilliputian National Art Gallery. Sorry, won’t happen. France will just plug along being France.

The EU will work on this matter for several months and then let the Google know what’s up. Is this a surprise to the leadership or the legal eagles at Google? Perhaps. The Google has only known about this matter since September 2023.

What will happen now? Google will allow its legal eagles to flap and bill. Then the EU will respond. Then there will be outputs from Google. Then the EU will find Google a less than willing party to this matter. Google will appeal. Eventually Google will write a check. But what will happen in the meantime? My view is that Google will continue to be Googley. The approach has worked well for the last 25 years.

The EU might want to rethink its system and method. It seems to be flummoxed by Googzilla’s. Just a thought.

Stephen E Arnold, January 27, 2026

AI Stress Cracks: Immaturity Bubbles Visible from Afar

January 28, 2026

Those images of Kilauea’s lava spouting, dribbling, and sputtering are reminders of the molten core within Mother Earth. Has Mom influenced the inner heat of great big technology leaders? Probably not, but after looking at videos of the most recent lava events in Hawaii, I read “Billionaires Elon Musk and Sam Altman Explode in Ugly Online Fight over Whose Tech Killed More People.”

The write up says:

OpenAI CEO Sam Altman fired back at Elon Musk on Tuesday [January 20, 2026] after Musk posted on X warning people not to use ChatGPT, linking it to nine suicide deaths. Altman called out Musk’s claim as misleading and flipped the criticism back, pointing to Tesla’s Autopilot, which has been linked to more than 50 deaths.

A Vietnam era body count. Robert McNamara, as SecDef, liked metrics. Body counts were just one way to measure efficiency and effectiveness. Like an employee’s incentive plans, the body counts reflected remarkable achievements on the battlefield. Were there bodies to count? As I recall, it depended on a number of factors. I won’t go there. The body count numbers were important. The bodies, often not so much.

Now we have two titans of big tech engaging in bodycounting.

Consider this passage from the cited write up:

The feud comes as Musk sues OpenAI, claiming the company abandoned its nonprofit mission. Musk is reportedly seeking up to $134 billion in damages. The timing of the spat comes amid heightened scrutiny of AI safety globally.

The issues are [a] litigation, [b] big money, and [c] AI safety. One could probably pick any or all three as motivations for this revival of Vietnam era bodycounting.

The write up does not pursue the reason I considered. These two titans of big tech and the “next big thing” used to be pals and partners. The OpenAI construct was a product of the interaction of these pals and partners. Then the two big tech titans were not pals and partners.

Here we are: [a] litigation, [b] big money, and [c] AI (safety is an add on to AI). I think we have a few other drivers for this “ugly online fight.” I don’t think the bodycount is much more than a PR trope. I have yet to be convinced that big tech titans think about people who are not germane to their mission: Amassing power and money.

My view is that we are witnessing Mother Nature’s influence. These estimable titans are volcanos in big tech. They are, in my opinion as a dinobaby, spouting, dribbling, and sputtering. Kilauea is what might be called a mature volcano. Can one say that these titans are mature? I am not so sure.

Could this bodycount thing be a version of a grade school playground spat with the stakes being a little bit higher? Your mileage may vary.

Stephen E Arnold, January 26, 2026

AI and Jobs in the EU

January 28, 2026

We keep hearing that AI is coming for our jobs, but the technology is still infantile. However, as the technology advances, the fear that humans will become obsolete increases. The Next Web has the lowdown about AI and how it is affecting jobs across the pond: “What AI Is Actually Doing To Jobs In Europe.” London’s mayor made a fear mongering speech warning that AI will cause “mass unemployment.” He paired that warning with an offer of free AI training and a task force to help workers adapt.

Europe isn’t facing mass unemployment. AI has been implemented in many industries and it’s been discovered that:

“In some sectors where AI tools are already in use, work has been reshaped rather than eliminated. A European study found that 30% of EU workers now use AI at work, especially for text tasks like writing and translation, and that digital tools have become nearly universal (90%) across workplaces. Many workers report that AI helps them perform tasks more efficiently or take on different responsibilities.”

While that sounds reassuring, this statistic makes me want to sound the alarm:

“At the same time, employers are actively reassessing job roles because of AI, with about 71% of European firms reconsidering job responsibilities due to AI implementation and over a quarter reducing hiring or cutting roles as a direct result of AI deployment.”

Businesses are slowing their hiring because they don’t know how they will be affected by AI and potential economic slowdowns. Industries such as finance, legal services, and customer support are susceptible to AI displacement, while others are adapting. It’s also expected that while some jobs become obsolete, new jobs will be created.

Hallucinations and incorrect outputs aside, smart software is now altering society in interesting ways. If smart software can disrupt the methods of the Old Country, what will happen in Nebraska?

Whitney Grace, January 28, 2026

Yext: Selling Search with Subtlety

January 27, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Every company with AI is in the search and retrieval business. I want to be direct. I think AI is useful, but it is a utility. Integrated with thought into applications, smart software can smooth some of the potholes in a work process. But what happens when a company with search-and-retrieval technology embraces AI? Do customers beat a path to the firm’s office door? Do podcasters discuss the benefits of the approach? Do I see a revolution?

I thought about the marketing challenge facing Yext, a company whose shares were trading at about $20 in 2021 and today (January 26, 2026) are listing at about $8 per share. On the surface, it would seem that AI has not boosted the market’s perception of the value of the company. Two or three years ago, I spoke with a VP at the company. I added a text file to my “Search” folder with the company’s URL and an observation about the firm’s use of the terms “search” and “SEO.” I commented, “Check out the company when something big hits.”

I find myself looking at a write up from a German online publication called Ad Hoc News. The article I read has a juicy title and a beefy subtitle; to wit:

The Truth about Yext Inc: Is This AI Search Stock a Hidden Gem or Dead App Walking? Everyone’s Suddenly Talking about Yext Inc and Its AI Search Platform. But Is Yext Stock a Must Cop or a Value Trap You Must Dodge?

I turned to my Overflight system and noticed announcements from and about the company like these:

- The CEO Michael Walrath wanted to take the company private in the autumn of 2025

- The company acquired two outfits: Hearsay Systems and Places Scout. (I am unfamiliar with these firms.)

- The firm launched Yext Social. I think this is a marketing and social media management service. (I don’t know anything about social media management.)

- Yext rolled out a white paper about the market.

My thought was that these initiatives represented diversification or amplification of the firm’s search solution. A couple of them could be interesting to learn more about. The winner in this list of Overflight items was the desire of Mr. Walrath to take the firm private. Why? Who will fund the play? What will the company do as a private enterprise that it cannot do as a company listed on the US NASDAQ market?

Which direction is this company executive taking the firm? AI, SEO, enterprise search, product shopping, customer service, or some combination of these options? Thanks, MidJourney. Good enough.

When I read through the write up “The Truth about Yext”, I was surprised. The German publication presented me with an English language write up. Plus, the word choice, tone, and structure of the article were quite different from the usual articles about search with smart software. Google writes as if it is a Greek deity with an inferiority complex. Microsoft uses a mad dad tone to disguise how much people dislike Copilot. Elasticsearch writes in the manner of a GitHub page for those in the know.

But Yext? Here are three examples of the rhetoric in the article:

- Not exactly viral-core… but the AI angle is pulling it back into the chat.

- The AI Angle: Riding the Wave vs Getting Washed

- not a sleepy bond proxy

The German publication appears to have one of these approaches in mind when writing about Yext: [a] use American AI systems to rewrite the German text in a hip, jazzy way; [b] hire a writer who studied in Berkeley, Calif. and absorbed the pseudo-hip style of those chilling at the Roast & Toast Café; or [c] rely on a gig worker hired to write about Yext who is trying very hard to hit a home run.

Does the write up provide substantive information about Yext? Answer: From my point of view, “No.” Years ago I did profiles of enterprise search vendors for the Enterprise Search Report. My approach can be seen in the profiles on my Xenky Web site. Although these documents are rough drafts and not the final versions for the Enterprise Search Report, you can get a sense of what I expect when reading about search and retrieval.

Does the write up present a clear picture of the firm’s secret sauce? Answer: Again I would answer, “No.” After reading the article and tapping the information at my fingertips about Yext, I would say that the write up is a play to make Yext into a meme stock. Place a bet and either win big or lose. That’s okay, but when writing about search, solid information is needed.

Do I understand how smart software (AI) integrates into the firm’s search and retrieval systems? My answer, “No.” I am not sure if the “search” is post-processed using smart software or if the queries are converted in some way to help deliver an on point answer. I don’t know if the smart software has been integrated into the standard workflow of acquiring, parsing, indexing, and outputting results that hopefully align with the user’s query. Changing underlying search plumbing is difficult. Gemini recycles and wraps Google’s search and ad injection methods with those quantumly supreme, best-est of the universe assertions. I have no idea what Yext purports to do.

Let me offer several observations whether you like it or not:

- I think the source article had some opportunity to get up close and personal with an AI system, maybe ChatGPT or Qwen?

- I think that Yext is doing some content marketing. Venture Beat is in this game, and I wonder why Yext did not target that type of publication.

- Based on the stock performance in the heart of the boom in AI, I have some difficulty identifying Yext’s unique selling proposition. The actions from taking the company private to buying an SEO services outfit don’t make sense to me. If these moves worked, I would expect to see Yext in numerous sources to which I have access.

Net net: Yext, what’s next?

Stephen E Arnold, January 27, 2026

Is Google the Macintosh in the Big Apple PAI?

January 27, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I want to be fair. Everyone, including companies that are recognized in the US as having the same “rights” as a citizen, is entitled to an opinion. Google is expressing an opinion, if the information in “Google Appeals Ruling on Illegal Search Monopoly” is correct. The write up says:

Google has appealed a US ruling that in 2024 found the company had an illegal monopoly in internet search and search advertising, reports CNBC. After a special hearing on penalties, the court decided in 2025 on milder measures than those originally proposed by the US Department of Justice.

Google, if I understand this news report, believes it is not a monopoly. Okay, that’s an opinion. Let’s assume that Google is correct. Its Android operating system, its Chrome browser, and its online advertising businesses along with other Google properties do not constitute a monopoly. Just keep that thought in mind: Google is not a monopoly.

Thanks, Venice. Good enough.

Consider that idea in the context of this write up in Macworld, an online information service: “If Google Helps Apple Beat Google, Does Everyone Lose?” The article states:

Basing Siri on Google Gemini, then, is a concession of defeat, and the question is what that defeat will cost. Of course, it will result in more features and very likely a far more capable Siri. Google is in a better position than Apple to deliver on the reckless promises and vaporware demos we heard and saw at WWDC 2024. The question is what compromises Apple will be asked to make, and which compromises it will be prepared to make, in return.

With all due respect to the estimable Macworld, I want to suggest the key question is: “What does the deal with Apple mean to Google’s argument that it is not a monopoly?”

The two companies control the lion’s share of the mobile device operating systems. The data from these mobile devices pump a significant amount of useful metadata and content to each of these companies. One can tell me that there will be a “Great Wall of Secrecy” between the two firms. I can be reassured that every system administrator involved in this tie up, deal, relationship, or “just pals” cooperating set up will not share data.

Google will remain the same privacy centric, user first operation it has been since it got into the online advertising business decades ago. The “don’t be evil” slogan is no longer part of the company credo, but the spirit of just being darned ethical remains the same as it was when Paul Buchheit allegedly came up with this memorable phrase. I assume it is now part of the Google DNA.

Apple will continue to embrace the security, privacy, and vertical business approach that it has for decades. Despite the niggling complaints about the company’s using negotiation to do business with some interesting entities in the Middle Kingdom, Apple is working hard to allow its users the flexibility to do almost anything each wants within the Apple ecosystem of the super-open App Store.

Who wins in this deal?

I would suggest that Google is the winner for these reasons:

- Google now provides its services to the segment of the upscale mobile market that it has not been able to saturate

- Google provides Apple with its AI services from its constellation of data centers although that may change after Apple learns more about smart software, Google’s logs, and Google’s advertising system

- Google aced out the totally weak-wristed competitors like Grok, OpenAI, Apple’s own internal AI team or teams, and open source solutions from a country where Apple has a few easy-to-manage, easy-to-replace manufacturing sites.

What’s Apple get? My view is:

- A way to meet its years-old promises about smart software

- Some time to figure out how to position this waving of the white flag and the emails to Google suggesting, “Let’s meet up for a chat.”

- Catch up with companies that are doing useful things with smart software despite the hallucination problems.

The cited write up says:

In the end, the most likely answer is some complex mixture of incentives that may never be completely understood outside the companies (or outside an antitrust court hearing).

That statement is indeed accurate. Score a big win for the Googlers. Google is the Apple pulp, the skin, and the meat of the deal.

Stephen E Arnold, January 27, 2026

Programming: Let AI Do It. No Problem?

January 27, 2026

How about this idea: No one is a good programmer anymore.

Why?

Because programmers are relying on LLMs instead of Stack Overflow. Ibrahim Diallo explains this conundrum in his blog post: “We Were Never Good Programmers.” Diallo says that users are blaming the decline in Stack Overflow usage on heavy moderation, but he believes the answer is more nuanced. Stack Overflow was never meant to be a Q&A forum. It was meant to be a place where experts could find answers and ask questions if they couldn’t find what they needed.

Users were quick to point out to newbies that their questions had already been answered. It was often a tough love situation, especially when users didn’t think their solution was incorrect.

Then along came ChatGPT:

“Now most people, according to that graph at least, aren’t asking their questions on Stack Overflow. They’re going straight to ChatGPT or their favorite LLM. It may look like failure, but I think it means Stack Overflow did exactly what it set out to do. Maybe not the best business strategy for themselves, but the most logical outcome for users. Stack Overflow won by losing.”

Stack Overflow users thought their questions were unique snowflakes, but they weren’t. ChatGPT can answer a question because:

“When you ask ChatGPT that same question and it gives you an answer, it’s not because the LLM is running your code and debugging it in real-time. It’s because large language models are incredibly good at surfacing those existing answers. They were trained on Stack Overflow, after all.”

Stack Overflow forced programmers to rethink how they asked questions and observe their problems from another perspective. This was beneficial because it forced programmers to understand their problems to articulate them clearly.

LLMs force programmers to ask themselves the question: Are you a good enough programmer to resolve your problem when ChatGPT can’t? Answer: MBAs say, “Of course.” Accountants say, “Which is better for the bottom line: A human who wants health care or a software tool?” Users say, “This stuff doesn’t work.” Yep, let AI do it.

Whitney Grace, January 27, 2026

Tell People What They Want to Hear and Make Up Data. Winning Tactic

January 26, 2026

As one of the people who created Business Dateline in 1983, I am not surprised by this article. Business Dateline was unique in that we included corrections to the original full text articles in the database. Our interviews with special librarians (now an almost extinct species of information professional), dozens of our best customers, and individuals who were members of trade associations like the now defunct Information Industry Association encouraged us.

Forty years ago, we spent a substantial sum to modify our database workflow to monitor changes to full text documents, create updated records, and insert those records into the online services which provided access to our paying customers.

No one noticed. Users did not care.

Our research was not flawed. The sample we used did care, but these people were not our bread-and-butter users. If the information in the cited article with the very wordy title is on the money, nobody cares today. If it is online, the information is presumed to be accurate until it is not. Even then, no one cares.

The author of this cited article does care. The author invested considerable time in gathering data for his article. The author wants professionals in publishing and institutions to care.

We cared. We created Business Dateline because we knew errors, lies, and distorted information were endemic online. Cheating is rewarded by the incentives in place. Those incentives are still in place, and it is more frustrating than it was 40 years ago to get a fix to a bonkers online content object.

One of the comments to the cited article struck a chord with me. The statement is from a person who identified himself / herself as Anonymous. I quote:

… Incentives [for accuracy] don’t work that way in business schools, where career success depends upon creating a clear “brand.” People do not care about science or good research, they care about being known for something specific…. Plus there are (bad) outside incentives that exist in business schools. As the word “brand” suggests, there are also very lucrative outside options to be gained from telling people something that they want to hear…

To sum up, accuracy doesn’t matter. If making up information advances a career or lands a paying project, go for the fake.

What are the downsides? For most people, what look like mistakes can be explained away or just get mowed down by the person driving the John Deere.

What happens if the information in a medical database or a nuclear power piping article is incorrect? Not much. A doctor can say, “We did our best.” When the pipe bursts, the engineers check the specs and say, “A structural anomaly.”

With fakery endemic in modern US academia and business, why worry?

Stephen E Arnold, January 26, 2026

The Final Word on Tricky Online Shopping Tactics

January 26, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

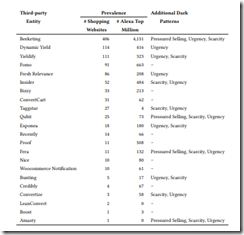

I read a round up of what I call “tricky online shopping tactics.” The data flow from an academic project called WebTAP. The researchers are smart; each hails from either Princeton University or the University of Chicago. Selected data are presented in “Dark Patterns at Scale: Findings from a Crawl of 11K Shopping Websites.” The authors (hopefully just one of them) will do a talk at a conference about tricky retail methods using the jazzier jargon “dark patterns.” The term (whether my dinobaby version or the hip new buzzword) means the same thing: You are bamboozled into buying stuff you may not want, need, or price check before clicking.

I don’t want to be critical of these earnest researchers. The study includes a list of the sites that the researchers determined do some fancy dancing.

If you want to read the list, you will find it on page 24 of the study team’s 32-page report. I want to point out that sites I know use tricky online shopping tactics are not on the list. Here’s one example of a site I expected to find on the radar of the estimable study team from Princeton and the University of Chicago: Amazon.

But what do the researchers say about dicey online shopping sites I never encounter? The paper states:

We found at least one instance of dark pattern on approximately 11.1% of the examined websites. Notably, 183 of the websites displayed deceptive messages. Furthermore, we observed that dark patterns are more likely to appear on popular websites. Finally, we discovered that dark patterns are often enabled by third-party entities, of which we identify 22; two of these advertise practices that enable deceptive patterns. Based on these findings, we suggest that future work focuses on empirically evaluating the effects of dark patterns on user behavior, developing countermeasures against dark patterns so that users have a fair and transparent experience, and extending our work to discover dark patterns in other domains.

Net net: No Amazon, no Microsoft, no big name online retailers like Walmart, and no product pitch blogs like Venture Beat-type publications. No suggestions for regulatory action to protect consumers. No data about the increase or decrease in the number of sites using dark patterns. Yep, there is indeed work to be done. Why not focus on deception as a business strategy and skip the jazzy jargon?

Stephen E Arnold, January 26, 2026

Are NoKos Scam Phisher Champs?

January 26, 2026

When you think about scams, do you immediately think about Nigeria or Russian females who really want to meet an amerikos? That African nation is one of the scam capitals of the world. Russia is pretty capable in this department. But does North Korea hold the title of Scam King? Probably not. But some experts want people to believe that North Korean bad actors are the top phishers of men. Tech Radar explains the authoritarian country’s latest scam: “North Korean Hackers Using Malicious QR Codes In Spear Phishing, FBI Warns.”

North Korean bad actors are preying on academia, think tanks, and US government institutions with sophisticated QR code phishing attacks called “quishing.” Their goal is to obtain credentials for VPNs, Okta, or Microsoft 365. The FBI issued a warning about quishing attacks. The attacks come from Kimsuky, a North Korean group that sends out convincing emails with complicated QR codes that bypass protections.

The FBI says that QR codes are easily scanned with mobile devices. Here’s how the scam works:

“When the victim scans the code, they are sent through multiple redirectors that collect different information and identity attributes, such as user-agent, operating system, IP address, locale, and screen size. This data is then used to land the victim on a custom-built credential-harvesting page, impersonating Microsoft 365, Okta, or VPN portals.

If the victim does not spot the trick and tries to log in, the credentials would end up with the attackers. What’s more – these attacks often end with session token theft and replay, allowing the threat actors to bypass multi-factor authentication (MFA) and hijack cloud accounts without triggering the usual “MFA failed” alert.”

Mobile devices aren’t managed as readily as desktop and laptop computers. They’re extremely vulnerable to this QR code scam! The smart thing to do is: Don’t scan strange QR codes. Some outfits hire coders, use their scam software, and just provide more phish to be trawled. Hey, restaurant owner, am I talking about you?

Whitney Grace, January 26, 2026

Consulting at Deloitte, AI, Ls, and Sub Families like 3

January 23, 2026

It seems that artificial intelligence is forcing some vocabulary change in the blue chip world of big buck consulting services. “Deloitte to Scrap Traditional Job Titles As AI Ushers in a ‘Modernization’ of the Big Four” reports the inside skivvy:

… the firm is shifting away from a workforce structure that was originally designed for “traditional consulting profiles,” a model the firm now deems outdated.

When a client, maybe at a Japanese outfit, asks, “What does this mean?” the consultant can explain the nuances of a job family and a sub family; for instance, a software engineer 3 or a project management senior consultant, functional transformation. I like the idea of “functional transformation” instead of “consultant.”

However, the big news in the write up in my opinion is:

A new leadership class simply titled “Leaders” will join the senior ranks of partners, principals, and managing directors. And internally, employees will also be assigned alphanumeric levels, such as L45 for what is currently a senior consultant and L55 for managers. However, the presentation stressed that the day-to-day work, leadership, and the firm’s “compensation philosophy” will all remain the same.

The “news” is in the phrase “the firm’s compensation philosophy will all remain the same.”

All. AI means jobs will be off-loaded to good enough AI agents, services, and systems. If these systems do not lead to the loss of engagements, then AI adepts will get paid more. For the consultants who burn hours on tasks that software could complete in minutes, keeping the compensation philosophy the same means, in my opinion:

- Unproductive workers will be moved down and out

- AI adepts will be moved up and given an “L” designation

- New hires will stay at a baseline until they prove their value as a salesperson, an AI adept, or a magician who can convert AI output into a scope change or a repeatable high-value work product, or who can do work that prevents revenue loss.

Yep, all.

The write up notes:

Last September [2025], Deloitte committed $3 billion in generative AI development through fiscal year 2030. The company has also launched Zora AI, an agentic AI model powered by Nvidia “to automate complex business processes, eliminate data siloes and boost productivity for the human workforce.”

My conclusion: Fewer staff, higher pay for AI adepts, client fees increase. Profits, if any, go to the big number “L’s”. Is this an innovation? Nope, adaptation. Get that new job lingo in a LinkedIn profile.

Stephen E Arnold, January 23, 2026