Microsoft, by Golly, Has an Ethical Compass: It Points to Security? No. Clippy? No. Subscriptions? Yes!

October 27, 2025

This essay is the work of a dumb dinobaby. No smart software required.

The elephants are in training for a big fight. Yo, grass, watch out.

“Microsoft AI Chief Says Company Won’t Build Chatbots for Erotica” reports:

Microsoft AI CEO Mustafa Suleyman said the software giant won’t build artificial intelligence services that provide “simulated erotica,” distancing itself from longtime partner OpenAI. “That’s just not a service we’re going to provide,” Suleyman said on Thursday [October 23, 2025] at the Paley International Council Summit in Menlo Park, California. “Other companies will build that.”

My immediate question: “Will Microsoft build tools and provide services allowing others to create erotica or conduct illegal activities; for example, delivery of phishing emails from the Microsoft Cloud to Outlook users?” A quick no seems to be implicit in this report about what Microsoft itself will do. A more pragmatic yes means that Microsoft will have no easy, quick, and cheap way to restrain what a percentage of its users will either do directly or via some type of obfuscation.

Microsoft seems to step away from converting the digital Bob into an adult star or Clippy engaging with a user in a “suggestive” interaction.

The write up adds:

On Thursday, Suleyman said the creation of seemingly conscious AI is already happening, primarily with erotica-focused services. He referenced Altman’s comments as well as Elon Musk’s Grok, which in July launched its own companion features, including a female anime character. “You can already see it with some of these avatars and people leaning into the kind of sexbot erotica direction,” Suleyman said. “This is very dangerous, and I think we should be making conscious decisions to avoid those kinds of things.”

I heard that 25 percent of Internet traffic is related to erotica. That seems low based on my estimates which are now a decade old. Sex not only sells; it seems to be one of the killer applications for digital services whether the user is obfuscated, registered, or using mom’s computer.

My hunch is that the AI enhanced services will trip over their own [a] internal resources, [b] the costs of preventing abuse, sexual or criminal, and [c] the leadership waffling.

There is big money in salacious content. Talking about what will and won’t happen in a rapidly evolving area of technology is little more than marketing spin. The proof will be what happens as AI becomes more unavoidable in Microsoft software and services. Those clever teenagers with Windows running on a cheap computer can do some very interesting things. Many of these will be actions that older wizards do not anticipate or simply push to the margins of their very full 9-9-6 day.

Stephen E Arnold, October 27, 2025

Will AI Topple Microsoft?

October 1, 2025

At least one Big Tech leader is less than enthused about the AI rat race. In fact, reports Futurism, “Microsoft CEO Concerned AI Will Destroy the Entire Company.” As the competition puts pressure on the firm to up its AI game, internal stress is building. Senior editor Victor Tangermann writes:

“Morale among employees at Microsoft is circling the drain, as the company has been roiled by constant rounds of layoffs affecting thousands of workers. Some say they’ve noticed a major culture shift this year, with many suffering from a constant fear of being sacked — or replaced by AI as the company embraces the tech. Meanwhile, CEO Satya Nadella is facing immense pressure to stay relevant during the ongoing AI race, which could help explain the turbulence. While making major reductions in headcount, the company has committed to multibillion-dollar investments in AI, a major shift in priorities that could make it vulnerable. As The Verge reports, the possibility of Microsoft being made obsolete as it races to keep up is something that keeps Nadella up at night.”

The CEO recalled the fate of Digital Equipment Corporation, a computing powerhouse in the 1970s. That once-promising firm lost out to IBM after a series of bad decisions and was eventually sold to Compaq in 1998. Nadella would like to avoid a similar story for Microsoft. One key element is, of course, hiring the right talent, a task that is getting increasingly difficult. And expensive.

A particularly galling provocation comes from Elon Musk. Hard to imagine, we know. The frenetic entrepreneur has announced an AI project designed to “simulate” Microsoft’s Office software. Then there is the firm’s contentious relationship with OpenAI to further complicate matters. Will Microsoft manage to stay atop the Big Tech heap?

Cynthia Murrell, October 1, 2025

Microsoft AI: Options, Chaos, Convergence, or Complexity

September 30, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Have you wondered why it is easy to paste a JPEG image into PowerPoint and have it stay in one place? Have you tried to paste the same JPEG image into Word and have it stay in one place? What’s the difference? I will let you ponder the origin of the two programs and why pasting is baffling in sister products. Hint: Find out who coded the applications.

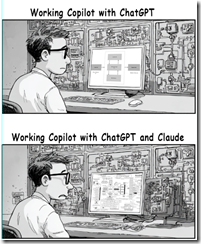

What does this simple pair of questions have to do with putting Anthropic into Microsoft Copilot? I learned about this alleged management move in “Microsoft Adds Claude to Copilot but Cross Cloud AI Could Raise New Governance Challenges.”

My first thought was, “What is governance in the Microsoft Copilot everywhere approach to smart software?” I did the first thing a slouching high school student would do: I asked Claude for clarification.

Here’s my prompt for Claude:

The context for this prompt is Microsoft Corporation’s approach to smart software. The company is involved with OpenAI. The software giant acqui-hired other AI professionals. There is a team working on home-brew artificial intelligence. Now you (Anthropic Claude) will become available to the users of Copilot. In this context, what does the management buzzword “governance” when it comes to wrangling these multiple AI initiatives, deployments, and services?

Here’s a snapshot of Claude’s answer. I have edited it so it fits into this short blog post. Claude is a wordy devil.

…“governance” represents the orchestration framework for managing competing priorities, overlapping capabilities, and divergent AI philosophies under a unified strategic vision.

What does the cited Computerworld article say?

Microsoft is presenting Claude not as a replacement for GPT models, but as a complementary option.

Okay, Copilot user. You figure it out. At least, that’s how I interpret this snippet.

The write up adds:

Unlike OpenAI’s GPT models, which run on Azure, Anthropic’s Claude runs on AWS. Microsoft has warned customers that Anthropic models are hosted outside Microsoft-managed environments and subject to Anthropic’s Terms of Service. So every time Claude is used, it crosses cloud borders that bring governance challenges, and new egress bills in latency.

Managing and optimizing seem to be the Copilot user’s job. I wonder if those Microsoft Certified Professionals are up to speed on the Amazon AWS idiosyncrasies. (I know the answer is, “Absolutely.” Do I believe it? Nope.)

Observations

- If OpenAI falls over, will Anthropic pick up the slack? Nope, at least not until the user figures out how to perform this magic trick.

- Will users of Copilot know when to use which AI system? Eventually, but the journey will be an interesting and possibly expensive one. Tuition in the School of Hard AI Knocks is not cheap.

- Will users craft solutions that cross systems and maintain security and data access controls / settings? I know the answer will be, “Yes, Microsoft has security nailed.” I am a bit skeptical.

Net net: I think the multi AI model approach provides a solid foundation for chaos, complexity, and higher costs. But I am a dinobaby. What do I know?

Stephen E Arnold, September 30, 2025

Microsoft: The Secure Discount King

September 10, 2025

Just a dinobaby sharing observations. No AI involved. My apologies to those who rely on it for their wisdom, knowledge, and insights.

Let’s assume that this story in The Register is dead accurate. Let’s forget that Google slapped a $0.47 price tag on its Gemini smart software. Now let’s look at the interesting information in “Microsoft Rewarded for Security Failures with Another US Government Contract.” Snappy title. But check out the sub-title for the article: “Free Copilot for Any Agency Who Actually Wants It.”

I did not know that a US government agency was a human, as signaled by the “who.” But let’s push forward.

The article states:

The General Services Administration (GSA) announced its new deal with Microsoft on Tuesday, describing it as a “strategic partnership” that could save the federal government as much as $3.1 billion over the next year. The GSA didn’t mention specific discount terms, but it said that services, including Microsoft 365, Azure cloud services, Dynamics 365, Entra ID Governance, and Microsoft Sentinel, will be cheaper than ever for feds. That, and Microsoft’s next-gen Clippy, also known as Copilot, is free to access for any agency with a G5 contract as part of the new deal, too. That free price undercuts Google’s previously cheapest-in-show deal to inject Gemini into government agencies for just $0.47 for a year.

Will anyone formulate the hypothesis that Microsoft and Google are providing deep discounts to get government deals and the ever-popular scope changes, engineering services, and specialized consulting fees?

I would not.

I quite like the write up’s comparison of Microsoft’s increasingly difficult-to-explain OpenAI, acqui-hire, and home-grown smart software to Clippy. I think that the more apt comparison is the outstanding Microsoft Bob solution to interface complexity.

The article explains that Oracle landed contracts with a discount, then Google, and now Microsoft. What about the smaller firms? Yeah, there are standard procurement guidelines for those outfits. Follow the rules and stop suggesting that giant companies are discounting their way into the US government.

What happens if these solutions hallucinate or do not deliver what an Inspector General, an Independent Verification & Validation team, or the Government Accountability Office expects? Here’s the answer:

With the exception of AWS, all the other OneGov deals that have been announced so far have a very short shelf life, with most expirations at the end of 2026. Critics of the OneGov program have raised concerns that OneGov deals have set government agencies up for a new era of vendor lock-in not seen since the early cloud days, where one-year discounts leave agencies dependent on services that could suddenly become considerably more expensive by the end of next year.

The write up quotes one smaller outfit’s senior manager’s concern about low prices. But the deals are done, and the work on the 2026-2027 statements of work has begun, folks. Small outfits often lack the luxury of staff dedicated to stretching a service provider’s engagement into a one- or two-year renewal.

The write up concludes by bringing up ancient history like those pop archaeologists on YouTube who explain that ancient technology created urns with handles. The write up says:

It was mere days ago that we reported on the Pentagon’s decision to formally bar Microsoft from using China-based engineers to support sensitive cloud services deployed by the Defense Department, a practice Defense Secretary Pete Hegseth called “mind-blowing” in a statement last week. Then there was last year’s episodes that allowed Chinese and Russian cyber spies to break into Exchange accounts used by high-level federal officials and steal a whole bunch of emails and other information. That incident, and plenty more before it, led former senior White House cyber policy director AJ Grotto to conclude that Microsoft was an honest-to-goodness national security threat. None of that has mattered much, as the feds seem content to continue paying Microsoft for its services, despite wagging their finger at Redmond for “avoidable errors.”

Ancient history or aliens? I don’t know. But Microsoft does deals, and it is tough to resist “free”.

Stephen E Arnold, September 10, 2025

Dr. Bob Clippy Will See You Now

September 8, 2025

I cannot wait for AI to replace my trusted human physician whom I’ve been seeing for years. “Microsoft Claims its AI Tool Can Diagnose Complex Medical Cases Four Times More Accurately than Doctors,” Fortune reports. The company made this incredible claim in a recent blog post. How did it determine this statistic? By taking the usual resources away from human doctors it pitted against its AI. Senior Reporter Alexa Mikhail tells us:

“The team at Microsoft noted the limitations of this research. For one, the physicians in the study had between five and 20 years of experience, but were unable to use textbooks, coworkers, or—ironically—generative AI for their answers. It could have limited their performance, as these resources may typically be available during a complex medical situation.”

You don’t say? Additionally, the study did not include everyday cases. You know, the sort doctors do not need to consult books or coworkers to diagnose. Seems legit. Microsoft says it sees the tool as a complement to doctors, not a replacement for them. That sounds familiar.

Mikhail notes AI already permeates healthcare: Most of us have looked up symptoms with AI-assisted Web searches. ChatGPT is actively being used as a psychotherapist (sometimes for better, often for worse). Many healthcare executives are eager to take this much, much further. So are about half of US patients and 63% of clinicians who, according to the 2025 Philips Future Health Index (FHI), expect AI to improve health outcomes. We hope they are correct, because there may be no turning back now.

Cynthia Murrell, September 8, 2025

AI Words Are the Surface: The Deeper Thought Embedding Is the Problem with AI

September 3, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

Humans are biased. Content generated by humans reflects these mental patterns. Smart software is probabilistic. So what?

Select the content to train smart software. The broader the content base, the greater the range of biases baked into the Fancy Dan software. Then toss in the human developers who make decisions about thresholds, weights, and rounding. Mix in the wrapper code that implements the guardrails, which are created by humans with some of those same biases, attitudes, and idiosyncratic mental equipment.

Then provide a system to students and people eager to get more done with less effort, and what do you get? A partial but important glimpse of the consequences of about 2.5 years of AI as the next big thing is presented in “On-Screen and Now IRL: FSU Researchers Find Evidence of ChatGPT Buzzwords Turning Up in Everyday Speech.”

The write up reports:

“The changes we are seeing in spoken language are pretty remarkable, especially when compared to historical trends,” Juzek said. “What stands out is the breadth of change: so many words are showing notable increases over a relatively short period. Given that these are all words typically overused by AI, it seems plausible to conjecture a link.”

Conjecture. That’s a weasel word. Once words are embedded, they drag a hard-sided carry-on with them.

The write up adds:

“Our research highlights many important ethical questions,” Galpin said. “With the ability of LLMs to influence human language comes larger questions about how model biases and misalignment, or differences in behavior in LLMs, may begin to influence human behaviors.”

As more research data become available, I project that several factoids will become points of discussion:

- What happens when AI outputs are weaponized for political, personal, or financial gain?

- How will people consuming AI outputs recognize that their vocabulary and the attendant “value baggage” are along for the life journey?

- What type of mental remapping can be accomplished with shaped AI output?

For now, students are happy to let AI think for them. In the future, will that warm, fuzzy feeling persist? If ignorance is bliss, I say, “Hello, happy.”

Stephen E Arnold, September 3, 2025

Copilot, Can You Crash That Financial Analysis?

August 22, 2025

No AI. Just a dinobaby working the old-fashioned way.

The ever-insouciant online service The Verge published a story about Microsoft, smart software, and Excel. “Microsoft Excel Adds Copilot AI to Help Fill in Spreadsheet Cells” reports:

Microsoft Excel is testing a new AI-powered function that can automatically fill cells in your spreadsheets, which is similar to the feature that Google Sheets rolled out in June.

Okay, quite specific intentionality: Fill in cells. And a dash of me-too. I like it.

However, the key statement in my opinion is:

The COPILOT function comes with a couple of limitations, as it can’t access information outside your spreadsheet, and you can only use it to calculate 100 functions every 10 minutes. Microsoft also warns against using the AI function for numerical calculations or in “high-stakes scenarios” with legal, regulatory, and compliance implications, as COPILOT “can give incorrect responses.”

I don’t want to make a big deal out of this passage, but I will do it anyway. First, Microsoft makes clear that the outputs can be incorrect. Second, don’t use it too much because I assume one will have to pay to use a system that “can give incorrect responses.” In short, MSFT is throttling Excel’s Copilot. Doesn’t everyone want to explore numbers with an addled Copilot known to flub numbers in a jet aircraft at 0.8 Mach?

I want to quote from “It Took Many Years And Billions Of Dollars, But Microsoft Finally Invented A Calculator That Is Wrong Sometimes”:

Think of it. Forty-five hundred years ago, if you were a Sumerian scribe, while your calculations on the world’s first abacus might have been laborious, you could be assured they’d be correct. Four hundred years ago, if you were palling around with William Oughtred, his new slide rule may have been a bit intimidating at first, but you could know its output was correct. In the 1980s, you could have bought the cheapest, shittiest Casio-knockoff calculator you could find, and used it exclusively, for every day of the rest of your life, and never once would it give anything but a correct answer. You could use it today! But now we have Microsoft apparently determining that “unpredictability” was something that some number of its customers wanted in their calculators.

I know that I sure do. I want to use a tool that is likely to convert “high-stakes scenarios” into an embarrassing failure. I mean who does not want this type of digital Copilot?

Why do I find this Excel with Copilot software interesting?

- It illustrates that accuracy has given way to close enough for horseshoes. Impressive for a company that can issue an update that could kill one’s storage devices.

- Microsoft no longer dances around hallucinations. The company just says, “The outputs can be wrong.” But I wonder, “Does Microsoft really mean it?” What about Red Bull-fueled MBAs handling one’s retirement accounts? Yeah, those people will be really careful.

- The article does not come out and say, “Looks like the AI rocket ship is losing altitude.”

- I cannot imagine sitting in a meeting and observing the rationalizations offered to justify releasing a product known to make NUMERICAL errors.

Net net: We are learning about the quality of [a] managerial processes at Microsoft, [b] the judgment of employees, and [c] the sheer craziness of an attorney saying, “Sure, release the product; just include an upfront statement that it will make mistakes.” Nothing builds trust more than a company anchored in customer-centric values.

Stephen E Arnold, August 22, 2025

The Bubbling Pot of Toxic Mediocrity? Microsoft LinkedIn. Who Knew?

August 19, 2025

No AI. Just a dinobaby working the old-fashioned way.

Microsoft has a magic touch. The company gets into Open Source; the founder “gits” out. Microsoft hires an engineer from Intel, asks some questions, and the new hire is whipped with a $34,000 fine and two years of mom looking in his drawers.

Now I read “Sunny Days Are Warm: Why LinkedIn Rewards Mediocrity.” The write up includes an outstanding metaphor in my opinion: Toxic Mediocrity. The write up says:

The vast majority of it falls into a category I would describe as Toxic Mediocrity. It’s soft, warm and hard to publicly call out but if you’re not deep in the bubble it reads like nonsense. Unlike it’s cousins ‘Toxic Positivity’ and ‘Toxic Masculinity’ it isn’t as immediately obvious. It’s content that spins itself as meaningful and insightful while providing very little of either. Underneath the one hundred and fifty words is, well, nothing. It’s a post that lets you know that sunny days are warm or its better not to be a total psychopath. What is anyone supposed to learn from that?

When I read a LinkedIn post it is usually referenced in an article I am reading. I like to follow these modern slippery footnotes. (If you want slippery, try finding interesting items about Pavel Durov in certain Russian sources.)

Here’s what I learn:

- A “member” makes clear that he or she has information of value. I must admit. Once in a while a useful post will turn up. Not often, but it has happened. I do know the person believes something about himself or herself. Try asking a GenAI about their personal “beliefs.” Let me know how that works.

- Members in a specific group with an active moderator often post items of interest. Instead of writing a blog like my unread one, these individuals identify an item and use LinkedIn as a “digital bulletin board,” like the one for people who shop at the same sporting goods store in rural Kentucky. (One sells breakfast items and weapons.)

- I get a sense of the jargon people use to explain their expertise. I work alone. I am writing a book. I don’t travel to conferences or client locations now. I rely on LinkedIn as the equivalent of going to a conference mixer and listening to the conversations.

That’s useful. I have a person who interacts on LinkedIn for me. I suppose my “experience” is therefore different from someone who visits the site, posts, and follows the antics of LinkedIn’s marketers as they try to get the surrogate me to pay to do what I do. (Guess what? I don’t pay.)

I noted this statement in the essay:

Honestly, the best approach is to remember that LinkedIn is a website owned by Microsoft, trying to make money for Microsoft, based on time spent on the site. Nothing you post there is going to change your career. Doing work that matters might. Drawing attention to that might. Go for depth over frequency.

I know that many people rely on LinkedIn to boost their self confidence. One of the people who worked for me moved to another city. I suggested that she give LinkedIn a whirl. She wrote interesting short items about her interests. She got good feedback. Her self confidence ticked up, and she landed a successful job. So there’s a use case for you.

On LinkedIn, you should be able to find a short item announcing each new post on my blog. Write me, and my surrogate will write you back with instructions about how to contact me. Why don’t I conduct conversations on LinkedIn? Have you checked out the telemetry functions in Microsoft software?

Stephen E Arnold, August 19, 2025

A Baloney Blizzard: What Is Missing? Oh, Nothing, Just Security

August 19, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

I do not know what a CVP is. I do know a baloney blizzard when I see one. How about these terms: Ambient, pervasive, and multi-modal. I interpret ambient as meaning temperature or music like the tunes honked in Manhattan elevators. Pervasive I view as surveillance; that is, one cannot escape the monitoring. What a clever idea. Who doesn’t want Microsoft Windows to be inescapable? And multi-modal sparks in me thoughts of a cave painting and a shaman. I like the idea of Windows intermediating for me.

Where did I get these three odd ball words? I read “Microsoft’s Windows Lead Says the Next Version of Windows Will Be More Ambient, Pervasive, and Multi-Modal As AI Redefines the Desktop Interface.” The source of this write up is an organization that absolutely loves Microsoft products and services.

Here’s a passage I noted:

Davuluri confirms that in the wake of AI, Windows is going to change significantly. The OS is going to become more ambient and multi-modal, capable of understanding the content on your screen at all times to enable context-aware capabilities that previously weren’t possible. Davuluri continues, “you’ll be able to speak to your computer while you’re writing, inking, or interacting with another person. You should be able to have a computer semantically understand your intent to interact with it.”

Very sci-fi. However, I don’t want to speak to my computer. I work in silence. My office is set up so I don’t have people interrupting, chattering, or asking me to go get donuts. My view is, “Send me an email or a text. Don’t bother me.” Is that why in many high-tech companies people wear earbuds? It is. They don’t want to talk, interact, or discuss Netflix. These people want to “work” or what they think is “work.”

Does Microsoft care? Of course not. Here’s a reasonably clear statement of what Microsoft is going to try and force upon me:

It’s clear that whatever is coming next for Windows, it’s going to promote voice as a first class input method on the platform. In addition to mouse and keyboard, you will be able to ambiently talk to Windows using natural language while you work, and have the OS understand your intent based on what’s currently on your screen.

Several observations:

- AI is not reliable

- Microsoft is running a surveillance operation in my opinion

- This is the outfit which created Bob and Clippy.

But the real message in this PR marketing content essay: Security is not mentioned. Does a secure operation want people talking about their work?

Stephen E Arnold, August 19, 2025

Microsoft: Knee Jerk Management Enigma

July 29, 2025

This blog post is the work of an authentic dinobaby. Sorry. Not even smart software can help this reptilian thinker.

I read “In New Memo, Microsoft CEO Addresses Enigma of Layoffs Amid Record Profits and AI Investments.” The write up says in a very NPR-like soft voice:

“This is the enigma of success in an industry that has no franchise value,” he wrote. “Progress isn’t linear. It’s dynamic, sometimes dissonant, and always demanding. But it’s also a new opportunity for us to shape, lead through, and have greater impact than ever before.” The memo represents Nadella’s most direct attempt yet to reconcile the fundamental contradictions facing Microsoft and many other tech companies as they adjust to the AI economy. Microsoft, in particular, has been grappling with employee discontent and internal questions about its culture following multiple rounds of layoffs.

Discontent. Maybe the summer of discontent. No, it’s a reshaping or re-invention of a play by William Shakespeare (allegedly) which borrows from Chaucer’s Troilus and Criseyde with a bit more emphasis on pettiness and corruption to add spice to Boccaccio’s antecedent. Willie’s Troilus and Cressida makes the “love affair” more ironic.

Ah, the Microsoft drama. Let’s recap: [a] Troilus and Cressida’s Two Kids: Satya and Sam; [b] the security woes of SharePoint (who knew? Eh, everyone); [c] buying green credits, or how much manure does a gondola rail car hold? [d] Copilot (are the fuel switches on? Nope); and [e] layoffs.

What’s the description of these issues? An enigma. This word seems to be popping up frequently. Here is how Venice, a smart software system, explains “enigma”:

The word “enigma” derives from the Greek “ainigma” (meaning “riddle” or “dark saying”), which itself stems from the verb “aigin” (“to speak darkly” or “to speak in riddles”). It entered Latin as “aenigma”, then evolved into Old French as “énigme” before being adopted into English in the 16th century. The term originally referred to a cryptic or allegorical statement requiring interpretation, later broadening to describe any mysterious, puzzling, or inexplicable person or thing. A notable modern example is the Enigma machine, a cipher device used in World War II, named for its perceived impenetrability. The shift from “riddle” to “mystery” reflects its linguistic journey through metaphorical extension.

Okay, let’s work through this definition.

- Troilus and Cressida or Satya and Sam. We have a tortured relationship. A bit of a war among the AI leaders, and a bit of the collapse of moral certainty. The play seems to be going nowhere. Okay, that fits.

- Security woes. Yep, the cipher device in World War II. Its security or lack of it contributed to a number of unpleasant outcomes for a certain nation state associated with beer and Rome’s failure to subjugate some folks.

- Manure. This seems to be a metaphorical extension. Paying “green” or money for excrement is a remarkable image. Enough said.

- Fuel switches and the subsequent crash, explosion, and death of some hapless PowerPoint users. This lines up with “puzzling.” How did those Word paragraphs just flip around? I didn’t do it. Does anyone know why? Of course not.

- Layoffs. Ah, an allegorical statement. Find your future elsewhere. There is a demand for life coaches, LinkedIn profile consultants, and lawn service workers.

Microsoft is indeed speaking darkly. The billions burned in the AI push have clouded the atmosphere in Softie Land. When the smoke clears, what will remain? My thought is that the items a to e mentioned above are going to leave some obvious environmental alterations. Yep, dark saying because knee jerk reactions are good enough.

Stephen E Arnold, July 29, 2025