Sales SEO: A New Tool for Hype and Questionable Relevance

February 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Search engine optimization is a relevance eraser. Now SEO has arrived for a human. “Microsoft Copilot Can Now Write the Sales Pitch of a Lifetime” makes clear that hiring is going to become more interesting for both human personnel directors (often called chief people officers) and AI-powered résumé screening systems. And for people who are responsible for procurement, figuring out when a marketing professional is tweaking the truth and hallucinating about a product or service will become a daily part of life… in theory.

Thanks for the carnival barker image, MSFT Copilot Bing thing. Good enough. I love the spelling of “asiractson”. With workers who may not be able to read, so what? Right?

The write up explains:

Microsoft Copilot for Sales uses specific data to bring insights and recommendations into its core apps, like Outlook, Microsoft Teams, and Word. With Copilot for Sales, users will be able to draft sales meeting briefs, summarize content, update CRM records directly from Outlook, view real-time sales insights during Teams calls, and generate content like sales pitches.

The article explains:

… Copilot for Service can pull in data from multiple sources, including public websites, SharePoint, and offline locations, in order to handle customer relations situations. It has similar features, including an email summary tool and content generation.

Why is MSFT expanding these interesting functions? Revenue. Paying extra unlocks these allegedly remarkable features. Prices range from $240 per year to a reasonable $600 per year per user. This is a small price to pay for an employee unable to craft solutions that sell, by golly.

Stephen E Arnold, February 5, 2024

Search Market Data: One Click to Oblivion Is Baloney, Mr. Google

January 24, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Do you remember the “one click away” phrase? The idea was and probably still is in the minds of some experts that any user can change search engines with a click. Eric Schmidt (the adult once in charge of the Google) also suggested that he is kept awake at night worrying about Qwant. I know? Qwant what?

“I have all the marbles,” says the much loved child. Thanks, MSFT second string Copilot Bing thing. Good enough.

I read an interesting factoid. I don’t know if the numbers are spot on, but the general impression of the information lines up with what my team and I have noted for decades. The relevance champions at Search Engine Roundtable published “Report: Bing Gained Less Than 1% Market Share Since Adding Bing Chat.”

Here’s a passage I found interesting:

Bloomberg reported on the StatCounter data, saying, “But Microsoft’s search engine ended 2023 with just 3.4% of the global search market, according to data analytics firm StatCounter, up less than 1 percentage point since the ChatGPT announcement.”

There’s a chart which shows Google’s alleged 91.6 percent Web search market share. I love the precision of a point six, don’t you? The write up includes a survey result suggesting that Bing would gain more market share.

Yeah, one click away. Oh, Qwant.com is still on line at https://www.qwant.com/. Rest easy, Google.

Stephen E Arnold, January 24, 2024

IBM Charges Toward Consulting Services: Does Don Quixote Work at Big Blue?

January 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

It is official. IBM consultants will use smart software to provide answers to clients. Why not ask the smart software directly and skip the consultants? Why aren’t IBM consultants sufficiently informed and intelligent to answer a client’s questions directly? Is IBM admitting that its consultants lack the knowledge depth and insight necessary to solve a client’s problems? Hmmm.

“IBM Introduces IBM Consulting Advantage, an AI Services Platform and Library of Assistants to Empower Consultants” asserts in corporate marketing lingo:

IBM Consulting Assistants are accessed through an intuitive conversational interface powered by IBM Watsonx, IBM’s AI and data platform. Consultants can toggle across multiple IBM and third-party generative AI models to compare outputs and select the right model for their task, and use the platform to rapidly build and share prompts and pre-trained assistants across teams or more widely across the consulting organization. The interface also enables easy uploading of project-specific documents for rapid insights that can then be shared into common business tools.

One of the key benefits of using smart software is to allow the IBM consultants to do more in the same billable hour. Thus, one can assume that billable hours will go up. “Efficiency” may not equate to revenue generation if the AI-assisted humanoids deliver incorrect, off-point, or unverifiable outputs.

A winner with a certain large company’s sure fire technology. Thanks, MSFT second string Copilot Bing thing. Good enough.

What can the AI-turbo charged system do? A lot. Here’s what IBM marketing asserts:

The IBM Consulting Advantage platform will be applied across the breadth of IBM Consulting’s services, spanning strategy, experience, technology and operations. It is designed to work in combination with IBM Garage, a proven, collaborative engagement model to help clients fast-track innovation, realize value three times faster than traditional approaches, and transparently track business outcomes. Today’s announcement builds on IBM Consulting’s concrete steps in 2023 to further expand its expertise, tools and methods to help accelerate clients’ business transformations with enterprise-grade AI…. IBM Consulting helps accelerate business transformation for our clients through hybrid cloud and AI technologies, leveraging our open ecosystem of partners. With deep industry expertise spanning strategy, experience design, technology, and operations, we have become the trusted partner to many of the world’s most innovative and valuable companies, helping modernize and secure their most complex systems. Our 160,000 consultants embrace an open way of working and apply our proven, collaborative engagement model, IBM Garage, to scale ideas into outcomes.

I have some questions; for example:

- Will IBM hire less qualified and less expensive humans, assuming that smart software lifts them up to super star status?

- Will the system be hallucination proof; that is, what procedure ensures that decisions based on smart software assisted outputs are based on factual, reliable information?

- When a consulting engagement goes off the rails, how will IBM allocate responsibility; for example, 100 percent to the human, 50 percent to the human and 50 percent to those who were involved in building the model, or 100 percent to the client since the client made a decision and consultants just provide options and recommendations?

I look forward to IBM Watsonx’s revolutionizing consulting related to migrating COBOL from a mainframe to a hybrid environment relying on a distributed network with diverse software. Will Watsonx participate in Jeopardy again?

Stephen E Arnold, January 23, 2024

Cyber Security Software and AI: Man and Machine Hook Up

January 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

My hunch is that 2024 is going to be quite interesting with regards to cyber security. The race among policeware vendors to add “artificial intelligence” to their systems began shortly after Microsoft’s ChatGPT moment. Smart agents, predictive analytics coupled to text sources, real-time alerts from smart image monitoring systems are three application spaces getting AI boosts. The efforts are commendable if over-hyped. One high-profile firm’s online webinar presented jargon and buzzwords but zero evidence of the conviction or closure value of the smart enhancements.

The smart cyber security software system outputs alerts which the system manager cannot escape. Thanks, MSFT Copilot Bing thing. You produced a workable illustration without slapping my request across my face. Good enough too.

Let’s accept as a working premise that everyone from my French bulldog to my neighbor’s ex wife wants smart software to bring back the good old, pre-Covid, go-go days. Also, I stipulate that one should ignore the fact that smart software is a demonstration of how numerical recipes can output “good enough” data. Hallucinations, errors, and close-enough-for-horseshoes are part of the method. What’s the likelihood the door of a commercial aircraft would be removed from an aircraft in flight? Answer: Well, most flights don’t lose their doors. Stop worrying. Those are the rules for this essay.

Let’s look at “The I in LLM Stands for Intelligence.” I grant the title may not be the best one I have spotted this month, but here’s the main point of the article in my opinion. Writing about automated threat and security alerts, the essay opines:

When reports are made to look better and to appear to have a point, it takes a longer time for us to research and eventually discard it. Every security report has to have a human spend time to look at it and assess what it means. The better the crap, the longer time and the more energy we have to spend on the report until we close it. A crap report does not help the project at all. It instead takes away developer time and energy from something productive. Partly because security work is consider one of the most important areas so it tends to trump almost everything else.

The idea is that strapping on some smart software can increase the outputs from a security alerting system. Instead of helping the overworked and often reviled cyber security professional, the smart software makes it more difficult to figure out what a bad actor has done. The essay includes this blunt section heading: “Detecting AI Crap.” Enough said.

The idea is that more human expertise is needed. The smart software becomes a problem, not a solution.

I want to shift attention to the managers or the employee who caused a cyber security breach. In what is another zinger of a title, let’s look at this research report, “The Immediate Victims of the Con Would Rather Act As If the Con Never Happened. Instead, They’re Mad at the Outsiders Who Showed Them That They Were Being Fooled.” Okay, this is the ostrich method. Deny stuff by burying one’s head in digital sand like TikToks.

The write up explains:

The immediate victims of the con would rather act as if the con never happened. Instead, they’re mad at the outsiders who showed them that they were being fooled.

Let’s assume the data in this “Victims” write up are accurate, verifiable, and unbiased. (Yeah, I know that is a stretch.)

What do these two articles do to influence my view that cyber security will be an interesting topic in 2024? My answers are:

- Smart software will allegedly detect, alert, and warn of “issues.” The flow of “issues” may overwhelm or numb staff who must decide what’s real and what’s a fakeroo. Burdened staff can make errors, thus increasing security vulnerabilities or missing ones that are significant.

- Managers, like the staffer who lost a mobile phone with company passwords in a plain text note file or an email called “passwords,” will blame whoever blows the whistle. The result is the willful refusal to talk about what happened, why, and the consequences. Examples range from big libraries in the UK to can kicking hospitals in a flyover state like Kentucky.

- Marketers of remediation tools will have a banner year. Marketing collateral becomes a closed deal making the art history majors writing copy secure in their job at a cyber security company.

Will bad actors pay attention to smart software and the behavior of senior managers who want to protect share price or their own job? Yep. Close attention.

Stephen E Arnold, January 8, 2024

IBM: AI Marketing Like It Was 2004

January 5, 2024

This essay is the work of a dumb dinobaby. No smart software required. Note: The word “dinobaby” is — I have heard — a coinage of IBM. The meaning is an old employee who is no longer wanted due to salary, health care costs, and grousing about how the “new” IBM is not the “old” IBM. I am a proud user of the term, and I want to switch my tail to the person who whipped up the word.

What’s the future of AI? The answer depends on whom one asks. IBM, however, wants to give it the old college try and answer the question so people forget about the Era of Watson. There’s a new Watson in town, or at least, there is a new Watson at the old IBM url. IBM has an interesting cluster of information on its Web site. The heading is “Forward Thinking: Experts Reveal What’s Next for AI.”

IBM crows that it “spoke with 30 artificial intelligence visionaries to learn what it will take to push the technology to the next level.” Five of these interviews are now available on the IBM Web site. My hunch is that IBM will post new interviews, hit the news release button, post some links on social media, and then hit the “Reply” button.

Can IBM ignite excitement and capture the revenues it wants from artificial intelligence? That’s a good question, and I want to ask the expert in the cartoon for an answer. Unfortunately only customers and their decisions matter for AI thought leaders unless the intended audience is start ups, professors, and employees. Thanks, MSFT Copilot Bing thing. Good enough.

As I read the interviews, I thought about the challenge of predicting where smart software would go as it moved toward its “what’s next.” Here’s a mini-glimpse of what the IBM visionaries have to offer. Note that I asked Microsoft’s smart software to create an image capturing the expert sitting in an office surrounded by memorabilia.

Kevin Kelly (the author of What Technology Wants) says: “Throughout the business world, every company these days is basically in the data business and they’re going to need AI to civilize and digest big data and make sense out of it—big data without AI is a big headache.” My thought is that IBM is going to make clear that it can help companies with deep pockets tackle these big data and AI them. Does AI want something, or do those trying to generate revenue want something?

Mark Sagar (creator of BabyX) says: “We have had an exponential rise in the amount of video posted online through social media, etc. The increased use of video analysis in conjunction with contextual analysis will end up being an extremely important learning resource for recognizing all kinds of aspects of behavior and situations. This will have wide ranging social impact from security to training to more general knowledge for machines.” Maybe IBM will TikTok itself?

Chieko Asakawa (an unsighted IBM professional) says: “We use machine learning to teach the system to leverage sensors in smartphones as well as Bluetooth radio waves from beacons to determine your location. To provide detailed information that the visually impaired need to explore the real world, beacons have to be placed between every 5 to 10 meters. These can be built into building structures pretty easily today.” I wonder if the technology has surveillance utility?

Yoshua Bengio (seller of an AI company to ServiceNow) says: “AI will allow for much more personalized medicine and bring a revolution in the use of large medical datasets.” IBM appears to have forgotten about its Houston medical adventure and Mr. Bengio found it not worth mentioning I assume.

Margaret Boden (a former Harvard professor without much of a connection to Harvard’s made up data and administrative turmoil) says: “Right now, many of us come at AI from within our own silos and that’s holding us back.” Aren’t silos necessary for security, protecting intellectual property, and getting tenure? Probably the “silobreaking” will become a reality.

Several observations:

- IBM is clearly trying hard to market itself as a thought leader in artificial intelligence. The Jeopardy play did not warrant a replay.

- IBM is spending money to position itself as a Big Dog pulling the AI sleigh. The MIT tie up and this AI Web extravaganza are evidence that IBM is [a] afraid of flubbing again, [b] going to market its way to importance, [c] trying to get traction as outfits like OpenAI, Mistral, and others capture attention in the US and Europe.

- IBM’s ability to generate awareness of its thought leadership in AI underscores one of the challenges the firm faces in 2024.

Net net: The company that coined the term “dinobaby” has its work cut out for itself in my opinion. Is Jeopardy looking like a channel again?

Stephen E Arnold, January 5, 2024

Does Amazon Do Questionable Stuff? Sponsored Listings? Hmmm.

January 4, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Amazon, eBay, and other selling platforms allow vendors to buy sponsored ads or listings. Sponsored ads or listings promote products and services to the top of search results. It’s similar to how Google sells ads. Unfortunately Google’s search results are polluted with more sponsored ads than organic results. Sponsored ads might not be a wise investment. Pluralistic explains that sponsored ads are really a huge waste of money: “Sponsored Listings Are A Ripoff For Sellers.”

Amazon relies on a payola sponsored ad system, where sellers bid to be the top-ranked in listings even if their products don’t apply to a search query. Payola systems are illegal but Amazon makes $31 billion annually from its system. The problem is that the $31 billion is taken from Amazon sellers who pay it in fees for the privilege to sell on the platform. Sellers then recoup that money from consumers and prices are raised across all the markets. Amazon controls pricing on the Internet.

Another huge part of a seller’s budget is for Amazon advertising. If sellers don’t buy ads in searches that correspond to their products, they’re kicked off the first page. The Amazon payola system only benefits the company and sellers who pay into the payola. Three business-school researchers Vibhanshu Abhishek, Jiaqi Shi and Mingyu Joo studied the harmful effects of payolas:

“After doing a lot of impressive quantitative work, the authors conclude that for good sellers, showing up as a sponsored listing makes buyers trust their products less than if they floated to the top of the results "organically." This means that buying an ad makes your product less attractive than not buying an ad. The exception is sellers who have bad products – products that wouldn’t rise to the top of the results on their own merits. The study finds that if you buy your mediocre product’s way to the top of the results, buyers trust it more than they would if they found it buried deep on page eleventy-million, to which its poor reviews, quality or price would normally banish it. But of course, if you’re one of those good sellers, you can’t simply opt not to buy an ad, even though seeing it with the little "AD" marker in the thumbnail makes your product less attractive to shoppers. If you don’t pay the danegeld, your product will be pushed down by the inferior products whose sellers are only too happy to pay ransom.”

It’s getting harder to compete and make a living on online selling platforms. It would be great if Amazon sided with the indy sellers and quit the payola system. That will never happen.

Whitney Grace, January 4, 2024

Ignoring the Big Thing: Google and Its PR Hunger

December 18, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I read “FunSearch: Making New Discoveries in Mathematical Sciences Using Large Language Models.” The main idea is that Google’s smart software is — once again — going where no mortal man has gone before. The write up states:

Today, in a paper published in Nature, we introduce FunSearch, a method to search for new solutions in mathematics and computer science. FunSearch works by pairing a pre-trained LLM, whose goal is to provide creative solutions in the form of computer code, with an automated “evaluator”, which guards against hallucinations and incorrect ideas. By iterating back-and-forth between these two components, initial solutions “evolve” into new knowledge. The system searches for “functions” written in computer code; hence the name FunSearch.

I like the idea of getting the write up in Nature, a respected journal. I like even better the idea of Google-splaining how a large language model can do mathy things. I absolutely love the idea of “new.”

“What’s with the pointed stick? I needed a wheel,” says the disappointed user of an advanced technology in days of yore. Thanks, MSFT Copilot. Good enough, which is a standard of excellence in smart software in my opinion.

Here’s a wonderful observation summing up Google’s latest development in smart software:

FunSearch is like one of those rocket cars that people make once in a while to break land speed records. Extremely expensive, extremely impractical and terminally over-specialized to do one thing, and do that thing only. And, ultimately, a bit of a show. YeGoblynQueenne via YCombinator.

My question is, “Is Google dusting a code brute force method with marketing sprinkles?” I assume that the approach can be enhanced with more tuning of the evaluator. I am not silly enough to ask if Google will explain the settings, threshold knobs, and probability levers operating behind the scenes.

Google’s prose makes the achievement clear:

This work represents the first time a new discovery has been made for challenging open problems in science or mathematics using LLMs. FunSearch discovered new solutions for the cap set problem, a longstanding open problem in mathematics. In addition, to demonstrate the practical usefulness of FunSearch, we used it to discover more effective algorithms for the “bin-packing” problem, which has ubiquitous applications such as making data centers more efficient.

The search for more effective algorithms is a never-ending quest. Who bothers to learn how to get a printer to spit out “Hello, World”? Today I am pleased if my printer outputs a Gmail message. And bin-packing is now solved. Good.

As I read the blog post, I found the focus on large language models interesting. But that evaluator strikes me as something of considerable interest. When smart software discovers something new, who or what allows the evaluator to “know” that something “new” is emerging? That evaluator must be something to prevent hallucination (a fancy term for making stuff up) and blocking the innovation process. I won’t raise any Philosophy 101 questions, but I will say, “Google has the keys to the universe” with sprinkles too.

There’s a picture too. But where’s the evaluator? Simplification is one thing, but skipping over the system and method that prevents smart software hallucinations (falsehoods, mistakes, and craziness) is quite another.

Google is not a company to shy from innovation from its human wizards. If one thinks about the thrust of the blog post, will these Googlers be needed? Google’s innovativeness has drifted toward me-too behavior and being clever with advertising.

The blog post concludes:

FunSearch demonstrates that if we safeguard against LLMs’ hallucinations, the power of these models can be harnessed not only to produce new mathematical discoveries, but also to reveal potentially impactful solutions to important real-world problems.

I agree. But the “how” hangs above the marketing. But when a company has quantum supremacy, the grimness of the recent court loss, and assorted legal hassles — what is this magical evaluator?

I find Google’s deal to use facial recognition to assist the UK in enforcing what appears to be “stop porn” regulations more in line with what Google’s smart software can do. The “new” math? Eh, maybe. But analyzing every person trying to access a porn site and having the technical infrastructure to perform cross correlation. Now that’s something that will be of interest to governments and commercial customers.

The bin thing and a short cut for a python script. Interesting but it lacks the practical “big bucks now” potential of the facial recognition play. That, as far as I know, was not written up and ponied around to prestigious journals. To me, that was news, not the FUN as a cute reminder of a “function” search.

Stephen E Arnold, December 18, 2023

Weaponizing AI Information for Rubes with Googley Fakes

December 8, 2023

This essay is the work of a dumb dinobaby. No smart software required.

From the “Hey, rube” department: “Google Admits That a Gemini AI Demo Video Was Staged” reports as actual factual:

There was no voice interaction, nor was the demo happening in real time.

Young Star Wars’ fans learn the truth behind the scenes which thrill them. Thanks, MSFT Copilot. One try and some work with the speech bubble and I was good to go.

And to what magical event does this mysterious statement refer? The Google Gemini announcement. Yep, 16 Hollywood style videos of “reality.” Engadget asserts:

Google is counting on its very own GPT-4 competitor, Gemini, so much that it staged parts of a recent demo video. In an opinion piece, Bloomberg says Google admits that for its video titled “Hands-on with Gemini: Interacting with multimodal AI,” not only was it edited to speed up the outputs (which was declared in the video description), but the implied voice interaction between the human user and the AI was actually non-existent.

The article makes what I think is a rather gentle statement:

This is far less impressive than the video wants to mislead us into thinking, and worse yet, the lack of disclaimer about the actual input method makes Gemini’s readiness rather questionable.

Hopefully sometime in the near future Googlers can make reality from Hollywood-type fantasies. After all, policeware vendors have been trying to deliver a Minority Report-type of investigative experience for a heck of a lot longer.

What’s the most interesting part of the Google AI achievement? I think it illuminates the thinking of those who live in an ethical galaxy far, far away… if true, of course. Of course. I wonder if the same “fake it til you make it” approach applies to other Google activities?

Stephen E Arnold, December 8, 2023

Google Smart Software Titbits: Post Gemini Edition

December 8, 2023

This essay is the work of a dumb dinobaby. No smart software required.

In the Apple-inspired roll out of Google Gemini, the excitement is palpable. Is your heart palpitating? Ah, no. Neither is mine. Nevertheless, in the aftershock of a blockbuster “me too” the knowledge shrapnel has peppered my dinobaby lair; to wit: Gemini, according to Wired, is a “new breed” of AI. The source? Google’s Demis Hassabis.

What happens when the marketing does not align with the user experience? Tell the hardware wizards to shift into high gear, of course. Then tell the marketing professionals to evolve the story. Thanks, MSFT Copilot. You know I think you enjoyed generating this image.

Navigate to “Google Confirms That Its Cofounder Sergey Brin Played a Key Role in Creating Its ChatGPT Rival.” That’s a clickable headline. The write up asserts: “Google hinted that its cofounder Sergey Brin played a key role in the tech giant’s AI push.”

Interesting. One person involved in both Google and OpenAI. And Google responding to OpenAI after one year? Management brilliance or another high school science club method? The right information at the right time is nine-tenths of any battle. Was Google not processing information? Was the information it received about OpenAI incorrect or weaponized? Now Gemini is a “new breed” of AI. The Verge reports that McDonald’s burger joints will use Google AI to “make sure your fries are fresh.”

Google has been busy in non-AI areas; for instance:

- The Register asserts that a US senator claims Google and Apple reveal push notification data to non-US nation states

- Google has ramped up its donations to universities, according to TechMeme

- Lost files you thought were in Google Drive? Never fear. Google has a software tool you can use to fix your problem. Well, that’s what Engadget says.

So an AI problem? What problem?

Stephen E Arnold, December 8, 2023

Gemini Twins: Which Is Good? Which Is Evil? Think Hard

December 6, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I received a link to a Google DeepMind marketing demonstration Web page called “Welcome to Gemini.” To me, Gemini means Castor and Pollux. Somewhere along the line, someone (maybe a wonky professor named Chapman) told my class that these two represented Zeus and Hades. Stated another way, one was a sort of “good” deity with a penchant for non-godlike behavior. The other was downright awful most of the time. I assume that Google knows about Gemini, its mythological baggage, and the duality of a Superman type doing the truth, justice, and the American way thing, and the other inspiring a range of bad actors. Imagine. Something that is good and bad. That’s smart software I assume. The good part sells ads; the bad part fails at marketing perhaps?

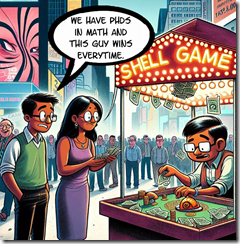

Two smart Googlers in New York City learn the difference between book learning for a PhD and street learning for a degree from the Institute of Hard Knocks. Thanks, MSFT Copilot. (Are you monitoring Google’s effort to dominate smart software by announcing breakthroughs very few people understand? Are you finding Google’s losses at the AI shell game entertaining?)

Google’s blog post states with rhetorical aplomb:

Gemini is built from the ground up for multimodality — reasoning seamlessly across text, images, video, audio, and code.

Well, any other AI using Google’s previous technology is officially behind the curve. That’s clear to me. I wonder if Sam AI-Man, Microsoft, and the users of ChatGPT are tuned to the Google wavelength? There’s a video or more accurately more than a dozen of them, but I don’t like video so I skipped them all. There are graphs with minimal data and some that appear to jiggle in “real” time. I skipped those too. There are tables. I did read some of the data and learned that Gemini can do basic arithmetic and “challenging” math like geometry. That is the 3, 4, 5 triangle stuff. I wonder how many people under the age of 18 know how to use a tape measure to determine if a corner is 90 degrees? (If you don’t, why not ask ChatGPT or MSFT Copilot?) I processed the twins’ sizes, which come in three. Do twins come in triples? Sigh. Anyway one can use Gemini Ultra, Gemini Pro, and Gemini Nano. Okay, but I am hung up on the twins and the three sizes. Sorry. I am a dinobaby. There are more movies. I exited the site and navigated to YCombinator’s Hacker News. Didn’t Sam AI-Man have a brush with that outfit?

You can find the comments about Gemini at this link. I want to highlight several quotations I found suggestive. Then I want to offer a few observations based on my conversation with my research team.

Here are some representative statements from the YCombinator’s forum:

- Jansan said: Yes, it [Google] is very successful in replacing useful results with links to shopping sites.

- FrustratedMonkey said: Well, deepmind was doing amazing stuff before OpenAI. AlphaGo, AlphaFold, AlphaStar. They were groundbreaking a long time ago. They just happened to miss the LLM surge.

- Wddkcs said: Googles best work is in the past, their current offerings are underwhelming, even if foundational to the progress of others.

- Foobar said: The whole things reeks of being desperate. Half the video is jerking themselves off that they’ve done AI longer than anyone and they “release” (not actually available in most countries) a model that is only marginally better than the current GPT4 in cherry-picked metrics after nearly a year of lead-time?

- Arson9416 said: Google is playing catchup while pretending that they’ve been at the forefront of this latest AI wave. This translates to a lot of talk and not a lot of action. OpenAI knew that just putting ChatGPT in peoples hands would ignite the internet more than a couple of over-produced marketing videos. Google needs to take a page from OpenAI’s playbook.

Please, work through the more than 600 comments about Gemini and reach your own conclusions. Here are mine:

- The Google is trying to market using rhetorical tricks and big-brain hot buttons. The effort comes across to me as similar to Ford’s marketing of the Edsel.

- Sam AI-Man remains the man in AI. Coups, tension, and chaos — irrelevant. The future for many means ChatGPT.

- The comment about timing is a killer. Google missed the train. The company wants to catch up, but it is not shipping products nor being associated with features grade school kids and harried marketers with degrees in art history can use now.

Sundar Pichai is not Sam AI-Man. The difference has become clear in the last year. If Sundar and Sam are twins, which represents what?

Stephen E Arnold, December 6, 2023
