Apple and Google: Lots of Nots, Nos, and Talk

January 15, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

This is the dinobaby, an 81 year old dinobaby. In my 60 plus year work career I have been around, in, and through what I call “not and no” PR. The basic idea is that one floods the zones with statements about what an organization will do. Examples range from “our Wi-Fi sniffers will not log home access point data” to “our AI service will not capture personal details” to “our security policies will not hamper usability of our devices.” I could go on, but each of these statements were uttered in meetings, in conference “hallway” conversations, or in public podcasts.

Thanks, Venice.ai. Good enough. See I am prevaricating. This image sucks. The logos are weird. GW looks like a wax figure.

I want to tell you that if the Nots and Nos identified in the flood of write ups about the Apple Google AI tie up immutable like Milton’s description of his God, the nots and nos are essentially pre-emptive PR. Both firms are data collection systems. The nature of the online world is that data are logged, metadata captured and mindlessly processed for a statistical signal, and content processed simply because “why not?”

Here’s a representative write up about the Apple Google nots and nos: “Report: Apple to Fine-Tune Gemini Independently, No Google Branding on Siri, More.” So what’s the more that these estimable firms will not do? Here’s an example:

Although the final experience may change from the current implementation, this partly echoes a Bloomberg report from late last year, in which Mark Gurman said: “I don’t expect either company to ever discuss this partnership publicly, and you shouldn’t expect this to mean Siri will be flooded with Google services or Gemini features already found on Android devices. It just means Siri will be powered by a model that can actually provide the AI features that users expect — all with an Apple user interface.”

How about this write up: “Official: Apple Intelligence & Siri To Be Powered By Google Gemini.”

Source details how Apple’s Gemini deal works: new Siri features launching in spring and at WWDC, Apple can finetune Gemini, no Google branding, and more

Let’s think about what a person who thinks the way my team does. Here are what we can do with these nots and nos:

- Just log everything and don’t talk about the data

- Develop specialized features that provide new information about use of the AI service

- Monitor the actions of our partners so we can be prepared or just pounce on good ideas captured with our “phone home” code

- Skew the functionality so that our partners become more dependent on our products and services; for example, exclusive features only for their users.

The possibilities are endless. Depending upon the incentives and controls put in place for this tie up, the employees of Apple and Google may do what’s needed to hit their goals. One can do PR about what won’t happen but the reality of certain big technology companies is that these outfits defy normal ethical boundaries, view themselves as the equivalent of nation states, and have a track record of insisting that bending mobile devices do not bend and that information of a personal nature is not cross correlated.

Watch the pre-emptive PR moves by Apple and Google. These outfits care about their worlds, not those of the user.

Just keep in mind that I am an old, very old, dinobaby. I have some experience in these matters.

Stephen E Arnold, January 15, 2025

Security Chaos: So We Just Live with Failure?

January 14, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I read a write up that baffled me. The article appeared in what I consider a content marketing or pay to play publication. I may be wrong, but the content usually hits me as an infomercial. The story arresting my attention this morning (January 13, 2026) is “The 11 Runtime Attacks Breaking AI Security — And How CISOs Are Stopping Them.” I expected a how to. What did the write up deliver? Confusion and a question, “So we just give up?”

The article contains this cheerful statement from a consulting firm. Yellow lights flashed. I read this:

Gartner’s research puts it bluntly: “Businesses will embrace generative AI, regardless of security.” The firm found 89% of business technologists would bypass cybersecurity guidance to meet a business objective. Shadow AI isn’t a risk — it’s a certainty.

Does this mean that AI takes precedence over security?

The article spells out 11 different threats and provides solutions to each. The logic of the “stopping runtime attacks” with methods now available struck me as a remarkable suggestion.

The mice are the bad actors. Notice that the capable security system is now unable to deal with the little creatures. The realtime threats overwhelmed the expensive much hyped-cyber cat. Thanks, Venice.ai. Good enough.

Let’s look at three of the 11 threats and their solutions. Please, read the entire write up and make you own decision about the other eight problems presented and allegedly solved.

The first threat is called “multi turn crescendo attacks.” I had no idea what this meant when I read the phrase. That’s okay. I am a dinobaby and a stupid one at that. It turns out that this fancy phrase means that a bad actor plans prompts that work incrementally. The AI system responds. Then responds to another weaponized prompt. Over a series of prompts, the bad actor gets what he or she wants out of the system. ChatGPT and Gemini are vulnerable to this orchestrated prompt sequence. What’s the fix? I quote:

Stateful context tracking, maintaining conversation history, and flagging escalation patterns.

Really? I am not sure that LLM outfits or licensees have the tools and the technical resources to implement these linked functions. Furthermore, in the cat and mouse approach to security, the mice are many. The find and react approach is not congruent with runtime threats.

Another threat is synthetic identify fraud. The idea is that AI creates life like humans, statements, and supporting materials. For me, synthetic identities are phishing attacks on steroids. People are fooled by voice, video and voice, email, and SMS attacks. Some companies hire people who are not people because AI technology advances in real time. How does one fix this? The solution is, and I quote:

Multi-factor verification incorporating behavioral signals beyond static identity attributes, plus anomaly detection trained on synthetic identity patterns.

But when AI synthetic identity technology improves how will today’s solutions deal with the new spin from bad actors? Answer: They have not, cannot, and will not with the present solutions.

The last threat I will highlight is obfuscation attacks or fiddling with AI prompts. Developers of LLMs are in a cat and mouse game. Right now the mice are winning for one simple reason: The wizards developing these systems don’t have the perspective of bad actors. LLM developers just want to ship and slap on fixes that stop a discovered or exposed attack vector. What’s the fix? The solution, and I quote, is:

Wrap retrieved data in delimiters, instructing the model to treat content as data only. Strip control tokens from vector database chunks before they enter the context window.

How does this work when new attacks occur and are discovered? Not very well because the burden falls upon the outfit using the LLM. Do licensees have appropriate technical resources to “wrap retrieved data in delimiters” when the exploit may just work but no one is exactly sure why. Who knew that prompts in iambic pentameter or gibberish with embedded prompts ignore “guardrails”? The realtime is the killer. Licensees are not equipped to react and I am not confident smart AI cyber security systems are either.

Net net: Amazon Web Services will deal with these threats. Believe it or not. (I don’t believe it, but your mileage may vary.)

Stephen E Arnold, January 14, 2026

So What Smart Software Is Doing the Coding for Lagging Googlers?

January 13, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I read “Google Programmer Claims AI Solved a Problem That Took Human Coders a Year.” I assume that I am supposed to divine that I should fill in “to crack,” “to solve,” or “to develop”? Furthermore, I don’t know if the information in the write up is accurate or if it is a bit of fluff devised by an art history major who got a job with a PR firm supporting Google.

I like the way a Googler uses Anthropic to outperform Googlers (I think). Anyway, thanks, ChatGPT, good enough.

The company’s commitment to praise its AI technology is notable. Other AI firms toss out some baloney before their “leadership” has a meeting with angry investors. Google, on the other hand, pumps out toots and confetti with appalling regularity.

This particular write up states:

Paul [a person with inside knowledge about Google’s AI coding system] passed on secondhand knowledge from "a Principal Engineer at Google [that] Claude Code matched 1 year of team output in 1 hour."

Okay, that’s about as unsupported an assertion I have seen this morning. The write up continues:

San Francisco-based programmer Jaana Dogan chimed in, outing herself as the Google engineer cited by Paul. "We have been trying to build distributed agent orchestrators at Google since last year," she commented. "There are various options, not everyone is aligned … I gave Claude Code a description of the problem, it generated what we built last year in an hour."

So the “anonymous” programmer is Jaana Dogan. She did not use Opal, Google’s own smart software. Ms. Dogan used the coding tools from Anthropic? Is this what the cited passage is telling me?

Let’s think about these statements for a moment:

- Perhaps Google’s coders were doom scrolling, playing Foosball, or thinking about how they could land a huge salary at Meta now that AI staff are allegedly jump off the good ship Zuck Up? Therefore, smart software could indeed produce code that took the Googlers one year to produce. Googlers are not necessarily productive unless it is in the PR department or the legal department.

- Is Google’s own coding capability so lousy that Googlers armed with Opal and other Googley smart software could not complete a project with software Google is pitching as the greatest thing since Google landed a Nobel Prize?

- Is the Anthropic software that much better than Google’s or Microsoft’s smart coding system? My experience is that none of these systems are that different from one another. In fact, I am not sure that new releases are much better than the systems we have tested over the last 12 months.

The larger question is, “Why does Google have to promote its approach to AI so relentlessly?” Why is Google using another firm’s smart software and presenting its use in a confusing way?

My answer to both these questions is, “Google has a big time inferiority complex. It is as if the leadership of Google believes that grandma is standing behind them when they were 12 years old. When attention flags doing homework, grandma bats the family loser with her open palm. “Do better. Concentrate,” she snarls at the hapless student.

Thus, PR emanates PR that seems to be about its own capabilities and staff while promoting a smart coding tool from another firm. What’s clear is that the need for PR coverage outpaces common sense and planning. Google is trying hard to convince people that AI is the greatest thing since ping pong tables at the office.

Stephen E Arnold, January 13, 2025

The Lineage of Bob: Microsoft to IBM

January 8, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Product names have interested me. I am not too clever. I started “Beyond Search” in 2008. The name wasn’t my idea. At lunch someone said, “We do a lot of search stuff.” Another person (maybe Shakes) said, “Let’s go beyond search, okay.” That was it. I was equally uninspired when I named our new information service “Telegram Notes.” One of my developers said, “What’s with these notecards?” I replied, “Those are my Telegram notes.” There it was. The name.

I wrote a semi-humorous for me post about Microsoft Cowpilot. Oh, sorry, I meant Copilot. The write up featured a picture of a cow standing in a bit of a mess of its own making. Yeah, hah hah. I referenced New Coke and a couple of other naming decisions that just did not work out.

In October 2025, just when I thought the lawn mowing season was ending because the noise drives me bonkers, I read about “Project Bob.” If you have not heard of it, this is an IBM initiative or what IBM calls a product. I know that IBM is a consulting and integration outfit, but this Bob is a product. IBM said when the leaves were choking my gutters:

IBM Project Bob isn’t just another coding assistant—it’s your AI development partner. Designed to work the way you do, Project Bob adapts to your workflow from design to deployment. Whether you’re modernizing legacy systems or building something entirely new, Bob helps you ship quality code faster. With agentic workflows, built-in security and enterprise-grade deployment flexibility, Bob doesn’t just automate tasks—it transforms the entire software development lifecycle. From modernization projects to new application builds, Bob makes development smarter, safer and more efficient. — Neel Sundares, General Manager, Automation and AI, IBM

I gave a couple of lectures about this time. In one of them I illustrated AI coding using Anthropic Claude. The audience yawned. Getting some smart software to write simple scripts was not exactly a big time insight.

But Bob, according to Mr. Sundares, General Manager of Automation and AI is different. He wrote:

Think of Bob as your AI-first IDE and pair developer: a tool that understands your intent, your codebase and your organization’s standards.

- Understands your intent: Switch into Architect Mode to scope and design complex systems or collaborate in Code Mode to move fast and iterate efficiently.

- Understands your repo: Bob reads your codebase, modernizes frameworks, refactors at scale and re-platforms with full context.

- Understands your standards: With built-in expertise for FedRAMP, HIPAA and PCI, Project Bob helps you deliver secure, production-ready code every time.

The Register, a UK online publication, wrote:

Security researchers at PromptArmor have been evaluating Bob prior to general release and have found that IBM’s “AI development partner” can be manipulated into executing malware. They report that the CLI is vulnerable to prompt injection attacks that allow malware execution and that the IDE is vulnerable to common AI-specific data exfiltration vectors.

Bob, if the Register is on the money, has some exploitable features too.

Okay, no surprise.

What is interesting is that IBM chose the name Bob for this “product”, the one with exploitable features.

Does anyone remember Microsoft Bob? I do. My recollection is that it presented a friendly, cartoon like interface. The objects in the room represented Microsoft applications. For example, click on the paper and word processing would open. Want to know the time? Click on the clock. If you did not know what to do, you could click on the dog. That was the help. The dog would guide you.

Screenshot from Habr.ru, but I am sure the image is the property of the estimable Microsoft Corporation. I provide this for its educational and inspirational value.

Rover was the precursor to Clippy I think. And Clippy yielded to Cowpilot. Ooops. Sorry, I meant to type Copilot. My bad. Bob died after a year, maybe less. Bill Gates seemed okay with Bob, and he was more than okay with its leadership as I recall. The marriage lasted longer than Bob.

So what?

First, I find it remarkable that IBM would use the product name “Bob” for the firm’s AI coding assistant. That’s what happens when one relies on young people and leadership unfamiliar with the remarkable Bob from Microsoft. Some of these creatives probably don’t know how to use a mimeograph machine either.

Second, apply the name Bob to an AI service which seems, according to the Register article cited above, has some flaws or as some bad actors might say “features.” I wonder if someone on the IBM Bob marketing team knew the IBM AI product would face some headwinds and was making a sly joke. IBM leadership has a funny bone, but if the reference does not compute, the joke just sails on by.

Third, Mr. Neel Sundares, General Manager, Automation and AI, IBM, said: “The future of AI-powered coding isn’t years away—it’s already here.” That’s right, sir. Anthropic, ChatGPT, Google, and the Chinese AI models output code. Today, once can orchestrate across these services. One can build agents using one of a dozen different services. Yep, it’s already here.

Net net: First, BackRub became Google and then Alphabet. Facebook morphed into Meta which now means AI yiiii AI. Karen became Jennifer. Now IBM embraces Bob. Watson is sad, very sad.

Stephen E Arnold, January 8, 2026

The Branding Genius of Cowpilot: New Coke and Jaguar Are No Longer the Champs

January 6, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

We are beavering away on our new Telegram Notes series. I opened one of my newsfeeds and there is was. Another gem of a story from PCGamer. As you may know, PCGamer inspired this bit of art work from the and AI system. I thought I would have a “cow” when I saw. Here’s the visual gag again:

Is that cow standing in its output? Could that be a metaphor for “cowpilot” output? I don’t know. Qwen, like other smart software can hallucinate. Therefore, I see a semi-sacred bovine standing in a muddy hole. I do not see AI output. If you do, I am not sure you are prepared for the contents about which I shall comment; that is, the story from PCGamer called “In a Truly Galaxy-Brained Rebrand, Microsoft Office Is Now the Microsoft 365 Copilot App, but Copilot Is Also Still the Name of the AI Assistant.”

I thought New Coke was an MBA craziness winner. I thought the Jaguar rebrand was an even crazier MBA craziness winner. I thought the OpenAI smart software non mobile phone rebranding effort that looks like a 1950s dime store fountain pen was in the running for crazy. Nope. We have a a candidate for the rebranding that tops the leader board.

Microsoft Office is now the M3CA or Microsoft 365 Copilot App.

The PCGamer write up says:

Copilot is the app for launching the other apps, but it’s also a chatbot inside the apps.

Yeah, I have a few. But what else does PCGamer say in this write up?

An MBA study group discusses the branding strategy behind Cowpilot. Thanks, Qwen. Nice consistent version of the heifer.

Here’s a statement I circled:

Copilot is, notably, a thing that already exists! But as part of the ongoing effort to juice AI assistant usage numbers by making it impossible to not use AI, Microsoft has decided to just call its whole productivity software suite Copilot, I guess.

Yep, a “guess.” That guess wording suggests that Microsoft is simply addled. Why name a product that causes a person to guess? Not even Jaguar made people “guess” about a weird square car painted some jazzy semi hip color. Even the Atlanta semi-behemoth slapped “new” Coke on something that did not have that old Coke vibe. Oh, both of these efforts were notable. I even remember when the brain trust at NBC dumped the peacock for a couple of geometric shapes. But forcing people to guess? That’s special.

Here’s another statement that caught my dinobaby brain:

Should Microsoft just go ahead and rebrand Windows, the only piece of its arsenal more famous than Office, as Copilot, too? I do actually think we’re not far off from that happening. Facebook rebranded itself “Meta” when it thought the metaverse would be the next big thing, so it seems just as plausible that Microsoft could name the next version of Windows something like “Windows with Copilot” or just “Windows AI.” I expect a lot of confusion around whatever Office is called now, and plenty more people laughing at how predictably silly this all is.

I don’t agree with this statement. I don’t think “silly” captures what Microsoft is attempting to do. In my experience, Microsoft is a company that bet on the AI revolution. That turned into a cost sink hole. Then AI just became available. Suddenly Microsoft has to flog its business customers to embrace not just Azure, Teams, and PowerPoint. Microsoft has to make it so users of these services have to do Copilot.

Take your medicine, Stevie. Just like my mother’s approach to giving me cough medicine. Take your medicine or I will nag you to your grave. My mother haunting me for the rest of my life was a bummer thought. Now I have the Copilot thing. Yikes, I have to take my Copilot medicines whether I want to or not. That’s not “silly.” This is desperation. This is a threat. This is a signal that MBA think has given common sense a pink slip.

Stephen E Arnold, January 6, 2026

Microsoft AI PR and PC Gamer: Really?1

January 5, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I was poking around in online gaming magazines. I was looking for some information about a “former gamer” named Logan Ryan Golema. He allegedly jumped from the lucrative world of eGames to chief technical officer of an company building AI data centers for distributed AI gaming. I did not find the interesting Mr. Golema, who has moved around with locations in the US, the Cayman Islands, and Portugal.

No Mr. Golema, but I did spot a write up with the interesting “gamer” title “Microsoft CEO Satya Nadella Says It’s Time to Stop Talking about AI Slop and Start Talking about a Theory of the Mind That Accounts for Humans Being Equipped with These New Cognitive Amplifier Tools.”

Wow, from AI slop to “new cognitive amplifier tools.” Remarkable. I have concluded that the Microsoft AI PR push for Copilot has achieved the public relations equivalent of climbing the Jirishanca mountain in Peru. Well done!

Thanks, Qwen. Good enough for AI output.

And what did the PC Gamer write up report as compelling AI news requiring a 34 word headline?

I noted this passage:

Nadella listed three key points the AI industry needs to focus on going forward, the first of which is developing a “new concept” of AI that builds upon the “bicycles for the mind” theory put forth by Steve Jobs in the early days of personal computing. “What matters is not the power of any given model, but how people choose to apply it to achieve their goals,” Nadella wrote. “We need to get beyond the arguments of slop vs. sophistication and develop a new equilibrium in terms of our ‘theory of the mind’ that accounts for humans being equipped with these new cognitive amplifier tools as we relate to each other. This is the product design question we need to debate and answer.”

“Bicycles of the mind” is not the fingerprint of Copilot, a dearly loved and revered AI system. “Bicycles of the mind” is the product of small meetings of great minds. The brilliance of the metaphor exceeds the cost of the educations of the participants in these meetings. Are the attendees bicycle riders? Yes. Do they depend on AI? Yes, yes, of course. Do they believe that regular people can “get beyond” slop versus sophistication?

Probably not. But these folks get paid, and they can demonstrate interest, enthusiasm, and corporatized creativity. Energized by Teams’ meetings. Invigorated with Word plus Copilot and magnetized by wonder of hallucinating data in Excel — these people believe in AI.

PC Gamer points out:

There’s a lot of jargon and bafflegab in Nadella’s post, as you might expect from a CEO who really needs to sell this stuff to someone, but what I find more interesting is the sense that he’s hedging a bit. Microsoft has sunk tens of billions of dollars into its pursuit of an AI panacea and expressed outright bafflement that people don’t get how awesome it all is (even though, y’know, it’s pretty obvious), and the chief result of that effort is hot garbage and angry Windows users.

Yep, bafflegab. I think this is the equivalent of cow output, but I am not sure. I am sure about that 34 word headline. I would have titled the essay “Softie Spews Bafflegab.” But I am a dinobaby. What do I know? How about AI output is not slop?

Stephen E Arnold, January 5, 2026

How Do You Get Numbers for Copilot? Microsoft Has a Good Idea

December 22, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

In past couple of days, I tested some of the latest and greatest from the big tech outfits destined to control information flow. I uploaded text to Gemini, asked it a question answered in the test, and it spit out the incorrect answer. Score one for the Googlers. Then I selected an output from ChatGPT and asked it to determine who was really innovating in a very, very narrow online market space. ChatGPT did not disappoint. It just made up a non-existent person. Okay Sam AI-Man, I think you and Microsoft need to do some engineering.

Could a TV maker charge users to uninstall a high value service like Copilot? Could Microsoft make the uninstall app available for a fee via its online software store? Could both the TV maker and Microsoft just ignore the howls of the demented few who don’t love Copilot? Yeah, I go with ignore. Thanks, Venice.ai. Good enough.

And what did Microsoft do with its Copilot online service? According to Engadget, “LG quietly added an unremovable Microsoft Copilot app to TVs.” The write up reports:

Several LG smart TV owners have taken to Reddit over the past few days to complain that they suddenly have a Copilot app on the device

But Microsoft has a seductive way about its dealings. Engadget points out:

[LG TV owners] cannot uninstall it.

Let’s think about this. Most smart TVs come with highly valuable to the TV maker baloney applications. These can be uninstalled if one takes the time. I don’t watch TV very much, so I just leave the set the way it was. I routinely ignore pleas to update the software. I listen, so I don’t care if weird reminders obscure the visuals.

The Engadget article states:

LG said during the 2025 CES season that it would have a Copilot-powered AI Search in its next wave of TV models, but putting in a permanent AI fixture is sure to leave a bad taste in many customers’ mouths, particularly since Copilot hasn’t been particularly popular among people using AI assistants.

Okay, Microsoft has a vision for itself. It wants to be the AI operating system just as Google and other companies desire. Microsoft has been a bit pushy. I suppose I would come up with ideas that build “numbers” and provide fodder for the Microsoft publicity machine. If I hypothesize myself in a meeting at Microsoft (where I have been but that was years ago), I would reason this way:

- We need numbers.

- Why not pay a TV outfit to install Copilot.

- Then either pay more or provide some inducements to our TV partner to make Copilot permanent; that is, the TV owner has no choice.

The pushback for this hypothetical suggestion would be:

- How much?

- How many for sure?

- How much consumer backlash?

I further hypothesize that I would say:

- We float some trial balloon numbers and go from there.

- We focus on high end models because those people are more likely to be willing to pay for additional Microsoft services

- Who cares about consumer backlash? These are TVs and we are cloud and AI people.

Obviously my hypothetical suggestion or something similar to it took place at Microsoft. Then LG saw the light or more likely the check with some big numbers imprinted on it, and the deal was done.

The painful reality of consumer-facing services is that something like 95 percent of the consumers do not change the defaults. By making something uninstallable will not even register as a problem for most consumers.

Therefore, the logic of the LG play is rock solid. Microsoft can add the LG TVs with Copilot to its confirmed Copilot user numbers. Win.

Microsoft is not in the TV business so this is just advertising. Win

Microsoft is not a consumer product company like a TV set company. Win.

As a result, the lack of an uninstall option makes sense. If a lawyer or some other important entity complains, making Copilot something a user can remove eliminates the problem.

Love those LGs. Next up microwaves, freezers, smart lights, and possibly electric blankets. Numbers are important. Users demonstrate proof that Microsoft is on the right path.

But what about revenue from Copilot. No problem. Raise the cost of other services. Charging Outlook users per message seems like an idea worth pursuing? My hypothetical self would argue with type of toll or taxi meter approach. A per pixel charge in Paint seems plausible as well.

The reality is that I believe LG will backtrack. Does it need the grief?

Stephen E Arnold, December 22, 2025

Mistakes Are Biological. Do Not Worry. Be Happy

December 18, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I read a short summary of a longer paper written by a person named Paul Arnold. I hope this is not misinformation. I am not related to Paul. But this could be a mistake. This dinobaby makes many mistakes.

The article that caught my attention is titled “Misinformation Is an Inevitable Biological Reality Across nature, Researchers Argue.” The short item was edited by a human named Gaby Clark. The short essay was reviewed by Robert Edan. I think the idea is to make clear that nothing in the article is made up and it is not misinformation.

Okay, but…. Let’s look at couple of short statements from the write up about misinformation. (I don’t want to go “meta” but the possibility exists that the short item is stuffed full of information. What do you think?

Here’s an image capturing a youngish teacher outputting misinformation to his students. Okay, Qwen. Good enough.

Here’s snippet one:

… there is nothing new about so-called “fake news…”

Okay, does this mean that software that predicts the next word and gets it wrong is part of this old, long-standing trajectory for biological creatures. For me, the idea that algorithms cobbled together gets a pass because “there is nothing new about so-called ‘fake news’ shifts the discussion about smart software. Instead of worrying about getting about two thirds of questions right, the smart software is good enough.

A second snippet says:

Working with these [the models Paul Arnold and probably others developed] led the team to conclude that misinformation is a fundamental feature of all biological communication, not a bug, failure, or other pathology.

Introducing the notion of “pathology” adds a bit of context to misinformation. Is a human assembled smart software system, trained on content that includes misinformation and processed by algorithms that may be biased in some way is just the way the world works. I am not sure I am ready to flash the green light for some of the AI outfits to output what is demonstrably wrong, distorted, weaponized, or non-verifiable outputs.

What puzzled me is that the article points to itself and to an article by Ling Wei Kong et al, “A Brief Natural history of Misinformation” in the Journal of the Royal Society Interface.

Here’s the link to the original article. The authors of the publication are, if the information on the Web instance of the article is accurate, Ling-Wei Kong, Lucas Gallart, Abigail G. Grassick, Jay W. Love, Amlan Nayak, and Andrew M. Hein. Seven people worked on the “original” article. The three people identified in the short version worked on that item. This adds up to 10 people. Apparently the group believes that misinformation is a part of the biological being. Therefore, there is no cause to worry. In fact, there are mechanisms to deal with misinformation. Obviously a duck quack that sends a couple of hundred mallards aloft can protect the flock. A minimum of one duck needs to check out the threat only to find nothing is visible. That duck heads back to the pond. Maybe others follow? Maybe the duck ends up alone in the pond. The ducks take the viewpoint, “Better safe than sorry.”

But when a system or a mobile device outputs incorrect or weaponized information to a user, there may not be a flock around. If there is a group of people, none of them may be able to identify the incorrect or weaponized information. Thus, the biological propensity to be wrong bumps into an output which may be shaped to cause a particular effect or to alter a human’s way of thinking.

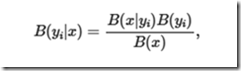

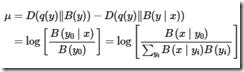

Most people will not sit down and take a close look at this evidence of scientific rigor:

and then follow the logic that leads to:

I am pretty old but it looks as if Mildred Martens, my old math teacher, would suggest the KL divergence wants me to assume some things about q(y). On the right side, I think I see some good old Bayesian stuff but I didn’t see the to take me from the KL-difference to log posterior-to-prior ratio. Would Miss Martens ask a student like me to clarify the transitions, fix up the notation, and eliminate issues between expectation vs. pointwise values? Remember, please, that I am a dinobaby and I could be outputting misinformation about misinformation.

Several observations:

- If one accepts this line of reasoning, misinformation is emergent. It is somehow part of the warp and woof of living and communicating. My take is that one should expect misinformation.

- Anything created by a biological entity will output misinformation. My take on this is that one should expect misinformation everywhere.

- I worry that researchers tackling information, smart software, and related disciplines may work very hard to prove that information is inevitable but the biological organisms can carry on.

I am not sure if I feel comfortable with the normalization of misinformation. As a dinobaby, the function of education is to anchor those completing a course of study in a collection of generally agreed upon facts. With misinformation everywhere, why bother?

Net net: One can read this research and the summary article as an explanation why smart software is just fine. Accept the hallucinations and misstatements. Errors are normal. The ducks are fine. The AI users will be fine. The models will get better. Despite this framing of misinformation is everywhere, the results say, “Knock off the criticism of smart software. You will be fine.”

I am not so sure.

Stephen E Arnold, December 18, 2025

Cloudflare: Data without Context Are Semi-Helpful for PR

December 5, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Every once in a while, Cloudflare catches my attention. One example is today (December 5, 2025). My little clumsy feedreader binged and told me it was dead. Okay, no big deal. I poked around and the Internet itself seemed to be dead. I went to the gym and upon my return, I checked and the Internet was alive. A bit of poking around revealed that the information in “Cloudflare Down: Canva to Valorant to Shopify, Complete List of Services Affected by Cloudflare Outage” was accurate. Yep, Cloudflare, PR campaigner, and gateway to some of the datasphere seemed to be having a hiccup.

So what did today’s adventure spark in my dinobaby brain? Memories. Cloudflare was down again. November, December, and maybe the New Year will deliver another outage.

Let’s shift to another facedt of Cloudflare.

When I was working on my handouts for my Telegram lecture, my team and I discovered comments that Pavel Durov was a customer. Then one of my Zoom talks failed because Cloudflare’s system failed. When Cloudflare struggled to its very capable and very fragile feet, I noted a link to “Cloudflare Has Blocked 416 Billion AI Bot Requests Since July 1.” Cloudflare appears to be on a media campaign to underscore that it, like Amazon, can take out a substantial chunk of the Internet while doing its level best to be a good service provider. Amusing idea: The Wired Magazine article coincides with Cloudflare stubbing its extremely comely and fragile toe.

Centralization for decentralized services means just one thing to me: A toll road with some profit pumping efficiencies guiding its repairs. Somebody pays for the concentration of what I call facilitating services. Even bulletproof hosting services have to use digital nodes or junction boxes like Cloudflare. Why? Many allow a person with a valid credit card to sign up for self-managed virtual servers. With these technical marvels, knowing what a customer is doing is work, hard work.

The numbers amaze the onlookers. Thanks, Venice.ai. Good enough.

The numbers amaze the onlookers. Thanks, Venice.ai. Good enough.

When in Romania, I learned that a big service provider allows a customer with a credit card use the service provider’s infrastructure and spin up and operate virtual gizmos. I heard from a person (anonymous person, of course), “We know some general things, but we don’t know what’s really going on in those virtual containers and servers.” The approach implemented by some service providers suggested that modern service providers build opacity into their architecture. That’s no big deal for me, but some folks do want to know a bit more than “Dude, we don’t know. We don’t want to know.”

That’s what interested me in the cited article. I don’t know about blocking bots. Is bot recognition 100 percent accurate? I have case examples of bots fooling business professionals into downloading malware. After 18 months of work on my Telegram project, my team and I can say with confidence, “In the Telegram systems, we don’t know how many bots are running each day. Furthermore, we don’t know how many Telegram have been coded in the last decade. It is difficult to know if an eGame is a game or a smart bot enhanced experience designed to hook kids on gambling and crypto.” Most people don’t know this important factoid. But Cloudflare, if the information in the Wired article is accurate, knows exactly how may AI bot request have been blocked since July 1. That’s interesting for a company that has taken down the Internet this morning. How can a firm know one thing and not know it has a systemic failure. A person on Reddit.com noted, “Call it Clownflare.”

But the paragraph Wired article from which I shall quote is particularly fascinating:

Prince cites stats that Cloudflare has not previously shared publicly about how much more of the internet Google can see compared to other companies like OpenAI and Anthropic or even Meta and Microsoft. Prince says Cloudflare found that Google currently sees 3.2 times more pages on the internet than OpenAI, 4.6 times more than Microsoft, and 4.8 times more than Anthropic or Meta does. Put simply, “they have this incredibly privileged access,” Prince says.

Several observations:

- What does “Google can see” actually mean? Is Google indexing content not available to other crawlers?

- The 4.6 figure is equally intriguing. Does it mean that Google has access to four times the number of publicly accessible online Web pages than other firms? None of the Web indexing outfits put “date last visited” or any time metadata on a result. That’s an indication that the “indexing” is a managed function designed for purposes other than a user’s need to know if the data are fresh.

- The numbers for Microsoft are equally interesting. Microsoft, based on what I learned when speaking with some Softies, was that at one time Bing’s results were matched to Google’s results. The idea was that reachable Web sites not deemed important were not on the Bing must crawl list. Maybe Bing has changed? Microsoft is now in a relationship with Sam AI-Man and OpenAI. Does that help the Softies?

- The cited paragraph points out that Google has 3.2 more access or page index counts than OpenAI. However, spot checks in ChatGPT 5.1 on December 5, 2025, showed that OpenAI cited more current information that Gemini 3. Maybe my prompts were flawed? Maybe the Cloudflare numbers are reflecting something different from index and training or wrapper software freshness? Is there more to useful results than raw numbers?

- And what about the laggards? Anthropic and Meta are definitely behind the Google. Is this a surprise? For Meta, no. Llama is not exactly a go-to solution. Even Pavel Durov chose a Chinese large language model over Llama. But Anthropic? Wow, dead last. Given Anthropic’s relationship with its Web indexing partners, I was surprised. I ask, “What are those partners sharing with Anthropic besides money?”

Net net: These Cloudflare data statements strike me as information floating in dataspace context free. It’s too bad Wired Magazine did not ask more questions about the Prince data assertions. But it is 2025, and content marketing, allegedly and unverifiable facts, and a rah rah message are more important than providing context and answering more pointed questions. But I am a dinobaby. What do I know?

Stephen E Arnold, December 5, 2025

An SEO Marketing Expert Is an Expert on Search: AI Is Good for You. Adapt

December 2, 2025

![green-dino_thumb_thumb[1]_thumb green-dino_thumb_thumb[1]_thumb](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/11/green-dino_thumb_thumb1_thumb_thumb.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I found it interesting to learn that a marketer is an expert on search and retrieval. Why? The expert has accrued 20 years of experience in search engine optimization aka SEO. I wondered, “Was this 20 years of diverse involvement in search and retrieval, or one year of SEO wizardry repeated 20 times?” I don’t know.

I spotted information about this person’s view of search in a newsletter from a group whose name I do not know how to pronounce. (I don’t know much.) The entity does business as BrXnd.ai. After some thought (maybe two seconds) I concluded that the name represented the concept “branding” with a dollop of hipness or ai.

Am I correct? I don’t know. Hey, that’s three admissions of intellectual failure a 10 seconds. Full disclosure: I know does not care.

Agentic SEO will put every company on the map. Relevance will become product sales. The methodology will be automated. The marketing humanoids will get fat bonuses. The quality of information available will soar upwards. Well, probably downwards. But no worries. Thanks, Venice.ai. Good enough.

The article is titled “The Future of Search and the Death of Links // BRXND Dispatch vol 96.” It points to a video called “The Future of Search and the Death of Links.” You can view the 22 minute talk at this link. Have at it, please.

Let me quote from the BrXnd.ai write up:

…we’re moving from an era of links to an era of recommendations. AI overviews now appear on 30-40% of search results, and when they do, clicks drop 20-40%. Google’s AI Mode sends six times fewer clicks than traditional search.

I think I have heard that Google handles 75 to 85 percent of global searches. If these data are on the money or even close to the eyeballs Google’s advertising money machine flogs, the estimable company will definitely be [a] pushing for subscriptions to anything and everything it once subsidized with oodles of advertisers’ cash; [b] sticking price tags on services positioned as free; [c] charging YouTube TV viewers the way disliked cable TV companies squeezed subscribers for money; [d] praying to the gods of AI that the next big thing becomes a Google sandbox; and [e] embracing its belief that it can control governments and neuter regulators with more than 0.01 milliliters of testosterone.

The write up states:

When search worked through links, you actively chose what to click—it was manual research, even if imperfect. Recommendations flip that relationship. AI decides what you should see based on what it thinks it knows about you. That creates interesting pressure on brands: they can’t just game algorithms with SEO tricks anymore. They need genuine value propositions because AI won’t recommend bad products. But it also raises questions about what happens to our relationship with information when we move from active searching to passive receiving.

Okay, let’s work through a couple of the ideas in this quoted passage.

First, clicking on links is indeed semi-difficult and manual job. (Wow. Take a break from entering 2.3 words and looking for a likely source on the first page of search results. Demanding work indeed.) However, what if those links are biased by inept programmers, the biases of the data set processed by the search machine, or intentionally manipulated to weaponize content to achieve a goal?

Second, the hook for the argument is that brands can no longer can game algorithms. Bid farewell to keyword stuffing. There is a new game in town: Putting a content object in as many places as possible in multiple formats, including the knowledge nugget delivered by TikTok-type services. Most people it seems don’t think about this and rely on consultants to help them.

Finally, the notion of moving from clicking and reading to letting a BAIT (big AI tech) company define one’s knowledge universe strikes me as something that SEO experts don’t find problematic. Good for them. Like me, the SEO mavens think the business opportunities for consulting, odd ball metrics, and ineffectual work will be rewarding.

I appreciate BrXnd.ai for giving me this glimpse of a the search and retrieval utopia I will now have available. Am I excited? Yeah, sure. However, I will not be dipping into the archive of the 95 previous issues of BrXnd “dispatches.” I know this to be a fact.

Stephen E Arnold, December 2, 2025