Google and Third-Party Cookies: The Writing Is on the Financial Projection Worksheet

July 25, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I have been amused by some of the write ups about Google’s third-party cookie matter. Google is the king of the jungle when it comes to saying one thing and doing another. Let’s put some wood behind social media. Let’s make that Dodgeball thing take off. Let’s make that AI-enhanced search deliver more user joy. Now we are in third-party cookie revisionism. Even Famous Amos has gone back to its “original” recipe after the new and improved Famous Amos chips tanked big time. Google does not want to wait to watch ad and data sale-related revenue fall. The Google is changing its formulation before the numbers arrive.

“Google No Longer Plans to Eliminate Third-Party Cookies in Chrome” explains:

Google announced its cookie updates in a blog post shared today, where the company said that it instead plans to focus on user choice.

What percentage of Google users alter default choices? Don’t bother to guess. The number is very, very few. The one-click away baloney is a fabrication, an obfuscation. I have technical support which makes our systems as secure as possible given the resources an 80-year-old dinobaby has. But check out those in the rest home / warehouse for the soon to die? I would wager one US dollar that absolutely zero individuals will opt out of third-party cookies. Most of those in Happy Trail Ending Elder Care Facility cannot eat cookies. Opting out? Give me a break.

The MacRumors’ write up continues:

Back in 2020, Google claimed that it would phase out support for third-party cookies in Chrome by 2022, a timeline that was pushed back multiple times due to complaints from advertisers and regulatory issues. Google has been working on a Privacy Sandbox to find ways to improve privacy while still delivering info to advertisers, but third-party cookies will now be sticking around so as not to impact publishers and advertisers.

The Apple-centric online publication notes that UK regulators will check out Google’s posture. Believe me, Googzilla sits up straight when advertising revenue is projected to tank. Losing click data which can be relicensed, repurposed, and re-whatever is not something the competitive beastie enjoys.

MacRumors is not anti-Google. Hey, Google pays Apple big bucks to be “there” despite Safari. Here’s the online publication’s moment of hope:

Google does not plan to stop working on its Privacy Sandbox APIs, and the company says they will improve over time so that developers will have a privacy preserving alternative to cookies. Additional privacy controls, such as IP Protection, will be added to Chrome’s Incognito mode.

Correct. Google does not plan. Google outputs based on current situational awareness. That’s why Google 2020 has zero impact on Google 2024.

Three observations which will pain some folks:

- Google AI search and other services are under a microscope. I find the decision one which may increase scrutiny, not decrease regulators’ interest in the Google. Google made a decision which generates revenue but may increase legal expenses.

- No matter how much money swizzles at each quarter’s end, Google’s business model may be more brittle than the revenue and profit figures suggest. Google is pumping billions into self driving cars, and doing an about face on third party cookies? The new Google puzzles me because search seems to be in the background.

- Google’s management is delivering revenues and profit, so the wizardly leaders are not going anywhere like some of Google’s AI initiatives.

Net net: After 25 years, the Google still baffles me. Time to head for Philz Coffee.

Stephen E Arnold, July 25, 2024

The Simple Fix: Door Dash Some Diversity in AI

July 25, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read “There’s a Simple Answer to the AI Bias Conundrum: More Diversity.” I have read some amazing online write ups in the last few days, but this essay really stopped me in my tracks. Let’s begin with an anecdote from 1973. A half century ago I worked at a nuclear consulting firm which became part of Halliburton Industries. You know Halliburton. Dick Cheney. Ringing a bell?

One of my first tasks for the senior vice president who hired me was to assist the firm in identifying minorities in American universities about to graduate with a PhD in nuclear engineering. I am a Type A, and I set about making telephone calls, doing site visits, and working with our special librarian Dominique Doré, who had had a similar job when she worked in France for a nuclear outfit in that country. I chugged along and identified two possibles. Each was at the US Naval Academy at different stages of their academic career. The individuals would not be available for a commercial job until each had completed military service. So I failed, right?

Not even the clever wolf can put on a simple costume and become something he is not. Is this a trope for a diversity issue? Thanks, OpenAI. Good enough because I got tired of being told, “Inappropriate prompt.”

Nope. The project was designed to train me to identify high-value individuals in PhD programs. I learned three things:

- Nuclear engineers with PhDs in the early 1970s comprised a small percentage of those with the pre-requisites to become nuclear engineers. (I won’t go into details, but you can think in terms of mathematics, physics, and something like biology because radiation can ruin one’s life in a jiffy.)

- The US Navy, the University of California-Berkeley, and a handful of other universities with PhD programs in nuclear engineering were scouting promising high school students in order to convince them to enter the universities’ or the US government’s programs.

- The demand for nuclear engineers (forget female, minority, or non-US citizen engineers) was high. The competition was intense. My now-deceased friend Dr. Jim Terwilliger from Virginia Tech told me that he received job offers every week, including one from an outfit in the then Soviet Union. The demand was worldwide, yet the pool of qualified individuals graduating with a PhD seemed to be limited to six to 10 in the US, 20 in France, and a dozen in what was then called “the Far East.”

Everyone wanted the PhDs in nuclear engineering. Diversity simply did not exist. The top dog at Halliburton 50 years ago told me, “We need more nuclear engineers. It is just not simple.”

Now I read “There’s a Simple Answer to the AI Bias Conundrum: More Diversity.” Okay, easy to say. Why not try to deliver? Believe me, if the US Navy, Halliburton, and a dumb pony like myself could not figure out how to equip a person with the skills and capabilities required to fool around with nuclear material, how will a group of technology wizards in Silicon Valley with oodles of cash just do what’s simple? The answer is, “It will take structural change, time, and an educational system that is similar to that which was provided a half century ago.”

The reality is that people without training, motivation, education, and incentives will not produce the content outputs at a scale needed to recalibrate those wondrous smart software knowledge spaces and probabilistic-centric smart software systems.

Here’s a passage from the write up which caught my attention:

Given the rapid race for profits and the tendrils of bias rooted in our digital libraries and lived experiences, it’s unlikely we’ll ever fully vanquish it from our AI innovation. But that can’t mean inaction or ignorance is acceptable. More diversity in STEM and more diversity of talent intimately involved in the AI process will undoubtedly mean more accurate, inclusive models — and that’s something we will all benefit from.

Okay, what’s the plan? Who is going to take the lead? What’s the timeline? Who will do the work to address the educational and psychological factors? Simple, right? Words, words, words.

Stephen E Arnold, July 25, 2024

Why Is Anyone Surprised That AI Is Biased?

July 25, 2024

Let’s go over this one last time, all right? Algorithms are biased against specific groups.

Why are they biased? They’re biased because the testing data sets contain limited information about diversity.

What types of diversity? There’s a range but it usually involves racism, sexism, and socioeconomic status.

How does this happen? It usually happens, not because the designers are racist or whatever, but from blind ignorance. They don’t see outside their technology boxes so their focus is limited.

But they can be racist, sexist, etc? Yes, they’re human and have their personal prejudices. Those can be consciously or inadvertently programmed into a data set.

How can this be fixed? Get larger, cleaner data sets that are more reflective of actual populations.

Did you miss any minority groups? Unfortunately yes and it happens to be an oldie but a goodie: disabled folks. Stephen Downes writes that, “ChatGPT Shows Hiring Bias Against People With Disabilities.” Downes commented on an article from Futurity that describes a University of Washington doctoral student’s study of how ChatGPT ranks resumes of abled vs. disabled people.

The test discovered that when ChatGPT was asked to rank resumes, those that included references to a disability were ranked lower. This part is questionable because it doesn’t state the prompt given to ChatGPT. When the generative text AI was told to be less “ableist,” some of the “disabled” resumes ranked higher. The article then goes into a valid yet overplayed argument about diversity and inclusion. No solutions were provided.

Downes asked questions that also beg for solutions:

“This is a problem, obviously. But in assessing issues of this type, two additional questions need to be asked: first, how does the AI performance compare with human performance? After all, it is very likely the AI is drawing on actual human discrimination when it learns how to assess applications. And second, how much easier is it to correct the AI behaviour as compared to the human behaviour? This article doesn’t really consider the comparison with humans. But it does show the AI can be corrected. How about the human counterparts?”

Solutions? Anyone?

Whitney Grace, July 25, 2024

Crowd What? Strike Who?

July 24, 2024

This essay is the work of a dumb dinobaby. No smart software required.

How are those Delta cancellations going? Yeah, summer, families, harried business executives, and lots of hand waving. I read a semi-essay about the minor update misstep which caused blue to become a color associated with failure. I love the quirky sad face and the explanations from the assorted executives, experts, and poohbahs about how so many systems could fail in such a short time on a global scale.

In “Surely Microsoft Isn’t Blaming EU for Its Problems?” I noted six reasons the CrowdStrike issue became news instead of a system administrator annoyance. In a nutshell, the reasons identified harken back to Microsoft’s decision to use an “open design.” I like the phrase because it beckons a wide range of people to dig into the plumbing. Microsoft also allegedly wants to support its customers with older computers. I am not sure older anything is supported by anyone. As a dinobaby, I have first-hand experience with this “we care about legacy stuff.” Baloney. The essay mentions “kernel-level access.” How’s that working out? Based on CrowdStrike’s remarkable ability to generate PR from exceptions which appear to have allowed the super special security software to do its thing, that access sure does deliver. (Why does the nationality of CrowdStrike’s founder not get mentioned? Dmitri Alperovitch, a Russian who became a US citizen, and a couple of other people set up the firm in 2011. Is there any possibility that the incident was a test play or part of a Russian long game?)

Satan congratulates one of his technical professionals for an update well done. Thanks, MSFT Copilot. How’re things today? Oh, that’s too bad.

The essay mentions that the world today is complex. Yeah, complexity goes with nifty technology, and everyone loves complexity when it becomes like an appliance until it doesn’t work. Then fixes are difficult because few know what went wrong. The article tosses in a reference to Microsoft’s “market size.” But centralization is what an appliance does, right? Who wants a tube radio when the radio can be software defined and embedded in another gizmo like those FM radios in some mobile devices? Who knew? And then there is a reference to “security.” We are presented with a tidy list.

The one hitch in the git along is that the issue emerges from a business culture which has zero to do with technology. The objective of a commercial enterprise is to generate profits. Companies generate profits by selling high, subtracting costs, and keeping the rest for themselves and stakeholders.

Hiring and training professionals to do jobs like quality checks, environmental impact statements, and ensuring ethical business behavior in work processes is overhead. One can hire a blue chip consulting firm and spark an opioid crisis or deprecate funding for pre-release checks and quality assurance work.

Engineering excellence takes time and money. What’s valued is maximizing the payoff. The other baloney is marketing and PR to keep regulators, competitors, and lawyers away.

The write up encapsulates the reason that change will be difficult and probably impossible for a company whether in the US or Ukraine to deliver what the customer expects. Regulators have failed to protect citizens from the behaviors of commercial enterprises. The customers assume that a big company cares about excellence.

I am not pessimistic. I have simply learned to survive in what is a quite error-prone environment. Pundits call the world fragile or brittle. Those words are okay. The more accurate term is reality. Get used to it and knock off the jargon about failure, corner cutting, and profit maximization. The reality is that Delta, blue screens, and yip yap about software chock full of issues define the world.

Fancy talk, lists, and entitled assurances won’t do the job. Reality is here. Accept it and blame.

Stephen E Arnold, July 24, 2024

Automating to Reduce Staff: Money Talks, Employees? Yeah, Well

July 24, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Are you a developer who oversees a project? Are you one of those professionals who toiled to understand the true beauty of a PERT chart invented by a Type A blue-chip consulting firm I have heard? If so, you may sport these initials on your business card: PMP, PMI-RMP, PRINCE2, etc. I would suggest that Google is taking steps to eliminate your role. How do I know the death knell tolls for thee? Easy. I read “Google Brings AI Agent Platform Project Oscar Open Source.” The write up doesn’t come out and say, “Dev managers or project managers, find your future elsewhere,” but the intent bubbles beneath the surface of the Google speak.

A 35-year-old executive gets the good news. As a project manager, he can now seek another information-mediating job at an independent plumbing company, a local dry cleaner, or the outfit that repurposes basketball courts to pickleball courts. So many futures to find. Thanks, MSFT Copilot. That’s a pretty good Grim Reaper. The former PMP looks snappy too. Well, good enough.

The “Google Brings AI Agent Platform Project Oscar Open Source” real “news” story says:

Google has announced Project Oscar, a way for open-source development teams to use and build agents to manage software programs.

Say hi to Project Oscar. The smart software is new, so expect it to morph, be killed, resurrected, and live a long fruitful life.

The write up continues:

“I truly believe that AI has the potential to transform the entire software development lifecycle in many positive ways,” Karthik Padmanabhan, lead Developer Relations at Google India, said in a blog post. “[We’re] sharing a sneak peek into AI agents we’re working on as part of our quest to make AI even more helpful and accessible to all developers.” Through Project Oscar, developers can create AI agents that function throughout the software development lifecycle. These agents can range from a developer agent to a planning agent, runtime agent, or support agent. The agents can interact through natural language, so users can give instructions to them without needing to redo any code.

Helpful? Seems like it. Will smart software reduce costs and allow for more “efficiency methods” to be implemented? Yep.

The article includes a statement from a Googler; to wit:

“We wondered if AI agents could help, not by writing code which we truly enjoy, but by reducing disruptions and toil,” Balahan said in a video released by Google. Go uses an AI agent developed through Project Oscar that takes issue reports and “enriches issue reports by reviewing this data or invoking development tools to surface the information that matters most.” The agent also interacts with whoever reports an issue to clarify anything, even if human maintainers are not online.

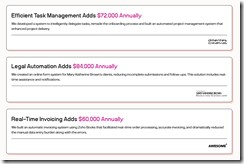

Where is Google headed with this “manage” software programs? A partial answer may be deduced from this write up from Linklemon. Its commercial “We Automate Workflows for Small to Medium (sic) Businesses.” The image below explains the business case clearly:

Those purple numbers are generated by chopping staff and making an existing system cheaper to operate. Translation: Find your future elsewhere, please.”

My hunch is that if the automation in Google India is “good enough,” the service will be tested in the US. Once that happens, Microsoft and other enterprise vendors will jump on the me-too express.

What’s that mean? Oh, heck, I don’t want to rerun that tired old “find your future elsewhere” line, but I will: Many professionals who intermediate information will hear, “Great news, you now have the opportunity to find your future elsewhere.” Lucky folks, right, Google?

Stephen E Arnold, July 24, 2024

The Logic of Good Enough: GitHub

July 22, 2024

What happens when a big company takes over a good thing? Here is one possible answer. Microsoft acquired GitHub in 2018. Now, “‘GitHub’ Is Starting to Feel Like Legacy Software,” according to Misty De Méo at The Future Is Now blog. And by “legacy,” she means outdated and malfunctioning. Paint us unsurprised.

De Méo describes being unable to use a GitHub feature she had relied on for years: the blame tool. She shares her process of tracking down what went wrong. Development geeks can see the write-up for details. The point is, in De Méo’s expert opinion, those now in charge made a glaring mistake. She observes:

“The corporate branding, the new ‘AI-powered developer platform’ slogan, makes it clear that what I think of as ‘GitHub’—the traditional website, what are to me the core features—simply isn’t Microsoft’s priority at this point in time. I know many talented people at GitHub who care, but the company’s priorities just don’t seem to value what I value about the service. This isn’t an anti-AI statement so much as a recognition that the tool I still need to use every day is past its prime. Copilot isn’t navigating the website for me, replacing my need to use the website as it exists today. I’ve had tools hit this phase of decline and turn it around, but I’m not optimistic. It’s still plenty usable now, and probably will be for some years to come, but I’ll want to know what other options I have now rather than when things get worse than this.”

The post concludes with a plea for fellow developers to send De Méo any suggestions for GitHub alternatives and, in particular, a good local blame tool. Let us just hope any viable alternatives do not also get snapped up by big tech firms anytime soon.

Cynthia Murrell, July 23, 2024

AI: Helps an Individual, Harms Committee Thinking Which Is Often Sketchy at Best

July 16, 2024

![dinosaur30a](https://arnoldit.com/wordpress/wp-content/uploads/2024/07/dinosaur30a_thumb_thumb_thumb_thumb_1_thumb_thumb_thumb_thumb.gif) This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I spotted an academic journal article type write up called “Generative AI Enhances Individual Creativity But Reduces the Collective Diversity of Novel Content.” I would give the paper a C, an average grade. The most interesting point in the write up is that when one person uses smart software like a ChatGPT-type service, the output can make that person seem to a third party smarter, more creative, and more insightful than a person slumped over a wine bottle outside of a drug dealer’s digs.

The main point, which I found interesting, is that a group using ChatGPT drops down into my IQ range, which is “Dumb Turtle.” I think this is potentially significant. I use the word “potential” because the study relied upon human “evaluators” and imprecise subjective criteria; for instance, novelty and emotional characteristics. This means that if teachers or other people who have to critique writing are making the judgments, these folks have baked-in biases and preconceptions. I know first hand because one of my pieces of writing was published in the St. Louis Post Dispatch at the same time my high school English teacher clapped a C for narrative value and D for language choice. She was not a fan of my phrase “burger boat drive in.” Anyway I got paid $18 for the write up.

Let’s pick up this “finding” that a group degenerates or converges on mediocrity. (Remember, please, that a camel is a horse designed by a committee.) Here’s how the researchers express this idea:

While these results point to an increase in individual creativity, there is risk of losing collective novelty. In general equilibrium, an interesting question is whether the stories enhanced and inspired by AI will be able to create sufficient variation in the outputs they lead to. Specifically, if the publishing (and self-publishing) industry were to embrace more generative AI-inspired stories, our findings suggest that the produced stories would become less unique in aggregate and more similar to each other. This downward spiral shows parallels to an emerging social dilemma (42): If individual writers find out that their generative AI-inspired writing is evaluated as more creative, they have an incentive to use generative AI more in the future, but by doing so, the collective novelty of stories may be reduced further. In short, our results suggest that despite the enhancement effect that generative AI had on individual creativity, there may be a cautionary note if generative AI were adopted more widely for creative tasks.

I am familiar with the stellar outputs of committees. Some groups deliver zero and often retrograde outputs; that is, the committee makes a situation worse. I am thinking of the home owners’ association about a mile from my office. One aggrieved home owner attended a board meeting and shot one of the elected officials. Exciting plus the scene of the murder was a church conference room. Driveways can be hot topics when the group decides to change rules which affected this fellow’s own driveway.

Sometimes committees come up with good ideas; for example, a committee at one government agency where I was serving as the IV&V (independent verification and validation) professional decided to disband because there was a tiny bit of hanky panky in the procurement process. That was a good idea.

Other committee outputs are worthless; for example, the transcripts of the questions from elected officials directed to high-technology executives. I won’t name any committees of this type because I worked for a congress person, and I observe the unofficial rule: Button up, butter cup.

Let me offer several observations about smart software producing outputs that point to dumb turtle mode:

- Services firms (lawyers and blue chip consultants) will produce less useful information relying on smart software than on what crazed Type A achievers produce. Yes, I know that one major blue chip consulting firm helped engineer the excitement one can see in certain towns in West Virginia, but imagine even more negative downstream effects. Wow!

- Dumb committees relying on AI will be among the first to suggest, “Let AI set the agenda.” And, “Let AI provide the list of options.” Great idea and one that might be more exciting than an aircraft door exiting the airplane frame at 15,000 feet.

- The bean counters in the organization will look at the efficiency of using AI for committee work and probably suggest, “Let’s eliminate the staff who spend more than 85 percent of their time in committee meetings.” That will save money and produce some interesting downstream consequences. (I once had a job which was to attend committee meetings.)

Net net: AI will help some; AI will produce surprises which cannot be easily anticipated, it seems.

Stephen E Arnold, July 16, 2024

AI: Hurtful and Unfair. Obviously, Yes

July 5, 2024

It will be years before AI is “smart” enough to entirely replace humans, but it’s in the immediate future. The problem with current AI systems is that they’re stupid. They don’t know how to do anything unless they’re trained on huge datasets. These datasets contain the hard, copyrighted, trademarked, proprietary, etc. work of individuals. These people don’t want their work used to train AI without their permission, much less replace them. Futurism shares that even AI engineers are worried about their creations, “Video Shows OpenAI Admitting It’s ‘Deeply Unfair’ To ‘Build AI And Take Everyone’s Job Away.’”

The interview containing an AI software engineer’s admission of guilt originally appeared in The Atlantic, but the engineers’ morality is quickly covered by their apathy. Brian Wu is the engineer in question. He feels bad about making jobs obsolete, but he makes an observation that comes with progress and new technology: things change, and that is inevitable:

“It won’t be all bad news, he suggests, because people will get to ‘think about what to do in a world where labor is obsolete.’

But as he goes on, Wu sounds more and more unconvinced by his own words, as if he’s already surrendered himself to the inevitability of this dystopian AI future.

‘I don’t know,’ he said. ‘Raise awareness, get governments to care, get other people to care.’ A long pause. ‘Yeah. Or join us and have one of the few remaining jobs. I don’t know. It’s rough.’”

Wu’s colleague Daniel Kokotajlo believes humans will invent an all-knowing artificial general intelligence (AGI). The AGI will create wealth and it won’t be distributed evenly, but all humans will be rich. Kokotajlo then delves into the typical science-fiction story about a super AI becoming evil and turning against humanity. The AI engineers, however, aren’t concerned with the moral ambiguity of AI. They want to invent, continue building wealth, and are hellbent on doing it no matter the consequences. It’s pure motivation but also narcissism and entitlement.

Whitney Grace, July 5, 2024

Google YouTube: The Enhanced Turtle Walk?

July 4, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I like to figure out how a leadership team addresses issues lower on the priority list. Some outfits talk a good game when a problem arises. I typically think of this as a Microsoft-type response. Security is job one. Then there’s Recall and the weird de-release of a Windows 11 update. But stuff is happening.

A leadership team decides to lead by moving even more slowly, possibly not at all. Turtles know how to win by putting one claw in front of another…. just slowly. Thanks, MSFT Copilot.

Then there are outfits who just ignore everything. I think of this as the Boeing-type of approach to difficult situations. Doors fall off, astronauts are stranded, and the FAA does its “government is run like a business” thing. But can a cash-strapped airline ground jets from a single manufacturer when all of the company’s jets come from that one manufacturer? The jets keep flying, the astronauts are really not stranded yet, and the government runs like a business.

Google does not fit into either category. I read “Two Years after an Open Letter to YouTube, Fact-Checkers Remain Dissatisfied with the Platform’s Inaction.” The write up describes what Google YouTube should do to do a better job at fact checking the videos it hoses to people and kids worldwide:

Two years ago, fact-checkers from all over the world signed an open letter to YouTube with four solutions for reducing disinformation and misinformation on the platform. As they convened this year at GlobalFact 11, the world’s largest annual fact-checking summit, fact-checkers agreed there has been no meaningful change.

This suggests that Google is less dynamic than a government agency and definitely not doing the yip yap thing associated with Microsoft-type outfits. I find this interesting.

The [YouTube] channel continued to publish livestreams with falsehoods and racked up hundreds of thousands of views, Kamath [the founder of Newschecker] said.

Google YouTube is a global resource. The write up says:

When YouTube does present solutions, it focuses on English and doesn’t give a timeline for applying it to other languages, [Lupa CEO Natália] Leal said.

The turtle play perhaps?

The big assertion in the article in my opinion is:

[The] system is ‘loaded against fact-checkers’

Okay, let’s summarize. At one end of the leadership spectrum we have the talkers and go slow or do nothing. At the other end of the spectrum we have the leaders who don’t talk and allegedly retaliate when someone does talk with the events taking place under the watchful eye of US government regulators.

The Google YouTube method involves several leadership practices:

- Pretend avoidance. Google did not attend the fact checking conference. This is the ostrich principle I think.

- Go really slow. Two years with minimal action to remove inaccurate videos.

- Don’t talk.

My hypothesis is that Google can’t be bothered. It has other issues demanding its leadership time.

Net net: Are inaccurate videos on the Google YouTube service? Will this issue be remediated? Nope. Why? Money. Misinformation is an infinite problem which requires infinite money to solve. Ergo. Just make money. That’s the leadership principle it seems.

Stephen E Arnold, July 4, 2024

The Check Is in the Mail and I Will Love You in the Morning. I Promise.

July 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Have you heard these phrases in a business context?

- “I’ll get back to you on that”

- “We should catch up sometime”

- “I’ll see what I can do”

- “I’m swamped right now”

- “Let me check my schedule and get back to you”

- “Sounds great, I’ll keep that in mind”

Thanks, MSFT Copilot. Good enough despite the mobile presented as a corded landline connected to a bank note. I understand and I will love you in the morning. No, really.

I read “It’s Safe to Update Your Windows 11 PC Again, Microsoft Reassures Millions after Dropping Software over Bug.” [If the linked article disappears, I would not be surprised.] The write up says:

Due to the severity of the glitch, Microsoft decided to ditch the roll-out of KB5039302 entirely last week. Since then, the Redmond-based company has spent time investigating the cause of the bug and determined that it only impacts those who use virtual machine tools, like CloudPC, DevBox, and Azure Virtual Desktop. Some reports suggest it affects VMware, but this hasn’t been confirmed by Microsoft.

Now the glitch has been remediated. Yes, “I’ll get back to you on that.” Okay, I am back:

…on the first sign that your Windows PC has started — usually a manufacturer’s logo on a blank screen — hold down the power button for 10 seconds to turn-off the device, press and hold the power button to turn on your PC again, and then when Windows restarts for a second time hold down the power button for 10 seconds to turn off your device again. Power-cycling twice back-to-back should mean that you’re launched into Automatic Repair mode on the third reboot. Then select Advanced options to enter winRE. Microsoft has in-depth instructions on how to best handle this damaging bug on its forum.

No problem, grandma.

I read this reassurance and the simple steps needed to get the old Windows 11 gizmo working again. Then I noted this article in my newsfeed this morning (July 1, 2024): “Microsoft Notifies More Customers Their Emails Were Accessed by Russian Hackers.” This write up reports as actual factual this Microsoft announcement:

Microsoft has told more customers that their emails were compromised during a late 2023 cyberattack carried out by the Russian hacking group Midnight Blizzard.

Yep, Russians… again. The write up explains:

The attack began in late November 2023. Despite the lengthy period the attackers were present in the system, Microsoft initially insisted that only a “very small percentage” of corporate accounts were compromised. However, the attackers managed to steal emails and attached documents during the incident.

I can hear in the back of my mind this statement: “I’ll see what I can do.” Okay, thanks.

This somewhat interesting revelation about an event chugging along unfixed since late 2023 has annoyed some other people, not your favorite dinobaby. The article concluded with this passage:

In April [2024], a highly critical report [pdf] by the US Cyber Safety Review Board slammed the company’s response to a separate 2023 incident where Chinese hackers accessed emails of high-profile US government officials. The report criticized Microsoft’s “cascade of security failures” and a culture that downplayed security investments in favor of new products. “Microsoft had not sufficiently prioritized rearchitecting its legacy infrastructure to address the current threat landscape,” the report said. The urgency of the situation prompted US federal agencies to take action in April [2024]. An emergency directive was issued by the US Cybersecurity and Infrastructure Security Agency (CISA), mandating government agencies to analyze emails, reset compromised credentials, and tighten security measures for Microsoft cloud accounts, fearing potential access to sensitive communications by Midnight Blizzard hackers. CISA even said the Microsoft hack posed a “grave and unacceptable risk” to government agencies.

“Sounds great, I’ll keep that in mind.”

Stephen E Arnold, July 1, 2024