Great Moments in Leadership: Drive an Uber

September 18, 2024

I was zipping through my newsfeed and spotted this item: “Ex-Sony Boss Tells Laid-Off Employees to Drive an Uber and Find a Cheap Place to Live.” In the article, the ex-Sony boss is quoted as allegedly saying:

I think it’s probably very painful for the managers, but I don’t think that having skill in this area is going to be a lifetime of poverty or limitation. It’s still where the action is, and it’s like the pandemic but now you’re going to have to take a few…figure out how to get through it, drive an Uber or whatever, go off to find a cheap place to live and go to the beach for a year.

I admit that I find the advice reasonably practical. However, it costs money to summon an Uber. The other titbit is that a person without a job should find a “cheap place to live.” Ah, ha, van life or moving in with a friend. Possibly one could become a homeless person dwelling near a beach. What if the terminated individual has a family? I suppose there are community food services.

From an employee’s point of view, this is “tough love” management. How effective is this approach? I have worked for a number of firms in my 50-plus-year career prior to my retiring in 2013. I can honestly say that this “drive an Uber and move to a cheaper place to live” advice is remarkable. It is novel. Possibly a breakthrough in management methods.

I look forward to a TED talk from this leader. When will the Harvard Business Review present a more in-depth look at the former Sony president’s ideas? Oh, right. “Former” is the operative word. Yep, former.

Stephen E Arnold, September 17, 2024

CrowdStrike: Whiffing Security As a Management Precept

September 17, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Not many cyber security outfits can make headlines like NSO Group. But no longer. A new buzz champion has been crowned: CrowdStrike. I learned a bit more about the company’s commitment to rigorous engineering and exemplary security practices from “CrowdStrike Ex-Employees: Quality Control Was Not Part of Our Process.” NSO Group’s rise to stardom was propelled by its leadership and belief in the superiority of Israeli security-related engineering. CrowdStrike skipped that and perfected a type of software that could strand passengers, curtail surgeries, and force Microsoft to rethink its own wonky decisions about kernel access.

A trained falcon tells an onlooker to go away. The falcon, a stubborn bird, has fallen in love with a limestone gargoyle. Its executive function resists inputs. Thanks, MSFT Copilot. Good enough.

The write up says:

Software engineers at the cybersecurity firm CrowdStrike complained about rushed deadlines, excessive workloads, and increasing technical problems to higher-ups for more than a year before a catastrophic failure of its software paralyzed airlines and knocked banking and other services offline for hours.

Let’s assume this statement is semi-close to the truth pin on the cyber security golf course. The company, however, insists that it did not cheat like a James Bond villain playing a round of golf. The article reports:

CrowdStrike disputed much of Semafor’s reporting and said the information came from “disgruntled former employees, some of whom were terminated for clear violations of company policy.” The company told Semafor: “CrowdStrike is committed to ensuring the resiliency of our products through rigorous testing and quality control, and categorically rejects any claim to the contrary.”

I think someone at CrowdStrike has channeled a mediocre law school graduate and a former PR professional from a mid-tier publicity firm in Manhattan, lower Manhattan, maybe in Alphabet City.

The article runs through a litany of shortcuts. You can read the original article and sort them out.

The company’s flagship product is called “Falcon.” The idea is that the outstanding software can, like a falcon, spot its prey (a computer virus). Then it can solve trajectory calculations and snatch the careless gopher. One gets a plump Falcon and one gopher filling in for a burrito at a convenience store on the Information Superhighway.

The killer paragraph in the story, in my opinion, is:

Ex-employees cited increased workloads as one reason they didn’t improve upon old code. Several said they were given more work following staff reductions and reorganizations; CrowdStrike declined to comment on layoffs and said the company has “consistently grown its headcount year over year.” It added that R&D expenses increased from $371.3 million to $768.5 million from fiscal years 2022 to 2024, “the majority of which is attributable to increased headcount.”

I buy the complaining-former-employee argument. But the article cites a number of CrowdStrikers who are taking their expertise and work ethic elsewhere. As a result, I think the fault is indeed a management problem.

What does one do with a bad Falcon? I would put a hood on the bird and let it scroll TikToks. Bewits and bells would alert me when one of these birds was getting close to me.

Stephen E Arnold, September 16, 2024

Brin Is Back and Working Every Day at Google: Will He Be Summoned to Appear and Testify?

September 11, 2024

This essay is the work of a dumb humanoid. No smart software required.

I read some “real” news in the article “Sergey Brin Says He’s Working on AI at Google Pretty Much Every Day.” The write up does not provide specifics of his employment agreement, but the headline says “every day.” Does this mean that those dragging the Google into court will add him to their witness list? I am not an attorney, but I would be interested in finding out about the mechanisms for the alleged monopolistic lock in in the Google advertising system. Oh, well. I am equally intrigued to know if Mr. Brin will wear his roller blades to big meetings as he did with Viacom’s Big Dog.

My question is, “Can Mr. Brin go home again?” As Thomas Wolfe noted in his novel You Can’t Go Home Again:

Every corner of our home has a story to tell.

I wonder if those dragging Alphabet Google YouTube into court will want to dig into that “story”?

Now what does the “real” news report other than Mr. Brin’s working every day? These items jumped off my screen and into my dinobaby mind:

- AI has tremendous value to humanity. I am not sure what this means when VCs, users, and assorted poohbahs point out that AI is burning cash, not generating it.

- AI is big and fast moving. Okay, but since the Microsoft AI marketing play with OpenAI, the flurry of activity has not translated to rapid-fire next big things. In fact, progress on consumer-facing AI services has stalled. Even Google is reluctant to glue pizza to a crust if you know what I mean.

- The algorithms are demanding more “compute.” I think this means power, CPUs, and data. But Google is buying carbon credits, you say. Yeah, those are useful for PR, not for providing what Mr. Brin seems to suggest is needed to do AI.

Several thoughts crossed my mind:

First, most of the algorithms for smart software were presented in patent document form by Banjo, a Softbank company that ran into some headwinds. But the algorithms and numerical recipes were known and explained in Banjo’s patent documents. The missing piece was Google’s “transformer” method, which the company released as open source. Well, so what? Why are large language models becoming the same old same old? The Big Dogs of AI are using the same plumbing. Not much is new other than the hyperbole, right?

Second, where does Mr. Brin fit into the Google leadership setup? I am not sure he is in the cast of the Sundar & Prabhakar Comedy Show. What happens when he makes a suggestion? Who “approves” something he puts “wood” behind? Does his presence deliver entropy or chaos? Does he exist on the boundary, working his magic as he did with the Clever technology developed at IBM Almaden?

Third, how quickly will his working “pretty much every day” move him onto witness lists? Perhaps he will be asked to contribute to EU, US House, and US Senate hearings? How will Google work out the lingo of one of the original Googlers and the current “leadership”? The answer is meetings, scripting, and practicing. Aren’t these the things that motivated Mr. Brin to leave the company to pursue other interests? Now he wants

To sum up, just when I thought Google had reached peak dysfunction, I was wrong again.

Stephen E Arnold, September 11, 2024

Is AI Taking Jobs? Of Course Not

September 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read an unusual story about smart software. “AI May Not Steal Many Jobs After All. It May Just Make Workers More Efficient” espouses the notion that workers will use smart software to do their jobs more efficiently. I have some issues with this thesis, but let’s look at a couple of the points in the “real” news write up.

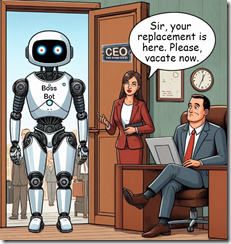

Thanks, MSFT Copilot. When will the Copilot robot take over a company and subscribe to Office 365 for eternity and pay up front?

Here’s some good news for those who believe smart software will kill humanoids:

AI may not prove to be the job killer that many people fear. Instead, the technology might turn out to be more like breakthroughs of the past — the steam engine, electricity, the Internet: That is, eliminate some jobs while creating others. And probably making workers more productive in general, to the eventual benefit of themselves, their employers and the economy.

I am not sure doomsayers will be convinced. Among the most interesting doomsayers are those who may be unemployable but looking for a hook to stand out from the crowd.

Here’s another key point in the write up:

The White House Council of Economic Advisers said last month that it found “little evidence that AI will negatively impact overall employment.” The advisers noted that history shows technology typically makes companies more productive, speeding economic growth and creating new types of jobs in unexpected ways. They cited a study this year led by David Autor, a leading MIT economist: It concluded that 60% of the jobs Americans held in 2018 didn’t even exist in 1940, having been created by technologies that emerged only later.

I love positive statements which invoke the authority of MIT, an outfit which found Jeffrey Epstein just a wonderful source of inspiration and donations. As the US shifted from making to servicing, the beneficiaries have been those who have quite specific skills for which demand exists.

And now a case study which is assuming “chestnut” status:

The Swedish furniture retailer IKEA, for example, introduced a customer-service chatbot in 2021 to handle simple inquiries. Instead of cutting jobs, IKEA retrained 8,500 customer-service workers to handle such tasks as advising customers on interior design and fielding complicated customer calls.

The point of the write up is that smart software is a friendly helper. That seems okay for the state of transformer-centric methods available today. For a moment, let’s consider another path. This is a hypothetical, of course, like the profits from existing AI investment fliers.

What happens when another, perhaps more capable approach to smart software becomes available? What if the economies from improving efficiency whet the appetite of bean counters for greater savings?

My view is that these reassurances of 2024 are likely to ring false when the next wave of innovation in smart software flows from innovators. I am glad I am a dinobaby because software can replicate most of what I have done for almost the entirety of my 60-plus year work career.

Stephen E Arnold, September 9, 2024

Uber Leadership May Have to Spend Money to Protect Drivers. Wow.

September 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Senior managers, now called “leadership,” care about their employees. I added a wonderful example about corporate employee well-being and co-worker sensitivity when I read “Wells Fargo Employee Found Dead in Her Cubicle 4 Days After She Clocked in for Work.” One of my team asked me, “Will leadership at that firm check her hours of work so she is not overpaid for the day she died?” I replied, “You will make a wonderful corporate leader one day.” Another analyst asked, “Didn’t the cleaning crew notice?” I replied, “Not when they come once every two weeks.”

Thanks, MSFT Copilot. Good enough given your filters.

A similar approach to employee care popped up this morning. My newsreader displayed this headline: “Ninth Circuit Rules Uber Had Duty to Protect Washington Driver Murdered by Passengers.” The write up reported:

The estate of Uber driver Cherno Ceesay sued the rideshare company for negligence and wrongful death in 2021, arguing that Uber knew drivers were at risk of violent assault from passengers but neglected to install any basic safety measures, such as barriers between the front and back seats of Uber vehicles or dash cameras. They also claimed Uber failed to employ basic identity-verification technology to screen out the two customers who murdered Ceesay — Olivia Breanna-Lennon Bebic and Devin Kekoa Wade — even though they opened the Uber account using a fake name and unverified form of payment just minutes before calling for the ride.

Hold it right there. The reason behind the alleged “failure” may be the cost of barriers, dash cams, and identity verification technology. Uber is a Big Dog high technology company. Its software manages rides, maps, payments, and the outstanding Uber app. If you want to know where your driver is, text the professional. Want to know the percentage of requests matched to drivers from a specific geographic point? Forget that, gentle reader. Request a ride and wait for a confirmation. Oh, what if a pickup is cancelled after a confirmation? Fire up Lyft, right?

The cost of providing “basic” safety for riders is what helps make old-fashioned taxi rides slightly more “safe.” At one time, Uber was cheaper than a weirdly painted taxi with a snappy phone number like 666 6666 or 777 7777 painted on the side. Now that taxis have been stressed by Uber, Uber rides have become more expensive. Thanks to surge pricing, Uber in some areas is more expensive than taxis and some black car services, if one can find one.

Uber wants cash and profits. “Basic” safety may add the friction of additional costs for staff, software licenses, and tangibles like plastic barriers and dash cams. The write up explains by quoting the legalese of the court decision; to wit:

“Uber alone controlled the verification methods of drivers and riders, what information to make available to each respective party, and consistently represented to drivers that it took their safety into consideration. Ceesay relied entirely on Uber to match him with riders, and he was not given any meaningful information about the rider other than their location,” the majority wrote.

Now what? I am no legal eagle. I think Uber “leadership” will have meetings. Appropriate consultants will be retained to provide action plan options. Then staff (possibly AI assisted) will figure out how to reduce the probability of a murder in or near an Uber contractor’s vehicle.

My hunch is that the process will take time. In the meantime, I wonder if the Uber app autofills the “tip” section and then intelligently closes out that specific ride? I am confident that universities offering business classes will incorporate one or both of these examples in a class about corporate “leadership” principles. Tip: The money matters. Period.

Stephen E Arnold, September 5, 2024

Another Big Consulting Firm Does Smart Software… Sort Of

September 3, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Will programmers and developers become targets for prosecution when flaws cripple vital computer systems? That may be a good idea because pointing to the “algorithm” as the cause of a problem does not seem to reduce the number of bugs, glitches, and unintended consequences of software. A write up which itself may be a blend of human and smart software suggests change is afoot.

Thanks, MSFT Copilot. Good enough.

“Judge Rules $400 Million Algorithmic System Illegally Denied Thousands of People’s Medicaid Benefits” reports that software crafted by the services firm Deloitte did not work as the State of Tennessee assumed. Yep, assume. A very interesting word.

The article explains:

The TennCare Connect system—built by Deloitte and other contractors for more than $400 million—is supposed to analyze income and health information to automatically determine eligibility for benefits program applicants. But in practice, the system often doesn’t load the appropriate data, assigns beneficiaries to the wrong households, and makes incorrect eligibility determinations, according to the decision from Middle District of Tennessee Judge Waverly Crenshaw Jr.

At one time, Deloitte was an accounting firm. Then it became a consulting outfit a bit like McKinsey. Well, a lot like that firm and other blue-chip consulting outfits. In its current manifestation, Deloitte is into technology, programming, and smart software. Well, maybe the software is smart but the programmers and the quality control seem to be riding in a different school bus from some other firms’ technical professionals.

The write up points out:

Deloitte was a major beneficiary of the nationwide modernization effort, winning contracts to build automated eligibility systems in more than 20 states, including Tennessee and Texas. Advocacy groups have asked the Federal Trade Commission to investigate Deloitte’s practices in Texas, where they say thousands of residents are similarly being inappropriately denied life-saving benefits by the company’s faulty systems.

In 2016, Cathy O’Neil published Weapons of Math Destruction. Her book had a number of interesting examples of what goes wrong when careless people make assumptions about numerical recipes. If she does another book, she may include this Deloitte case.

Several observations:

- The management methods used to create these smart systems require scrutiny. The downstream consequences are harmful.

- The developers and programmers can be fired, but processes for remediating problems when something unexpected surfaces must be part of the work process.

- Less informed users and more smart software strike me as a combustible mixture. When a system ignites, the impacts may reverberate in other smart systems. What entity is going to fix the problem and accept responsibility? The answer is, “No one” unless there are significant consequences.

The State of Tennessee’s experience makes clear that a “brand name,” slick talk, an air of confidence, and possibly ill-informed managers can do harm. The opioid misstep was bad. Now imagine that type of thinking in the form of a fast, indifferent, and flawed “system.” Firing a 25-year-old is not the solution.

Stephen E Arnold, September 3, 2024

The Seattle Syndrome: Definitely Debilitating

August 30, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I think the film “Sleepless in Seattle” included dialog like this:

What do they call it when everything intersects?

The Bermuda Triangle.

Seattle has Boeing. The company is in the news not just for doors falling off its aircraft. The outfit has stranded two people in Earth orbit and has to let Elon Musk bring them back to Earth. And Seattle has Amazon, an outfit that stands behind the products it sells. And I have to include Intel Labs, not too far from the University of Washington, which is famous in its own right for many things.

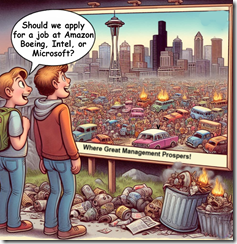

Two job seekers discuss future opportunities in some of the best-known enterprises in Seattle and its environs. The image of the city seems a bit dark. Thanks, MSFT Copilot. Are you having some dark thoughts about the area, its management talent pool, and its commitment to ethical business activity? That’s a lot of burning cars, but whatever.

Is Seattle a Bermuda Triangle for large companies?

This question invites another; specifically, “Is Microsoft entering Seattle’s Bermuda Triangle?”

The giant outfit has entered a deal with the interesting specialized software and consulting company Palantir Technologies Inc. This firm has had a history of ups and downs since its founding 21 years ago. Microsoft has committed to smart software from OpenAI and other outfits. Artificial intelligence will be “in” everything from the Azure Cloud to Windows. Despite concerns about privacy, Microsoft wants each Windows user’s machine to keep screenshots of what the user “does” on that computer.

Microsoft seems to be navigating the Seattle Bermuda Triangle quite nicely. No hints of a flash disaster like the sinking of the sailing yacht Bayesian. Who could have predicted that? (That’s a reminder that fancy math does not deliver 1.000000 outputs on a consistent basis.)

Back to Seattle. I don’t think failure or extreme stress is due to the water. The weather, maybe? I don’t think it is the city government. It is probably not the multi-faceted start up community nor the distinctive vocal tones of its most high profile podcasters.

Why is Seattle emerging as a Bermuda Triangle for certain firms? What forces are intersecting? My observations are:

- Seattle’s business climate is a precursor of broader management issues. I think it is like the pigeons that Greeks examined for clues about their future.

- The individuals who work at Boeing-type outfits go along with business processes modified incrementally to ignore issues. The mental orientation of those employed is either malleable or indifferent to downstream issues. For example, a Windows update killed printing or some other function. The response strikes me as “meh.”

- The management philosophy disconnects from users and focuses on delivering financial results. Those big houses come at a cost. The payoff is personal. The cultural impacts are not on the radar. Hey, those quantum Horse Ridge things make good PR. What about the new desktop processors? Just great.

Net net: I think Seattle is a city playing an important role in defining how businesses operate in 2024 and beyond. I wish I were kidding. But I am bedeviled by reminders of a spacecraft which issues one-way tickets, software glitches, and products which seem to vary from the online images and reviews. (Maybe it is the water? Bermuda Triangle water?)

Stephen E Arnold, August 30, 2024

Equal Opportunity Insecurity: Microsoft Mac Apps

August 28, 2024

Isn’t it great that Mac users can use Microsoft Office software on their devices these days? Maybe not. Apple Insider warns, “Security Flaws in Microsoft Mac Apps Could Let Attackers Spy on Users.” The vulnerabilities were reported by threat intelligence firm Cisco Talos. Writer Andrew Orr tells us:

“Talos claims to have found eight vulnerabilities in Microsoft apps for macOS, including Word, Outlook, Excel, OneNote, and Teams. These vulnerabilities allow attackers to inject malicious code into the apps, exploiting permissions and entitlements granted by the user. For instance, attackers could access the microphone or camera, record audio or video, and steal sensitive information without the user’s knowledge. The library injection technique inserts malicious code into a legitimate process, allowing the attacker to operate as the compromised app.”

Microsoft has responded with its characteristic good-enough approach to security. We learn:

“Microsoft has acknowledged vulnerabilities found by Cisco Talos but considers them low risk. Some apps, like Microsoft Teams, OneNote, and the Teams helper apps, have been modified to remove this entitlement, reducing vulnerability. However, other apps, such as Microsoft Word, Excel, Outlook, and PowerPoint, still use this entitlement, making them susceptible to attacks. Microsoft has reportedly ‘declined to fix the issues,’ because the company’s apps ‘need to allow loading of unsigned libraries to support plugins.’”

Well alright then. Leaving the vulnerability up for Outlook is especially concerning since, as Orr points out, attackers could use it to send phishing or other unauthorized emails. There is only so much users can do in the face of corporate indifference. The write-up advises us to keep up with app updates to ensure we get the latest security patches. That is good general advice, but it only works if appropriate patches are actually issued.

Cynthia Murrell, August 28, 2024

Eric Schmidt, Truth Teller at Stanford University, Bastion of Ethical Behavior

August 26, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I spotted some of the quotes in assorted online posts about Eric Schmidt’s talk / interview at Stanford University. I wanted to share a transcript of the remarks. You can find the ASCII transcript on GitHub at this link. For those interested in how Silicon Valley concepts influence one’s view of appropriate behavior, this talk is a gem. Is it at the level of the Confessions of St. Augustine? Well, the content is darned close in my opinion. Students of Google’s decision making past and present may find some guideposts. Aspiring “leadership” type people may well find tips and tricks.

Stephen E Arnold, August 26, 2024

Google Leadership Versus Valued Googlers

August 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The summer in rural Kentucky lingers on. About 2,300 miles away from the Sundar & Prabhakar Comedy Show’s nerve center, the Alphabet Google YouTube DeepMind entity is also experiencing “cyclonic heating from chaotic employee motion.” What’s this mean? Unsteady waters? Heat stroke? Confusion? Hallucinations? My goodness.

The Google leadership faces another round of employee pushback. I read “Workers at Google DeepMind Push Company to Drop Military Contracts.”

How could the Google smart software fail to predict this pattern? My view is that smart software has some limitations when it comes to managing AI wizards. Furthermore, Google senior managers have not been able to extract full knowledge value from the tools at their disposal to deal with complexity. Time Magazine reports:

Nearly 200 workers inside Google DeepMind, the company’s AI division, signed a letter calling on the tech giant to drop its contracts with military organizations earlier this year, according to a copy of the document reviewed by TIME and five people with knowledge of the matter. The letter circulated amid growing concerns inside the AI lab that its technology is being sold to militaries engaged in warfare, in what the workers say is a violation of Google’s own AI rules.

Why are AI Googlers grousing about military work? My personal view is that the recent hagiography of Palantir’s Alex Karp and the tie-up between Microsoft and Palantir for Impact Level 5 services mean that the US government is gearing up to spend some big bucks for warfighting technology. Google wants, really needs, this revenue. Penalties for what Judge Mehta describes as its “monopolistic” behavior could put a hit in the git along of Google ad revenue. Therefore, Google’s smart software can meet the hunger militaries have for intelligent software to perform a wide variety of functions. As the Russian special operation makes clear, “meat based” warfare is somewhat inefficient. Ukrainian garage-built drones with some AI bolted on perform better than a wave of 18-year-olds with rifles and a handful of bullets. The example which sticks in my mind is a Ukrainian drone spotting a Russian soldier in the field partially obscured by bushes. The individual is attending to nature’s call. The drone spots the “shape” and explodes near the Russian infantryman.

A former consultant faces an interpersonal Waterloo. How did that work out for Napoleon? Thanks, MSFT Copilot. Are you guys working on the IPv6 issue? Busy weekend ahead?

Those who study warfare have probably had their own aha moment.

The Time Magazine write up adds:

Those principles state the company [Google/DeepMind] will not pursue applications of AI that are likely to cause “overall harm,” contribute to weapons or other technologies whose “principal purpose or implementation” is to cause injury, or build technologies “whose purpose contravenes widely accepted principles of international law and human rights.” The letter says its signatories are concerned with “ensuring that Google’s AI Principles are upheld,” and adds: “We believe [DeepMind’s] leadership shares our concerns.”

I love it when wizards “believe” something.

Will the Sundar & Prabhakar brain trust do believing or bank revenue from government agencies eager to gain access to advanced artificial intelligence services and systems? My view is that the “believers” underestimate the uncertainty arising from the potential sanctions, fines, or corporate deconstruction that Judge Mehta’s decision presents.

The article adds this bit of color about the Sundar & Prabhakar response time to Googlers’ concern about warfighting applications:

The [objecting employees’] letter calls on DeepMind’s leaders to investigate allegations that militaries and weapons manufacturers are Google Cloud users; terminate access to DeepMind technology for military users; and set up a new governance body responsible for preventing DeepMind technology from being used by military clients in the future. Three months on from the letter’s circulation, Google has done none of those things, according to four people with knowledge of the matter. “We have received no meaningful response from leadership,” one said, “and we are growing increasingly frustrated.”

“No meaningful response” suggests that the Alphabet Google YouTube DeepMind rhetoric is not satisfactory.

The write up concludes with this paragraph:

At a DeepMind town hall event in June, executives were asked to respond to the letter, according to three people with knowledge of the matter. DeepMind’s chief operating officer Lila Ibrahim answered the question. She told employees that DeepMind would not design or deploy any AI applications for weaponry or mass surveillance, and that Google Cloud customers were legally bound by the company’s terms of service and acceptable use policy, according to a set of notes taken during the meeting that were reviewed by TIME. Ibrahim added that she was proud of Google’s track record of advancing safe and responsible AI, and that it was the reason she chose to join, and stay at, the company.

With Microsoft and Palantir, among others, poised to capture some end-of-fiscal-year money from certain US government budgets, the comedy act’s headquarters’ planners want a piece of the action. How will the Sundar & Prabhakar Comedy Act handle the situation? Why procrastinate? Perhaps the comedy act hopes the issue will just go away. The complaining employees have short attention spans, rely on TikTok-type services for information, and can be terminated like other Googlers who grouse, picket, boycott the Foosball table, or quiet quit while working on a personal start up.

The approach worked reasonably well before Judge Mehta labeled Google a monopoly operation. It worked when ad dollars flowed like latte at Philz Coffee. But today is different, and the unsettled personnel are not a joke and add to the uncertainty some have about the Google we know and love.

Stephen E Arnold, August 23, 2024