Meta 2026: Grousing Baby Dinobabies and Paddling Furiously

January 7, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I am an 81-year-old dinobaby. I get a kick out of a baby dinobaby complaining about young wizards. Imagine how that looks to me: a pretend dinobaby with many years left to rock and roll complaining about how those “young people” are behaving. What a hoot!

A dinobaby explains to a young, brilliant entrepreneur, “You are clueless.” My response: Yeah, but who has a job? Thanks, Qwen. Good enough.

Why am I thinking about age classification of dinobabies? I read “Former Meta Scientist Says Mark Zuckerberg’s New AI Chief Is ‘Young’ And ‘Inexperienced’—Warns ‘Lot Of People’ Who Haven’t Yet Left Meta ‘Will Leave’.” Now this is a weird newsy story or a story presented as a newsy release by an outfit called Benzinga. I don’t know much about Benzinga. I will assume it is a version of the estimable Wall Street Journal or the more estimable New York Times. With that nod to excellence in mind, what is this write up about?

Answer: A staff change and what I call departure grief. People may hate their job. However, when booted from that job, a problem looms. No matter how important a person’s family, no matter how many technical accolades a person has accrued, and no matter the sense of self worth — the newly RIFed, terminated, departed, or fired feels bad.

Many Xooglers fume online after losing their status as Googlers. When Mother Google kicks them out of the quiet pod, the beanbag, or the table tennis room, these people fume, and their essays amuse me. I think that’s what this Benzinga “zinger” of a write up conveys.

Let’s take a quick look, shall we?

First, the write up reports that the French-born Yann LeCun is allegedly 65 years old. I noted this passage about Alexandr [sic] Wang, the top dog in Meta’s Superintelligence Labs (MSL), or “MISSILE,” I assume. That’s quite a metaphor. Missiles are directed or autonomous. Sometimes they work and sometimes they explode at wedding parties in some countries. Whoops. Now what does the baby dinobaby Mr. LeCun say about the 28-year-old sprout Alexandr [sic] Wang, founder of Scale AI? Keep in mind that the genius Mark Zuckerberg put about $14 billion into this company in the middle of 2025.

Alexandr Wang is intelligent and learns quickly, but does not yet grasp what attracts — or alienates — top researchers.

Okay, we have a baby dinobaby complaining about the younger generation. Nothing new here except that Mr. Wang is still employed by the genius Mark Zuckerberg. Mr. LeCun is not as far as I know.

Second, the article notes:

According to LeCun, internal confidence eroded after Meta was criticized for allegedly overstating benchmark results tied to Llama 4. He said the controversy angered Zuckerberg and led him to sideline much of Meta’s existing generative AI organization.

And why? According to the zinger write up:

LLMs [are] a dead end.

But was Mr. LeCun involved in these LLMs, and was he tainted by the failure that appears to have prompted the genius Mark Zuckerberg to spend $14 billion on an indexing and content-centric company? I would assume that the answer is, “Yep, Mr. LeCun was in his role for about 13 years.” And the result of that was a “dead end.”

I would suggest that the former Facebook and Meta employee was not able to get the good ship Facebook and its support boats Instagram and WhatsApp on course despite 156 months of navigation, charting, and inputting.

Several observations:

- Real dinobabies and pretend dinobabies complain. No problem. Are the complaints valid? One must know about the mental wiring of said dinobaby. Young dinobabies may be less mature complainers.

- Geniuses with a lot of money can be problematic. Mr. LeCun may not appreciate the wisdom of this dinobaby’s statement … yet.

- The genius Mr. Zuckerberg is going to spend his way back into contention in the AI race.

Net net: Meta (Facebook) appears to have floundered with the virtual worlds thing and now is paddling furiously as the flood of AI solutions rushes past it. Can geniuses paddle harder or just buy bigger and more powerful boats? Yep, zinger.

Stephen E Arnold, January 7, 2026

Software in 2026: Whoa, Nellie! You Lame, Girl?

December 31, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

When there were Baby Bells, I got an email from a person who had worked with me on a book. That person told me that one of the Baby Bells wanted to figure out how to do their Yellow Pages as an online service. The problem, as I recall my contact’s saying, was “not to screw up the ad sales in the dead tree version of the Yellow Pages.” I won’t go into the details about the complexities of this project. However, my knowledge of how the phone system was supposed to work before and after Judge Greene’s breakup decree and some on-site experience creating software for what did business as Bell Communications Research gave me some basic information about the land of Bell Heads. (If you don’t get the reference, that’s okay. It’s an insider metaphor but less memorable than Achilles’ heel.)

A young whiz kid from a big name technology school has a real life learning experience. Programming the IBM MVS TSO set up is different from vibe coding an app from a college dorm room. Thanks, Qwen, good enough.

The point of my reference to a Baby Bell is that a newly minted, stand alone telecommunications company ran on a pretty standard line up of IBM machines and B4s (designed by Bell Labs, the precursor to Bellcore) plus some DECs, Wangs, and other machines. The big stuff ran on the IBM machines, with proprietary, AT&T specific applications on the B4s. If you are following along, you might have concluded that slapping a Yellow Pages Web application into the Baby Bell system was easy to talk about but difficult to do. We did the job using my old trick. I am a wrapper guy. Figure out what’s needed to run a Yellow Pages site online, what data are needed, where to get the data, and where to put them. Then build a nice little Web set up and pass data back and forth via what I call wrapper scripts and code. The benefits of the approach: I did not have to screw around with the software used to make a Baby Bell actually work. When the Web site went down, no meetings were needed with the Regional Manager who had many eyeballs trained on my small team. Nope, we just fixed the Web site and kept on doing Yellow Pages things. The solution worked. The print sales people could use the Web site to place orders or allow the customer to place orders. Open the valve to the IBM machines and B4s, push in data just the way these systems wanted it, and close the valve. Hooray.
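
A minimal sketch of the wrapper idea, in Python, may make the “valve” concrete. Everything in it is an assumption for illustration: the fixed-width record layout, the field widths, and the names WebOrder, to_legacy_record, and push_batch are hypothetical and do not describe the actual Baby Bell systems. The point is the shape. The Web side never touches the legacy software; it converts and checks every order first, then pushes the batch in the exact format the old system expects.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List

# Hypothetical fixed-width layout for the legacy batch input.
# Field names and widths are illustrative assumptions only.
LEGACY_LAYOUT = [
    ("listing_id", 8),
    ("heading", 20),
    ("business_name", 30),
    ("phone", 10),
]


@dataclass
class WebOrder:
    """An order captured by the (hypothetical) Web front end."""
    listing_id: str
    heading: str
    business_name: str
    phone: str


def to_legacy_record(order: WebOrder) -> str:
    """Translate one Web order into one fixed-width record.

    Values that do not fit are rejected, not truncated: a single bad
    character on the legacy side is worse than a refused order.
    """
    parts = []
    for field, width in LEGACY_LAYOUT:
        value = getattr(order, field).strip().upper()
        if len(value) > width:
            raise ValueError(f"{field} exceeds {width} characters: {value!r}")
        if field == "phone" and not value.isdigit():
            raise ValueError(f"phone must be digits only: {value!r}")
        parts.append(value.ljust(width))  # pad to the fixed width
    return "".join(parts)


def push_batch(orders: Iterable[WebOrder], write_line: Callable[[str], None]) -> int:
    """Open the valve, push records, close the valve.

    Every order is converted before anything is written, so one bad
    order stops the whole batch. write_line is a hypothetical hook
    standing in for whatever transfer the legacy side accepts.
    """
    records: List[str] = [to_legacy_record(order) for order in orders]
    for record in records:
        write_line(record)
    return len(records)
```

The design choice mirrors the post: do the checking on the Web side, reject rather than silently repair, and leave the software that keeps the phone company running alone.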

Why didn’t my team just code up the Web stuff and run it on one of those IBM MVS TSO gizmos? The answer appears, quite surprisingly, in a blog post published December 24, 2025. I never wrote about the “why” of my approach. I assumed everyone with some Young Pioneer T shirts knew the answer. Guess not. “Nobody Knows How Large Software Products Work” provides the information that I believed every 23 year old computer whiz kid knew.

The write up says:

Software is hard. Large software products are prohibitively complicated.

I know that the folks at Google now understand why I made cautious observations about the complexity of building interlocking systems without the type of management controls that existed at the pre-breakup AT&T. Google was very proud of its indexing, its 150 plus signal scores for Web sites, and yada yada. I just said, “Those new initiatives may be difficult to manage.” No one cared. I was an old person and a rental. Who really cares about a dinobaby living in rural Kentucky? Google is the new AT&T, but it lacks the old AT&T’s discipline.

Back to the write up. The cited article says:

Why can’t you just document the interactions once when you’re building each new feature? I think this could work in theory, with a lot of effort and top-down support, but in practice it’s just really hard….The core problem is that the system is rapidly changing as you try to document it.

This is an accurate statement. AT&T’s technical regional managers demanded commented code. Were the comments helpful? Sometimes. The reality is that one learns about the cute little workarounds required for software that can spit out the PIX (plant information exchange data) for a specific range of dialing codes. Google does some fancy things with ads. AT&T in the pre-Judge Greene era did some fancy things for the classified and unclassified telephone systems of every US government entity, commercial enterprises, and individual phones and devices for the US and international “partners.”

What does this mean? In simple terms, one does not dive into a B4 running the proprietary Yellow Page data management system and start trying to read and write in real time from a dial up modem in some long lost corner of a US state with a couple of mega cities, some military bases, and the national laboratory.

One uses wrappers. Period. Screw up with a single character and bad things happen. One does not try to reconstruct what the original programming team actually did to make the PIX system “work.”

The write up says something that few realize in this era of vibe coding and AI output from some wonderful system like Claude:

It’s easier to write software than to explain it.

Yep, this is actual factual. The write up states:

Large software systems are very poorly understood, even by the people most in a position to understand them. Even really basic questions about what the software does often require research to answer. And once you do have a solid answer, it may not be solid for long – each change to a codebase can introduce nuances and exceptions, so you’ve often got to go research the same question multiple times. Because of all this, the ability to accurately answer questions about large software systems is extremely valuable.

Several observations are warranted:

- One gets a “feel” for how certain large, complex systems work. Before I retired, I had numerous interactions with young wizards. Most were job hoppers or little entrepreneurs eager to poke their noses out of the cocoon of a regular job. I am not sure these people have the ability to develop a “feel” for a large complex of code like the one the old AT&T had assembled. These folks know their code, I assume. But do they know the stuff running on a machine lost in the mists of time? Probably not. I am not sure AI will be much help either.

- The people running some of the companies creating fancy new systems are even more divorced from the reality of making something work and how to keep it going. Hence, the problems with computer systems at airlines, hospitals, and — I hate to say it — government agencies. These problems will only increase, and I don’t see an easy fix. One sure can’t rely on ChatGPT, Gemini, or Grok.

- The push to make AI your coding partner is sort of okay. But the old-fashioned way involved a young person like myself working side by side with expert DEC people or IBM professionals, not AI. What one learns is not how to do something but how not to do something. Anyone, including a software robot, can look up an instruction in a manual. But, so far, only a human can get a “sense” or “hunch” or “intuition” about a box with some flashing lights running something called CB Unix. In my opinion, today’s 2025 approach to most software is a one way ticket down the sliding board to system failure. Think about that the next time you board an airplane or head to the hospital for heart surgery.

Net net: Software excitement ahead. And that’s a prediction for 2026. I have a high level of confidence in this peek at the horizon.

Stephen E Arnold, December 31, 2025

Yep, Making the AI Hype Real Will Be Expensive. Hundreds of Billions, Probably More, Says Microsoft

December 26, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I really don’t want to write another “if you think it, it will become real” post. But here goes. I read “Microsoft AI CEO Mustafa Suleyman Says It Will Cost Hundreds of Billions to Keep Up with Frontier AI in the Next Decade.”

What’s the pitch? The write up says:

Artificial general intelligence, or AGI, refers to AI systems that can match human intelligence across most tasks. Superintelligence goes a step further — systems that surpass human abilities.

So what’s the cost? Allegedly Mr. AI at Microsoft (aka Microsoft AI CEO Mustafa Suleyman) asserts:

it’s going to cost “hundreds of billions of dollars” to compete at the frontier of AI over the next five to 10 years….Not to mention the prices that we’re paying for individual researchers or members of technical staff.

Microsoft seems to have some “we must win” DNA. The company appears willing to ignore users’ requests for less of that Copilot goodness.

The vice president of AI finance seems shocked by an AI wizard’s request for additional funds… right now. Thanks, Qwen. Good enough.

Several observations:

- The assumption is that more money will produce results. When? Who knows?

- The mental orientation is that outfits like Microsoft are smart enough to convert dreams into reality. That is a certain type of confidence. A failure is a stepping stone, a learning experience. No big deal.

- The hype has triggered some non-AI consequences. Fewer entry level jobs, because AI will do that work, are likely to derail careers. Remember the baloney that online learning was better than sitting in a classroom? Real world engagement is work. Short circuiting that work is, in my opinion, a problem not easily corrected.

Let’s step back. What’s Microsoft doing? First, the company caught Google by surprise in 2022. Now Google is allegedly as good as or better than OpenAI’s technology. Microsoft, therefore, is the follower instead of the pace setter. The result is mild concern with a chance of fear tomorrow. The company’s “leadership” is not stabilizing the company, its messages, and its technology offerings. Wobble wobble. Not good.

Second, Microsoft has demonstrated its “certain blindness” to two corporate activities. The first is the amount of money Microsoft has spent and apparently will continue to spend. With inputs from the financially adept Mr. Suleyman, the bean counters don’t have a chance. Sure, Microsoft can back out of some data center deals and it can turn some knobs and dials to keep the company’s finances sparkling in the sun… for a while. How long? Who knows?

Third, even Microsoft fan boys are criticizing the idea of shifting from software that a user uses for a purpose to an intelligent operating system that uses its users. My hunch is that this bulldozing of user requests, preferences, and needs may be what some folks call a “moment.” Google’s Waymo killed a cat in the Mission District. Microsoft may be running over its customers. Is this risky? Who knows?

Fourth, can Microsoft deliver AI that is different from AI from other services, namely, the open source solutions that are available and the customer-facing apps built on Qwen, for example? AI is a utility and not without errors. Some reports suggest that smart software is wrong two thirds of the time. It doesn’t matter what the “real” percentage is. People now associate smart software with mistakes, not a rock solid tool like a digital tire pressure gauge.

Net net: Mr. Suleyman will have an opportunity to deliver. For how long? Who knows?

Stephen E Arnold, December 26, 2025

Modern Management Method with and without Smart Software

December 22, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I enjoy reading and thinking about business case studies. The good ones are few and far between. Most are predictable, almost as if the author was relying on a large language model for help.

“I’m a Tech Lead, and Nobody Listens to Me. What Should I Do?” is an example of a bright human hitting on tactics to become more effective in his job. You can work through the full text of the article and dig out the gems that may apply to you. I want to focus on two points in the write up. The first is the matrix management diagram based on or attributed to Spotify, a music outfit. The second is a method for gaining influence in a modern, let’s go fast company.

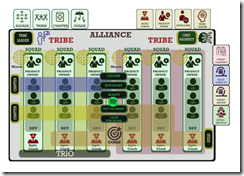

Here’s the diagram that caught my attention:

Instead of the usual business school lingo, you will notice “alliance,” “tribe,” “squad,” and “trio.” I am not sure what these jazzy words mean, but I want to ask you a question, “Looking at this matrix, who is responsible when a problem occurs?” Take your time. I did spend some time looking at this chart, and I formulated several hypotheses:

- The creator wanted to make sure that a member of leadership would have a tough time figuring out who screwed up. If you disagree, that’s okay. I am a dinobaby, and I like those old fashioned flow diagrams with arrows and boxes. In those boxes is the name of the person who has to fix a problem. I don’t know about one’s tribe. I know Problem A is here. Person B is going to fix it. Simple.

- The matrix as displayed allows a lot of people to blame other people. For example, what if the coach is like the leader of the Cleveland Browns, who brilliantly equipped a young quarterback with the incorrect game plan for the first quarter of a football game. Do we blame the coach or do we chase down a product owner? What if the problem is a result of a dependency screw up involving another squad in a different tribe? In practical terms, there is no one with direct responsibility for the problem. Again: Don’t agree? That’s okay.

- The matrix has weird “leadership” or “employment categories” distributed across the X axis at the top of the chart. What’s a chapter? What’s an alliance? What’s self organized and autonomous in a complex technical system? My view is that this is pure baloney designed to make people feel important yet shield any one person from responsibility. I bet some reading this point find my statement out of line. Tough.

The diagram makes clear that the organization will just muddle forward. No one will have responsibility when a problem occurs. No one will know how to fix the problem without dropping other work and reverse engineering what is happening. The chart almost guarantees bafflement when a problem surfaces.

The second item I noticed was this statement or “learning” from the individual who presented the case example. Here’s the passage:

When you solve a real problem and make it visible, people join in. Trust is also built that way, by inviting others to improve what you started and celebrating when they do it better than you.

This passage hooks into another one about solving a problem; to wit:

Helping people debug. I have never considered myself especially smart, but I have always been very systematic when connecting error messages, code, hypotheses, and system behavior. To my surprise, many people saw this as almost magical. It was not magic. It was a mix of experience, fundamentals, intuition, knowing where to look, and not being afraid to dive into third-party library code.

These two passages describe human interactions. Working with others can produce a collective effort greater than the sum of its parts. It is a distinctly human phenomenon. One fellow described this as interaction efflorescence: fancy words for what happens when a few people face a deadline and severe consequences for failure.

Why did I spend time pointing out an organizational structure purpose built to prevent assigning responsibility and the very human observations of the case study author?

The answer is, “What will happen when smart software is tossed into this management structure?” First, people will be fired. The matrix will have lots of empty boxes. Second, the human interaction will have to adapt to the smart software. The smart software is not going to adapt to humans. Don’t believe me? One smart software company defended itself in court by arguing, in effect, that its terms of service say suicide is not permissible. Therefore, the company is not responsible. The dead kid violated the TOS.

How functional will the company be as the very human insight about solving real problems interfaces with software? Man machine interface? Will that be an issue in a go fast outfit? Nope. The human will be excised as a consequence of efficiency.

Stephen E Arnold, December 23, 2025

Poor Meta! Allegations about Accepting Scam Advertising

December 19, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

That well managed, forward leaning, AI and goggle centric company is in the news again. This time the focus is advertising that is scammy. “Mark Zuckerberg’s Meta Allows Rampant Scam Ads from China While Raking in Billions, Explosive Report Says” states:

According to an investigation by Reuters, Meta earned more than $3 billion in China last year through scam ads for illegal gambling, pornography, and other inappropriate content. That figure represents nearly 19 percent of the company’s $18 billion in total ad revenue from China during the same period. Reuters had previously reported that 10 percent of Meta’s global revenue came from fraudulent ads.

The write up makes a pointed statement:

The investigation suggests Meta knew about the scale of the ad fraud problem on its platforms, but chose not to act because it would have affected revenue.

Guess what happens when senior managers at a large social media outfit pay little attention to what happens around them? Thanks, ChatGPT, good enough.

Let’s assume that the allegations are accurate and verifiable. The question is, “Why did Meta take in billions from scam ads?” My view is that there were several reasons:

- Revenue

- Figuring out what is and is not “spammy” is expensive. Spam may be like the judge’s comment years ago, “I know it when I see it.” Different people have different perceptions.

- Changing the ad sales incentive programs is tricky, time consuming, and expensive.

Based on my limited understanding of how managerial decisions are made at Meta, the logical decision is simple. Someone may have said, “Hey, keep doing it until someone makes us stop.”

Why would a very large company adopt this hypothetical response to spammy ads?

My hunch is that management looked the other way. Revenue is important.

Stephen E Arnold, December 19, 2025

AI and Management: Look for Lists and Save Time

December 18, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

How does a company figure out whom to terminate? [a] Ask around. [b] Consult “objective” performance reviews. [c] Examine a sales professional’s booked deals. [d] Look for a petition signed by employees unhappy with company policies. The answer is at the end of this short post.

A human resources professional has figured out which employees are at the top of the reduction in force list. Thanks, Venice.ai. How many graphic artists did you annoy today?

I read “More Than 1,000 Amazon Employees Sign Open Letter Warning the Company’s AI Will Do Staggering Damage to Democracy, Our Jobs, and the Earth.”* The write up states:

The letter was published last week with signatures from over 1,000 unnamed Amazon employees, from Whole Foods cashiers to IT support technicians. It’s a fraction of Amazon’s workforce, which amounts to about 1.53 million, according to the company’s third-quarter earnings release. In it, employees claim the company is “casting aside its climate goals to build AI,” forcing them to use the tech while working toward cutting its workforce in favor of AI investments, and helping to build “a more militarized surveillance state with fewer protections for ordinary people.”

Okay, grousing employees. Signatures. Amazon AI. Hmm. I wonder if some of that old time cross correlation will highlight these individuals and their “close” connections in the company. Who are the managers of these individuals? Are the signers and their close connections linked by other factors, for example, a manager? What if a manager has a disproportionate number of grousers? These are made up questions in a purely hypothetical scenario. But they crossed my mind.

Do you think someone in Amazon leadership might think along similar lines?

The write up says:

Amazon announced in October it would cut around 14,000 corporate jobs, about 4% of its 350,000-person corporate workforce, as part of a broader AI-driven restructuring. Total corporate cuts could reach up to 30,000 jobs, which would be the company’s single biggest reduction ever, Reuters reported a day prior to Amazon’s announcement.

My reaction was, “Just 1,000 employees signed the grousing letter?” A company with pretty good in-person customer support, where I once worked, had a truism: “One complaint means 100 people are annoyed but just too lazy to call us.” I wonder if this rule of thumb would apply to an estimable firm like Amazon. It only took me 30 minutes to get a refund for the prone to burn or explode mobile phone battery. Pretty swift, but not exactly the type of customer service the company at which I worked delivered.

The write up concludes with a quote from a person in carpetland at Amazon:

“What we need to remember is that the world is changing quickly. This generation of AI is the most transformative technology we’ve seen since the Internet, and it’s enabling companies to innovate much faster than ever before,” Beth Galetti, Amazon’s senior vice president of people and experience, wrote in the memo.

I like the royal “we” or the parental “we.” I don’t think it is the in-the-trenches we, but that is my personal opinion. I like the emphasis on faster and innovation. That move fast and break things approach is just outstanding for dealing with complex problems.

Ah, Amazon, why does my Kindle iPad app no longer work when I don’t have an Internet connection? You are definitely innovating.

And the correct answer to the multiple choice test? [d] Names on a list. Just sayin’.

———————

* This is one of those wonky Yahoo news URLs. If it doesn’t work, don’t hassle me. Speak with that well managed outfit Yahoo, not someone who is 81 and not well managed.

Stephen E Arnold, December 18, 2025

The Google Has a New Sheep Herder: An AI Boss to Nip at the Heels of the AI Beasties

December 17, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Staffing turmoil appears to be the end-of-year trend in some Silicon Valley outfits. Apple is spitting out executives. Meta is thrashing. OpenAI is doing the Code Red alert thing amidst unsettled wizards. And, today I learned that Google has a chief technologist for AI infrastructure. I think that means data centers, but it could extend some oversight to the new material science lab in the UK that will use AI (of course) to invent new materials. “Exclusive / Google Names New Chief of AI Infrastructure Buildout” reports:

Amin Vahdat, who joined Google from academia roughly 15 years ago, will be named chief technologist for AI infrastructure, according to the memo, and become one of 15 to 20 people reporting directly to CEO Sundar Pichai. Google estimates it will have spent more than $90 billion on capital expenditures by the end of 2025, most of it going into the part of the company Vahdat will now oversee.

The sheep dog attempts to herd the little data center doggies away from environmental issues, infrastructure inconsistencies, and roll-your-own engineering. Woof. Thanks, Venice.ai. Close enough for horseshoes.

I read this as making clear the following:

- Google spent “more than $90 billion” on infrastructure in 2025

- No one was paying attention to this investment

- For 2026, a former academic steeped in Googliness will herd the sheep.

I assume that is part of the McKinsey way: Fire, Aim, Ready! Dinobabies like me with some blue chip consulting experience feel slightly more comfortable with the old school Ready, Aim, Fire! But the world today is different from the one I traveled through decades ago. Nostalgia does not cut it in the “we have to win AI” business environment today.

Here’s a quote making clear that planning and organizing were not part of the 2025 check writing:

“This change establishes AI Infrastructure as a key focus area for the company,” wrote Google Cloud CEO Thomas Kurian in the Wednesday memo congratulating Vahdat.

The cited article puts this sheep herder in context:

In August, Google disclosed in a paper co-authored by Vahdat that the amount of energy used to run the median prompt on its AI models was equivalent to watching less than nine seconds of television and consuming five drops of water. The numbers were far less than what some critics had feared and competitors had likely hoped for. There’s no single answer for how to best run an AI data center. It’s small, coordinated efforts across disparate teams that span the globe. The job of coordinating it all now has an official title.

See and understand. The power consumption for the Google AI data centers is trivial. The Google can plug these puppies into the local power grid, nip at the heels of the people who complain about rising electricity prices and brownouts, and nuzzle the folks who:

- Promise small, local nuclear power generation facilities. No problems with licensing, component engineering, and nuclear waste. Trivialities.

- Repurpose jet engines from a sort of real supersonic jet source. Noise? No problem. Heat? No problem. Emission control? No problem.

- Offer brand spanking new pressurized water reactors built by the old school nuclear crowd. No problem. Time? No problem. The new folks are accelerationists.

- Recommission turned off (deactivated) nuclear power stations. No problem. Costs? No problem. Components? No problem. Environmental concerns? Absolutely no problem.

Google is tops in strategic planning and technology. It should be. It crafted its expertise selling advertising. AI infrastructure is a piece of cake. I think sheep dogs herding AI can do the job that apparently was not done for more than a year. When a problem becomes too big to ignore, restructure. Grrr or Woof, not Yipe, little herder.

Stephen E Arnold, December 17, 2025

Can Sergey Brin Manage? Maybe Not?

December 12, 2025

True Reason Sergey Used “Super Voting Power”

Yuchen Jin, the CTO and co-founder of Hyperbolic Labs, posted on X about a recent situation at Google. According to the post, Sergey Brin was disappointed in how Google was using Gemini. The AI model, in fact, wasn’t being used for coding, and Sergey wanted it to be used for that.

It created a big tiff. Sergey told Sundar that, “I can’t deal with these people. You have to deal with this.” Sergey still owns Google and has super voting power. Translation: he can do whatever he darn well pleases with his own company.

Yuchen Jin summed it up well:

“Big companies always build bureaucracy. Sergey (and Larry) still have super voting power, and he used it to cut through the BS. Suddenly Google is moving like a startup again. Their AI went from “way behind” to “easily #1” across domains in a year.”

Congratulations to Google for making a move that other Big Tech companies knew to make without a founder’s intervention.

Google would have eventually shifted to using Gemini for coding. Sergey’s influence only sped it up. The bigger question is whether this “tiff” indicates something else. Big companies do have bureaucracies, but if older workers have retired, then new blood is needed. The current new blood is Gen Z, and they are as despised as Millennials once were.

I think this means Sergey cannot manage young tech workers either. He had to turn to the “consultant” to make things happen. It’s quite the admission from a Big Tech leader.

Whitney Grace, December 12, 2025

Clippy, How Is Copilot? Oh, Too Bad

December 8, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

In most of my jobs, rewards landed on my desk when I sold something. When the firms silly enough to hire me rolled out a product, I cannot remember one that failed. The sales professionals were the early warning system for many of our consulting firm’s clients. Management provided money to a product manager or R&D whiz with a great idea. Then a product or new service idea emerged, often at a company event. Some were modest, but others featured bells and whistles. One such rollout featured a big name person who had been an adviser to several presidents. These firms were either lucky or well managed. Product dogs, diseased ferrets, and outright losers were identified early and the efforts redirected.

Two sales professionals realize that their prospects resist Microsoft’s agentic pawing. Mortgages must be paid. Sneakers must be purchased. Food has to be put on the table. Sales are needed, not push backs. Thanks, Venice.ai. Good enough.

But my employers were in tune with what their existing customer base wanted. Climbing a tall tree and going out on a limb were not common occurrences. Even Apple, which resides in a peculiar type of commercial bubble, recognizes a product that does not sell. A recent example is the itsy bitsy, teeny weenie mobile thingy. Apple bounced back with the Granny Scarf designed to hold any mobile phone. The thin and light model has not been killed; it’s just not everywhere like the old reliable orange iPhone.

Sales professionals talk to prospects and customers. If something is not selling, the sales people report, “Problemo, boss.”

In the companies which employed me, the sales professionals knew what was coming and could mention it in appropriate terms to those in the target market. This happened before the product or service was in production or available to clients. My employers (Halliburton, Booz, Allen, and a couple of others held in high esteem) had the R&D, the market signals, the early warning system for bad ideas, and the refinement or improvement mechanism working in a reliable way.

I read “Microsoft Drops AI Sales Targets in Half after Salespeople Miss Their Quotas.” The headline suggested three things to me instantly:

- The pre-sales early warning radar system did not exist or it was broken

- The sales professionals said in numbers, “Boss, this Copilot AI stuff is not selling.”

- Microsoft committed billions of dollars and significant, expensive professional staff time to something that prospects and customers do not rush to write checks for, use, or tell their friends about as the next big thing.

The write up says:

… one US Azure sales unit set quotas for salespeople to increase customer spending on a product called Foundry, which helps customers develop AI applications, by 50 percent. Less than a fifth of salespeople in that unit met their Foundry sales growth targets. In July, Microsoft lowered those targets to roughly 25 percent growth for the current fiscal year. In another US Azure unit, most salespeople failed to meet an earlier quota to double Foundry sales, and Microsoft cut their quotas to 50 percent for the current fiscal year. The sales figures suggest enterprises aren’t yet willing to pay premium prices for these AI agent tools. And Microsoft’s Copilot itself has faced a brand preference challenge: Earlier this year, Bloomberg reported that Microsoft salespeople were having trouble selling Copilot to enterprises because many employees prefer ChatGPT instead.

Microsoft appears to have listened to the feedback. The adjustment, however, does not address the failure to implement the type of marketing probing process used by Halliburton and Booz, Allen. Instead, Microsoft implemented a “think it and it will become real” approach. The thinking in this case is that software can perform human work roles in a way that is equivalent to or better than a human’s execution.

I may be a dinobaby, but I figured out quickly that for the last three years smart software has been a utility. It is not quite useless, but it is not sufficiently robust to do the work that I do. Other people are on the same page as I am.

My take away from the lower quotas is that Microsoft should have a rethink. The OpenAI bet, the AI acquisitions, the death march to put software that makes mistakes in applications millions use in quite limited ways, and the crazy publicity output to sell Copilot are sending Microsoft leadership both audio and visual alarms.

Plus, OpenAI has copied Google’s weird Red Alert. Since Microsoft has skin in the game with OpenAI, perhaps Microsoft should open its eyes and check out the beacons and listen to the klaxons ringing in Softieland sales meetings and social media discussions about Microsoft AI? Just a thought. (That Telegram virtual AI data center service looks quite promising to me. Telegram’s management is avoiding the Clippy-type error. Telegram may fail, but that outfit is paying GPU providers in TONcoin, not actual fiat currency. The good news is that MSFT can make Azure AI compute available to Telegram and get paid in TONcoin. Sounds like a plan to me.)

Stephen E Arnold, December 8, 2025

Apple Misses the AI Boat Again

December 4, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Apple and Telegram have a characteristic in common. Neither recognized the AI boomlet that began in 2020 or so. Apple was thinking about Granny scarfs that could hold an iPhone and working out ways to cope with its dependence on Chinese manufacturing. Telegram was struggling with the US legal system and trying to create a programming language that a mere human could use to code a distributed application.

Apple’s ship has sailed, and it may dock at Google’s Gemini private island or it could decide to purchase an isolated chunk of real estate and build its de-perplexing AI system at that location.

Thanks, MidJourney. Good enough.

I thought about missing a boat or a train. The reason? I read “Apple AI Chief John Giannandrea Retiring After Siri Delays.” I simply don’t know who has been responsible for Apple AI. Siri did not work when I looked at it on my wife’s iPhone many years ago. Apparently it doesn’t work today. Could that be a factor in the leadership changes at the Tim Apple outfit?

The write up states:

Giannandrea will serve as an advisor between now and 2026, with former Microsoft AI researcher Amar Subramanya set to take over as vice president of AI. Subramanya will report to Apple engineering chief Craig Federighi, and will lead Apple Foundation Models, ML research, and AI Safety and Evaluation. Subramanya was previously corporate vice president of AI at Microsoft, and before that, he spent 16 years at Google.

Apple will probably have a person who knows some people to call at Softie and Google headquarters. However, when will the next AI boat arrive? Apple excelled at announcing AI, but no boat arrived. Telegram has an excuse; for example, owner Pavel Durov has been embroiled in legal hassles and arm wrestling with the reality that developing complex applications for the Telegram platform is too difficult. One would have thought that Apple could have figured out a way to improve Siri, but it apparently was lost in a reality distortion field. Telegram didn’t because Pavel Durov was in jail in Paris, then confined to the country, and had to report to the French judiciary like a truant schoolboy. Apple just failed.

The write up says:

Giannandrea’s departure comes after Apple’s major iOS 18 Siri failure. Apple introduced a smarter, “Apple Intelligence” version of Siri at WWDC 2024, and advertised the functionality when marketing the iPhone 16. In early 2025, Apple announced that it would not be able to release the promised version of Siri as planned, and updates were delayed until spring 2026. An exodus of Apple’s AI team followed as Apple scrambled to improve Siri and deliver on features like personal context, onscreen awareness, and improved app integration. Apple is now rumored to be partnering with Google for a more advanced version of Siri and other Apple Intelligence features that are set to come out next year.

My hunch is that grafting AI into the bizarro world of the iPhone and other Apple computing devices may be a challenge. Telegram’s solution is to not do hardware. Apple is now an outfit distinguishing itself by missing the boat. When does the next one arrive?

Stephen E Arnold, December 4, 2025