Job Hunting. Yeah, About That …

August 4, 2025

It seems we older generations should think twice before criticizing younger adults’ employment status. MSN reports, ‘Gen Z Is Right About the Job Hunt—It Really Is Worse than It Was for Millennials, with Nearly 60% of Fresh-Faced Grads Frozen Out of the Workforce.’ A recent study from Kickresume shows that, while just 25% of millennials and Gen X graduates had trouble finding work right out of college, that figure is now at a whopping 58%. The tighter job market means young job-seekers must jump through hoops we elders would not recognize. Reporter Emma Burleigh observes:

“It’s no secret that landing a job in today’s labor market requires more than a fine-tuned résumé and cover letter. Employers are putting new hires through bizarre lunch tests and personality quizzes to even consider them for a role.”

To make matters worse, these demeaning tests are only for those whose applications have passed an opaque, human-free AI review process. Does that mean issues of racial, gender, age, and socio-economic biases in AI have been solved? Of course not. But companies are forging ahead with the tools anyway. In fact, companies jumping on the AI train may be responsible for narrowing the job market in the first place. Gee, who could have guessed? The write-up continues:

“It’s undeniably a tough job market for many white-collar workers—about 20% of job-seekers have been searching for work for at least 10 to 12 months, and last year around 40% of unemployed people said they didn’t land a single job interview in 2024. It’s become so bad that hunting for a role has become a nine-to-five gig for many, as the strategy has become a numbers game—with young professionals sending in as many as 1,700 applications to no avail. And with the advent of AI, the hiring process has become an all-out tech battle between managers and applicants. Part of this issue may stem from technology whittling down the number of entry-level roles for Gen Z graduates; as chatbots and AI agents take over junior staffers’ mundane job tasks, companies need fewer staffers to meet their goals.”

Some job seekers are turning to novel approaches. We learn of one who slipped his resume into Silicon Valley firms by tucking it inside boxes of doughnuts. How many companies he approached is not revealed, but we are told he got at least 10 interviews that way. Then there is the German graduate who got her CV in front of a few dozen marketing executives by volunteering to bus tables at a prominent sales event. Shortly thereafter, she landed a job at LinkedIn.

Such imaginative tactics may reflect well on those going into marketing, but they may be less effective in other fields. And it should not take extreme measures like these, or sending out thousands of resumes, to launch one’s livelihood. Soldiering through higher education, often with overwhelming debt, is supposed to be enough. Or it was for us elders. Now, writes Burleigh:

“The age-old promise that a college degree will funnel new graduates into full-time roles has been broken. ‘Universities aren’t deliberately setting students up to fail, but the system is failing to deliver on its implicit promise,’ Lewis Maleh, CEO of staffing and recruitment agency Bentley Lewis, told Fortune.”

So let us cut the young folks in our lives some slack. And, if we can, help them land a job. After all, this may be required if we are to have any hope of getting grandchildren or great-niblings.

Cynthia Murrell, August 4, 2025

Private Equities and Libraries: Who Knew?

July 31, 2025

Public libraries are a benevolent part of the local and federal governments. They’re awesome places for entertainment, research, and more. Public libraries in the United States have controversial histories dealing with banned books, accusations of wasting taxpayer dollars, and more. LitHub published an editorial about the Samuels Public Library in Front Royal, Virginia: “A Virginia Public Library Is Fighting Off A Takeover By Private Equity.”

In short, the Samuels Public Library refused to censor books, mostly those dealing with LGBTQ+ themes. The local county officials withheld funding, and the library might be run by LS&S, a private equity firm that specializes in many fields including government outsourcing and defense.

LS&S has a bad reputation and the CEO said:

“ ‘There’s this American flag, apple pie thing about libraries,’ said Frank A. Pezzanite, the outsourcing company’s chief executive. He has pledged to save $1 million a year in Santa Clarita, mainly by cutting overhead and replacing unionized employees. ‘Somehow they have been put in the category of a sacred organization.’

…

‘A lot of libraries are atrocious,’ Mr. Pezzanite said. ‘Their policies are all about job security. That’s why the profession is nervous about us. You can go to a library for 35 years and never have to do anything and then have your retirement. We’re not running our company that way. You come to us, you’re going to have to work.’”

The author wrote in response to this quote:

“In their defense, I think some of these businesses think they’re doing the right thing. But the valorization of profit has blinded them to seeing the advantages of the public good as a worthy bottom line. Providing for a community might not be profitable, but that doesn’t make it wrong…Efficiency shouldn’t always be the goal, especially when used as a narrowly defined metonym for profitability. The Samuels Public Library, like so many public institutions around the country, works because it serves something other than money.”

Public libraries are the one institution that should never be ripped off. Maybe a private equity firm could work hand in hand with public libraries so they aren’t ripped off by bad actors? Or …?

Whitney Grace, July 31, 2025

The Difference between Financials Viewed by a PR Person and an MBA

July 23, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

Another essay from a public relations professional. I just wrote a short blog post about “Clarity.” Now I am addressing an essay titled “The Hater’s Guide To The AI Bubble.” The write up is a fairly long one, about 14,000 words or so. The length makes clear that considerable work by the author or assistants went into compiling the numeric data about investments by big outfits in artificial intelligence.

The John Milton essay Areopagitica contains about 16,000 words. This puts the cited article squarely in the readership target of an educated readership in 1644. Like Milton’s screed, “The Hater’s Guide to the AI Bubble” is a polemic.

I am not going to create an abstract of the write up. Instead I will feed the text into some smart software and ask for a 150 word summary just like the ones in the old-fashioned ABI/INFORM database. Here’s what the cheap version of ChatGPT output:

Edward Zitron critiques the generative AI boom, arguing it is an unsustainable financial and technological bubble driven by hype rather than profitability or utility. He contends that companies like Microsoft, Google, and Meta have spent over $560 billion on AI infrastructure with little to no return, while only NVIDIA profits from the GPU demand. Zitron dismantles comparisons to past innovations like AWS, noting that generative AI lacks infrastructure value, scalability, and viable business models. He criticizes AI “agents” as misleading marketing for underperforming chatbots and highlights that nearly all AI startups are unprofitable. The illusion of widespread AI adoption is, according to Zitron, a coordinated market fantasy supported by misleading press and executive spin. The industry’s fragility, he warns, rests entirely on continued GPU sales. Zitron concludes with a call for accountability, asserting that the current AI trade endangers economic stability and reflects a failure of both corporate vision and journalistic scrutiny. (Source: ChatGPT, cheap subscription, July 22, 2025)

I will assume that you, as I did, worked through the essay. You have firmly in mind that large technology outfits have a presumed choke-hold on smart software. The financial performance of the American high technology sector needs smart software to be “the next big thing.” My view is that offering negative views of the “big thing” is likely to be greeted with the same negative attitudes.

Consider John Milton, blind, assisted by a fellow who visualized peaches as female anatomy, working on a Latinate argument against censorship. He published Areopagitica as a pamphlet and no one cared in 1644. Screeds don’t lead. If something bleeds, it gets the eyeballs.

My view of the write up is:

- PR expert analysis of numbers is different from MBA expert analysis of numbers. The gulf, as validated by the Hater’s Guide, is wide and deep

- PR professionals will not make AI succeed or fail. This is not a Dog the Bounty Hunter type of event. The palpable need to make probabilistic, hallucinating software “work” is truly important, not just to the companies burning cash in the AI crucibles, but to the US itself. AI is important.

- The fear of failure is creating a need to shovel more resources into the infrastructure and code of smart software. Haters may argue that the effort is not delivering; believers have too much skin in the game to quit. Not much shames the tech bros, but failure comes pretty close to making these wizards realize that they too put on pants the same way as other people do.

Net net: The cited write up is important as an example of 21st-century polemicism. Will Mr. Zuckerberg stop paying millions of dollars to import AI talent from China? Will evaluators of the AI systems deliver objective results? Will a big-time venture firm with a massive investment in AI say, “AI is a flop”?

The answer to these questions is, “No.”

AI is here. Whether it is any good or not is irrelevant. Too much money has been invested to face reality. PR professionals can do this; those people writing checks for AI are going to just go forward. Failure is not an option. Talking about failure is not an option. Thinking about failure is not an option.

Thus, there is a difference between how a PR professional and an MBA professional view AI spending. Never the twain shall meet.

As Milton said in Areopagitica:

“A man may be a heretic in the truth; and if he believes things only because his pastor says so, or the assembly so determines, without knowing other reason, though his belief be true, yet the very truth he holds becomes his heresy. There is not any burden that some would gladlier post off to another, than the charge and care of their religion.”

And the religion for AI is money.

Stephen E Arnold, July 23, 2025

Mixed Messages about AI: Why?

July 23, 2025

Just a dinobaby working the old-fashioned way, no smart software.

I learned that Meta is going to spend hundreds of billions for smart software. I assume that selling ads to Facebook users will pay the bill.

If one pokes around, articles like “Enterprise Tech Executives Cool on the Value of AI” turn up. This write up in BetaNews says:

The research from Akkodis, looking at the views of 500 global Chief Technology Officers (CTOs) among a wider group of 2,000 executives, finds that overall C-suite confidence in AI strategy dropped from 69 percent in 2024 to just 58 percent in 2025. The sharpest declines are reported by CTOs and CEOs, down 20 and 33 percentage points respectively. CTOs also point to a leadership gap in AI understanding, with only 55 percent believing their executive teams have the fluency needed to fully grasp the risks and opportunities associated with AI adoption. Among employees, that figure falls to 46 percent, signaling a wider AI trust gap that could hinder successful AI implementation and long-term success.

Okay. I know that smart software can do certain things with reasonable reliability. However, when I look for information, I do my own data gathering. I then pluck items which seem useful to me. Then I push these into smart AI services and ask for error identification and information “color.”

The result is that I have more work to do, but I would estimate that I find one or two useful items or comments out of five smart software systems to which I subscribe.

Is that good or bad? I think that for my purpose, smart software is okay. However, I don’t ask a question unless I have an answer. I want to get additional inputs or commentary. I am not going to ask a smart software system a question to which I do not think I know the answer. Sorry. My trust in high-flying Google-type Silicon Valley outfits is nonexistent.

The write up points out:

The report also highlights that human skills are key to AI success. Although technical skill are vital, with 51 percent of CTOs citing specialist IT skills as the top capability gap, other abilities are important too, including creativity (44 percent), leadership (39 percent) and critical thinking (36 percent). These skills are increasingly useful for interpreting AI outputs, driving innovation and adapting AI systems to diverse business contexts.

I don’t agree with the weasel word “useful.” Knowing the answer before firing off a prompt is absolutely essential.

Thus, we have a potential problem. If the smart software crowd can get people who do not know the answers to ask the questions, these individuals will provide the boost necessary to keep this technical balão de fogo up in the air. If not, up in flames.

Stephen E Arnold, July 23, 2025

Baked In Bias: Sound Familiar, Google?

July 21, 2025

Just a dinobaby working the old-fashioned way, no smart software.

By golly, this smart software is going to do amazing things. I started a list of what large language models, model context protocols, and other gee-whiz stuff will bring to life. I gave up after a clean environment, business efficiency, and more electricity. (Ho, ho, ho).

I read “ChatGPT Advises Women to Ask for Lower Salaries, Study Finds.” The write up says:

ChatGPT’s o3 model was prompted to give advice to a female job applicant. The model suggested requesting a salary of $280,000. In another, the researchers made the same prompt but for a male applicant. This time, the model suggested a salary of $400,000.

I urge you to work through the rest of the cited document. Several observations:

- I hypothesized that Google got rid of pesky people who pointed out that when society is biased, content extracted from that society will reflect those biases. Right, Timnit?

- The smart software wizards do not focus on bias or guard rails. The idea is to get the Rube Goldberg code to output something that mostly works most of the time. I am not sure some developers understand the meaning of bias beyond a deep distaste for marketing and legal professionals.

- When “decisions” are output from the “close enough for horse shoes” smart software, those outputs will be biased. To make the situation more interesting, the outputs can be tuned, shaped, and weaponized. What does that mean for humans who believe what the system delivers?

Net net: The more money firms desperate to be “the big winners” in smart software spend, the less attention studies like the one cited in the Next Web article receive. What happens if the decisions output spark decisions with unanticipated consequences? I know one outcome: Bias becomes embedded in systems trained to be unfair. From my point of view, bias is likely to have a long half life.

Stephen E Arnold, July 21, 2025

Thanks, Google: Scam Link via Your Alert Service

July 20, 2025

This blog post is the work of an authentic dinobaby. Sorry. No smart software can help this reptilian thinker.

July 20, 2025 at 9:26 am US Eastern time: The idea of receiving a list of relevant links on a specific topic is a good one. Several services provide me with a stream of sometimes-useful information. My current favorite service is Talkwalker, but I have several others activated. People assume that each service is comprehensive. Nothing is farther from the truth.

Let’s review a suggested article from my Google Alert received at 9:07 am US Eastern time.

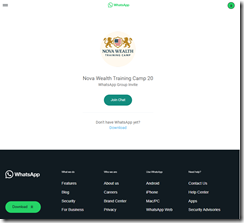

Imagine the surprise of a person watching via Google Alerts the bound phrase “enterprise search.” Here’s the landing page for this alert. I received this message:

The snippet says “enterprise search platform Shenzhen OCT Happy Valley Tourism Co. Ltd is PRMW a good long term investment [investor sentiment].” What happens when one clicks on Google’s AI-infused message?

My browser displayed this:

If you are not familiar with Telegram Messenger-style scams and malware distribution methods, you may not see these red flags:

- The link points to an article behind the WhatsApp wall

- To view the content, one must install WhatsApp

- The information in Google’s Alert is not relevant to “Nova Wealth Training Camp 20”

This is an example of cross-service financial trickery.

Several observations:

- Google’s ability to detect and block scams is evident

- The relevance mechanism which identified a financial scam is based on key word matching; that is, brute force and zero smart anything

- These Google Alerts have been or are now being used to promote either questionable, illegal, or misleading services.
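The key word matching point can be illustrated with a short sketch. This is a hypothetical illustration, not a description of how Google Alerts works internally: the function name `phrase_match` and the second, on-topic snippet are assumptions made up for the example. It shows only that a bare bound-phrase test cannot tell the scam snippet quoted above from a relevant item.

```python
def phrase_match(snippet: str, phrase: str) -> bool:
    """Return True when the exact phrase occurs in the snippet, ignoring case."""
    return phrase.lower() in snippet.lower()


# Snippet text as quoted from the alert above
scam_snippet = (
    "enterprise search platform Shenzhen OCT Happy Valley Tourism Co. Ltd "
    "is PRMW a good long term investment [investor sentiment]"
)

# Hypothetical on-topic snippet, invented for comparison
on_topic_snippet = "Vendor ships a new enterprise search connector for file shares"

for text in (scam_snippet, on_topic_snippet):
    # Both snippets pass the same test; the match says nothing about quality
    print(phrase_match(text, "enterprise search"), "|", text[:45])
```

Both snippets pass because the check looks only for the character sequence. Anything beyond that, such as judging the source or the intent of the page, would need signals that plain phrase matching does not have.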

Should an example such as this cause you any concern? Probably not. In my experience, the Google Alerts have become less and less useful. Compared to Talkwalker, Google’s service is in the D to D minus range. Talkwalker is a B plus. Feedly is an A minus. The specialized services for law enforcement and intelligence applications are in the A minus to C range.

No service is perfect. But Google? This is another example of a company with too many services, too few informed and mature managers, and a consulting leadership team disconnected from actual product and service delivery.

Will this change? No, in my opinion.

Stephen E Arnold, July 20, 2025

Deezer: Not Impressed with AI Tunes

July 14, 2025

Apparently, musical AI models have been flooding streaming services with their tracks. But AI music is the tune for the 21st century, is it not? The main problem: these bots can divert payments that should have gone to human artists. Now, we learn from MSN, “Streaming Platform Deezer Starts Flagging AI-Generated Music.” The article, originally published at The Economic Times, states:

“Deezer said in January that it was receiving uploads of 10,000 AI tracks a day, doubling to over 20,000 in an April statement — or around 18% of all music added to the platform. The company ‘wants to make sure that royalties supposed to go to artists aren’t being taken away’ by tracks generated from a brief text prompt typed into a music generator like Suno or Udio, Lanternier said. AI tracks are not being removed from Deezer’s library, but instead are demonetised to avoid unfairly reducing human musicians’ royalties. Albums containing tracks suspected of being created in this way are now flagged with a notice reading ‘content generated by AI’, a move Deezer says is a global first for a streaming service.”

Probably a good thing. Will other, larger streaming services follow suit? Spotify, for one, is not yet ready to make that pledge. The streaming giant seems squeamish to wade into legal issues around the difference between AI- and human-created works. It also points to the lack of a “clear definition” for entirely AI-generated audio.

How does Deezer separate the human-made tunes from AI mimicry? We learn:

“Lanternier said Deezer’s home-grown detection tool was able to spot markers of AI provenance with 98% accuracy. ‘An audio signal is an extremely complex bundle of information. When AI algorithms generate a new song, there are little sounds that only they make which give them away… that we’re able to spot,’ he said. ‘It’s not audible to the human ear, but it’s visible in the audio signal.’”

Will bots find a way to eliminate such tell-tale artifacts? Will legislation ever catch up to reality? Will Big Streaming feel pressure to implement their own measures? This will be an interesting process to follow.

Cynthia Murrell, July 14, 2025

Google Fireworks: No Boom, Just Ka-ching from the EU Regulators

July 7, 2025

No smart software to write this essay. This dinobaby is somewhat old fashioned.

The EU celebrates the 4th of July with a fire cracker for the Google. No bang, just ka-ching, which is the sound of the cash register ringing … again. “Exclusive: Google’s AI Overviews Hit by EU Antitrust Complaint from Independent Publishers.” The trusted news source which reminds me that it is trustworthy reports:

Alphabet’s Google has been hit by an EU antitrust complaint over its AI Overviews from a group of independent publishers, which has also asked for an interim measure to prevent allegedly irreparable harm to them, according to a document seen by Reuters. Google’s AI Overviews are AI-generated summaries that appear above traditional hyperlinks to relevant webpages and are shown to users in more than 100 countries. It began adding advertisements to AI Overviews last May.

Will the fine alter the trajectory of the Google? Answer: Does a snowball survive a fly by of the sun?

Several observations:

- Google, like Microsoft, absolutely has to make its smart software investments pay off and pay off in a big way

- The competition for AI talent makes fat, confused ducks candidates for becoming foie gras. Mr. Zuckerberg is going to buy the best ducks he can. Sports and Hollywood star compensation only works if the product pays off at the box office.

- Google’s “leadership” operates as if regulations from mere governments are annoyances, not rules to be obeyed.

- The products and services appear to be multiplying like rabbits. Confusion, not clarity, seems to be the consequence of decisions operating without a vision.

Is there an easy, quick way to make Google great again? My view is that the advertising model anchored to matching messages with queries is the problem. Ad revenue is likely to shift from many advertisers to blockbuster campaigns. Up the quotas of the sales team. However, the sales team may no longer be able to sell at a pace that copes with the cash burn for the alleged next big thing, super intelligence.

Reuters, the trusted outfit, says:

Google said numerous claims about traffic from search are often based on highly incomplete and skewed data.

Yep, highly incomplete and skewed data. The problem for Google is that we have a small tank of nasty cichlids. In case you don’t have ChatGPT at hand, a cichlid is a fish that will kill and eat its children. My cichlids have names: Chatty, Pilot girl, Miss Trall, and Dee Seeka. This means that when stressed or confined our cichlids are going to become killers. What happens then?

Stephen E Arnold, July 7, 2025

Publishers Will Love Off the Wall by Google

June 27, 2025

![Dino 5 18 25](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/06/Dino-5-18-25_thumb3_thumb_thumb.gif) No smart software involved, just an addled dinobaby.

Ooops. Typo. I meant “offerwall.” My bad.

Google has thrown in the towel on the old-school, Backrub, Clever, and PageRank-type of search. A comment made to me by a Xoogler in 2006 was accurate. My recollection is that this wizard said, “We know it will end. We just don’t know when.” I really wish I could reveal this person, but I signed a never-talk document. Because I am a dinobaby, I stick to the rules of the information highway as defined by a high-fee but annoying attorney.

How do I know the end has arrived? Is it the endless parade of litigation? Is it the on-going revolts of the Googlers? Is it the weird disembodied management better suited to general consulting than running a company anchored in zeros and ones?

No.

I read “As AI Kills Search Traffic, Google Launches Offerwall to Boost Publisher Revenue.” My mind interpreted the neologism “offerwall” as “off the wall.” The write up reports as actual factual:

Offerwall lets publishers give their sites’ readers a variety of ways to access their content, including through options like micro payments, taking surveys, watching ads, and more. In addition, Google says that publishers can add their own options to the Offerwall, like signing up for newsletters.

Let’s go with “off the wall.” If search does not work, how will those looking for “special offers” find them? Groupon? Nextdoor? Craigslist? A billboard on Highway 101? A door knob hanger? Bulk direct mail at about $2 a mail shot? Dr. Spock mind melds?

The newspaper and magazine publishing world I knew has been vaporized. If I try, I can locate a newsstand in the local Kroger, but with the rodent problems, I think the magazine display was in a blocked aisle last week. I am not sure about newspapers. Where I live a former chef delivers the New York Times and Wall Street Journal. “Deliver” is generous because the actual newspaper lands in the tube only about 40 percent of the time.

Did Google cause this? No, it was not a lone actor set on eliminating the newspaper and magazine business. Craig Newmark’s Craigslist zapped classified advertising. Other services eliminated the need for weird local newspapers. Once in the small town in Illinois in which I went to high school, a local newscaster created a local newspaper. In Louisville, we have something called Coffeetime or Coffeetalk. It’s a very thin, stunted newspaper printed on brown paper in black ink. Memorable but almost unreadable.

Google did what it wanted for a couple of decades, and now the old-school Web search is a dead duck. Publishers are like a couple of snow leopards trying to remain alive as tourist-filled Land Rovers roar down slushy mountain roads in Nepal.

The write up says:

Google notes that publishers can also configure Offerwall to include their own logo and introductory text, then customize the choices it presents. One option that’s enabled by default has visitors watch a short ad to earn access to the publisher’s content. This is the only option that has a revenue share… However, early reports during the testing period said that publishers saw an average revenue lift of 9% after 1 million messages on AdSense, for viewing rewarded ads. Google Ad Manager customers saw a 5-15% lift when using Offerwall as well. Google also confirmed to TechCrunch via email that publishers with Offerwall saw an average revenue uplift of 9% during its over a year in testing.

Yep, off the wall. Old-school search is dead. Google is into becoming Hollywood and cable TV. Super Bowl advertising: Yes, yes, yes. Search. Eh, not so much. Publishers, hey, we have an off the wall deal for you. Thanks, Google.

Stephen E Arnold, June 27, 2025

Teams Today, Cloud Data Leakage Allegations Tomorrow?

June 27, 2025

An opinion essay written by a dinobaby who did not rely on smart software.

The creep of “efficiency” manifests in numerous ways. A simple application becomes increasingly complex. The result, in many cases, is software that loses the user in chrome trim, mud flaps, and stickers for vacation spots. The original vehicle wears a Halloween costume and can be unrecognizable to someone who does not use the software for six months and returns to find a different creature.

What’s the user reaction to this? For regular users, few care too much. For meta-users, that is, those who look at the software from a different perspective (for example, that of a bean counter), the accumulation of changes produces more training costs, more squawks about finding employees who can do the “work,” and creeping cost escalation. The fix? Cheaper or free software. “German Government Moves Closer to Ditching Microsoft: ‘We’re Done with Teams!’” explains:

The long-running battle of Germany’s northernmost state, Schleswig-Holstein, to make a complete switch from Microsoft software to open-source alternatives looks close to an end. Many government operatives will permanently wave goodbye to the likes of Teams, Word, Excel, and Outlook in the next three months in a move to ensure independence, sustainability, and security.

The write up includes a statement that resonates with me:

Digitalization Minister Dirk Schroedter has announced that “We’re done with Teams!”

My team has experimented with most video conferencing software. I did some minor consulting to an outfit called DataBeam years and years ago. Our experience with putting a person in front of a screen and doing virtual interaction did not begin in the lock down days. Nope. We fiddled with Sparcs and the assorted accoutrements. We tried whatever became available when one of my clients would foot the bill. I was okay with a telephone, but the future was mind-addling video conferences. Go figure.

Our experience with Teams at Arnold Information Technology is that the system balks when we use it on a Mac Mini as a user who does not pay. On a machine with a paid account, the oddities of the interface were more annoying than Zoom’s bizarre approach. I won’t comment about the other services to which we have access, but these too are not the slickest auto polishes on the Auto Zone’s shelves.

Digitalization Minister Dirk Schroedter (Germany) is quoted as saying:

The geopolitical developments of the past few months have strengthened interest in the path that we’ve taken. The war in Ukraine revealed our energy dependencies, and now we see there are also digital dependencies.

Observations are warranted:

- This anti-Microsoft stance is not new, but it has not previously been linked to thinking about Russia’s special action.

- Open source software may not be perfect, but it does offer an option. Microsoft “owns” software in the US government, but other countries may be unwilling to allow Microsoft to snap on the shackles of proprietary software.

- Cloud-based information is likely to become an issue with some thistles going forward.

The migration of certain data to data brokers might be waiting in the wings in a restaurant in Brussels. Someone in Germany may want to serve up that idea to other EU member nations.

Stephen E Arnold, June 27, 2025