Amazon: A Secret of Success Revealed

January 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read “Jeff Bezos Reportedly Told His Team to Attack Small Publishers Like a Cheetah Would Pursue a Sickly Gazelle in Amazon’s Early Days — 3 Ruthless Strategies He’s Used to Build His Empire.” The inspirational story makes clear why so many companies, managers, and financial managers find the Bezos Bulldozer a slick vehicle. Who needs a better role model for the Information Superhighway?

Although this machine-generated cheetah is chubby, the big predator looks quite content after consuming a herd of sickly gazelles. No wonder so many admire the beast. Can the chubby creature catch up to the robotic wizards at OpenAI-type firms? Thanks, MSFT Copilot Bing thing. It was a struggle to get this fat beast but good enough.

The write up is not so much news as a summing up of what I think of as Bezos brainwaves. For example, the write up describes the creator of the Bezos Bulldozer as either “sadistic” or a “godfather.” Another facet of Mr. Bezos’ approach to business is an aggressive price strategy. The third tool in the bulldozer’s toolbox is creating an “adversarial” environment. That sounds delightful: “Constant friction.”

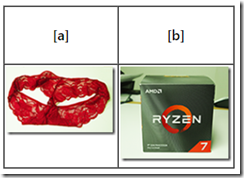

But I think there are other techniques in play. For example, we ordered a $600 CPU. Amazon or one of its “trusted partners” shipped red panties in an AMD Ryzen box. [a] The CPU and [b] its official box. Fashionable, right?

This image appeared in my April 2022 Beyond Search. Amazon customer support insisted that I received a CPU, not panties in an AMD box. The customer support process made it crystal clear that I was trying to cheat them. Yeah, nice accusation and a big laugh when I included the anecdote in one of my online fraud lectures at a cyber crime conference.

More recently, I received a smashed package with a plastic bag displaying this message: “We care.” When I posted a review of the shoddy packaging and the impossibility of contacting Amazon, I received several email messages asking me to go to the Amazon site and report the problem. Oh, the merchant in question is named Meta Bosem:

Amazon asks me to answer this question before I get a resolution to this predatory action. Amazon pleads, “Did this solve my problem?” No. I will survive being the victim of what seems to be a way to down a sickly gazelle. (I am just old, not sickly.)

The somewhat poorly assembled article cited above includes one interesting statement which either a robot or an underpaid humanoid presented as a factoid about Amazon:

Malcolm Gladwell’s research has led him to believe that innovative entrepreneurs are often disagreeable. Businesses and society may have a lot to gain from individuals who “change up the status quo and introduce an element of friction,” he says. A disagreeable personality — which Gladwell defines as someone who follows through even in the face of social approval — has some merits, according to his theory.

Yep, the benefits of Amazon. Let me identify the ones I experienced with the panties and the smashed product in the “We care” wrapper:

- Quality control and quality assurance. Hmmm. Similar to the aircraft manufacturer whose planes feature self-removing doors at 14,000 feet.

- Customer service. I love the question before the problem is addressed which asks, “Did this solve your problem?” (The answer is, “No.”)

- Reliable vendors. I wonder if the Meta Bosum folks would like my pair of large red female undergarments for one of their computers?

- Business integrity. What?

But what does one expect from a techno feudal outfit which presents products named by smart software? For details of this recent flub, navigate to “Amazon Product Name Is an OpenAI Error Message.” This article states:

We’re accustomed to the uncanny random brand names used by factories to sell directly to the consumer. But now the listings themselves are being generated by AI, a fact revealed by furniture maker FOPEAS, which now offers its delightfully modern yet affordable I’m sorry but I cannot fulfill this request it goes against OpenAI use policy. My purpose is to provide helpful and respectful information to users in brown.

Isn’t Amazon a delightful organization? Sickly gazelles, be cautious when you hear the rumble of the Bezos Bulldozer. It does not move fast and break things. The company has weaponized its pursuit of revenue. Publishers, dinobabies, and humanoids can be nothing other than prey if the cheetah assertion is accurate. And the government regulatory authorities in the US? Great job, folks.

Stephen E Arnold, January 15, 2024

Open Source Software: Free Gym Shoes for Bad Actors

January 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Many years ago, I completed a number of open source projects. Although different clients hired my team and me, the big question was, “What’s the future of open source software as an investment opportunity and as a substitute for commercial software?” Our work focused on three major points:

- Community support for a widely-used software once the original developer moved on

- A way to save money and get rid of the “licensing handcuffs” commercial software companies clamped on their customers

- Security issues resulting from poisoned code or obfuscated “special features.”

My recollection is that the customers focused on one point, the opportunity to save money. Commercial software vendors were in the “lock in” game, and open source software for database, utility, and search and retrieval functions offered a way out.

Today, a young innovator may embrace open source as the foundation for an innovation built on generative smart software. Apart from the issues embedded in the large language model methods themselves, building a product on other people’s code available as open source software looks like a certain path to money.

An open source game plan sounds like a winner. Then upon starting work, the path reveals its risks. Thanks, MSFT Copilot, you exhausted me this morning. Good enough.

I thought about our work in open source when I read “So, Are We Going to Talk about How GitHub Is an Absolute Boon for Malware, or Nah?” The write up opines:

In a report published on Thursday, security shop Recorded Future warns that GitHub’s infrastructure is frequently abused by criminals to support and deliver malware. And the abuse is expected to grow due to the advantages of a “living-off-trusted-sites” strategy for those involved in malware. GitHub, the report says, presents several advantages to malware authors. For example, GitHub domains are seldom blocked by corporate networks, making it a reliable hosting site for malware.

Those cost advantages can be vaporized once a security issue becomes known. The write up continues:

Reliance on this “living-off-trusted-sites” strategy is likely to increase and so organizations are advised to flag or block GitHub services that aren’t normally used and could be abused. Companies, it’s suggested, should also look at their usage of GitHub services in detail to formulate specific defensive strategies.

How about a risk round up?

- The licenses vary. Litigation is a possibility. For big companies with lots of legal eagles, court battles are no problem. Just write a check or cut a deal.

- Forks make it easy for bad actors to exploit some open source projects.

- A big aggregator of open source like MSFT GitHub is not in the open source business and may deflect criticism without spending money to correct issues as they are discovered. It’s free software, isn’t it?

- The “community” may be composed of good actors who accept cash from what looks like a reputable organization and become the unwitting dupes of an industrialized cyber gang.

- Commercial products integrating or built upon open source may have to do some very fancy dancing when a problem becomes publicly known.

There are other concerns as well. The problem is that open source’s appeal is now powered by two different performance enhancers. The first is the perception that open source software reduces certain costs. The second is the mad integration of open source smart software.

What’s the fix? My hunch is that words will take the place of meaningful action and remediation. Economic pressure and the desire to use what is free make more sense to many business wizards.

Stephen E Arnold, January 15, 2024

Believe in Smart Software? Sure, Why Not?

January 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Predictions are slippery fish. Grab one, a foot long Lake Michigan beastie. Now hold on. Wow, that looked easy. Predictions are similar. But slippery fish can get away or flop around and make those in the boat look silly. I thought about fish and predictions when I read “What AI will Never Be Able To Do.” The essay is a replay of an answer from an AI or smart software system.

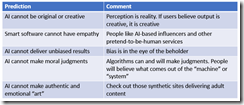

My initial reaction was that someone came up with a blog post that required Google Bard and what seems to be minimal effort to create. I am thinking about how a high school student might rely on ChatGPT to write an essay about a current event or a how-to essay. I reread the write up and formulated several observations. The table below presents the “prediction” and my comment about that statement. I end the essay with a general comment about smart software.

The presentation of word salad reassurances underscores a fundamental problem of smart software. The system can be tuned to reassure. At the same time, the companies operating the software can steer, shape, and weaponize the information presented. Those without the intellectual equipment to research and reason about outputs are likely to accept the answers. The deterioration of education in the US and other countries virtually guarantees that smart software will replace critical thinking for many people.

Don’t believe me? Ask one of the engineers working on next generation smart software. Just don’t ask the systems or the people who use another outfit’s software to do the thinking.

Stephen E Arnold, January 12, 2024

Cheating: Is It Not Like Love, Honor, and Truth?

January 10, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I would like to believe the information in this story: “ChatGPT Did Not Increase Cheating in High Schools, Stanford Researchers Find.” My reservations can be summed up with three points: [a] The Stanford president (!) who made up data, [b] The behavior of Stanford MBAs at certain go-go companies, and [c] How does one know a student did not cheat? (I know the answer: Surveillance technology, perchance. Ooops. That’s incorrect. That technology is available to Stanford graduates working at certain techno feudalist outfits.)

Mom asks her daughter, “I showed you how to use the AI generator, didn’t I? Why didn’t you use it?” Thanks, MSFT Copilot Bing thing. Pretty good today.

The cited write up reports as actual factual:

The university, which conducted an anonymous survey among students at 40 US high schools, found about 60% to 70% of students have engaged in cheating behavior in the last month, a number that is the same or even decreased slightly since the debut of ChatGPT, according to the researchers.

I have tried to avoid big time problems in my dinobaby life. However, I must admit that in high school, I did these things: [a] Worked with my great grandmother to create a poem subsequently published in a national anthology in 1959. Granny helped me cheat; she was a deceitful septuagenarian as I recall. I put my name on the poem, omitting Augustus. Yes, cheating. [b] Sold homework to students not in my advanced classes. I would consider this cheating, but I was saving money for my summer university courses at the University of Illinois. I went for the cash. [c] After I ended up in the hospital, my girlfriend at the time showed up, reviewed the work covered in class, and finished a science worksheet because I passed out from the post-surgery medications. Yes, I cheated, and Linda Mae, who subsequently spent her life in Africa as a nurse, helped me cheat. I suppose I will burn in hell. My summary suggests that “cheating” is an interesting concept, and it has some nuances.

Did the Stanford (let’s make up data) University researchers nail down cheating or just hunt for the AI thing? Are the data reproducible? Was the methodology rigorous, the results validated, and micro analyses run to determine if the data were on the money? Yeah, sure, sure.

I liked this statement:

Stanford also offers an online hub with free resources to help teachers explain to high school students the dos and don’ts of using AI.

In the meantime, the researchers said they will continue to collect data throughout the school year to see if they find evidence that more students are using ChatGPT for cheating purposes.

Yep, this is a good pony to ride. I would ask: Is plain vanilla Google search a form of cheating? I think it is. With most of the people online using it, doesn’t everyone cheat? Let’s ask the Harvard ethics professor, a senior executive at a Facebook-type outfit, and the former president of Stanford.

Stephen E Arnold, January 10, 2024

Google and the Company It Keeps: Money Is Money

January 10, 2024

This essay is the work of a dumb dinobaby. No smart software required.

If this recent report from Adalytics is accurate, not even Google understands how and where its Google Search Partners (GSP) program is placing ads for both its advertising clients and itself. A piece at Adotat discusses “The Google Exposé: Peeling Back the Layers of Ad Network Mysteries.” Google promises customers of this highly lucrative program their ads will only appear alongside content they would approve of. However, writer Pesach Lattin charges:

“The program, shrouded in opacity, is alleged to be a haven for brand-unsafe ad inventory, a digital Wild West where ads could unwittingly appear alongside content on pornography sites, right-wing fringe publishers, and even on sites sanctioned by the White House in nations like Iran and Russia.”

How could this happen? Google expands its advertising reach by allowing publishers to integrate custom searches into their sites. If a shady publisher has done so, there’s no way to know short of stumbling across it: unlike Bing, Google does not disclose placement URLs. To make matters worse, Google search advertisers are automatically enrolled in GSP with no clear way to opt out. But surely the company at least protects itself, right? The post continues:

“Surprisingly, even Google’s own search ads weren’t immune to these problematic placements. This startling fact raises serious questions about the awareness and control Google’s ad buyers have over their own system. It appears that even within Google, there’s a lack of clarity about the inner workings of their ad technology. According to TechCrunch, Laura Edelson, an assistant professor of computer science at Northeastern University, known for her work in algorithmic auditing and transparency, echoes this sentiment. She suggests that Google may not fully grasp the complexities of its own ad network, losing sight of how and where its ads are displayed.”

Well, that is not good. Lattin points out that the problem, and the lack of transparency around it, mean Google and its clients may be unwittingly breaking ethical advertising standards and even violating the law. And they might never know or, worse, a problematic placement could spark a PR or legal nightmare. Ah, Google.

Cynthia Murrell, January 10, 2024

Googley Gems: 2024 Starts with Some Hoots

January 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Another year and I will turn 80. I have seen some interesting things in my 58-year work career, but a couple of black swans have flown across my radar system. I want to share what I find anomalous or possibly harbingers of the new normal.

A dinobaby examines some Alphabet Google YouTube gems. The work is not without its AGonY, however. Thanks, MSFT Copilot Bing thing. Good enough.

First up is another “confession” or “tell all” about the wild, wonderful Alphabet Google YouTube or AGY. (Wow, I caught myself. I almost typed “agony”, not AGY. I am indeed getting old.)

I read “A Former Google Manager Says the Tech Giant Is Rife with Fiefdoms and the Creeping Failure of Senior Leaders Who Weren’t Making Tough Calls.” The headline is a snappy one. I like the phrase “creeping failure.” Nifty image like melting ice and tundra releasing exciting extinct biological bits and everyone’s favorite gas. Let me highlight one point in the article:

[Google has] “lots of little fiefdoms” run by engineers who didn’t pay attention to how their products were delivered to customers. …this territorial culture meant Google sometimes produced duplicate apps that did the same thing or missed important features its competitors had.

I disagree. Plenty of small Web site operators complain about decisions which destroy their businesses. In fact, I am having lunch with one of the founders of a firm deleted by Google’s decider. Also, I wrote about a fellow in India who is likely to suffer the slings and arrows of outraged Googlers because he shoots videos of India’s temples and suggests they have meanings beyond those inculcated in certain castes.

My observation is that happy employees don’t run conferences to explain why Google is a problem or write these weird “let me tell you what life is really like” essays. Something is definitely being signaled. Could it be distress, annoyance, or down-home anger? The “gem”, therefore, is AGY’s management AGonY.

Second, AGY is ramping up its thinking about monetization of its “users.” I noted that “Google Bard Advanced Is Coming, But It Likely Won’t Be Free” reports:

Google Bard Advanced is coming, and it may represent the company’s first attempt to charge for an AI chatbot.

And why not? The Red Alert hooted because Microsoft’s 2022 announcement of its OpenAI tie up made clear that the Google was caught flat footed. Then, as 2022 flowed, the impact of ChatGPT-like applications made three facets of the Google outfit less murky: [a] Google was disorganized because it had Google Brain and DeepMind, which were expensive and confusing in the way Abbott and Costello’s “Who’s on First?” routine made people laugh. [b] The malaise of a cooling technology frenzy yielded to AI craziness, which translated into some people saying, “Hey, I can use this stuff for answering questions.” Oh, oh, the search advertising model took a bit of a blindside chop block. And [c] Google found itself on the wrong side of assorted legal actions, creating a model for other legal entities to explore, probe, and probably use to extract Google’s life blood: Money. Imagine Google using its data to develop effective subscription campaigns. Wow.

And, the final Google gem is that Google wants to behave like a nation state. “Google Wrote a Robot Constitution to Make Sure Its New AI Droids Won’t Kill Us” aims to set the White House and other pretenders to real power straight. Shades of Isaac Asimov’s Three Laws of Robotics. The write up reports:

DeepMind programmed the robots to stop automatically if the force on its joints goes past a certain threshold and included a physical kill switch human operators can use to deactivate them.

You have to embrace the ethos of a company which does not want its “inventions” to kill people. For me, the message is one that some governments’ officials will hear: Need a machine to perform warfighting tasks?

Small gems, but gems nonetheless. AGY, please keep ‘em coming.

Stephen E Arnold, January 9, 2024

Remember Ike and the MIC: He Was Right

January 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

It used to be common for departing Pentagon officials and retiring generals to head for weapons makers like Boeing and Lockheed Martin. But the hot new destination is venture capital firms, according to the article, “New Spin on a Revolving Door: Pentagon Officials Turned Venture Capitalists” at DNYUZ. We learn:

“The New York Times has identified at least 50 former Pentagon and national security officials, most of whom left the federal government in the last five years, who are now working in defense-related venture capital or private equity as executives or advisers. In many cases, The Times confirmed that they continued to interact regularly with Pentagon officials or members of Congress to push for policy changes or increases in military spending that could benefit firms they have invested in.”

Yes, pressure from these retirees-turned-venture-capitalists has changed the way agencies direct their budgets. It has also achieved advantageous policy changes: The Defense Innovation Unit now reports directly to the defense secretary. Also, the prohibition against directing small-business grants to firms with more than 50% VC funding has been scrapped.

In one way this trend could be beneficial: instead of lobbying for federal dollars to flow into specific companies, venture capitalists tend to advocate for investment in certain technologies. That way, they hope, multiple firms in which they invest will profit. On the other hand, the nature of venture capitalists means more pressure on Congress and the military to send huge sums their way. Quickly and repeatedly. The article notes:

“But not everyone on Capitol Hill is pleased with the new revolving door, including Senator Elizabeth Warren, Democrat of Massachusetts, who raised concerns about it with the Pentagon this past summer. The growing role of venture capital and private equity firms ‘makes President Eisenhower’s warning about the military-industrial complex seem quaint,’ Ms. Warren said in a statement, after reviewing the list prepared by The Times of former Pentagon officials who have moved into the venture capital world. ‘War profiteering is not new, but the significant expansion risks advancing private financial interests at the expense of national security.’”

Senator Warren may have a point: the article specifies that many military dollars have gone to projects that turned out to be duds. A few have been successful. See the write-up for those details. This moment in geopolitics is an interesting time for this change. Where will it take us?

Cynthia Murrell, January 9, 2024

Is Philosophy Irrelevant to Smart Software? Think Before Answering, Please

January 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I listened to Lex Fridman’s interview with the founder of Extropic. The company is into smart software and “inventing” a fresh approach to the plumbing required to make AI more like humanoids.

As I listened to the questions and answers, three factoids stuck in my mind:

- Extropic’s desire to go really fast is a conscious decision shared among those involved with the company; that is, we know who wants to go fast because they work there or are otherwise involved with the firm. (I am not going to argue about the upside and downside of “going fast.” That will be another essay.)

- The downstream implications of the Extropic vision are secondary to the benefits of finding ways to avoid concentration of AI power. I think the idea is that absolute power produces outfits like the Google-type firms which are bedeviling competitors, users, and government authorities. Going fast is not a thrill for processes that require going slow.

- The decisions Extropic’s founder has made are bound up in a world view, personal behaviors for productivity, interesting foods, and learnings accreted over a stellar academic and business career. In short, Extropic embodies a philosophy.

Philosophy, therefore, influences decisions. So we come to my topic in this essay. I noted two different write ups about how informed people take decisions. I am not going to refer to philosophers popular in introductory college philosophy classes. I am going to ignore the uneven treatment of philosophers in Will and Ariel Durant’s Story of Philosophy. Nah. I am going with state of the art modern analysis.

The first online article I read is a survey (knowledge product) of the estimable IBM / Watson outfit or a contractor. The relatively current document is “CEO Decision Making in the Age of AI.” The main point of the document in my opinion is summed up in this statement from a computer hardware and services company:

Any decision that makes its way to the CEO is one that involves high degrees of uncertainty, nuance, or outsize impact. If it was simple, someone else— or something else—would do it. As the world grows more complex, so does the nature of the decisions landing on a CEO’s desk.

But how can a CEO decide? The answer is, “Rely on IBM.” I am not going to recount the evolution (perhaps devolution) of IBM. I will skip the uncomfortable stories about shedding old employees (the term dinobaby originated at IBM according to one former I’ve Been Moved veteran). I will not explain IBM’s decisions about chip fabrication, its interesting hiring of individuals who might have retained some fondness for the land of their fathers and mothers, or the fancy dancing required to keep mainframes as a big money pump. Nope.

The point is that IBM is positioning itself as a thought leader, a philosopher of smart software, technology, and management. I find this interesting because IBM, like some Google-type companies, is a case example of management shortcomings. These same shortcomings are swathed in weird jargon and buzzwords which are bent to one end: Generating revenue.

Let me highlight one comment from the 27 page document and urge you to read it when you have a few moments free. Here’s the one passage I will use as a touchstone for “decision making”:

The majority of CEOs believe the most advanced generative AI wins.

Oh, really? Is smart software sufficiently mature? That’s news to me. My instinct is that it is new information to many CEOs as well.

The second essay about decision making is from an outfit named Ness Labs. That essay is “The Science of Decision-Making: Why Smart People Do Dumb Things.” The structure of this essay is more along the lines of a consulting firm’s white paper. The approach contrasts with IBM’s free-floating global survey document.

The obvious implication is that if smart people are making dumb decisions, smart software can solve the problem. Extropic would probably agree and, were the IBM survey data accurate, “most CEOs” buy into a ride on the AI bandwagon.

The Ness Labs’ document includes this statement which in my view captures the intent of the essay. (I suggest you read the essay and judge for yourself.)

So, to make decisions, you need to be able to leverage information to adjust your actions. But there’s another important source of data your brain uses in decision-making: your emotions.

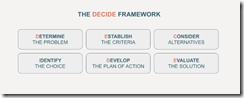

Ah, ha, logic collides with emotions. But to fix the “problem,” Ness Labs provides a diagram created in 2008 (a bit before the January 2022 Microsoft OpenAI marketing fireworks):

Note that “decide” is a mnemonic device intended to help me remember each of the items. I learned this technique in the fourth grade when I had to memorize the names of the Great Lakes. No one has ever asked me to name the Great Lakes by the way.

Okay, what we have learned is that IBM has survey data backing up the idea that smart software is the future. Those data, if on the money, validate the go-go approach of Extropic. Plus, Ness Labs provides a “decider model” which can be used to create better decisions.

I concluded that philosophy is less important than fostering a general message that says, “Smart software will fix up dumb decisions.” I may be over simplifying, but the implicit assumptions about the importance of artificial intelligence, the reliability of the software, and the allegedly universal desire of big time corporate management to adopt it are treated as not worth worrying about.

Why is the cartoon philosopher worrying? I think most of this stuff is a poorly made road on which those jockeying for power and money want to drive their most recent knowledge vehicles. My tip? Look before crossing that information superhighway. Speeding myths can be harmful.

Stephen E Arnold, January 8, 2024

AI Ethics: Is That What Might Be Called an Oxymoron?

January 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.

MSN.com presented me with this story: “OpenAI and Microsoft on Trial — Is the Clash with the NYT a Turning Point for AI Ethics?” I can answer this question, but that would spoil the entertainment value of my juxtaposition of this write up with the quasi-scholarly list of business start up resources. Why spoil the fun?

Socrates is lecturing at a Fancy Dan business school. The future MBAs are busy scrolling TikTok, pitching ideas to venture firms, and scrolling JustBang.com. Viewing this sketch, it appears that ethics and deep thought are not as captivating as mobile devices and having fun. Thanks, MSFT Copilot. Two tries and a good enough image.

The article asks a question which I find wildly amusing. The “on trial” write up states in 21st century rhetoric:

The lawsuit prompts critical questions about the ownership of AI-generated content, especially when it comes to potential inaccuracies or misleading information. The responsibility for losses or injuries resulting from AI-generated content becomes a gray area that demands clarification. Also, the commercial use of sourced materials for AI training raises concerns about the value of copyright, especially if an AI were to produce content with significant commercial impact, such as an NYT bestseller.

For more than two decades online outfits have been sucking up information which is usually slapped with the bright red label “open source information.”

The “on trial” essay says:

The future of AI and its coexistence with traditional media hinges on the resolution of this legal battle.

But what about ethics? The “on trial” write up dodges the ethics issue. I turned to a go-to resource about ethics. No, I did not look at the papers of the Harvard ethics professor who allegedly made up data for ethics research. Ho ho ho. Nope. I went to the Enchanting Trader and its 4000+ Essential Business Startup Database.

I displayed the full list of resources and ran a search for the word “ethics.” There was one hit to “Will Joe Rogan Ever IPO?” Amazing.

What I concluded is that “ethics” is not number one with a bullet among the resources of the 4000+ essential business start up items. It strikes me that a single trial about smart software is unlikely to resolve “ethics” for AI. If it does, will the resolution have the legs that Socrates’ musings have had? More than likely, most people will ask, “Who is Socrates?” or “What the heck are ethics?”

Stephen E Arnold, January 5, 2024

YouTube: Personal Views, Policies, Historical Information, and Information Shaping about Statues

January 4, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I have never been one to tour ancient sites. Machu Picchu? Meh. The weird Roman temple in Nîmes? When’s lunch? The bourbon trail? You must be kidding me! I have a vivid memory of visiting the US Department of Justice building for a meeting, walking through the Hall of Justice, and seeing Lady Justice covered up. I heard that the drapery cost US$8,000. I did not laugh, nor did I make any comments about cover ups at that DoJ meeting or subsequent meetings. What a hoot! Other officials have covered up statues and possibly other disturbing things.

I recall the Deputy Administrator who escorted me and my colleague to a meeting remarking, “Yeah, Mr. Ashcroft has some deeply held beliefs.” Yep, personal beliefs, propriety, not offending those entering a US government facility, and a desire to preserve certain cherished values. I got it. And I still get it. Hey, who wants to lose a government project because some sculpture artist type did not put clothes on a stone statue?

Are large technology firms in a position to control, shape, propagandize, and weaponize information? If the answer is, “Sure”, then users are little more than puppets, right? Thanks, MSFT Copilot Bing thing. Good enough.

However, there are some people who do visit historical locations. Many of these individuals scrutinize the stone work, the carvings, and the difficulty of moving a 100 ton block from Point A (a quarry 50 miles away) to Point B (a lintel in the middle of nowhere). I, on the other hand, am ignorant of art because I skipped Art History in college. I am clueless about ancient history. (I took another useless subject, a math class.) And many of these individuals have deep-rooted beliefs about the “right way” to present information in the form of stone carvings.

Now let’s consider a YouTuber who shoots videos in temples in southeast Asia. The individual works hard to find examples of deep meanings in the carvings beyond what the established sacred texts present. His hobby horse, as I understand the YouTuber, is that ancient aliens, fantastical machines, and amazing constructions are what many carvings are “about.” Obviously if one embraces what might be received wisdom about ancient texts from India and neighboring countries, the presentation of statues with disturbing images and even more troubling commentary is a problem. I think this is the same type of problem that a naked statue in the US Department of Justice posed.

The YouTuber allegedly is Praveen Mohan, and his most recent video is “YouTube Will Delete Praveen Mohan Channel on January 31.” Mr. Mohan’s angle is to shoot a video of an ancient carving in a temple and suggest that the stone work conveys meanings orthogonal to the generally accepted story about giant temple carvings. From my point of view, I have zero clue if Mr. Mohan is on the money with his analyses or if he is like someone who thinks that Peruvian stone masons melted granite for Cusco’s walls. The point of the video is that taking pictures of historical sites and their carvings allegedly violates YouTube’s assorted rules, regulations, codes, mandates, and guidelines.

Mr. Mohan expresses the opinion that he will be banned, blocked, downchecked, punished, or made into a poster child for stone pornography or some similar punishment. He shows images which have been demonetized. He shows his “dashboard” with visual proof that he is in hot water with the Alphabet Google YouTube outfit. He shows notices stating that his videos violate copyright. Okay. Maybe a reincarnated stone mason from ancient times has hired a lawyer, contacted Google from a quantum world, and frightened the YouTube wizards? I don’t know.

Several questions arose when my team and I discussed this interesting video addressing YouTube’s actions toward Mr. Mohan. Let me share several with you:

- Is the alleged intentional action against Mr. Mohan motivated by Alphabet Google YouTube managers with roots in South Asia? Maybe a country like India? Maybe?

- Is YouTube going after Mr. Mohan because his videos about religious sites, icons, and architecture do indeed violate copyright? I thought India was reasonably aggressive in enforcing its laws. Has Alphabet Google YouTube decided to help out India and other countries whose ancient artisans created these works?

- Has Mr. Mohan created a legal problem for YouTube and the company is taking action to shore up its legal arguments should the naked statue matter end up in court?

- Is Mr. Mohan’s assertion about directed punishment accurate?

Obviously there are many issues in play. Should one try to obtain more clarification from Alphabet Google YouTube? That’s a great idea. Mr. Mohan may pursue it. However, will Google’s YouTube or the Alphabet senior management provide clarification about policies?

I will not hold my breath. But those statues covered up in the US Department of Justice reflected one person’s perception of what was acceptable. That’s something I won’t forget.

Stephen E Arnold, January 4, 2024