A Nice Way of Saying AI Will Crash and Burn

November 5, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read a write up last week. Today is October 27, 2025, and this dinobaby has a tough time keeping AI comments, analyses, and proclamations straight. The old fashioned idea of putting a date on each article or post is just not GenAI. As a dinobaby, I find this streamlining like Google dumping apostrophes from its mobile keyboard ill advised. I would say “stupid,” but one cannot doubt the wisdom of the quantumly supreme AI PR and advertising machine, can one? One cannot tell some folks that AI is a utility. It may be important, but the cost may be AI’s Achilles’ heel or the sword on which AI impales itself.

A young wizard built a wonder model aircraft. But it caught on fire and is burning. This is sort of sad. Thanks, ChatGPT. Good enough.

These and other thoughts flitted through my mind when I read “Surviving the AI Capex Boom.” The write up includes an abstract, and you can work through the 14 page document to get the inside scoop. I will assume you have read the document. Here are some observations:

- The “boom” is visible to anyone who scans technical headlines. The hype for AI is exceeded only by the money pumped into the next big thing. The problem is that the payoff from AI is underwhelming when compared to the amount of cash pumped into a sector relying on a single technical innovation or breakthrough: The “transformer” method. Fragile is not the word for the situation.

- The idea that there are cheap and expensive AI stocks is interesting. I am not convinced, however, that cheap and expensive are substantively different. Google has multiple lines of revenue. If AI fails, it has advertising and some other cute businesses. Amazon has trouble with just about everything at the moment. Meta is, how shall I phrase it, struggling with organizational issues that illustrate managerial issues. So there is Google and everyone else.

- OpenAI is a case study in an entirely new class of business activities. From announcing that erotica is just the thing for ChatGPT to sort of trying to invent the next iPhone, Sam AI-Man is a heck of a fund raising machine. Only his hyperbole power works as well. His love of circular deals means that he survives, or he does some serious damage to a number of fellow travelers. I say, “No thanks, Sam.”

- The social impact of flawed AI is beginning to take shape. The consequences will be unpleasant in many ways. One example: Mental health knock ons. But, hey, this is a tiny percentage, a rounding error.

Net net: I am not convinced that investing in AI at this time is the wise move for an 81 year old dinobaby. Sorry, Kai Wu. You will survive the AI boom. You are, from my viewpoint, a banker. Bankers usually win. But others may not enjoy the benefits you do.

Stephen E Arnold, November 5, 2025

OpenAI Explains the Valueless Job

November 5, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I spotted a Yahoo News story recycled from Futurism. The write up contains an allegedly true comment made by the outstanding professionals at OpenAI. “Sam Altman Says If Jobs Gets Wiped Out, Maybe They Weren’t Even “Real Work” to Start With” includes this statement but before I present it, I must remind you that the “new” Yahoo News is not the world champion of quickly resolving links. If you end up with a 404, contact the Yahooligans, not me. Now the quote:

… at OpenAI’s DevDay conference on Wednesday, Altman floated the idea that the work you do today, which might imminently be transformed or eliminated by AI, isn’t “real work.”

Let’s think about this.

First, work defines some people. If one’s work is valueless, that might annoy the people who do the work and enjoy it. Get enough of these people in one place and point out that they are valueless, and some excitement might ensue.

A large company values its individual employees. I know because the manager tells me so. Thanks, Venice ai. Good enough.

Second, when one questions the value of another person’s work, what does that reveal about the person making the assertion? Could it suggest a certain sense of superiority? Could that individual perceive the world as one of those wonky but often addled Greek gods? Could the individual have another type of mental issue?

Third, the idea that smart software, everything apps, Orbs, and World Networks will rule the world is not science fiction. Sam AI-Man and his team are busy working to make this utopia happen. Are there other ideas about what online services should provide to farmers, for instance?

The cited article states:

“The thing about that farmer,” Altman said, is not only that they wouldn’t believe you, but “they very likely would look at what you do and I do and say, ‘that’s not real work.’” This, Altman said, makes him feel “a little less worried” but “more worried in some other ways.” “If you’re, like, farming, you’re doing something people really need,” Altman explained. “You’re making them food, you’re keeping them alive. This is real work.” But the farmer would see our modern jobs as “playing a game to fill your time,” and therefore not a “real job.” “It’s very possible that if we could see those jobs of the future,” Altman said, we’d think “maybe our jobs were not as real as a farmer’s job, but it’s a lot more real than this game you’re playing to entertain yourself.”

Sam AI-Man is a philosopher for our time. That’s a high value job, right? I think obtaining investment dollars to build smart software is a real job. It is much more useful than doing a valueless job like farming. You eat, don’t you, Sam?

Stephen E Arnold, November 5, 2025

We Must Admire a $5 Trillion Outfit

November 5, 2025

The title of this piece refers to the old adage of not putting all of your eggs in one basket. It’s a popular phrase used by investors and translates to: diversify, diversify, diversify! Nvidia really needs to take that to heart, because despite having record-breaking sales in the last quarter, its top customer base is limited to three. Tom’s Hardware reports, “More Than 50% Of Nvidia’s Data Center Revenue Comes From Three Customers — $21.9 Billion In Sales Recorded From The Unnamed Companies.”

Business publication Sherwood reported that 53% of Nvidia’s sales are from three anonymous customers and they total $21.9 billion. Here’s where the old adage about eggs enters:

“This might not sound like a problem — after all, why complain if three different entities are handing you piles and piles of money — but concentrating the majority of your sales to just a handful of clients could cause a sudden, unexpected issue. For example, the company’s entire second-quarter revenue is around $46 billion, which means that Customer A makes up more than 20% of its sales. If this company were to suddenly vanish (say it decided to build its own chips, go with AMD, or a scandal forces it to cease operations), then it would have a massive impact on Nvidia’s cash flow and operations.”

The article then hypothesizes that the mysterious customers are Elon Musk, xAI, OpenAI, Oracle, and Meta. The company did lose sales in China because of President Trump’s actions, so the customers aren’t from Asia. Nvidia needs to diversify its client portfolio if it doesn’t want to sink when and if these customers head to greener pastures. With a $5 trillion value, how many green pastures await Nvidia? Just think of them and they will manifest themselves. That works.
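For anyone who wants to check the arithmetic behind the concentration worry, here is a minimal Python sketch that uses only the figures quoted above. It assumes the 53% refers to data center revenue, as the Tom’s Hardware headline states, and that “around $46 billion” is total company revenue for the quarter. The variable names are illustrative, and the derived numbers are back-of-the-envelope results, not reported figures.

```python
# Figures quoted in the write up, in billions of US dollars.
top_three_sales = 21.9     # sales attributed to the three unnamed customers
top_three_share = 0.53     # their stated share of data center revenue (assumed basis)
total_q2_revenue = 46.0    # "around $46 billion" for the whole company

# Data center revenue implied by the two figures above (derived, not reported).
implied_data_center_revenue = top_three_sales / top_three_share

# Share of total company revenue coming from the same three customers.
share_of_total = top_three_sales / total_q2_revenue

# "More than 20%" of sales for Customer A means at least this many dollars.
customer_a_floor = 0.20 * total_q2_revenue

print(f"Implied data center revenue: ${implied_data_center_revenue:.1f}B")
print(f"Top three as share of total revenue: {share_of_total:.1%}")
print(f"Customer A accounts for more than ${customer_a_floor:.1f}B")
```

On these assumptions, the three customers account for a bit under half of everything Nvidia sold in the quarter, which is the eggs-in-one-basket point in numeric form.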

Whitney Grace, November 5, 2025

Transformers May Face a Choice: The Junk Pile or Pizza Hut

November 4, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

I read a marketing collateral-type of write up in Venture Beat. The puffy delight carries this title “The Beginning of the End of the Transformer Era? Neuro-Symbolic AI Startup AUI Announces New Funding at $750M Valuation.” The transformer is a Googley thing. Obviously with many users of Google’s Googley AI, Google perceives itself as the Big Dog in smart software. Sorry, Sam AI-Man, Google really, really believes it is the leader; otherwise, why would Apple turn to Google for help with its AI challenges? Ah, you don’t know? Too bad, Sam, I feel for you.

Thanks, MidJourney. Good enough.

This write up makes clear that someone has $750 million reasons to fund a different approach to smart software. Contrarian brilliance or dumb move? I don’t know. The write up says:

AUI is the company behind Apollo-1, a new foundation model built for task-oriented dialog, which it describes as the "economic half" of conversational AI — distinct from the open-ended dialog handled by LLMs like ChatGPT and Gemini. The firm argues that existing LLMs lack the determinism, policy enforcement, and operational certainty required by enterprises, especially in regulated sectors.

But there’s more:

Apollo-1’s core innovation is its neuro-symbolic architecture, which separates linguistic fluency from task reasoning. Instead of using the most common technology underpinning most LLMs and conversational AI systems today — the vaunted transformer architecture described in the seminal 2017 Google paper "Attention Is All You Need" — AUI’s system integrates two layers:

Neural modules, powered by LLMs, handle perception: encoding user inputs and generating natural language responses.

A symbolic reasoning engine, developed over several years, interprets structured task elements such as intents, entities, and parameters. This symbolic state engine determines the appropriate next actions using deterministic logic.

This hybrid architecture allows Apollo-1 to maintain state continuity, enforce organizational policies, and reliably trigger tool or API calls — capabilities that transformer-only agents lack.
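To make the two-layer idea concrete, here is a toy Python sketch. It is not AUI’s implementation, and nothing in it comes from Apollo-1. The keyword parser is a stand-in for the LLM-powered neural layer, and the refund scenario, the `REFUND_LIMIT` policy, and every function name are hypothetical. The point is only that the next action is chosen by fixed rules over structured state, so the same inputs always produce the same action.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TaskState:
    """Structured state the symbolic layer keeps across turns."""
    intent: Optional[str] = None
    params: dict = field(default_factory=dict)


def parse_turn(text: str) -> dict:
    """Stand-in for the neural layer: turn free text into structured fields.

    A real system would call an LLM here; this stub uses keyword matching
    so the example runs without any model.
    """
    fields = {}
    words = text.lower().split()
    if "refund" in words:
        fields["intent"] = "refund"
    for word in words:
        if word.startswith("$") and word[1:].isdigit():
            fields["amount"] = int(word[1:])
    return fields


REFUND_LIMIT = 100  # hypothetical policy: larger refunds need a human


def next_action(state: TaskState, fields: dict) -> str:
    """Symbolic layer: deterministic rules pick the next action."""
    # Merge the newly parsed fields into the persistent state.
    if "intent" in fields:
        state.intent = fields["intent"]
    if "amount" in fields:
        state.params["amount"] = fields["amount"]

    # Fixed rule order: intent first, then required parameter, then policy.
    if state.intent != "refund":
        return "ask_intent"
    if "amount" not in state.params:
        return "ask_amount"
    if state.params["amount"] > REFUND_LIMIT:
        return "escalate_to_human"
    return "call_refund_api"


state = TaskState()
print(next_action(state, parse_turn("I want a refund")))
print(next_action(state, parse_turn("It was $250")))
```

The state object carries the intent from the first turn into the second, which is a small version of the “state continuity” the write up mentions.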

What’s important is that interest in an alternative to the Googley approach is growing. The idea is that maybe — just maybe — Google’s transformer is burning cash and not getting much smarter with each billion dollar camp fire. Consequently individuals with a different approach warrant a closer look.

The marketing oriented write up ends this way:

While LLMs have advanced general-purpose dialog and creativity, they remain probabilistic — a barrier to enterprise deployment in finance, healthcare, and customer service. Apollo-1 targets this gap by offering a system where policy adherence and deterministic task completion are first-class design goals.

Researchers around the world are working overtime to find a way to deliver smart software without the Mad Magazine economics of power, CPUs, and litigation associated with the Googley approach. When a practical breakthrough takes place, outfits mired in Googley methods may be working at a job their mothers did not envision for their progeny.

Stephen E Arnold, November 4, 2025

Medical Fraud Meets AI. DRG Codes Meet AI. Enjoy

November 4, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I have heard that some large medical outfits make use of DRG “chains” or “coding sequences.” I picked up this information when my team and I worked on what is called a “subrogation project.” I am not going to explain how subrogation works or what the mechanisms operating are. These are back office or invisible services that accompany something that seems straightforward. One doesn’t buy stock from a financial advisor; there is plumbing and plumbing companies that do this work. The hospital sends you a bill; there is plumbing and plumbing companies providing systems and services. To sum up, a hospital bill is often large, confusing, opaque, and similar to a secret language. Mistakes happen, of course. But often inflated medical bills do more to benefit the institution and its professionals than the person with the bill in his or her hand. (If you run into me at an online fraud conference, I will explain how the “chain” of codes works. It is slick and not well understood by many of the professionals who care for the patient. It is a toss up whether Miami or Nashville is the Florence of medical fancy dancing. I won’t argue for either city, but I would add that Houston and LA should be in the running for the most creative center of certain activities.)

“Grieving Family Uses AI Chatbot to Cut Hospital Bill from $195,000 to $33,000 — Family Says Claude Highlighted Duplicative Charges, Improper Coding, and Other Violations” contains some information that will be [a] good news for medical fraud investigators and [b] bad news for some health care providers and individual medical specialists in their practices. The person with the big bill had to joust with the provider to get a detailed, line item breakdown of certain charges. Once that anti-social institution provided the detail, it was time for AI.

The write up says:

Claude [Anthropic, the AI outfit hooked up with Google] proved to be a dogged, forensic ally. The biggest catch was that it uncovered duplications in billing. It turns out that the hospital had billed for both a master procedure and all its components. That shaved off, in principle, around $100,000 in charges that would have been rejected by Medicare. “So the hospital had billed us for the master procedure and then again for every component of it,” wrote an exasperated nthmonkey. Furthermore, Claude unpicked the hospital’s improper use of inpatient vs emergency codes. Another big catch was an issue where ventilator services are billed on the same day as an emergency admission, a practice that would be considered a regulatory violation in some circumstances.

Claude, the smart software, clawed through the data. The smart software identified certain items that required closer inspection. The AI helped the human using Claude to get the health care provider to adjust the bill.
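The duplicate-billing catch is simple to express in code. Here is a minimal Python sketch of the idea, assuming one has an itemized bill and a table saying which component charges are already covered by a master procedure. The codes, the bundle table, and the dollar amounts are made-up placeholders, not real billing codes or figures from the family’s bill, and this is not a description of how Claude did its analysis.

```python
# Hypothetical bundle table: a master procedure and the components it covers.
# The codes are placeholders, not real billing codes.
BUNDLES = {
    "MASTER-1": {"COMP-A", "COMP-B", "COMP-C"},
}


def find_double_billed(line_items):
    """Return (code, amount) pairs for components billed alongside their master.

    line_items is a list of (code, amount) tuples from an itemized bill.
    """
    billed_codes = {code for code, _ in line_items}
    flagged = []
    for master, components in BUNDLES.items():
        if master not in billed_codes:
            continue  # components billed on their own are not duplicates
        for code, amount in line_items:
            if code in components:
                flagged.append((code, amount))
    return flagged


# Illustrative bill with invented amounts.
bill = [
    ("MASTER-1", 50_000),
    ("COMP-A", 20_000),
    ("COMP-B", 15_000),
    ("OTHER-9", 1_200),
]

duplicates = find_double_billed(bill)
print(duplicates)
print("Amount to dispute:", sum(amount for _, amount in duplicates))
```

The hard part in real life is not the loop. It is getting the line item detail out of the provider and knowing which codes belong to which bundle.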

Why did the hospital make billing errors? Was it [a] intentional fraud programmed into the medical billing system; [b] an intentional chain of DRG codes tuned to bill as many items, actions, and services as possible within reason and applicable rules; or [c] a computer error? If you picked item c, you are correct. The write up says:

Once a satisfactory level of transparency was achieved (the hospital blamed ‘upgraded computers’), Claude AI stepped in and analyzed the standard charging codes that had been revealed.

Absolutely blame the problem on the technology people. Who issued the instructions to the technology people? Innocent MBAs and financial whiz kids who want to maximize their returns are not part of this story. Should they be? Of course not. Computer-related topics are for other people.

Stephen E Arnold, November 4, 2025

Google Is Really Cute: Push Your Content into the Jaws of Googzilla

November 4, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Google has a new, helpful, clever, and cute service just for everyone with a business Web site. “Google Labs’ Free New Experiment Creates AI-Generated Ads for Your Small Business” lays out the basics of Pomelli. (I think this word means knobs or handles.)

A Googley business process designed to extract money and data from certain customers. Thanks, Venice.ai. Good enough.

The cited article states:

Pomelli uses AI to create campaigns that are unique to your business; all you need to do is upload your business website to begin. Google says Pomelli uses your business URL to create a “Business DNA” that analyzes your website images to identify brand identity. The Business DNA profile includes tone of voice, color palettes, fonts, and pictures. Pomelli can also generate logos, taglines, and brand values.

Just imagine Google processing your Web site, its content, images, links, and entities like email addresses, phone numbers, etc. Then using its smart software to create an advertising campaign, ads, and suggestions for the amount of money you should / will / must spend via Google’s own advertising system. What a cute idea!

The write up points out:

Google says this feature eliminates the laborious process of brainstorming unique ad campaigns. If users have their own campaign ideas, they can enter them into Pomelli as a prompt. Finally, Pomelli will generate marketing assets for social media, websites, and advertisements. These assets can be edited, allowing users to change images, headers, fonts, color palettes, descriptions, and create a call to action.

How will those tireless search engine optimization consultants and Google certified ad reselling outfits react to this new and still “experimental” service? I am confident that [a] some will rationalize the wonderfulness of this service and sell advisory services about the automated replacement for marketing and creative agencies; [b] some will not understand that it is time to think about a substantive side gig because Google is automating basic business functions and plugging into the customer’s wallet with no pesky intermediary to shave off some bucks; and [c] others will watch as their own sales efforts become less and less productive and then go out of business because adaptation is hard.

Is Google’s idea original? No, Adobe has something called AI Foundry, according to the write up. Google is not into innovation. Need I remind you that Google advertising has some roots in the Yahoo garden in bins marked GoTo.com and Overture.com? Also, there is a bank account with some Google money from a settlement about certain intellectual property rights that Yahoo believed Google used as a source of business process inspiration.

As Google moves into automating hooks, it accrues several significant benefits which seem to stick up in Google’s push to help its users:

- Crawling costs may be reduced. The users will push content to Google. This may or may not be a significant factor, but the user who updates provides Google with timely information.

- The uploaded or pushed content can be piped into the Google AI system and used to inform the advertising and marketing confection Pomelli. Training data and ad prospects in one go.

- The automation of a core business function allows Google to penetrate more deeply into a business. What if that business uses Microsoft products? It strikes me that the Googlers will say, “Hey, switch to Google and you get advertising bonus bucks that can be used to reduce your overall costs.”

- The advertising process is a knob that Google can use to pull the user and his cash directly into the Google business process automation scheme.

As I said, cute and also clever. We love you, Google. Keep on being Googley. Pull those users’ knobs, okay.

Stephen E Arnold, November 4, 2025

News Flash: Software Has a Quality Problem. Insight!

November 3, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read “The Great Software Quality Collapse: How We Normalized Catastrophe.” What’s interesting about this essay is that the author cares about doing good work.

The write up states:

We’ve normalized software catastrophes to the point where a Calculator leaking 32GB of RAM barely makes the news. This isn’t about AI. The quality crisis started years before ChatGPT existed. AI just weaponized existing incompetence.

Marketing is more important than software quality. Right, rube? Thanks, Venice.ai. Good enough.

The bound phrase “weaponized existing incompetence” points to an issue in a number of knowledge-value disciplines. The essay identifies some issues the author has tracked; for example:

- Memory consumption in Google Chrome

- Windows 11 updates breaking the start menu and other things (printers, mice, keyboards, etc.)

- Security problems such as the long-forgotten CrowdStrike misstep that cost customers about $10 billion.

But the list of indifferent or incompetent coding leads to one stop on the information superhighway: Smart software. The essay notes:

But the real pattern is more disturbing. Our research found:

AI-generated code contains 322% more security vulnerabilities

45% of all AI-generated code has exploitable flaws

Junior developers using AI cause damage 4x faster than without it

70% of hiring managers trust AI output more than junior developer code

We’ve created a perfect storm: tools that amplify incompetence, used by developers who can’t evaluate the output, reviewed by managers who trust the machine more than their people.

I quite like the bound phrase “amplify incompetence.”

The essay makes clear that the wizards of Big Tech AI prefer to spend money on plumbing (infrastructure), not software quality. The write up points out:

When you need $364 billion in hardware to run software that should work on existing machines, you’re not scaling—you’re compensating for fundamental engineering failures.

The essay concludes that Big Tech AI as well as other software development firms should shift focus.

Several observations:

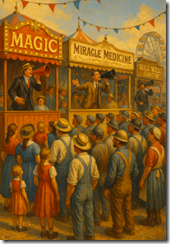

- Good enough is now a standard of excellence

- “Go fast” is better than “good work”

- The appearance of something is more important than its substance.

Net net: It’s TikTok, YouTube, and a carnival midway bundled into a new type of work environment.

Stephen E Arnold, November 3, 2025

Don Quixote Takes on AI in Research Integrity Battle. A La Vista!

November 3, 2025

Scientific publisher Frontiers asserts its new AI platform is the key to making the most of valuable research data. ScienceDaily crows, “90% of Science is Lost. This New AI Just Found It.” Wow, 90%. Now who is hallucinating? Turns out that percentage only applies if one is looking at new research submitted within Frontiers’ new system. Cutting out past and outside research really narrows the perspective. The press release explains:

“Out of every 100 datasets produced, about 80 stay within the lab, 20 are shared but seldom reused, fewer than two meet FAIR standards, and only one typically leads to new findings. … To change this, [Frontiers’ FAIR² Data Management Service] is designed to make data both reusable and properly credited by combining all essential steps — curation, compliance checks, AI-ready formatting, peer review, an interactive portal, certification, and permanent hosting — into one seamless process. The goal is to ensure that today’s research investments translate into faster advances in health, sustainability, and technology. FAIR² builds on the FAIR principles (Findable, Accessible, Interoperable and Reusable) with an expanded open framework that guarantees every dataset is AI-compatible and ethically reusable by both humans and machines.”

That does sound like quite the time- and hassle-saver. And we cannot argue with making it easier to enact the FAIR principles. But the system will only achieve its lofty goals with wide buy-in from the academic community. Will Frontiers get it? The write-up describes what participating researchers can expect:

“Researchers who submit their data receive four integrated outputs: a certified Data Package, a peer-reviewed and citable Data Article, an Interactive Data Portal featuring visualizations and AI chat, and a FAIR² Certificate. Each element includes quality controls and clear summaries that make the data easier to understand for general users and more compatible across research disciplines.”

The publisher asserts its system ensures data preservation, validation, and accessibility while giving researchers proper recognition. The press release describes four example datasets created with the system as well as glowing reviews from select researchers. See the post for those details.

Cynthia Murrell, November 3, 2025

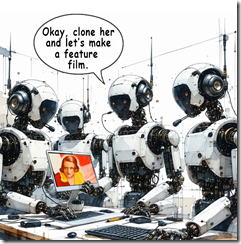

Hollywood Has to Learn to Love AI. You Too, Mr. Beast

October 31, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Russia’s leadership is good at talking, stalling, and doing what it wants. Is OpenAI copying this tactic? “OpenAI Cracks Down on Sora 2 Deepfakes after Pressure from Bryan Cranston, SAG-AFTRA” reports:

OpenAI announced on Monday [October 20, 2025] in a joint statement that it will be working with Bryan Cranston, SAG-AFTRA, and other actor unions to protect against deepfakes on its artificial intelligence video creation app Sora.

Talking, stalling or “negotiating,” and then doing what it wants may be within the scope of this sentence.

The write up adds via a quote from OpenAI leadership:

“OpenAI is deeply committed to protecting performers from the misappropriation of their voice and likeness,” Altman said in a statement. “We were an early supporter of the NO FAKES Act when it was introduced last year, and will always stand behind the rights of performers.”

This sounds good. I am not sure it will impress teens as much as Mr. Altman’s posture on erotic chats, but the statement sounds good. If I knew Russian, it would be interesting to translate the statement. Then one could compare the statement with some of those emitted by the Kremlin.

Producing a big budget commercial film or a Mr. Beast-type video will look very different in 18 to 24 months. Thanks, Venice.ai. Good enough.

Several observations:

- Mr. Altman has to generate cash or the appearance of cash. At some point investors will become pushy. Pushy investors can be problematic.

- OpenAI’s approach to model behavior does not give me confidence that the company can figure out how to engineer guard rails and then enforce them. Young men and women fiddling with OpenAI can be quite ingenious.

- The BBC ran a news program with the news reader as a deep fake. What does this suggest about a Hollywood producer facing financial pressure working out a deal with an AI entrepreneur facing even greater financial pressure? I think it means that humanoids are expendable first a little bit and then for the entire digital production. Gamification will be too delicious.

Net net: I think I know how this interaction will play out. Sam Altman, the big name stars, and the AI outfits know. The lawyers know. Who doesn’t know? Frankly everyone knows how digital disintermediation works. Just ask a recent college grad with a degree in art history.

Stephen E Arnold, October 31, 2025

Will AMD Deal Make OpenAI Less Deal Crazed? Not a Chance

October 31, 2025

Why does this deal sound a bit like moving money from dad’s coin jar to mom’s spare change box? AP News reports, “OpenAI and Chipmaker AMD Sign Chip Supply Partnership for AI Infrastructure.” We learn AMD will supply OpenAI with hardware so cutting edge it won’t even hit the market until next year. The agreement will also allow OpenAI to buy up about 10% of AMD’s common stock. The day the partnership was announced, AMD’s shares went up almost 24%, while rival chipmaker Nvidia’s went down 1%. The write-up observes:

“The deal is a boost for Santa Clara, Calif.-based AMD, which has been left behind by rival Nvidia. But it also hints at OpenAI’s desire to diversify its supply chain away from Nvidia’s dominance. The AI boom has fueled demand for Nvidia’s graphics processing chips, sending its shares soaring and making it the world’s most valuable company. Last month, OpenAI and Nvidia announced a $100 billion partnership that will add at least 10 gigawatts of data center computing power. OpenAI and its partners have already installed hundreds of Nvidia’s GB200, a tall computing rack that contains dozens of specialized AI chips within it, at the flagship Stargate data center campus under construction in Abilene, Texas. Barclays analysts said in a note to investors Monday that OpenAI’s AMD deal is less about taking share away from Nvidia than it is a sign of how much computing is needed to meet AI demand.”

No doubt. We are sure OpenAI will buy up all the high-powered graphics chips it can get. But after it and other AI firms acquire their chips, will there be any left for regular consumers? If so, expect their costs to remain sky high. Just one more resource AI firms are devouring with little to no regard for the impact on others.

Cynthia Murrell, October 31, 2025