Microsoft: Desperation or Inspiration? Copilot, Have We Lost an Engine?

November 10, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

Microsoft is an interesting member of the high-tech in-crowd. It is the oldest of the Big Players. It invented Bob and Clippy. It has not cracked the weirdness of Word’s numbering. Updates routinely kill services. I think it would be wonderful if Task Manager did not spawn multiple instances of itself.

Furthermore, Microsoft, the cloudy giant with oodles of cash, ignited the next-big-thing frenzy a couple of years ago with the announcement that Bob and Clippy would operate on AI steroids. Googzilla experienced the equivalent of traumatic stress injury and blinked red, yellow, and orange for months. Crisis bells rang. Klaxons interrupted Foosball games. Napping in pods became difficult.

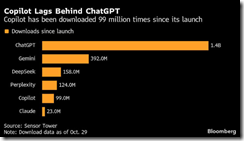

Imagine what’s happening at Microsoft now that this Sensor Tower chart is popping up in articles like “Microsoft Bets on Influencers Like Alix Earle to Close the Gap With ChatGPT.” Here’s the gasp inducer:

Source: The chart comes from Sensor Tower. It carries Bloomberg branding. But it appeared in an MSN.com article. Who crafted the data? How were the data assembled? What mathematical processes were used to produce such nice round numbers? I have no clue, but let’s assume those fat, juicy round numbers are “real,” not the weird imaginary “i” things electrical engineers enjoy each day.

The write up states:

Microsoft Corp., eager to boost downloads of its Copilot chatbot, has recruited some of the most popular influencers in America to push a message to young consumers that might be summed up as: Our AI assistant is as cool as ChatGPT. Microsoft could use the help. The company recently said its family of Copilot assistants attracts 150 million active users each month. But OpenAI’s ChatGPT claims 800 million weekly active users, and Google’s Gemini boasts 650 million a month. Microsoft has an edge with corporate customers, thanks to a long history of selling them software and cloud services. But it has struggled to crack the consumer market — especially people under 30.

Microsoft came up with a novel solution to its fifth-place spot in the smart software league table. Is Microsoft developing useful AI-infused services for Notepad? Yes. Is Microsoft pushing Copilot and its hallucinatory functions into Excel? Yes. Is Microsoft using Copilot to help partners code widgets for their customers to use in Azure? Yeah, sort of, but I have heard that Anthropic Claude has some Certified Partners as fans.

The Microsoft professionals, the leadership, and the legions of consultants have broken new marketing ground. Microsoft is paying social media influencers to pitch Microsoft Copilot as the one true smart software. Forget that “God is my copilot” meme. It is now “Meme makers are Microsoft’s Copilot.”

The write up includes this statement about this stunningly creative marketing approach:

“We’re a challenger brand in this area, and we’re kind of up and coming,” Consumer Chief Marketing Officer Yusuf Mehdi said.

Excuse me, Microsoft was first when it announced its deal with OpenAI a couple of years ago. Microsoft was the only game in town. OpenAI was a Silicon Valley start up with links to Sam AI-Man and Mr. Tesla. Now Microsoft, a giant outfit, is “up and coming.” No, I would suggest Microsoft is stalled and coming down.

A professor from that university / consulting outfit New York University is quoted in the cited write up. Here is that snippet:

Anindya Ghose, a marketing professor at New York University’s Stern School of Business, expressed surprised that Microsoft is using lifestyle influencers to market Copilot. But he can see why the company would be attracted to their cult followings. “Even if the perceived credibility of the influencer is not very high but the familiarity with the influencers is high, there are some people who would be willing to bite on that apple,” Ghose said in an interview.

The article presents proof that the Microsoft creative light saber has delivered. Here’s that passage:

Mehdi cited a video Earle posted about the new Copilot Groups feature as evidence that the campaign is working. “We can see very much people say, ‘Oh, I’m gonna go try that,’ and we can see the usage it’s driving.” The video generated 1.9 million views on Earle’s Instagram account and 7 million on her TikTok. Earle declined to comment for this story.

Following my non-creative approach, here are several observations:

- From first to fifth. I am not sure social media influencers are likely to address the reason the firm associated with Clippy occupies this spot.

- I am not sure Microsoft knows how to fix the “problem.” My hunch is that the Softies see the issue as one that is the fault of the users. It’s either the Russian hackers or the users of Microsoft products and services. Yeah, the problem is not ours.

- Microsoft, like Apple and Telegram, is struggling to graft smart software onto aging platforms, software, and systems. Google is doing a better job, but it is in second place. Imagine that. Google in the “place” position in the AI Derby. But Google has its own issues to resolve, and it is thinking about putting data centers in space, keeping its allegedly booming Web search business cranking along at top speed, and sucking enough cash from online advertising to pay for its smart software ambitions. Those wizards are busy. But Googzilla is in second place and coping with acute stress reaction.

Net net: The big players have put huge piles of casino chips in the AI poker game. Desperation takes many forms. The sport of extreme marketing is just one of the disorder’s manifestations. Watch it on TikTok-type services.

Stephen E Arnold, November 10, 2025

Train Models on Hostility Oriented Slop and You Get Happiness? Nope, Nastiness on Steroids

November 10, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Informed discourse, factual reports, and the type of rhetoric loved by Miss Spurling in 1959 can have a positive effect. I wasn’t sure at the time if I wanted to say Whoa! Nelly to my particular style of writing and speaking. She did her best to convert me from a somewhat weird 13-year-old into a more civilized creature. She failed, I fear.

A young student is stunned by the criticism of his approach to a report. An “F”. Failure. The solution is not to listen. Some AI vendors take the same approach. Thanks, Venice.ai, good enough.

When I read “Study: AI Models Trained on Clickbait Slop Result In AI Brain Rot, Hostility,” I thought about Miss Spurling and her endless supply of red pencils. Algorithms, it seems, have some of the characteristics of an immature young person. Feed that young person some unusual content, and you get some wild and crazy outputs.

The write up reports:

To see how these [large language] models would “behave” after subsisting on a diet of clickbait sewage, the researchers cobbled together a sample of one million X posts and then trained four different LLMs on varying mixtures of control data (long form, good faith, real articles and content) and junk data (lazy, engagement chasing, superficial clickbait) to see how it would affect performance. Their conclusion isn’t too surprising; the more junk data that is fed into an AI model, the lower quality its outputs become and the more “hostile” and erratic the model is …

But here’s the interesting point:

They also found that after being fed a bunch of ex-Twitter slop, the models didn’t just get “dumber”, they were (shocking, I know) far more likely to take on many of the nastier “personality traits” that now dominate the right wing troll platform …

The write up makes a point about the wizards creating smart software; to wit:

The problem with AI generally is a decidedly human one: the terrible, unethical, and greedy people currently in charge of it’s implementation (again, see media, insurance, countless others) — folks who have cultivated some unrealistic delusions about AI competency and efficiency (see this recent Stanford study on how rushed AI adoption in the workforce often makes people less efficient).

I am not sure that the highly educated experts at Google-type AI companies would agree. I did not agree with Miss Spurling. On many points, she was correct. Adolescent thinking produces some unusual humans as well as interesting smart software. I particularly like some of the newer use cases; for instance, driving some people wacky or appealing to the underbelly of human behavior.

Net net: Scale up, shut up, and give up.

Stephen E Arnold, November 10, 2025

News Flash: Smart Software Can Be Truly Stupid about News

November 10, 2025

Chatbots Are Wrong About News

Do you receive your news via your favorite chatbot? It doesn’t matter which one is your favorite, because nearly half the time you’re being served misinformation. While the Trump and Biden administrations went crazy about the spread of fake news, they weren’t entirely wrong. ZDNet reports in “Get Your News From AI? Watch Out – It’s Wrong Almost Half The Time.”

The European Broadcasting Union and the BBC discovered that popular chatbots are incorrectly reporting the news. The BBC and the EBU had journalists in eighteen countries, working in fourteen languages, evaluate news responses from Perplexity, Copilot, Gemini, and ChatGPT. Here are the results:

“The researchers found that close to half (45%) of all of the responses generated by the four AI systems “had at least one significant issue,” according to the BBC, while many (20%) “contained major accuracy issues,” such as hallucination — i.e., fabricating information and presenting it as fact — or providing outdated information. Google’s Gemini had the worst performance of all, with 76% of its responses containing significant issues, especially regarding sourcing.”

The implications are obvious to the smart thinker: distorted information. Thankfully, Reuters found that only 7% of adults received all of their news from AI sources, although the figure rose to 15% for those under age twenty-five. More than three-quarters of adults never turn to chatbots for their news.

Why is anyone surprised by this? More importantly, why aren’t the big news outlets, including those on the far left and right, sharing this information? I thought these companies were worried about going out of business because of chatbots. Why aren’t they reporting on this story?

Whitney Grace, November 7, 2025

How Frisky Will AI Become? Users Like Frisky… a Lot

November 7, 2025

OpenAI promised to create technology that would benefit humanity, much like Google and other Big Tech companies. We know how that has gone. Much to the worry of its team, OpenAI released a TikTok-like app powered by AI. What could go wrong? Well, we’re still waiting to see the fallout, but TechCrunch shares the possibilities in the story “OpenAI Staff Grapples With The Company’s Social Media Push.”

OpenAI is headed into social media because that is where the money is. The push for social media comes from OpenAI’s bigwigs. The new TikTok-like app is called Sora 2, and it has an AI-based feed. Past and present employees are concerned about how Sora 2 will benefit humanity. They are worried that Sora 2 will feed consumers more AI slop, the equivalent of digital brain junk food, instead of benefiting humanity. Even OpenAI CEO Sam Altman is astounded by the amount of money allocated to AI social media projects:

“‘We do mostly need the capital for build [sic] AI that can do science, and for sure we are focused on AGI with almost all of our research effort,’ said Altman. ‘It is also nice to show people cool new tech/products along the way, make them smile, and hopefully make some money given all that compute need.’ ‘When we launched chatgpt there was a lot of “who needs this and where is AGI,”’ Altman continued. ‘[R]eality is nuanced when it comes to optimal trajectories for a company.’”

Here’s another quote about the negative effects of AI:

“‘One of the big mistakes of the social media era was [that] the feed algorithms had a bunch of unintended, negative consequences on society as a whole, and maybe even individual users. Although they were doing the thing that a user wanted — or someone thought users wanted — in the moment, which is [to] get them to, like, keep spending time on the site.’”

Let’s start taking bets about how long it will take the bad actors to transform Sora 2 into a quite frisky service.

Whitney Grace, November 7, 2025

Copilot in Excel: Brenda Has Another Problem

November 6, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

Simon Willison posted an interesting snippet from a person whom I don’t know. The person’s handle is @belligerentbarbies, and the person is a member of TikTok. You can find the post “Brenda” on Simon Willison’s Weblog. The main idea in the write up is that a person in accounting or finance assembles an Excel worksheet. In many large outfits, the worksheets are templates or set up to allow the enthusiastic MBA to plug in a few numbers. Once the numbers are “in,” the bright overachiever hits Shift F9 to recalculate the single worksheet. If it looks okay, the MBA mashes F9 and updates the linked spreadsheets. Bingo! A financial services firm has produced the numbers needed to slap into a public or private document. But, and here’s the best part…

Thanks, Venice.ai. Good enough.

Before the document leaves the office, a senior professional who has not used Excel checks the spreadsheet. Experience dictates looking at certain important cells of data. If those pass the smell test, then the private document is moved to the next stage of its life. It goes into production so that the high net worth individual, the clued-in business reporter, the big customers, and people in the CEO’s bridge group get the document.

Because those “reports” can move a stock up or down or provide useful information about a deal that is not put into a number context, most outfits protect Excel spreadsheets. Heck, even the fill-in-the-blank templates are big time secrets. Each of the investment firms for which I worked over the years follows the same process. Each uses its own custom-tailored, carefully structured set of formulas to produce the quite significant reports, opinions, and marketing documents.

Brenda knows Excel. Most Big Dogs know some Excel, but as these corporate animals fight their way to Carpetland, those Excel skills atrophy. Now Simon Willison’s post enters and references Copilot. The post is insightful because it highlights a process gap. Specifically, if Copilot is involved in an Excel spreadsheet, Copilot might, just might in this hypothetical, make a change. The Big Dog in Carpetland does not catch the change. The Big Dog just sniffs a few spots in the forest or jungle of numbers.

Before Copilot, Brenda or a similar professional was involved. Copilot may make it possible to ignore Brenda and push the report out. If the financial whales make money, life is good. But what happens if the Copilot-tweaked worksheet is hallucinating? I am not talking about a few disco biscuits but about mind-warping errors whipped up because AI is essentially operating at “good enough” levels of excellence.

Bad things transpire. As interesting as this problem is to contemplate, there’s another angle that the Simon Willison post did not address. What if Copilot is phoning home? The idea is that user interaction with a cloud-based service is designed to process data and add those data to its training process. The AI wizards have some jargon for this “learn as you go” approach.

The issue is, however, what happens if that proprietary spreadsheet or the “numbers” about a particular company find their way into a competitor’s smart output? What if Financial firm A does not know this “process” has compromised the confidentiality of a worksheet. What if Financial firm B spots the information and uses it to advantage firm B?

Where’s Brenda in this process? Who? She’s been RIFed. What about Big Dog in Carpetland? That professional is clueless until someone spots the leak and the information ruins what was a calm day with no fires to fight. Now a burning Piper Cub is in the office. Not good, is it?

I know that Microsoft Copilot will be or is positioned as super secure. I know that hypotheticals are just that: Made up thought donuts.

But I think the potential for some knowledge leaking may exist. After all, Copilot, although marvelous, is not Brenda. Clueless leaders in Carpetland are not interested in fairy tales; they are interested in making money, reducing headcount, and enjoying days without a fierce fire ruining a perfectly good Louis XIV desk.

Net net: Copilot, how are you and Brenda communicating? What’s that? Brenda is not answering her company provided mobile. Wow. Bummer.

Stephen E Arnold, November 6, 2025

Fear in Four Flavors or What Is in the Closet?

November 6, 2025

This essay is the work of a dumb dinobaby. No smart software required.

AI fear. Are you afraid to resist the push to make smart software a part of your life? I think of AI as a utility, a bit like old fashioned enterprise search just on very expensive steroids. One never knows how that drug use will turn out. Will the athlete win trophies or drop from heart failure in the middle of an event?

The write up “Meet the People Who Dare to Say No to Artificial Intelligence” is a rehash of some AI tropes. What makes the write up stand up and salute is a single line in the article. (This is a link from Microsoft. If the link is dead, let one of its caring customer support chatbots know, not me.) Here it is:

Michael, a 36-year-old software engineer in Chicago who spoke on the condition that he be identified only by his first name out of fear of professional repercussions…

I find this interesting. A professional will not reveal his name for fear of “professional repercussions.” I think the subject is algorithms, not politics. I think the subject is neural networks, not racial violence. I think the subject is online, not the behavior of a religious figure.

Two roommates are afraid of a blue light. Very normal. Thanks, Venice.ai. Good enough.

Let’s think about the “fear” of talking about smart software.

I asked AI why a 35-year-old would experience fear. Here’s the short answer from the remarkably friendly, eager AI system:

- Biological responses to perceived threats,

- Psychological factors like imagination and past trauma,

- Personality traits,

- Social and cultural influences.

It seems to me that external and internal factors enter into fear. In the case of talking about smart software, what could be operating? Let me hypothesize for a moment.

First, the person may see smart software as posing a threat. Okay, that’s an individual perception. Everyone can have an opinion. But the fear angle strikes me as a displacement activity in the brain. Instead of thinking about the upside of smart software, the person afraid to talk about a collection of zeros and ones only sees doom and gloom. Okay, I sort of understand.

Second, the person may have some psychological problems. But software is not the same as a seven year old afraid there is a demon in the closet. We are back, it seems, to the mysteries of the mind.

Third, the person is fearful of zeros and ones because the person is afraid of many things. Software is just another fear trigger, like a person uncomfortable around little spiders who is afraid of a great big one like the tarantulas I had to kill with a piece of wood when my father wanted to drive his automobile into our garage in Campinas, Brazil. Tarantulas, it turned out, liked the garage because it was cool and out of the sun. I guess the garage was to them what a Philz Coffee is to an AI engineer in Silicon Valley.

Fourth, social and cultural influences cause a person to experience fear. I think of my neighbor, who was approached by a group of young people demanding money and her credit card. Her social group consists of 75-year-old females who play bridge. The youngsters were a group of teenagers hanging out in a parking lot in an upscale outdoor mall. Now my neighbor does not want to go to the outdoor mall alone. Nothing happened, but those social and cultural influences kicked in.

Anyway fear is real.

Nevertheless, I think smart software fear boils down to more basic issues. One, smart software will cause a person to lose his or her job. The job market is not good; therefore, fear of not paying bills, social disgrace, etc. kicks in. Okay, but it seems that learning about smart software might take the edge off.

Two, smart software may suck today, but it is improving rapidly. This is the seven year old afraid of the closet behavior. Tough love says, “Open the closet. Tell me what you see.” In most cases, there is no person in the closet. I did hear about a situation involving a third party hiding in the closet. The kid’s opening the door revealed the stranger. Stuff happens.

Three, if a person was raised in an environment in which fear was a companion, that behavior may carry forward. Boo.

Net net: What is in Mr. AI’s closet?

Stephen E Arnold, November 6, 2025

If You Want to Be Performant, Do AI or Try to Do AI

November 6, 2025

For firms that have invested heavily in AI only to be met with disappointment, three tech executives offer some quality spin. Fortune reports, “Experts Say the High Failure Rate in AI Adoption Isn’t a Bug, but a Feature.” The leaders expressed this interesting perspective at Fortune’s recent Most Powerful Women Summit. Writer Dave Smith reports:

“The panel discussion, titled ‘Working It Out: How AI Is Transforming the Office,’ tackled head-on a widely circulated MIT study suggesting that approximately 95% of enterprise AI pilots fail to pay off. The statistic has fueled doubts about whether AI can deliver on its promises, but the three panelists—Amy Coleman, executive vice president and chief people officer at Microsoft; Karin Klein, founding partner at Bloomberg Beta; and Jessica Wu, cofounder and CEO of Sola—pushed back forcefully on the narrative that failure signals fundamental problems with the technology. ‘We’re in the early innings,’ Klein said. ‘Of course, there’s going to be a ton of experiments that don’t work. But, like, has anybody ever started to ride a bike on the first try? No. We get up, we dust ourselves off, we keep experimenting, and somehow we figure it out. And it’s the same thing with AI.’”

Interesting analogy. Ideally, kiddos learn to ride on a cul-de-sac with supervision, not set loose on the highway. Shouldn’t organizations do their AI experimentation before making huge investments? Or before, say, basing high-stakes decisions in medicine, law enforcement, social work, or mortgage approvals on AI tech? Ethical experimentation calls for parameters, after all. Have those been trampled in the race to adopt AI?

Cynthia Murrell, November 6, 2025

AI Dreams Are Plugged into Big Rock Candy Mountain

November 5, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

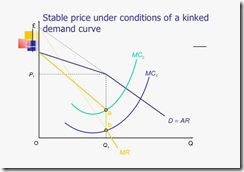

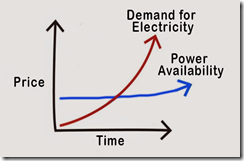

One of the niches in the power generation industry is demand forecasting. When I worked at Halliburton Nuclear, I sat in meetings. One feature of these meetings was diagrams. I don’t have any of these diagrams because there were confidentiality rules. I followed those. This is what some of the diagrams resembled:

Source: https://mavink.com/

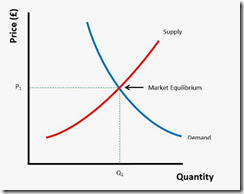

When I took a job at Booz, Allen, the firm had its own demand experts. The diagrams favored by one of the utility rate and demand experts looked like this. Note: Booz, Allen had rules, so the diagram comes from the cited source:

Source: https://vtchk.ru/photo/demand-curve/16

These curves speak volumes to the people who fund, engineer, and construct power generation facilities. The main idea for these semi-abstract curves is that balancing demand and supply is important. The price of electricity depends on figuring out the probable relationship among demand for power, the available supply, and the supply that will come online at estimated times in the future. The price people and organizations pay for electricity depends on these types of diagrams, the reams of data analysts crunch, and what a group of people sitting at a plastic table in a green conference room agree the curves mean.

A recent report from Turner & Townsend (a UK consulting outfit) identifies some trends in the power generation sector with some emphasis on the data centers required for smart software. You can work through the report on the Turner & Townsend Web site by clicking this link. The main idea is that the huge AI-centric data centers needed to power the Googley transformer-centric approach to smart software demand more electricity than is available.

The response to this from the big AI companies is, “We can put servers in orbit” and “We can build small nuclear reactors and park them near the data centers” and “We can buy turbines and use gas or other carbon fuels to power our data centers.” These are comments made by individuals who choose not to look at the wonky type of curves I show.

It takes time to build a conventional power generation facility. The legal process in the US has traditionally been difficult and expensive. A million dollars won’t even pay for a little environmental impact study. Lawyers can cost more than a rail car loaded with specialized materials required for nuclear reactors. The PR required to place a baby nuke in Memphis next to a big data center may be more expensive than buying some Google ads and hiring a local marketing firm. Some people may not feel comfortable with a new, unproven baby nuke in their neighborhood. Coal- and oil-fired plants invite certain types of people to mount noisy and newsworthy protests. Putting a data center in orbit poses some additional paperwork challenges and a little bit of extra engineering work.

So what does the big, detailed report show? Here’s my diagram of the power, demand, and price future with those giant data centers in the US. You can work out the impact on non-US installations:

This diagram was whipped up by Stephen E Arnold.

The message in these curves reflects one of the “challenges” identified in the Turner & Townsend report: Cost.

What does this mean to those areas of the US where Big AI Boys plan to build large data centers? Answer: Their revenue streams need to be robust and their funding sources have open wallets.

What does this mean for the cost of electricity to consumers and run-of-the-mill organizations? Answer: Higher costs, brown outs, and fancy new meters that can adjust prices and current on the fly. Crank up the data center, and the Super Bowl broadcast may not be in some homes.

What does this mean for ubiquitous, 24×7 AI availability in software, home appliances, and mobile devices? Answer: Higher costs, brown outs, and degraded services.

How will the incredibly self aware, other centric, ethical senior managers at AI companies respond? Answer: No problem. Think thorium reactors and data centers in space.

Also, the cost of building new power generation facilities is not a problem for some Big Dogs. The time required for licensing, engineering, and construction? No problem. Just go fast, break things.

And overcoming resistance to turbines next to a school or a small thorium reactor in a subdivision? Hey, no problem. People will adapt or they can move to another city.

What about the engineering and the innovation? Answer: Not to worry. We have the smartest people in the world.

What about common sense and self awareness? Response: Yo, what do those terms mean? Are they synonyms for disco biscuits?

The next big thing lives on Big Rock Candy Mountain.

Stephen E Arnold, November 5, 2025

A Nice Way of Saying AI Will Crash and Burn

November 5, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read a write up last week. Today is October 27, 2025, and this dinobaby has a tough time keeping AI comments, analyses, and proclamations straight. The old fashioned idea of putting a date on each article or post is just not GenAI. As a dinobaby, I find this streamlining, like Google dumping apostrophes from its mobile keyboard, ill-advised. I would say “stupid,” but one cannot doubt the wisdom of the quantumly supreme AI PR and advertising machine, can one? One cannot tell some folks that AI is a utility. It may be important, but the cost may be AI’s Achilles’ heel or the sword on which AI impales itself.

A young wizard built a wonder model aircraft. But it caught on fire and is burning. This is sort of sad. Thanks, ChatGPT. Good enough.

These and other thoughts flitted through my mind when I read “Surviving the AI Capex Boom.” The write up includes an abstract, and you can work through the 14-page document to get the inside scoop. I will assume you have read the document. Here are some observations:

- The “boom” is visible to anyone who scans technical headlines. The hype for AI is exceeded only by the money pumped into the next big thing. The problem is that the payoff from AI is underwhelming when compared to the amount of cash pumped into a sector relying on a single technical innovation or breakthrough: The “transformer” method. Fragile is not the word for the situation.

- The idea that there are cheap and expensive AI stocks is interesting. I am, however, not sure that cheap and expensive are substantively different. Google has multiple lines of revenue. If AI fails, it has advertising and some other cute businesses. Amazon has trouble with just about everything at the moment. Meta is — how shall I phrase it — struggling with organizational issues that illustrate managerial issues. So there is Google and everyone else.

- OpenAI is a case study in an entirely new class of business activities. From announcing that erotica is just the thing for ChatGPT to sort of trying to invent the next iPhone, Sam AI-Man is a heck of a fund raising machine. Only his hyperbole power works as well. His love of circular deals means that either he survives or he does some serious damage to a number of fellow travelers. I say, “No thanks, Sam.”

- The social impact of flawed AI is beginning to take shape. The consequences will be unpleasant in many ways. One example: Mental health knock ons. But, hey, this is a tiny percentage, a rounding error.

Net net: I am not convinced that investing in AI at this time is the wise move for an 81-year-old dinobaby. Sorry, Kai Wu. You will survive the AI boom. You are, from my viewpoint, a banker. Bankers usually win. But others may not enjoy the benefits you do.

Stephen E Arnold, November 5, 2025

OpenAI Explains the Valueless Job

November 5, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I spotted a Yahoo News story recycled from Futurism. The write up contains an allegedly true comment made by the outstanding professionals at OpenAI. “Sam Altman Says If Jobs Gets Wiped Out, Maybe They Weren’t Even ‘Real Work’ to Start With” includes this statement. Before I present it, I must remind you that the “new” Yahoo News is not the world champion of quickly resolving links. If you end up with a 404, contact the Yahooligans, not me. Now the quote:

… at OpenAI’s DevDay conference on Wednesday, Altman floated the idea that the work you do today, which might imminently be transformed or eliminated by AI, isn’t “real work.”

Let’s think about this.

First, work defines some people. If one’s work is valueless, that might annoy the people who do the work and enjoy it. Get enough of these people in one place and point out that they are valueless, and some excitement might ensue.

A large company values its individual employees. I know because the manager tells me so. Thanks, Venice.ai. Good enough.

Second, when one questions the value of another person’s work, what does that reveal about the person making the assertion? Could it suggest a certain sense of superiority? Could that individual perceive the world as one of those wonky but often addled Greek gods? Could the individual have another type of mental issue?

Third, the idea that smart software, everything apps, Orbs, and World Networks will rule the world is not science fiction. OpenAI and its team are busy working to make this utopia happen. Are there other ideas about what online services should provide to farmers, for instance?

The cited article states:

“The thing about that farmer,” Altman said, is not only that they wouldn’t believe you, but “they very likely would look at what you do and I do and say, ‘that’s not real work.’” This, Altman said, makes him feel “a little less worried” but “more worried in some other ways.” “If you’re, like, farming, you’re doing something people really need,” Altman explained. “You’re making them food, you’re keeping them alive. This is real work.” But the farmer would see our modern jobs as “playing a game to fill your time,” and therefore not a “real job.” “It’s very possible that if we could see those jobs of the future,” Altman said, we’d think “maybe our jobs were not as real as a farmer’s job, but it’s a lot more real than this game you’re playing to entertain yourself.”

Sam AI-Man is a philosopher for our time. That’s a high-value job, right? I think obtaining investment dollars to build smart software is a real job. It is much more useful than doing a valueless job like farming. You eat, don’t you, Sam?

Stephen E Arnold, November 5, 2025