Microsoft Could Be a Microsnitch

November 14, 2025

Remember when you were younger and the single threat of, “I’m going to tell!” was enough to send chills through your body? Now Microsoft plans to do the same thing except on an adult level. Lifehacker shares that, “Microsoft Teams Will Soon Tell Your Boss When You’re Not In The Office.” The article makes an accurate observation that since the pandemic most jobs can be done from anywhere with an Internet connection.

Since the end of quarantine, offices are fighting to get their workers back into physical workspaces. Some of them have implemented hybrid working, while others have become more extreme by counting clock-ins and badge swipes. Microsoft is adding its own technology to the fight by making it possible to track remote workers.

“As spotted by Tom’s Guide, Microsoft Teams will roll out an update in December that will have the option to report whether or not you’re working from your company’s office. The update notes are sparse on details, but include the following: ‘When users connect to their organization’s [wifi], Teams will soon be able to automatically update their work location to reflect the building they’re working from. This feature will be off by default. Tenant admins will decide whether to enable it and require end-users to opt-in.’”

Microsoft whitewashed the new feature by suggesting employees use it to find their teammates. The article’s author says it all:

“But let’s be real. This feature is also going to be used by companies to track their employees, and ensure that they’re working from where they’re supposed to be working from. Your boss can take a look at your Teams status at any time, and if it doesn’t report you’re working from one of the company’s buildings, they’ll know you’re not in the office. No, the feature won’t be on by default, but if your company wants to, your IT can switch it on, and require that you enable it on your end as well.”

It is ridiculous to demand that employees return to offices, but at the same time many workers aren’t actually doing their job. The professionals are quiet quitting, pretending to do the work, and ignoring routine tasks. Surveillance seems to be a solution of interest.

It would be easier if humans were just machines. You know, meat AI systems. Bummer, we’re human. If we can get away with something, many will. But is Microsoft going too far here to make sales to ineffective “leadership”? Workers aren’t children, and the big tech company is definitely taking the phrase, “I’m going to tell!” to heart.

Whitney Grace, November 14, 2025

Walmart Plans To Change Shopping With AI

November 14, 2025

Walmart shocked the world when it deployed robots to patrol aisles. The purpose of the robots wasn’t to steal jobs but to report outages and messes to employees. Walmart has since backtracked on the robots, but it is turning to AI to enhance and forever alter the consumer shopping experience. According to MSN, “Walmart’s Newest Plan Could Change How You Shop Forever.”

Walmart plans to make the shopping experience smarter by using OpenAI’s ChatGPT. Samsung is also part of this partnership that will offer product suggestions to shoppers of both companies. The idea of incorporating ChatGPT takes the search bar and search query pattern to the next level:

“Far from just a search bar and a click experience, Walmart says the AI will learn your habits, can predict what you need, and even plan your shopping before realizing you’re in need of it. ‘Through AI-first shopping, the retail experience shifts from reactive to proactive as it learns, plans, and predicts, helping customers anticipate their needs before they do,’ Walmart stated in the release.”

Amazon, Walmart, and other big retailers have been tracking consumer habits for years and sending them coupons and targeted ads. This is a more intrusive way to make consumers spend money. What will they think of next? How about Kroger’s smart price displays? These can deliver dynamic prices to “help” the consumer and add a bit more cash to the retailer. Yeah, AI is great.

Whitney Grace, November 14, 2025

Sweet Dreams of Data Centers for Clippy Version 2: The Agentic Operation System

November 13, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

If you have good musical recall, I want you to call up the tune for “Sweet Dreams (Are Made of This)” by the Eurythmics. Okay, with that sound track buzzing through your musical memory, put it on loop. I want to point you to two write ups about Microsoft’s plans for a global agentic operating system and its infrastructure. From hiring social media influencers to hitting the podcast circuit, Microsoft is singing its own songs to its often reluctant faithful. Let’s turn down “Sweet Dreams” and crank up the MSFT chart climbers.

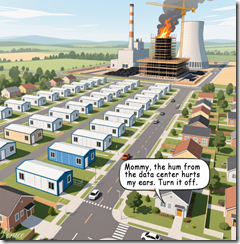

Trans-continental AI infrastructure. Will these be silent, reduce pollution, and improve the life of kids who live near the facilities? Of course, because some mommies will say, “Just concentrate and put in your ear plugs. I am not telling you again.” Thanks, Venice. Good enough after four tries. Par for the AI course.

The first write up is by the tantalizingly named consulting firm doing business as SemiAnalysis. I smile every time I think about how some of my British friends laugh when they see a reference to a semi-truck. One quipped, “What, you don’t have complete trucks in the US?” That same individual would probably say in response to the company name SemiAnalysis, “What, you don’t have a complete analysis in the US?” I have no answer to either question, but “SemiAnalysis” does strike me as more amusing a moniker than Booz, Allen, McKinsey, or Bain.

You can find a 5000 word plus segment of a report with the remarkable title “Microsoft’s AI Strategy Deconstructed – From Energy to Tokens” online. To get the complete report, presumably not the semi report, one must subscribe. Thus, the document is content marketing, but I want to highlight three aspects of the MBA-infused write up. These reflect my biases, so if you are not into dinobaby think, click away, gentle reader.

The title “Microsoft’s AI Strategy Deconstructed” is a rah rah rah for Microsoft. I noted:

- Microsoft was first, now it is fifth, and it will be number one. The idea is that the inventor of Bob and Clippy was the first out of the gate with “AI is the future.” It stands fifth in terms of one survey’s ranking of usage. This “Microsoft’s AI Strategy Deconstructed” asserts that it is going to be a big winner. My standard comment to this blending of random data points and some brown nosing is, “Really?”

- Microsoft is building or at least promising to build lots of AI infrastructure. The write up does not address the very interesting challenge of providing power at a manageable cost to these large facilities. Aerial photos of some of the proposed data centers look quite a bit like airport runways stuffed with bland buildings filled with large numbers of computing devices. But power? A problem looming it seems.

- The write up does not pay much attention to the Google. I think that’s a mistake. From data centers in boxes to plans to put these puppies in orbit, the Google has been doing infrastructure, including fiber optic, chips, and interesting investments like its interest in digital currency mining operations. But Google appears to be of little concern to the Microsoft-tilted semi analysis from SemiAnalysis. Remember, I am a dinobaby, so my views are likely to rock the young wizards who crafted this “Microsoft is going to be a Big Dog.” Yeah, but the firm did Clippy. Remember?

The second write up picks up on the same theme: Microsoft is going to do really big things. “Microsoft Is Building Datacenter Superclusters That Span Continents” explains that MSFT’s envisioned “100 Trillion Parameter Models of the Near Future Can’t Be Built in One Place” and that the new facilities will be buildings that are “two stories tall, use direct-to-chip liquid cooling, and consume ‘almost zero water.’”

The write up adds:

Microsoft is famously one of the few hyperscalers that’s standardized on Nvidia’s InfiniBand network protocol over Ethernet or a proprietary data fabric like Amazon Web Service’s EFA for its high-performance compute environments. While Microsoft has no shortage of options for stitching datacenters together, distributing AI workloads without incurring bandwidth- or latency-related penalties remains a topic of interest to researchers.

The real estate broker Arvin Haddad uses the phrase “Can you spot the flaw?” Okay, let me ask, “Can you spot the flaw in Microsoft’s digital mansions?” You have five seconds. Okay. What happens if the text centric technology upon which current AI efforts are based gets superseded by [a] a technical breakthrough that renders TensorFlow approaches obsolete, expensive, and slow? or [b] China dumps its chip and LLM technology into the market as cheap or open source? My thought is that the data centers that span continents may end up like the Westfield San Francisco Centre as a home for pigeons, graffiti artists, and security guards.

Yikes.

Building for the future of AI may be like shooting at birds not in sight. Sure, a bird could fly through the pellets, but probably not if they are nesting in a pond a mile away.

Net net: Microsoft is hiring influencers and shooting where ducks will be. Sounds like a plan.

Stephen E Arnold, November 13, 2025

AI Is a Winner: The Viewpoint of an AI Believer

November 13, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Bubble, bubble, bubble. This is the Silicon Valley version of epstein, epstein, epstein. If you are worn out from the doom and gloom of smart software’s penchant for burning cash and ignoring the realities of generating electric power quickly, you will want to read “AI Is Probably Not a Bubble: AI Companies Have Revenue, Demand, and Paths to Immense Value.” [Note: You may encounter a paywall when you attempt to view this article. Don’t hassle me. Contact those charming visionaries at Substack, the new new media outfit.]

The predictive impact of the analysis has been undercut by a single word in the title: “Probably.” A weasel word appears because the author’s enthusiasm for AI is a bit of contrarian thinking presented in thought leader style. Probably a pig can fly somewhere at some time. Yep, confidence.

Here’s a passage I found interesting:

… unlike dot-com companies, the AI companies have reasonable unit economics absent large investments in infrastructure and do have paths to revenue. OpenAI is demonstrating actual revenue growth and product-market fit that Pets.com and Webvan never had. The question isn’t whether customers will pay for AI capabilities — they demonstrably are — but whether revenue growth can match required infrastructure investment. If AI is a bubble and it pops, it’s likely due to different fundamentals than the dot-com bust.

Ah, ha, another weasel word: “Whether.” Is this AI bubble going to expand infinitely or will it become a Pets.com?

The write up says:

Instead, if the AI bubble is a bubble, it’s more likely an infrastructure bubble.

I think the ground beneath the argument has shifted. The “AI” is a technology like “the Internet.” The “Internet” became a big deal. AI is not “infrastructure.” That’s a data center with fungible objects like machines and connections to cables. Plus, the infrastructure gets “utilized immediately upon completion.” But what if [a] demand decreases due to lousy AI value, [b] AI becomes a net inflater of ancillary costs like a Microsoft subscription to Word, or [c] electrical power is either not available or too costly to run a couple of football fields of servers 24×7?

I liked this statement, although I am not sure some of the millions of people who cannot find jobs will agree:

As weird as it sounds, an AI eventually automating the entire economy seems actually plausible, if current trends keep continuing and current lines keep going up.

Weird. Cost cutting is a standard operating tactic. AI is an excuse to dump expensive and hard-to-manage humans. Whether AI can do the work is another question. Shifting from AI delivering value to server infrastructure shows one weakness in the argument. Ignoring the societal impact of unhappy workers seems to me illustrative of taking finance classes, not 18th century history classes.

Okay, here’s the wind up of the analysis:

Unfortunately, forecasting is not the same as having a magic crystal ball and being a strong forecaster doesn’t give me magical insight into what the market will do. So honestly, I don’t know if AI is a bubble or not.

The statement is a combination of weasel words, crawfishing away from the thesis of the essay, and an admission that this is a marketing thought leader play. That’s okay. LinkedIn is stuffed full of essays like this big insight:

So why are industry leaders calling AI a bubble while spending hundreds of billions on infrastructure? Because they’re not actually contradicting themselves. They’re acknowledging legitimate timing risk while betting the technology fundamentals are sound and that the upside is worth the risk.

The AI giants are savvy cats, are they not?

Stephen E Arnold, November 13, 2025

US Government Procurement Changes: Like Silicon Valley, Really? I Mean For Sure?

November 12, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

I learned about the US Department of War overhaul of its procurement processes by reading “The Department of War Just Shot the Accountants and Opted for Speed.” Rumblings of procurement hassles have been reaching me for years. The cherished methods of capture planning, statement of work consulting, proposal writing, and evaluating bids consume many billable hours by consultants. The processes involve thousands of government professionals: Lawyers, financial analysts, technical specialists, administrative professionals, and consultants. I can’t omit the consultants.

According to the essay written by Steve Blank (a person unfamiliar to me):

Last week the Department of War finally killed the last vestiges of Robert McNamara’s 1962 Planning, Programming, and Budgeting System (PPBS). The DoW has pivoted from optimizing cost and performance to delivering advanced weapons at speed.

The write up provides some of the history of the procurement process enshrined in such documents as FAR or the Federal Acquisition Regulations. If you want the details Mr. Blank provides, I urge you to read his essay in full.

I want to highlight what I think is an important point about the recent changes. Mr. Blank writes:

The war in Ukraine showed that even a small country could produce millions of drones a year while continually iterating on their design to match changes on the battlefield. (Something we couldn’t do.) Meanwhile, commercial technology from startups and scaleups (fueled by an immense pool of private capital) has created off-the-shelf products, many unmatched by our federal research development centers or primes, that can be delivered at a fraction of the cost/time. But the DoW acquisition system was impenetrable to startups. Our Acquisition system was paralyzed by our own impossible risk thresholds, its focus on process not outcomes, and became risk averse and immoveable.

Based on my experience, much of it working as a consultant on different US government projects, the horrific “special operation” delivered a number of important lessons about modern warfare. Reading between the lines of the passage cited above, three important items of information emerged from what I view as an illegal international event:

- Under certain conditions human creativity can blossom and then grow into major business operations. I would suggest that Ukraine’s innovations in the use of drones, in how the drones are deployed in battle conditions, and in the basic “drone idea” reduce the effectiveness of certain traditional methods of warfare.

- Despite disruptions to transportation and certain third-party products, Ukraine demonstrated that just-in-time production facilities can be made operational in weeks, sometimes days.

- The combination of innovative ideas, battlefield testing, and right-sized manufacturing demonstrated that a relatively small country can become a world-class leader in modern warfighting equipment, software, and systems.

Russia, with its ponderous planning and procurement process, has become the fall guy to a president who was a stand up comedian. Who is laughing now? It is not the perpetrators of the “special operation.” The joke, as some might say, is on individuals who created the “special operation.”

Mr. Blank states about the new procurement system:

To cut through the individual acquisition silos, the services are creating Portfolio Acquisition Executives (PAEs). Each Portfolio Acquisition Executive (PAE) is responsible for the entire end-to-end process of the different Acquisition functions: Capability Gaps/Requirements, System Centers, Programming, Acquisition, Testing, Contracting and Sustainment. PAEs are empowered to take calculated risks in pursuit of rapidly delivering innovative solutions.

My view of this type of streamlining is that it will become less flexible over time. I am not sure when the ossification will commence, but bureaucratic systems, no matter how well designed, morph and become traditional bureaucratic systems. I am not going to trot out the academic studies about the impact of process, auditing, and legal oversight on any efficient process. I will plainly state that the bureaucracies to which I have been exposed in the US, Europe, and Asia are fundamentally the same.

Can the smart software helping enable the Silicon Valley approach to procurement handle the load and keep the humanoids happy? Thanks, Venice.ai. Good enough.

Ukraine is an outlier when it comes to the organization of its warfighting technology. Perhaps other countries, if subjected to a similar type of “special operation,” would behave as Ukraine has. Whether I was giving lectures for the Japanese government or dealing with issues related to materials science for an entity on Clarendon Terrace, the approach, rules, regulations, special considerations, etc. were generally the same.

The question becomes, “Can a new procurement system in an environment not at risk of extinction demonstrate the speed, creativity, agility, and productivity of the Ukrainian model?”

My answer is, “No.”

Mr. Blank writes before he digs into the new organizational structure:

The DoW is being redesigned to now operate at the speed of Silicon Valley, delivering more, better, and faster. Our warfighters will benefit from the innovation and lower cost of commercial technology, and the nation will once again get a military second to none.

This is an important phrase: Silicon Valley. It is the model for making the US Department of War into a more flexible and speedy entity, particularly with regard to procurement, the use of smart software (artificial intelligence), and management methods honed since Bill Hewlett and Dave Packard sparked the garage myth.

Silicon Valley has been a model for many organizations and countries. However, who thinks much about the Silicon Fen? I sure don’t. I would wager a slice of cheese that many readers of this blog post have never, ever heard of Sophia Antipolis. Everyone wants to be a Silicon Valley-style, high-technology, move-fast-and-break-things outfit.

But we have but one Silicon Valley. Now the question is, “Will the US government be a successful Silicon Valley, or will it fizzle out?” Based on my experience, I want to go out on a very narrow limb and suggest:

- Cronyism was important to Silicon Valley, particularly for funding and lawyering. The “new” approach to Department of War procurement is going to follow a similar path.

- As the stakes go up, growth becomes more important than fiscal considerations. As a result, the cost of becoming bigger, faster, cheaper spikes. Costs for the majority of Silicon Valley companies kill off most start ups. The failure rate is high, and it is exacerbated by the need of the winners to continue to win.

- Silicon Valley management styles produce some negative consequences. Often overlooked are such modern management methods as [a] a lack of common sense, [b] decisions based on entitlement or short term gains, and [c] a general indifference to the social consequences of an innovation, a product, or a service.

If I look forward based on my deeply flawed understanding of this Silicon Valley revolution, I see monopolistic behavior emerging. Bureaucracies will emerge because people working for other people create rules, procedures, and processes to minimize the craziness of doing the go fast and break things activities. Workers create bureaucracies to deal with chaos, not cause chaos.

Mr. Blank’s essay strikes me as generally supportive of this reinvention of the Federal procurement process. He concludes with:

Let’s hope these changes stick.

My personal view is that they won’t. Ukraine created a wartime Silicon Valley in a real-time, shoot-and-survive conflict. The urgency is not parked in a giant building in Washington, DC, or a Silicon Valley dream world. A more pragmatic approach is to partition procurement methods. Apply Silicon Valley thinking in certain classes of procurement; modify the FAR to streamline certain processes; and leave some of the procedures unchanged.

AI is a go fast and break things technology. It also hallucinates. Drones from Silicon Valley companies don’t work in Ukraine. I know because someone with first hand information told me. What will the new methods of procurement deliver? Answer: Drones that won’t work in a modern asymmetric conflict. With decisions involving AI, I sure don’t want to find myself in a situation about which smart software makes stuff up or operates on digital mushrooms.

Stephen E Arnold, November 12, 2025

Someone Is Not Drinking the AI-Flavored Kool-Aid

November 12, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

The future of AI is in the hands of the digital PT Barnums. A day or so ago, I wrote about Copilot in Excel. Allegedly a spreadsheet can be enhanced by Microsoft. Google is beavering away with a new enthusiasm for content curation. This is a short step to weaponizing what is indexed, what is available to Googlers and Mama, and what is provided to Google users. Heroin dealers do not provide consumer oriented labels with ingredients.

Thanks, Venice.ai. Good enough.

Here’s another example of this type of soft control: “I’ll Never Use Grammarly Again — And This Is the Reason Every Writer Should Care.” The author makes clear that Grammarly, developed and operated from Ukraine, now wants to change her writing style. The essay states:

What once felt like a reliable grammar checker has now turned into an aggressive AI tool always trying to erase my individuality.

Yep, that’s what AI companies and AI repackagers will do: Use the technology to improve the human. What a great idea! Just erase the fingerprints of the human. Introduce AI drivel and lowest common denominator thinking. Human, the AI says, take a break. Go to the yoga studio or grab a latte. AI has you covered.

The essay adds:

Superhuman [Grammarly’s AI solution for writers] wants to manage your creative workflow, where it can predict, rephrase, and automate your writing. Basically, a simple tool that helped us write better now wants to replace our words altogether. With its ability to link over a hundred apps, Superhuman wants to mimic your tone, habits, and overall style. Grammarly may call it personalized guidance, but I see it as data extraction wrapped with convenience. If we writers rely on a heavily AI-integrated platform, it will kill the unique voice, individual style, and originality.

One human dumped Grammarly, writing:

I’m glad I broke up with Grammarly before it was too late. Well, I parted ways because of my principles. As a writer, my dedication is towards original writing, and not optimized content.

Let’s go back to ubiquitous AI (some you know is there and other AI that operates in dark pattern mode). The object of the game for the AI crowd is to extract revenue and control information. By weaponizing information and making life easy, just think who will be in charge of many things in a few years. If you think humans will rule the roost, you are correct. But the number of humans pushing the buttons will be very small. These individuals have zero self awareness and believe that their ideas — no matter how far out and crazy — are the right way to run the railroad.

I am not sure most people will know that they are on a train taking them to a place they did not know existed and don’t want to visit.

Well, tough luck.

Stephen E Arnold, November 11, 2025

Temptation Is Powerful: Will Big AI Tech Take the Bait

November 12, 2025

![green-dino_thumb_thumb[3] green-dino_thumb_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/11/green-dino_thumb_thumb3_thumb.gif) Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

I have been using the acronym BAIT for “big AI tech” in my talks. I find it an easy way to refer to the companies with the money and the drive to try to take over the use of software to replace most humans’ thinking. I want to raise a question, “Will BAIT take the bait?”

What is this lower case “bait”? In my opinion, lower case “bait” refers to information people and organizations would consider proprietary, secret, off limits, and out of bounds. Digital data about health, contracts, salaries, inventions, interpersonal relations, and similar categories of information would fall into the category of “none of your business” or something like “it’s secret.”

A calculating predator is about to have lunch. Thanks, Venice.ai. Not what I specified but good enough like most things in 2025.

Consider what happens when a large BAIT outfit gains access to the contents of a user’s mobile device, a personal computer, storage devices, images, and personal communications. What can a company committed to capturing information to make its smart software models more intelligent and better informed learn from these types of data? What if that data acquisition takes place in real time? In an organization or a personal life situation, an individual entity may not be able to cross tabulate certain data. The information is in the organization or the data stream for a household, but it is not connected. Data processing can acquire the information, perform the calculations, and “identify” the significant items. These can be used to predict or guess what response, service, action, or investment can be made.

Microsoft’s efforts with Copilot in Excel raise the possibility and opportunity to examine an organization’s or a person’s financial calculations as part of a routine “let’s make the Excel experience better.” If you don’t know whether data are local or on a cloud provider server, access to that information may not be important to you. But are those data important to a BAIT outfit? I think those data are tempting, desirable, and ultimately necessary for the AI company to “learn.”

One possible solution is for the BAIT company to tap into personal data, offering assurances that these types of information are not fodder for training smart software. Can people resist temptation? Some can. But others, with large amounts of money at stake, can’t.

Let’s consider a recent news announcement and then ask some hypothetical questions. I am just asking questions, and I am not suggesting that today’s AI systems are sufficiently organized to make use of the treasure trove of secret information. I do have enough experience to know that temptation is often hard to resist in a certain type of organization.

The article I noted today (November 6, 2025) is “Gemini Deep Research Can Tap into Your Gmail and Google Drive.” The write up reports what I assume to be accurate data:

After adding PDF support in May [2025], [Google] Gemini Deep Research can now directly tap information stored in your Gmail and Google Chat conversations, as well as Google Drive files…. Now, [Google] Deep Research can “draw on context from your [Google] Gmail, Drive and Chat and work it directly into your research.” [Google] Gemini will look through Docs, Slides, Sheets and PDFs stored in your Drive, as well as emails and messages across Google Workspace. [Emphasis added by Beyond Search for clarity]

Can Google resist the mouth watering prospect of using these data sources to train its large language models and its experimental AI technology?

There are some other hypotheticals to consider:

- What informational boundaries is Google allegedly crossing with this omnivorous approach to information?

- How can Google put meaningful barriers around certain information to prevent data leakage?

- What recourse do people or organizations have if Google’s smart software exposes sensitive information to a party not authorized to view these data?

- How will Google’s advertising algorithms use such data to shape or weaponize information for an individual or an organization?

- Will individuals know when a secret has been incorporated in a machine generated report for a government entity?

Somewhere in my reading I recall a statement attributed to Napoleon. My recollection is that in his letters or some other biographical document about Napoleon’s approach to war, he allegedly said something like:

Information is nine tenths of any battle.

The BAIT organizations are moving with purpose and possibly extreme malice toward systems and methods that give them access to data never meant to be used to train smart software. If Copilot in Excel happens and if Google processes data in their grasp, will these types of organizations be able to resist juicy, unique, high-calorie morsels of zeros and ones?

I am not sure these types of organizations can or will exercise self control. There is money and power and prestige at stake. Humans have a long track record of doing some pretty interesting things. Is this omnivorous taking of information wrapped in making one’s life easier an example of overreach?

Will BAIT outfits take the bait? Good question.

Stephen E Arnold, November 12, 2025

Marketers and AI: Killing Sales and Trust. Collateral Damage? Meh

November 11, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

“The Trust Collapse: Infinite AI Content Is Awful” is an impassioned howl in the arctic space of zeros and ones. The author is what one might call collateral damage. When a UPS airplane drops a wing on take off, the people in the path of the fuel spewing aircraft are definitely not thrilled.

AI is a bit like a UPS-type of aircraft arrowing to an unhappy end, at least for the author of the cited article. The write up makes clear that smart software can vaporize a writer of marketing collateral. The cost for the AI is low. The penalty for the humanoid writer is collateral damage.

Let’s look at some of the points in the cited essay:

For the first time since, well, ever, the cost of creating content has dropped to essentially zero. Not “cheaper than before”, but like actually free. It’s so easy to generate a thousand blog posts or ten thousand “personalized” emails and it barely costs you anything (for now).

Yep, marketing content appears on some of the lists I have mentioned in this blog. Usually customer service professionals top the list, but advertising copywriters and email pitch writers usually appear in the top five of AI-terminated jobs.

The write up explains:

What they [a prospect] actually want to know is “why the hell would I buy it from you instead of the other hundred companies spamming my inbox with identical claims?” And because everything is AI slop now, answering that question became harder for them.

The idea is that expensive, slow, time-consuming relationship selling is eroding under a steady stream of low cost, high volume marketing collateral produced by … smart software. Yes, AI and lousy AI at that.

The write up provides an interesting example of how low cost, high volume AI content has altered the sales landscape:

Old World (…-2024):

- Cost to produce credible, personalized outreach: $50/hour (human labor)

- Volume of credible outreach a prospect receives: ~10/week

- Prospect’s ability to evaluate authenticity: Pattern recognition works ~80% of time

New World (2025-…):

- Cost to produce credible, personalized outreach: effectively 0

- Volume of credible outreach a prospect receives: ~200/week

- Prospect’s ability to evaluate authenticity: Pattern recognition works ~20% of time

The signal-to-noise ratio has hit a breaking point where the cost of verification exceeds the expected value of engagement.

So what? The write up answers this question:

You’re representing a brand. And your brand must continuously earn that trust, even if you blend of AI-powered relevance. We still want that unmistakable human leadership.

Yep, that’s it. What’s the fix? What does a marketer do? What does a customer looking for a product or service to solve a problem do?

There’s no answer. That’s the way smart software from Big AI Tech (BAIT) is supposed to work. The questions, however, remain: “What’s the fix? What’s your recommendation, dear author? Whom do you suggest solve this problem you present in a compelling way?”

Crickets.

AI systems and methods disintermediate as a normal function. The author of the essay does not offer a solution. Why? There isn’t one short of an AI implosion. At this time, too many big time people want AI to be the next big thing. They only look for profits and growth, not collateral damage. Traditional work is being rubble-ized. The build up of opportunity will take place where the cost of redevelopment is low. Some new business may be built on top of the remains of older operations, but moving a few miles down the road may be a more appealing option.

Stephen E Arnold, November 11, 2025

Agentic Software: Close Enough for Horse Shoes

November 11, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

I read a document that I would describe as tortured. The lingo was trendy. The charts and graphs sported trendy colors. The data gathering seemed to be a mix of “interviews” and other people’s research. Plus the write up was a bit scattered. I prefer the rigidity of old-fashioned organization. Nevertheless, I did spot one chunk of information that I found interesting.

The title of the research report (sort of an MBA- or blue chip consulting firm-type of document) is “State of Agentic AI: Founder’s Edition.” I think it was issued in March 2025, but with backdating popular, who knows. I had the research report in my files, and yesterday (November 3, 2025) I was gathering some background information for a talk I am giving on November 6, 2025. The document walked through data about the use of software to replace people. Actually, the smart software agents generally do several things according to the agent vendors’ marketing collateral. The cited document restated these items this way:

- Agents are set up to reach specific goals

- Agents are used to reason which means “break down their main goal … into smaller manageable tasks and think about the next best steps.”

- Agents operate without any humans in India or Pakistan operating invisibly and behind the scenes

- Agents can consult a “memory” of previous tasks, “experiences,” work, etc.

Agents, when properly set up and trained, can perform about as well as a human. I came away from the tan and pink charts with a ball park figure of 75 to 80 percent reliability. Close enough for horseshoes? Yep.

There is a run down of pricing options. Pricing seems to be a challenge for the vendors with API usage charges and traditional software licensing used by a third of the agentic vendors.

Now here’s the most important segment from the document:

We asked founders in our survey: “What are the biggest issues you have encountered when deploying AI Agents for your customers? Please rank them in order of magnitude (e.g. Rank 1 assigned to the biggest issue)” The results of the Top 3 issues were illuminating: we’ve frequently heard that integrating with legacy tech stacks and dealing with data quality issues are painful. These issues haven’t gone away; they’ve merely been eclipsed by other major problems. Namely:

- Difficulties in integrating AI agents into existing customer/company workflows, and the human-agent interface (60% of respondents)

- Employee resistance and non-technical factors (50% of respondents)

- Data privacy and security (50% of respondents).

Here’s the chart tallying the results:

Several ideas crossed my mind as I worked through this research data:

- Getting the human-software interface right is a problem. I know from my work at places like the University of Michigan, the Modern Language Association, and Thomson-Reuters that people have idiosyncratic ways to do their jobs. Two people with similar jobs add the equivalent of extra dashboard lights and yard gnomes to the process. Agentic software at this time is not particularly skilled in the dashboard LED and concrete gnome facets of a work process. Maybe someday, but right now, that’s a common deal breaker. Employees say, “I want my concrete unicorn, thank you.”

- Humans say they are into mobile phones, smart in-car entertainment systems, and customer service systems that do not deliver any customer service whatsoever. As somebody from Harvard said in a lecture: “Change is hard.” Yeah, and it may not get any easier if the humanoid thinks he or she will be allowed to find his or her future pushing burritos at the El Nopal Restaurant in the near future.

- Agentic software vendors assume that licensees will allow their creations to suck up corporate data, keep company secrets, and avoid disappointing customers by presenting proprietary information to a competitor. Security in “regular” enterprise software is a bit of a challenge. Security in a new type of agentic software is likely to be the equivalent of a ride on a roller coaster which has tossed several middle school kids to their death and cut off the foot of a popular female. She survived, but now has a non-smart, non-human replacement.

Net net: Agentic software will be deployed. Most of its work will be good enough. Why will this be tolerated in personnel, customer service, loan approvals, and similar jobs? The answer is reduced headcounts. Humans cost money to manage. Humans want health care. Humans want raises. Software which is good enough seems to cost less. Therefore, welcome to the agentic future.

Stephen E Arnold, November 11, 2025

Sure, Sam. We Trust You with Our Data

November 11, 2025

OpenAI released a new AI service called “company knowledge” that collects and analyzes all information within an organization. Why does this sound familiar? Because malware does the same thing for nefarious purposes. The story comes from Computer World and is entitled, “OpenAI’s Company Knowledge Wants Access To All Of Your Internal Data.”

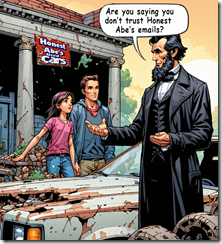

A major problem is that OpenAI is still a relatively young company and organizations are reluctant to share all of their data with it. AI is still an untested pool and so much can go wrong when it comes to regulating security and privacy. Here’s another clincher in the deal:

“Making granting that trust yet more difficult is the lack of clarity around the ultimate OpenAI business model. Specifically, how much OpenAI will leverage sensitive enterprise data in terms of selling it, even with varying degrees of anonymization, or using it to train future models.”

What does the vice-president and principal analyst at Forrester, Jeff Pollard, say?

“ ‘The capabilities across all these solutions are similar, and benefits exist: Context and intelligence when using AI, more efficiency for employees, and better knowledge for management.’”

But there’s a big but that Pollard makes clear:

“ ‘Data privacy, security, regulatory, compliance, vendor lock-in, and, of course, AI accuracy and trust issues. But for many organizations, the benefits of maximizing the value of AI outweighs the risks.’”

The current AI situation is that applications are transitioning from isolated to connected agents and agentic systems developed to maximize value for the users. In other words, according to Pollard, “high risk and high reward.” The rewards are tempting but the consequences are also alarming.

Experts say that companies won’t place all of their information and proprietary knowledge in the hands of a young company and untested technology. They could but there aren’t any regulations to protect them.

OpenAI should practice with its own company first, then see what happens.

Whitney Grace, November 11, 2025