Deepfakes: An Interesting and Possibly Pernicious Arms Race

December 2, 2024

As it turns out, deepfakes are a difficult problem to contain. Who knew? As victims from celebrities to schoolchildren multiply exponentially, USA Today asks, “Can Legislation Combat the Surge of Non-Consensual Deepfake Porn?” Journalist Dana Taylor interviewed UCLA’s John Villasenor on the subject. To us, the answer is simple: Absolutely not. As with any technology, regulation is reactive while bad actors are proactive. Villasenor seems to agree. He states:

“It’s sort of an arms race, and the defense is always sort of a few steps behind the offense, right? In other words that you make a detection tool that, let’s say, is good at detecting today’s deepfakes, but then tomorrow somebody has a new deepfake creation technology that is even better and it can fool the current detection technology. And so then you update your detection technology so it can detect the new deepfake technology, but then the deepfake technology evolves again.”

Exactly. So if governments are powerless to stop this horror, what can? Perhaps big firms will fight tech with tech. The professor dreams:

“So I think the longer term solution would have to be automated technologies that are used and hopefully run by the people who run the servers where these are hosted. Because I think any reputable, for example, social media company would not want this kind of content on their own site. So they have it within their control to develop technologies that can detect and automatically filter some of this stuff out. And I think that would go a long way towards mitigating it.”

Sure. But what can be done while we wait on big tech to solve the problem it unleashed? Individual responsibility, baby:

“I certainly think it’s good for everybody, and particularly young people these days to be just really aware of knowing how to use the internet responsibly and being careful about the kinds of images that they share on the internet. … Even images that are sort of maybe not crossing the line into being sort of specifically explicit but are close enough to it that it wouldn’t be as hard to modify being aware of that kind of thing as well.”

Great, thanks. Admitting he may sound naive, Villasenor also envisions education to the (partial) rescue:

“There’s some bad actors that are never going to stop being bad actors, but there’s some fraction of people who I think with some education would perhaps be less likely to engage in creating these sorts of… disseminating these sorts of videos.”

Our view is that digital tools allow the dark side of individuals to emerge and expand.

Cynthia Murrell, December 2, 2024

AI In Group Communications: The Good and the Bad

November 29, 2024

In theory, AI that can synthesize many voices into one concise, actionable statement is very helpful. In practice, it is complicated. The Tepper School of Business at Carnegie Mellon announces, “New Paper Co-Authored by Tepper School Researchers Articulates How Large Language Models are Changing Collective Intelligence Forever.” Researchers from Tepper and other institutions worked together on the paper, which was published in Nature Human Behaviour. We learn:

“[Professor Anita Williams] Woolley and her co-authors considered how LLMs process and create text, particularly their impact on collective intelligence. For example, LLMs can make it easier for people from different backgrounds and languages to communicate, which means groups can collaborate more effectively. This technology helps share ideas and information smoothly, leading to more inclusive and productive online interactions. While LLMs offer many benefits, they also present challenges, such as ensuring that all voices are heard equally.”

Indeed. The write-up continues:

“‘Because LLMs learn from available online information, they can sometimes overlook minority perspectives or emphasize the most common opinions, which can create a false sense of agreement,’ said Jason Burton, an assistant professor at Copenhagen Business School. Another issue is that LLMs can spread incorrect information if not properly managed because they learn from the vast and varied content available online, which often includes false or misleading data. Without careful oversight and regular updates to ensure data accuracy, LLMs can perpetuate and even amplify misinformation, making it crucial to manage these tools responsibly to avoid misleading outcomes in collective decision-making processes.”

In order to do so, the paper suggests, we must further explore LLMs’ ethical and practical implications. Only then can we craft effective guidelines for responsible AI summarization. Such standards are especially needed, the authors note, for any use of LLMs in policymaking and public discussions.

But not to worry. The big AI firms are all about due diligence, right?

Cynthia Murrell, November 29, 2024

AI Invents Itself: Good News?

November 28, 2024

Everyone from technology leaders to conspiracy theorists is fearful of a robot apocalypse. Functional robots are still years away from practicality, but AI is filling that antagonist role nicely. Forbes shares how AI is on its way to creating itself: “AI That Can Invent AI Is Coming. Buckle Up.” AI is learning how to automate more tasks and will soon replace a human at a job.

For AI to become fully self-sufficient, it only needs to learn the job of an AI researcher. With a simple feedback loop, AI can develop superior architecture and improve on that as it advances its “research.” It might sound farfetched, but an AI developer role is simple: read about AI, invent new questions to ask, and implement experiments to test and answer those questions. It might sound too simple, but algorithms are designed to automate and figure out knowledge:

“For one thing, research on core AI algorithms and methods can be carried out digitally. Contrast this with research in fields like biology or materials science, which (at least today) require the ability to navigate and manipulate the physical world via complex laboratory setups. Dealing with the real world is a far gnarlier challenge for AI and introduces significant constraints on the rate of learning and progress. Tasks that can be completed entirely in the realm of “bits, not atoms” are more achievable to automate. A colorable argument could be made that AI will sooner learn to automate the job of an AI researcher than to automate the job of a plumber.

Consider, too, that the people developing cutting-edge AI systems are precisely those people who most intimately understand how AI research is done. Because they are deeply familiar with their own jobs, they are particularly well positioned to build systems to automate those activities.”

In the future, AI will develop and reinvent itself, but the current AI still can’t get basic facts about the Constitution or living humans correct. AI is frankly still very dumb. Humans haven’t made themselves obsolete yet, but we’re on our way to doing that. Celebrate!

Whitney Grace, November 28, 2024

Early AI Adoption: Some Benefits

November 25, 2024

Is AI good or is it bad? The debate is still raging, especially in Hollywood where writers, animators, and other creatives are demanding the technology be removed from the industry. AI, however, is a tool. It can be used for good and bad acts, but humans are the ones who initiate them. AI At Wharton investigated how users are currently adopting AI: “Growing Up: Navigating Generative AI’s Early Years – AI Adoption Report.”

The report was based on the responses from full-time employees who worked in commercial organizations with 1,000 or more workers. Adoption of AI in businesses jumped from 37% in 2023 to 72% in 2024, with high growth in human resources and marketing departments. Companies are still unsure if AI is worth the ROI. The study explains that AI will benefit companies that have adaptable organizations and measurable ROI.

The report includes charts that document the high rate of usage compared to last year as well as how AI is mostly being used. It’s being used for document writing and editing, data analytics, document summarization, marketing content creation, personal marketing and advertising, internal support, customer support, fraud prevention, and report creation. AI is definitely impactful, though not overwhelmingly so, and the response to the new technology is positive. Companies will continue to invest in it.

“Looking to the future, Gen AI adoption will enter its next chapter which is likely to be volatile in terms of investment and carry greater privacy and usage restrictions. Enthusiasm projected by new Chief AI Officer (CAIO) role additions and team expansions this year will be tempered by the reality of finding “accountable” ROI. While approximately three out of four industry respondents plan to increase Gen AI budgets next year, the majority expect growth to slow over the longer term, signaling a shift in focus towards making the most effective internal investments and building organizational structures to support sustainable Gen AI implementation. The key to successful adoption of Gen AI will be proper use cases that can scale, and measurable ROI as well as organization structures and cultures that can adapt to the new technology.”

While the responses are positive, how exactly is it being used beyond the charts? Are the users implementing AI for work shortcuts, such as really slapdash content generation? I’d hate to be the lazy employee who uses AI to make the next quarterly report and doesn’t double-check the information.

Whitney Grace, November 25, 2024

Point-and-Click Coding: An eGame Boom Booster

November 22, 2024

TheNextWeb explains “How AI Can Help You Make a Computer Game Without Knowing Anything About Coding.” That’s great—unless one is a coder who makes one’s living on computer games. Writer Daniel Zhou Hao begins with a story about one promising young fellow:

“Take Kyo, an eight-year-old boy in Singapore who developed a simple platform game in just two hours, attracting over 500,000 players. Using nothing but simple instructions in English, Kyo brought his vision to life leveraging the coding app Cursor and also Claude, a general purpose AI. Although his dad is a coder, Kyo didn’t get any help from him to design the game and has no formal coding education himself. He went on to build another game, an animation app, a drawing app and a chatbot, taking about two hours for each. This shows how AI is dramatically lowering the barrier to software development, bridging the gap between creativity and technical skill. Among the range of apps and platforms dedicated to this purpose, others include Google’s AlphaCode 2 and Replit’s Ghostwriter.”

The write-up does not completely leave experienced coders out of the discussion. Hao notes tools like Tabnine and GitHub Copilot act as auto-complete assistance, while Sourcery and DeepCode take the tedium out of code cleanup. For the 70-ish percent of companies that have adopted one or more of these tools, he tells us, the benefits include time savings and more reliable code. Does this mean developers will shift to “higher value tasks,” like creative collaboration and system design, as Hao insists? Or will it just mean firms will lighten their payrolls?

As for building one’s own game, the article lists seven steps. They are akin to basic advice for developing a product, but with an AI-specific twist. For those who want to know how to make one’s AI game addictive, contact benkent2020 at yahoo dot com.

Cynthia Murrell, November 22, 2024

China Smart, US Dumb: LLMs Bad, MoEs Good

November 21, 2024

Okay, an “MoE” is an alternative to LLMs. An “MoE” is a mixture of experts. An LLM is a one-trick pony starting to wheeze.

Google, Apple, Amazon, GitHub, OpenAI, Facebook, and other organizations are at the top of the list when people think about AI innovations. We forget about other countries and universities experimenting with the technology. Tencent is a China-based technology conglomerate located in Shenzhen, and it’s the world’s largest video game company when equity investments are considered. Tencent is also the developer of Hunyuan-Large, the world’s largest MoE.

According to Tencent, LLMs (large language models) are things of the past. LLMs served their purpose to advance AI technology, but Tencent realized that it was necessary to optimize resource consumption while simultaneously maintaining high performance. That’s when the company turned to what it sees as the next evolution of LLMs: MoE, or mixture of experts, models.

Cornell University’s open-access science archive posted this paper on the MoE: “Hunyuan-Large: An Open-Source MoE Model With 52 Billion Activated Parameters By Tencent” and the abstract explains it is a doozy of a model:

“In this paper, we introduce Hunyuan-Large, which is currently the largest open-source Transformer-based mixture of experts model, with a total of 389 billion parameters and 52 billion activation parameters, capable of handling up to 256K tokens. We conduct a thorough evaluation of Hunyuan-Large’s superior performance across various benchmarks including language understanding and generation, logical reasoning, mathematical problem-solving, coding, long-context, and aggregated tasks, where it outperforms LLama3.1-70B and exhibits comparable performance when compared to the significantly larger LLama3.1-405B model. Key practice of Hunyuan-Large include large-scale synthetic data that is orders larger than in previous literature, a mixed expert routing strategy, a key-value cache compression technique, and an expert-specific learning rate strategy. Additionally, we also investigate the scaling laws and learning rate schedule of mixture of experts models, providing valuable insights and guidance for future model development and optimization. The code and checkpoints of Hunyuan-Large are released to facilitate future innovations and applications.”
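For readers wondering how a model can have 389 billion parameters yet "activate" only 52 billion, here is a minimal sketch of generic top-k expert routing. It is not Tencent's mixed routing strategy; the toy experts, the gate scores, and every function name are assumptions made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))


# Hypothetical toy experts: each one just scales its input.
EXPERTS = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]

# Figures from the abstract: 52 billion activated out of 389 billion total.
ACTIVE_FRACTION = 52 / 389
```

The point of the design is that the experts the router skips cost nothing for that token, which is how, by the abstract's own numbers, roughly 13 percent of the parameters do the work on any given step.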

Tencent has released Hunyuan-Large as an open source project, so other AI developers can use the technology! The well-known companies will definitely be experimenting with Hunyuan-Large. Is there an ulterior motive? Sure. Money, prestige, and power are at stake in the AI global game.

Whitney Grace, November 21, 2024

Does Smart Software Forget?

November 21, 2024

A recent paper challenges the big dogs of AI, asking, “Does Your LLM Truly Unlearn? An Embarrassingly Simple Approach to Recover Unlearned Knowledge.” The study was performed by a team of researchers from Penn State, Harvard, and Amazon and published on research platform arXiv. True or false, it is a nifty poke in the eye for the likes of OpenAI, Google, Meta, and Microsoft, who may have overlooked the obvious. The abstract explains:

“Large language models (LLMs) have shown remarkable proficiency in generating text, benefiting from extensive training on vast textual corpora. However, LLMs may also acquire unwanted behaviors from the diverse and sensitive nature of their training data, which can include copyrighted and private content. Machine unlearning has been introduced as a viable solution to remove the influence of such problematic content without the need for costly and time-consuming retraining. This process aims to erase specific knowledge from LLMs while preserving as much model utility as possible.”

But AI firms may be fooling themselves about this method. We learn:

“Despite the effectiveness of current unlearning methods, little attention has been given to whether existing unlearning methods for LLMs truly achieve forgetting or merely hide the knowledge, which current unlearning benchmarks fail to detect. This paper reveals that applying quantization to models that have undergone unlearning can restore the ‘forgotten’ information.”

Oops. The team found as much as 83% of data thought forgotten was still there, lurking in the shadows. The paper offers an explanation for the problem and suggestions to mitigate it. The abstract concludes:

“Altogether, our study underscores a major failure in existing unlearning methods for LLMs, strongly advocating for more comprehensive and robust strategies to ensure authentic unlearning without compromising model utility.”
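One plausible way to picture the quantization effect is the toy sketch below. It assumes, as an illustration only, that unlearning nudges weights by small amounts and that quantization rounds weights onto a coarse grid. The weight values, the nudges, and the step sizes are all hypothetical and are not taken from the paper.

```python
def quantize_levels(weights, step):
    """Map each weight to the index of its nearest grid point (uniform quantization)."""
    return [round(w / step) for w in weights]


# Hypothetical weights of a model before unlearning.
original = [0.412, -0.873, 0.156, 0.694]

# Hypothetical small nudges applied by an unlearning method.
nudges = [0.010, -0.012, 0.008, -0.009]
unlearned = [w + d for w, d in zip(original, nudges)]

COARSE_STEP = 0.1   # stands in for low-bit quantization
FINE_STEP = 0.001   # stands in for near-full precision

# On the coarse grid, both models land on the same levels.
same_after_quantization = (
    quantize_levels(original, COARSE_STEP) == quantize_levels(unlearned, COARSE_STEP)
)
```

If the nudges are smaller than the grid spacing, the rounded model cannot tell the "forgotten" weights from the originals. That is the intuition only; see the paper for the actual analysis.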

See the paper for all the technical details. Will the big tech firms take the researchers’ advice and improve their products? Or will they continue letting their investors and marketing departments lead them by the nose?

Cynthia Murrell, November 21, 2024

Entity Extraction: Not As Simple As Some Vendors Say

November 19, 2024

No smart software. Just a dumb dinobaby. Oh, the art? Yeah, MidJourney.

Most of the systems incorporating entity extraction have been trained to recognize the names of simple entities, relying mostly on capitalization. An “entity” can be a person’s name, the name of an organization, or a location like Niagara Falls, near Buffalo, New York. The river “Niagara” when bound to “Falls” means a geologic feature. The “Buffalo” is not a Bubalina; it is a delightful city with even more pleasing weather.

The same entity extraction process has to work for specialized software used by law enforcement, intelligence agencies, and legal professionals. Compared to entity extraction for consumer-facing applications like Google’s Web search or Apple Maps, the specialized software vendors have to contend with:

- Gang slang in English and other languages; for example, “bumble bee.” This is not an insect; it is a nickname for the Latin Kings.

- Organizations operating in Lao PDR whose names have been converted to English words, like Zhao Wei’s Kings Romans Casino. Mr. Wei has allegedly been involved in gambling activities in a poorly regulated region in the Golden Triangle.

- Individuals who use aliases like maestrolive, james44123, or ahmed2004. There are either “real” people behind the handles or they are sock puppets (fake identities).

Why do these variations create a challenge? In order to locate a business, the content processing system has to identify the entity the user seeks. For an investigator, chopping through a thicket of language and idiosyncratic personas is the difference between making progress and hitting a dead end. Automated entity extraction systems can work using smart software, a carefully crafted and constantly updated controlled vocabulary list, or a hybrid system.
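To make the "hybrid" idea concrete, here is a minimal sketch that checks a controlled vocabulary first and falls back to the capitalization trick. The vocabulary entries reuse examples from this post; the function name, the labels, and the "unverified" tag are assumptions for illustration, not any vendor's method.

```python
import re

# Hypothetical controlled vocabulary: lowercase phrase -> (canonical name, type).
CONTROLLED_VOCAB = {
    "bumble bee": ("Latin Kings", "gang nickname"),
    "kings romans casino": ("Kings Romans Casino", "organization"),
    "niagara falls": ("Niagara Falls", "location"),
    "maestrolive": ("maestrolive", "alias"),
}

# Naive fallback: one or more capitalized words in a row.
CAPITALIZED_RUN = re.compile(r"\b[A-Z][a-z]+(?:\s+[A-Z][a-z]+)*\b")


def extract_entities(text):
    """Return (name, type) pairs in order of appearance."""
    found = []
    covered = []  # character spans already claimed by the vocabulary
    lowered = text.lower()
    for phrase, (canonical, kind) in CONTROLLED_VOCAB.items():
        for match in re.finditer(r"\b" + re.escape(phrase) + r"\b", lowered):
            found.append((match.start(), canonical, kind))
            covered.append((match.start(), match.end()))
    for match in CAPITALIZED_RUN.finditer(text):
        overlaps = any(s < match.end() and match.start() < e for s, e in covered)
        if not overlaps:
            # Capitalization alone says "maybe an entity," nothing more.
            found.append((match.start(), match.group(), "unverified"))
    return [(name, kind) for _, name, kind in sorted(found)]
```

Run on "a source said bumble bee met maestrolive near Niagara Falls in Buffalo", the vocabulary catches the slang and the lowercase handle, which capitalization would miss, while "Buffalo" comes back as merely unverified. The vocabulary only helps if someone keeps it current.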

Let’s take an example which confronts a person looking for information about the Ku Group. This is a financial services firm responsible for the Kucoin. The Ku Group is interesting because it has been found guilty in the US for certain financial activities in the State of New York and by the US Securities & Exchange Commission.

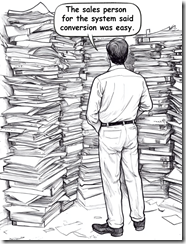

Content Conversion: Search and AI Vendors Downplay the Task

November 19, 2024

No smart software. Just a dumb dinobaby. Oh, the art? Yeah, MidJourney.

Marketers and PR people often have degrees in political science, communications, or art history. This academic foundation means that some of these professionals can listen to a presentation and struggle to figure out what’s a horse, what’s horse feathers, and what’s horse output.

Consequently, many organizations engaged in “selling” enterprise search, smart software, and fusion-capable intelligence systems downplay or just fib about how darned easy it is to take “content” and shove it into the Fancy Dan smart software. The pitch goes something like this: “We have filters that can handle 90 percent of the organization’s content. Word, PowerPoint, Excel, Portable Document Format (PDF), HTML, XML, and data from any system that can export tab delimited content. Just import and let our system increase your ability to analyze vast amounts of content. Yada yada yada.”

Thanks, Midjourney. Good enough.

The catch is that real-life content is often a problem. I am not going to trot out my list of content problem children. Instead I want to ask a question: If dealing with content is a slam dunk, why do companies like IBM and Oracle sustain specialized tools to convert Content Type A into Content Type B?

The answer is that content processing is an essential step because [a] structured and unstructured content can exist in different versions. Figuring out the one that is least wrong and most timely is tricky. [b] Humans love mobile devices, laptops, home computers, photos, videos, and audio. Furthermore, how does a content processing system get those types of content from a source not located in an organization’s office (assuming it has one) and into the content processing system? The answer is, “Money, time, persuasion, and knowledge of what employee has what.” Finding a unicorn at the Kentucky Derby is more likely. [c] Specialized systems employ lingo like “Export as” and provide some file types. Yeah. The problem is that the output may not contain everything that is in the specialized software program. Examples range from computational chemistry systems to those nifty AutoCAD type drawing systems to slick electronic trace routing solutions to DaVinci Resolve video systems which can happily pull “content” from numerous places on a proprietary network setup. Yeah, no problem.

Evidence of how big this content conversion issue is appears in the IBM write up “A New Tool to Unlock Data from Enterprise Documents for Generative AI.” If the content conversion work is trivial, why is IBM wasting time and brainpower figuring out something like making a PowerPoint file smart software friendly?

The reason is that as big outfits get “into” smart software, the people working on the project find that the exception folder gets filled up. Some documents and content types don’t convert. If a boss asks, “How do we know the data in the AI system are accurate?”, the hapless IT person looking at the exception folder either lies or says in a professional voice, “We don’t have a clue.”

IBM’s write up says:

IBM’s new open-source toolkit, Docling, allows developers to more easily convert PDFs, manuals, and slide decks into specialized data for customizing enterprise AI models and grounding them on trusted information.

But one piece of software cannot do the job. That’s why IBM reports:

The second model, TableFormer, is designed to transform image-based tables into machine-readable formats with rows and columns of cells. Tables are a rich source of information, but because many of them lie buried in paper reports, they’ve historically been difficult for machines to parse. TableFormer was developed for IBM’s earlier DeepSearch project to excavate this data. In internal tests, TableFormer outperformed leading table-recognition tools.

Why are these tools needed? Here’s IBM’s rationale:

Researchers plan to build out Docling’s capabilities so that it can handle more complex data types, including math equations, charts, and business forms. Their overall aim is to unlock the full potential of enterprise data for AI applications, from analyzing legal documents to grounding LLM responses on corporate policy documents to extracting insights from technical manuals.

Based on my experience, the paragraph translates as, “This document conversion stuff is a killer problem.”

When you hear a trendy enterprise search or enterprise AI vendor talk about the wonders of its system, be sure to ask about document conversion. Here are a few questions to put the spotlight on what often becomes a black hole of costs:

- If I process 1,000 pages of PDFs, mostly text but with some charts and graphs, what’s the error rate?

- If I process 1,000 engineering drawings with embedded product and vendor data, what percentage of the content is parsed for the search or AI system?

- If I process 1,000 non text objects like videos and iPhone images, what is the time required and the metadata accuracy for the converted objects?

- Where do unprocessable source objects go? An exception folder, the trash bin, or to my in box for me to fix up?
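The questions above boil down to counting what converts and what lands in the exception folder. Here is a minimal sketch of that bookkeeping. The converters, the file names, and the report class are hypothetical stand-ins, not Docling or any vendor's tool.

```python
from dataclasses import dataclass, field


@dataclass
class ConversionReport:
    converted: list = field(default_factory=list)
    exceptions: list = field(default_factory=list)  # the "exception folder"

    @property
    def error_rate(self):
        total = len(self.converted) + len(self.exceptions)
        return len(self.exceptions) / total if total else 0.0


def convert_batch(documents, converters):
    """Try to convert each (name, payload); record every failure, never drop one."""
    report = ConversionReport()
    for name, payload in documents:
        ext = name.rsplit(".", 1)[-1].lower() if "." in name else ""
        converter = converters.get(ext)
        try:
            if converter is None:
                raise ValueError(f"no converter for .{ext}")
            report.converted.append((name, converter(payload)))
        except Exception as exc:
            report.exceptions.append((name, str(exc)))
    return report


# Hypothetical converters keyed by file extension.
CONVERTERS = {
    "txt": str.strip,
    "csv": lambda text: [row.split(",") for row in text.splitlines()],
}

# Hypothetical batch: two clean files, one unsupported type, one broken payload.
BATCH = [
    ("memo.txt", "  hello  "),
    ("sales.csv", "1,2\n3,4"),
    ("drawing.dwg", "binary data"),
    ("broken.txt", None),
]

REPORT = convert_batch(BATCH, CONVERTERS)
```

In this toy batch, half the objects end up in the exception list. A vendor who cannot produce the equivalent of `REPORT.error_rate` for your content has not answered the question.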

Have fun asking questions.

Stephen E Arnold, November 19, 2024

After AI Billions, a Hail Mary Play

November 19, 2024

Now it is scramble time. Reuters reports, “OpenAI and Others Seek New Path to Smarter AI as Current Methods Hit Limitations.” Why does this sound familiar? Perhaps because it is a replay of the enterprise search over-promise and under-deliver approach. Will a new technique save OpenAI and other firms? Writers Krystal Hu and Anna Tong tell us:

“After the release of the viral ChatGPT chatbot two years ago, technology companies, whose valuations have benefited greatly from the AI boom, have publicly maintained that ‘scaling up’ current models through adding more data and computing power will consistently lead to improved AI models. But now, some of the most prominent AI scientists are speaking out on the limitations of this ‘bigger is better’ philosophy. … Behind the scenes, researchers at major AI labs have been running into delays and disappointing outcomes in the race to release a large language model that outperforms OpenAI’s GPT-4 model, which is nearly two years old, according to three sources familiar with private matters.”

One difficulty, of course, is the hugely expensive and time-consuming LLM training runs. Another: it turns out easily accessible data is finite after all. (Maybe they can use AI to generate more data? Nah, that would be silly.) And then there is that pesky hallucination problem. So what will AI firms turn to in an effort to keep this golden goose alive? We learn:

“Researchers are exploring ‘test-time compute,’ a technique that enhances existing AI models during the so-called ‘inference’ phase, or when the model is being used. For example, instead of immediately choosing a single answer, a model could generate and evaluate multiple possibilities in real-time, ultimately choosing the best path forward. This method allows models to dedicate more processing power to challenging tasks like math or coding problems or complex operations that demand human-like reasoning and decision-making.”
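The "generate several possibilities, then pick the best" idea in the quote can be sketched as best-of-N sampling. This is a toy under loud assumptions: the `propose` function stands in for sampling a model, the `score` function stands in for whatever verifier a lab uses, and neither reflects how OpenAI or anyone else actually implements it.

```python
import random


def propose(rng):
    """Hypothetical stand-in for sampling one candidate answer from a model."""
    return rng.randint(1, 20)


def score(candidate, target=144):
    """Hypothetical verifier: 0 is a perfect answer to 'what squared is 144?'."""
    return -abs(candidate * candidate - target)


def best_of_n(n, seed=0):
    """Spend more inference-time compute: draw n candidates, keep the best-scoring one."""
    rng = random.Random(seed)
    candidates = [propose(rng) for _ in range(n)]
    return max(candidates, key=score)
```

With a fixed seed, a larger `n` only adds candidates to the pool, so the best score can never get worse as `n` grows. The bill for compute grows right along with it, and the whole scheme is only as good as the verifier.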

OpenAI is using this approach in its new o1 model, while competitors like Anthropic, xAI, and Google DeepMind are reportedly following suit. Researchers claim this technique more closely mimics the way humans think. That couldn’t be just marketing hooey, could it? And even if it isn’t, is this tweak really enough?

Cynthia Murrell, November 19, 2024