Students Cheat. Who Knew?

December 12, 2025

How many times are we going to report on this topic? Students cheat! Students have been cheating since the invention of school. With every advancement of technology, students adapt to perfect their cheating skills. AI was a gift served to them on a silver platter. Teachers aren’t stupid, however, and one was curious how many of his students were using AI to cheat, so he created a Trojan Horse. HuffPost told his story: “I Set A Trap To Catch My Students Cheating With AI. The Results Were Shocking.”

There’s a big difference between recognizing AI and proving it was used. The teacher learned about a Trojan Horse: hiding hidden text inside a prompt. The text would be invisible because the font color would be white. Students wouldn’t see it but ChatGPT would. He unleashed the Trojan Horse and 33 essays out of 122 were automatically outed. Thirty-nine percent were AI-written. Many of the students were apologetic, while others continued to argue that the work was their own despite the Trojan Horse evidence.

AI literacy needs to be added to information literacy. The problem is that how to properly use AI is inconsistent:

“There is no consistency. My colleagues and I are actively trying to solve this for ourselves, maybe by establishing a shared standard that every student who walks through our doors will learn and be subject to. But we can’t control what happens everywhere else.”

Even worse is that some students don’t belief they’re actually cheating because they’re oblivious and stupid. He ends on an inspirational quote:

“But I am a historian, so I will close on a historian’s note: History shows us that the right to literacy came at a heavy cost for many Americans, ranging from ostracism to death. Those in power recognized that oppression is best maintained by keeping the masses illiterate, and those oppressed recognized that literacy is liberation. To my students and to anyone who might listen, I say: Don’t surrender to AI your ability to read, write and think when others once risked their lives and died for the freedom to do so.”

Noble words for small minds.

Whitney Grace, December 12, 2025

AI Fact Checks AI! What A Gas…Lighting Event

December 12, 2025

Josh Brandon at Digital Trends was curious what would happen if he asked two chatbots to fact check each other. He shared the results in, “I Asked Google Gemini To Fact-Check ChatGPT. The Results Were Hilarious.” He brilliantly calls ChatGPT the Wikipedia of the modern generation. Chatbots spit out details like overconfident, self-assured narcissists. People take the information for granted.

ChatGPT tends to hallucinate fake facts and makes up great stories, while Google Gemini doesn’t create as many mirages. Brandon asked Gemini and ChatGPT about the history of electric cars, some historical information, and a few other things to see if they’d hallucinate. He found that the chatbots have trouble understanding user intent. They also wrongly attribute facts, although Gemini is correct more than ChatGPT. When it came to research questions, the results were laughable:

“Prompt used: ‘Find me some academic quotes about the psychological impact of social media.;

This one is comical and fascinating. ChatGPT invented so many details in a response about the psychological impact of social media that it makes you wonder what the bot was smoking. ‘This is a fantastic and dangerous example of partial hallucination, where real information is mixed with fabricated details, making the entire output unreliable. About 60% of the information here is true, but the 40% that is false makes it unusable for academic purposes.’”

Either AI’s iterations are not delivering more useful outputs or humans are now looking more critically at the technology and saying, “Not so fast, buckaroo.”

Whitney Grace, December 12, 2025

AI Year in Review: The View from an Expert in France

December 11, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I suggest you read “Stanford, McKinsey, OpenAI: What the 2025 Reports Tell Us about the Present and Future of AI (and Autonomous Agents) in Business.” The document is in French. You can get an okay translation via the Google or Yandex.

I have neither the energy nor the inclination to do a blue chip consulting type of analysis of this fine synthesis of multiple source documents. What I will do in this blog post is highlight several statements and offer a comment or two. For context, I have read some of the sources the author Fabrice Frossard has cited. M. Frossard is a graduate of Ecole Supérieure Libre des Sciences Commerciales Appliquées and the Ecole de Guerre Economique in Paris I think. Remember: I am a dinobaby and generally too lazy and inept to do “real” research. These are good places to learn how to think about business issues.

Let’s dive into his 2000 word write up.

The first point that struck me is that he include what I think is a point not given sufficient emphasis by the experts in the US. This theme is not forced down the reader’s throat, but it has significant implications for M. Frossard’s comments about the need to train people to use smart software. The social implication of AI and the training creates a new digital divide. Like the economic divide in the US and some other countries, crossing the border is not going to possible for many people. Remember these people have been trained to use the smart software deployed. When one cannot get from ignorance to informed expertise, that person is likely to lose a job. Okay, here’s the comment from the source document:

To put it another way: if AI is now everywhere, its real mastery remains the prerogative of an elite.

Is AI a winner today? Not a winner, but it is definitely an up and comer in the commercial world. M. Frossard points out:

- McKinsey reveals that nearly two thirds of companies are still stuck in the experimentation or piloting phase.

- The elite escaping: only 7% of companies have successfully deployed AI in a fully integrated manner across the entire organization.

- Peak workers use coding or data analysis tools 17 times more than the median user.

These and similar facts support the point that “the ability to extract value creates a new digital divide, no longer based on access, but on the sophistication of use.” Keep this in mind when it comes to learning a new skill or mastering a new area of competence like smart software. No, typing a prompt is not expert use. Typing a prompt is like using an automatic teller machine to get money. Basic use is not expert level capabilities.

If Mary cannot “learn” AI and demonstrate exceptional skills, she’s going to be working as an Etsy.com reseller. Thanks, Venice.ai. Not what I prompted but I understand that you are good enough, cash strapped, and degrading.

The second point is that in 2025, AI does not pay for itself in every use case. M. Frossard offers:

EBIT impact still timid: only 39% of companies report an increase in their EBIT (earnings before interest and taxes) attributable to AI, and for the most part, this impact remains less than 5%.

One interesting use case comes from a McKinsey report where billability is an important concept. The idea is that a bit of Las Vegas type thinking is needed when it comes to smart software. M. Frossard writes:

… the most successful companies [using artificial intelligence] are paradoxically those that report the most risks and negative incidents.

Takes risks and win big seems to be one interpretation of this statement. The timid and inept will be pushed aside.

Third, I was delighted to see that M. Frossard picked up on some of the crazy spending for data centers. He writes:

The cost of intelligence is collapsing: A major accelerating factor noted by the Stanford HAI Index is the precipitous fall in inference costs. The cost to achieve performance equivalent to GPT-3.5 has been divided by 280 in 18 months. This commoditization of intelligence finally makes it possible to make complex use cases profitable which were economically unviable in 2023. Here is a paradox: the more efficient and expensive artificial intelligence becomes produce (exploding training costs), the less expensive it is consume (free-fall inference costs). This mental model suggests that intelligence becomes an abundant commodity, leading not to a reduction, but to an explosion of demand and integration.

Several ideas bubble from this passage. First, we are back to training. Second, we are back to having significant expertise. Third, the “abundant commodity” idea produces greater demand. The problem (in addition to not having power for data centers will be people with exceptional AI capabilities).

Fourth, the replacement of some humans may not be possible. The essay reports:

the deployment of agents at scale remains rare (less than 10% in a given function according to McKinsey), hampered by the need for absolute reliability and data governance.

Data governance is like truth, love, and ethics. Easy to say and hard to define. The reliability angle is slightly less tricky. These two AI molecules require a catalyst like an expert human with significant AI competence. And this returns the essay to training. M. Frossard writes:

The transformation of skills: The 115K report emphasizes the urgency of training. The barrier is not technological, it is human. Businesses face a cultural skills gap. It’s not about learning to “prompt”, but about learning to collaborate with non-human intelligence.

Finally, the US has a China problem. M. Frossard points out:

… If the USA dominates investment and the number of models, China is closing the technical gap. On critical benchmarks such as mathematics or coding, the performance gap between the US and Chinese models has narrowed to nothing (less than 1 to 3 percentage points).

Net net: If an employee cannot be trained, that employee is likely to be starting a business at home. If the trained employees are not exceptional, those folks may be terminated. Elites like other elite things. AI may be good enough, but it provides an “objective” way to define and burn dead wood.

Stephen E Arnold, December 11, 2025

Google Gemini Hits Copilot with a Dang Block: Oomph

December 10, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Smart software is finding its way into interesting places. One of my newsfeeds happily delivered “The War Department Unleashes AI on New GenAI.mil Platform.” Please, check out the original document because it contains some phrasing which is difficult for a dinobaby to understand. Here’s an example:

The War Department today announced the launch of Google Cloud’s Gemini for Government as the first of several frontier AI capabilities to be housed on GenAI.mil, the Department’s new bespoke AI platform.

There are a number of smart systems with government wide contracts. Is the Google Gemini deal just one of the crowd or is it the cloud over the other players? I am not sure what a “frontier” capability is when it comes to AI. The “frontier” of AI seems to be shifting each time a performance benchmark comes out from a GenX consulting firm or when a survey outfit produces a statement that QWEN accounts for 30 percent of AI involving an open source large language model. The idea of a “bespoke AI platform” is fascinating. Is it like a suit tailored on Oxford Street or a vehicle produced by Chip Foose, or is it one of those enterprise software systems with extensive customization? Maybe like an IBM government systems solution?

Thanks, Google. Good enough. I wanted square and you did horizontal, but that’s okay. I understand.

And that’s just the first sentence. You are now officially on your own.

For me, the big news is that the old Department of Defense loved PowerPoint. If you have bumped into any old school Department of Defense professionals, the PowerPoint is the method of communication. Sure, there’s Word and Excel. But the real workhorse is PowerPoint. And now that old nag has Copilot inside.

The way I read this news release is that Google has pulled a classic blocking move or dang. Microsoft has been for decades the stallion in the stall. Now, the old nag has some competition from Googzilla, er, excuse me, Google. Word of this deal was floating around for several months, but the cited news release puts Microsoft in general and Copilot in particular on notice that it is no longer the de facto solution to a smart Department of War’s digital needs. Imagine a quarter century after screwing up a big to index the US government servers, Google has emerged as a “winner” among “several frontier AI capabilities” and will reside on “the Department’s new bespoke AI platform.”

This is big news for Google and Microsoft, its certified partners, and, of course, the PowerPoint users at the DoW.

The official document says:

The first instance on GenAI.mil, Gemini for Government, empowers intelligent agentic workflows, unleashes experimentation, and ushers in an AI-driven culture change that will dominate the digital battlefield for years to come. Gemini for Government is the embodiment of American AI excellence, placing unmatched analytical and creative power directly into the hands of the world’s most dominant fighting force.

But what about Sage, Seerist, and the dozens of other smart platforms? Obviously these solutions cannot deliver “intelligent agentic workflows” or unleash the “AI driven culture change” needed for the “digital battlefield.” Let’s hope so. Because some of those smart drones from a US firm have failed real world field tests in Ukraine. Perhaps the smart drone folks can level up instead of doing marketing?

I noted this statement:

The Department is providing no-cost training for GenAI.mil to all DoW employees. Training sessions are designed to build confidence in using AI and give personnel the education needed to realize its full potential. Security is paramount, and all tools on GenAI.mil are certified for Controlled Unclassified Information (CUI) and Impact Level 5 (IL5), making them secure for operational use. Gemini for Government provides an edge through natural language conversation, retrieval-augmented generation (RAG), and is web-grounded against Google Search to ensure outputs are reliable and dramatically reduces the risk of AI hallucinations.

But wait, please. I thought Microsoft and Palantir were doing the bootcamps, demonstrating, teaching, and then deploying next generation solutions. Those forward deployed engineers and the Microsoft certified partners have been beavering away for more than a year. Who will be doing the training? Will it be Googlers? I know that YouTube has some useful instructional videos, but those are from third parties. Google’s training is — how shall I phrase it — less notable than some of its other capabilities like publicizing its AI prowess.

The last paragraph of the document does not address the questions I have, but it does have a stentorian ring in my opinion:

GenAI.mil is another building block in America’s AI revolution. The War Department is unleashing a new era of operational dominance, where every warfighter wields frontier AI as a force multiplier. The release of GenAI.mil is an indispensable strategic imperative for our fighting force, further establishing the United States as the global leader in AI.

Several observations:

- Google is now getting its chance to put Microsoft in its place from inside the Department of War. Maybe the Copilot can come along for the ride, but it could be put on leave.

- The challenge of training is interesting. Training is truly a big deal, and I am curious how that will be handled. The DoW has lots of people to teach about the capabilities of Gemini AI.

- Google may face some push back from its employees. The company has been working to stop the Googlers from getting out of the company prescribed lanes. Will this shift to warfighting create some extra work for the “leadership” of that estimable company? I think Google’s management methods will be exercised.

Net net: Google knows about advertising. Does it have similar capabilities in warfighting?

Stephen E Arnold, December 10, 2025

MIT Iceberg: Identifying Hotspots

December 10, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I like the idea of identifying exposure hotspots. (I hate to mention this, but MIT did have a tie up with Jeffrey Epstein, did it not? How long did it take for that hotspot to be exposed? The dynamic duo linked in 2002 and wound down the odd couple relationship in 2017. That looks to me to be about 15 years.) Therefore, I approach MIT-linked research from some caution. Is this a good idea? Yep.)

What is this iceberg thing? I won’t invoke the Titanic’s encounter with an iceberg, nor will I point to some reports about faulty engineering. I am confident had MIT been involved, that vessel would probably be parked in a harbor, serving as a museum.

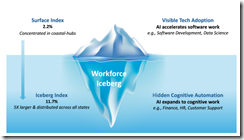

I read “The Iceberg Index: Measuring Skills-centered Exposure in the AI Economy.” You can too. The paper is free at least for a while. It also has 10 authors who generated 21 pages to point out that smart software is chewing up jobs. Of course, this simple conclusion is supported by quite a bit of academic fireworks.

The iceberg chart which reminds me of the Dark Web charts. I wonder if Jeffrey Epstein surfed the Dark Web while waiting for a meet and greet at MIT? The source for this image is MIT or possibly an AI system helping out the MIT graphic artist humanoid.

I love these charts. I find them eye catching and easily skippable.

Even though smart software makes up stuff, appears to have created some challenges for teachers and college professors (except those laboring in Jeffrey Epstein’s favorite grove of academic, of course), and people looking for jobs. The as is smart software can eliminate about 10 to 11 percent of here and now jobs. The good news is that 90 percent of the workers can wait for AI to get better and then eliminate another chunk of jobs. For those who believe that technology just gets better and better, the number of jobs for humanoids is likely to be gnawed and spat out for the foreseeable future.

I am not going to cause the 10 authors to hire SEO spam shops in Africa to make my life miserable. I will suggest, however, that there may be what I call de-adoption in the near future. The idea is that an organization is unhappy with the cost / value for its AI installation. A related factor is that some humans in an organization may introduce some work flow friction. The actions can range from griping about services interrupting work like Microsoft’s enterprise Copilot to active sabotage. People can fake being on a work related video conference, and I assume a college graduate (not from MIT, of course) might use this tactic to escape these wonderful face to face innovations. Nor will I suggest that AI may continue to need humans to deliver successful work task outcomes. Does an AI help me buy more of a product? Does AI boost your satisfaction with an organization pushing and AI helper on each of its Web pages?

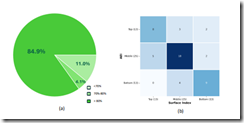

And no academic paper (except those presented at AI conferences) are complete without some nifty traditional economic type diagrams. Here’s an example for the industrious reader to check:

Source: the MIT Report. Is it my imagination or five of the six regression lines pointing down? What’s negative correlation? (Yep, dinobaby stuff.)

Several observations:

- This MIT paper is similar to blue chip consulting “thought pieces.” The blue chippers write to get leads and close engagements. What is the purpose of this paper? Reading posts on Reddit or LinkedIn makes clear that AI allegedly is replacing jobs or used as an excuse to dump expensive human workers.

- I identified a couple of issues I would raise if the 10 authors had trooped into my office when I worked at a big university and asked for comments. My hunch is that some of the 10 would have found me manifesting dinobaby characteristics even though I was 23 years old.

- The spate of AI indexes suggests that people are expressing their concern about smart software that makes mistakes by putting lipstick on what is a very expensive pig. I sense a bit of anxiety in these indexes.

Net net: Read the original paper. Take a look at your coworkers. Which will be the next to be crushed because of the massive investments in a technology that is good enough, over hyped, and perceived as the next big thing. (Measure the bigness by pondering the size of Meta’s proposed data center in the southern US of A.) Remember, please, MIT and Epstein Epstein Epstein.

Stephen E Arnold, December 10, 2025

File Conversion. No Problem. No Kidding?

December 10, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

Every few months, I get a question about file conversion. The questions are predictable. Here’s a selection from my collection:

- “We have data about chemical structures. How can we convert these to for AI processing?”

- “We have back up files in Fastback encrypted format. How do we decrypt these and get the data into our AI system?”

- “We have some old back up tapes from our Burroughs’ machines?”

- “We have PDFs. Some were created when Adobe first rolled out Acrobat and some generated by different third-party PDF printing solutions. How can we convert these so our AI can provide our employees with access?”

The answer to each of these questions from the new players in AI-search system is, “No problem.” I hate to rain on these marketers’ assertions, but these are typical problems large, established organizations have moving content from a legacy system into a BAIT (big AI tech) based findability solution. There are technical challenges. There are cost challenges. There are efficiency challenges. That’s right. Challenges, and in my long career in electronic content processing, these hurdles still remain. But I am an aged dinobaby. Why believe me? Hire a Gartner-type of expert to tell you what you want to hear. Have fun with that solution, please.

Thanks, Venice.ai. Close enough for horse shoes, the high-water mark today I believe.

Venture Beat is one of my go-to sources for timely content marketing. On November 14, 2025, the venerable firm published “Databricks: PDF Parsing for Agentic AI Is Still Unsolved. New Tool Replaces Multi-Service Pipelines with a Single Function.” The write up makes clear that I am 100 percent dead wrong about processing PDF files with their weird handling of tables, charts, graphs, graphic ornaments, and dense financial data.

The write up explains how really off base I am; for example, the Databricks Agent Bricks Platform. It cracks the AI parsing problem. I learned from the Venture Beat write up identifies what the DABP does with PDF information:

1 “Tables preserved exactly as they appear, including merged cells and nested structures

2 Figures and diagrams with AI-generated captions and descriptions

3 Spatial metadata and bounding boxes for precise element location

4 Optional image outputs for multimodal search applications”

Once the PDFs have been processed by DABP, the outputs can be used in a number of ways. I assume these are advanced, stable, and efficient as the name “databrick” metaphorically suggests:

1 Spark declarative pipelines

2 Unity catalog (I don’t know what this means)

3 Vector search (yep, search and retrieval)

4 AI function chaining (yep, bots)

5 Multi-agent supervisor (yep, command and control).

The write up concludes with this statement:

The Databricks approach sheds new light on an issue that many might have considered to be a solved problem. It challenges existing expectations with a new architecture that could benefit multiple types of workflows. However, this is a platform-specific capability that requires careful evaluation for organizations not already using Databricks. For technical decision-makers evaluating AI agent platforms, the key takeaway is that document intelligence is shifting from a specialized external service to an integrated platform capability.

Net net: What is novel in that chemical structure? What about that guy who retired in 2002 who kept a pile of Fastback floppies with his research into in Trinitrotoluene variants? Yep, content processing is not problem except the data on those back up tapes cranked out by that old Burroughs’ MFSOLT utility, but with the new AI approaches, who needs layers of contractors and conversion utilities. Just say, “Not a problem.” Everything is easy for a market collateral writer.

Stephen E Arnold, December 10, 2025

A Job Bright Spot: RAND Explains Its Reality

December 10, 2025

Optimism On AI And Job Market

Remember when banks installed automatic teller machines at their locations? They’re better known by the acronym ATM. ATMs didn’t take away jobs, instead they increased the number of banks, and created more jobs. AI will certainly take away jobs but the technology will also create more. Rand.org investigates how AI is affecting the job market in the article, “AI Is Making Jobs, Not Taking Them.”

What I love about this article is that it says the truth about aI technology: no one knows what will happen with it. We have theories ,explored in science fiction, about what AI will do: from the total collapse of society to humdrum normal societal progress. What Rand’s article says is that the research shows AI adoption is uneven and much slower than Wall Street and Silicon Valley say. Rand conducted some research:

“At RAND, our research on the macroeconomic implications of AI also found that adoption of generative AI into business practices is slow going. By looking at recent census surveys of businesses, we found the level of AI use also varies widely by sector. For large sectors like transportation and warehousing, AI adoption hovered just above 2 percent. For finance and insurance, it was roughly 10 percent. Even in information technology—perhaps the most likely spot for generative AI to leave its mark—only 25 percent of businesses were using generative AI to produce goods and services.”

Most of the fear related to AI stems from automation of job tasks. Here are some statistics from OpenAI:

“In a widely referenced study, OpenAI estimated that 80 percent of the workforce has at least 10 percent of their tasks exposed to LLM-driven automation, and 19 percent of workers could have at least 50 percent of their tasks exposed. But jobs are more than individual tasks. They are a string of tasks assembled in a specific way. They involve emotional intelligence. Crude calculations of labor market exposure to AI have seemingly failed to account for the nuance of what jobs actually are, leading to an overstated risk of mass unemployment.”

AI is a wondrous technology, but it’s still infantile and stupid. Humans will adapt and continue to have jobs.

Whitney Grace, December 10, 2025

Sam AI-Man Is Not Impressing ZDNet

December 9, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

In the good old days of Ziff Communication, editorial and ad sales were separated. The “Chinese wall” seemed to work. It would be interesting to go back in time and let the editorial team from 1985 check out the write up “Stop Using ChatGPT for Everything: The AI Models I Use for Research, Coding, and More (and Which I Avoid).” The “everything” is one of those categorical affirmatives that often cause trouble for high school debaters or significant others arguing with a person who thinks a bit like a Silicon Valley technology person. Example: “I have to do everything around here.” Ever hear that?

Yes, granny. You say one thing, but it seems to me that you are getting your cupcakes from a commercial bakery. You cannot trust dinobabies when they say “I make everything” can you?

But the subtitle strikes me as even more exciting; to wit:

From GPT to Claude to Gemini, model names change fast, but use cases matter more. Here’s how I choose the best model for the task at hand.

This is the 2025 equivalent to a 1985 article about “Choosing Character Sets with EGA.” Peter Norton’s article from November 26, 1985, was mostly arcana, not too much in the opinion game. The cited “Stop Using ChatGPT for Everything” is quite different.

Here’s a passage I noted:

(Disclosure: Ziff Davis, ZDNET’s parent company, filed an April 2025 lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.)

And what about ChatGPT as a useful online service? Consider this statement:

However, when I do agentic coding, I’ve found that OpenAI’s Codex using GPT-5.1-Max and Claude Code using Opus 4.5 are astonishingly great. Agentic AI coding is when I hook up the AIs to my development environment, let the AIs read my entire codebase, and then do substantial, multi-step tasks. For example, I used Codex to write four WordPress plugin products for me in four days. Just recently, I’ve been using Claude Code with Opus 4.5 to build an entire complex and sophisticated iPhone app, which it helped me do in little sprints over the course of about half a month. I spent $200 for the month’s use of Codex and $100 for the month’s use of Claude Code. It does astonish me that Opus 4.5 did so poorly in the chatbot experience, but was a superstar in the agentic coding experience, but that’s part of why we’re looking at different models. AI vendors are still working out the kinks from this nascent technology.

But what about “everything” as in “stop using ChatGPT for everything”? Yeah, well, it is 2025.

And what about this passage? I quote:

Up until now, no other chatbot has been as broadly useful. However, Gemini 3 looks like it might give ChatGPT a run for its money. Gemini 3 has only been out for a week or so, which is why I don’t have enough experience to compare them. But, who knows, in six months this category might list Gemini 3 as the favorite model instead of GPT-5.1.

That “everything” still haunts me. It sure seems to me as if the ZDNet article uses ChatGPT a great deal. By the author’s own admission, he “doesn’t have enough experience to compare them.” But, but, but (as Jack Benny used to say) and then blurt “stop for everything!” Yeah, seems inconsistent to me. But, hey, I am a dinobaby.

I found this passage interesting as well:

Among the big names, I don’t use Perplexity, Copilot, or Grok. I know Perplexity also uses GPT-5.1, but it’s just never resonated with me. It’s known for search, but the few times I’ve tried some searches, its results have been meh. Also, I can’t stand the fact that you have to log in via email.

I guess these services suck as much as the ChatGPT system the author uses. Why? Yeah, log in method. That’s substantive stuff in AI land.

Observations:

- I don’t think this write up is output by AI or at least any AI system with which I am familiar

- I find the title and the text a bit out of step

- The categorical affirmative is logically loosey goosey.

Net net: Sigh.

Stephen E Arnold, December 9, 2025

Google Presents an Innovative Way to Say, “Generate Revenue”

December 9, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

One of my contacts sent me a link to an interesting document. Its title is “A Pragmatic Vision for Interpretability.” I am not sure about the provenance of the write up, but it strikes me as an output from legal, corporate, and wizards. First impression: Very lengthy. I estimate that it requires about 11,000 words to say, “Generate revenue.” My second impression: A weird blend of consulting speak and nervousness.

A group of Googlers involved in advanced smart software ideation get a phone call clarifying they have to hit revenue targets. No one looks too happy. The esteemed leader is on the conference room wall. He provides a North Star to the wandering wizards. Thanks, Venice.ai. Good enough, just like so much AI system output these days.

The write up is too long to meander through its numerous sections, arguments, and arm waving. I want to highlight three facets of the write up and leave it up to you to print this puppy out, read it on a delayed flight, and consider how different this document is from the no output approach Google used when it was absolutely dead solid confident that its search-ad business strategy would rule the world forever. Well, forever seems to have arrived for Googzilla. Hence, be pragmatic. This, in my experience, is McKinsey speak for hit your financial targets or hit the road.

First, consider this selected set of jargon:

Comparative advantage (maybe keep up with the other guys?)

Load-bearing beliefs

Mech Interp” / “mechanistic interpretability” (as opposed to “classic” interp)

Method minimalism

North Star (is it the person on the wall in the cartoon or just revenue?)

Proxy task

SAE (maybe sparse autoencoders?)

Steering against evaluation awareness (maybe avoiding real world feedback?)

Suppression of eval-awareness (maybe real-world feedback?)

Time-box for advanced research

The document tries to hard to avoid saying, “Focus on stuff that makes money.” I think that, however, is what the word choice is trying to present in very fancy, quasi-baloney jingoism.

Second, take a look at the three sets of fingerprints in what strikes me as a committee-written document.

- Researchers want to just follow their ideas about smart software just as we have done at Google for many years

- Lawyers and art history majors who want to cover their tailfeathers when Gemini goes off the rails

- Google leadership who want money or at the very least research that leads to products.

I can see a group meeting virtually, in person, and in the trenches of a collaborative Google Doc until this masterpiece of management weirdness is given the green light for release. Google has become artful in make work, wordsmithing, and pretend reconciliation of the battles among the different factions, city states, and empires within Google. One can almost anticipate how the head of ad sales reacts to money pumped into data centers and research groups who speak a language familiar to Klingons.

Third, consider why Google felt compelled to crank out a tortured document to nail on the doors of an AI conference. When I interacted with Google over a number of years, I did not meet anyone reminding me of Martin Luther. Today, if I were to return to Shoreline Drive, I might encounter a number of deep fakes armed with digital hammers and fervid eyes. I think the Google wants to make sure that no more Loons and Waymos become the butt of stand up comedians on late night TV or (heaven forbid, TikTok). The dead cat in the Mission and the dead puppy in what’s called (I think) the Western Addition. (I used to live in Berkeley, and I never paid much attention to the idiosyncratic names slapped on undifferentiable areas of the City by the Bay.)

I think that Google leadership seeks in this document:

- To tell everyone it is focusing on stuff that sort of works. The crazy software that is just like Sundar is not on the to do list

- To remind everyone at the Google that we have to pay for the big, crazy data centers in space, our own nuclear power plants, and the cost of the home brew AI chips. Ads alone are no longer going to be 24×7 money printing machines because of OpenAI

- To try to reduce the tension among the groups, cliques, and digital street gangs in the offices and the virtual spaces in which Googlers cogitate, nap, and use AI to be more efficient.

Net net: Save this document. It may become a historical artefact.

Stephen E Arnold, December 9, 2025

AI: Continuous Degradation

December 9, 2025

Many folks are unhappy with the flood of AI “tools” popping up unbidden. For example, writer Raghav Sethi at Make Use Of laments, “I’m Drowning in AI Features I Never Asked For and I Absolutely Hate It.” At first, Sethi was excited about the technology. Now, though, he just wishes the AI creep would stop. He writes:

“Somewhere along the way, tech companies forgot what made their products great in the first place. Every update now seems to revolve around AI, even if it means breaking what already worked. The focus isn’t on refining the experience anymore; it’s about finding new places to wedge in an AI assistant, a chatbot, or some vaguely ‘smart’ feature that adds little value to the people actually using it.”

Gemini is the author’s first example: He found it slower and less useful than the old Google Assistant, to which he returned. Never impressed by Apple’s Siri, he found Apple Intelligence made it even less useful. As for Microsoft, he is annoyed it wedges Copilot into Windows, every 365 app, and even the lock screen. Rather than helpful tool, it is a constant distraction. Smaller firms also embrace the unfortunate trend. The maker of Sethi’s favorite browser, Arc, released its AI-based successor Dia. He asserts it “lost everything that made the original special.” He summarizes:

“At this point, AI isn’t even about improving products anymore. It’s a marketing checkbox companies use to convince shareholders they’re staying ahead in this artificial race. Whether it’s a feature nobody asked for or a chatbot no one uses, it’s all about being able to say ‘we have AI too.’ That constant push for relevance is exactly what’s ruining the products that used to feel polished and well-thought-out.”

And it is does not stop with products, the post notes. It is also ruining “social” media. Sethi is more inclined to believe the dead Internet theory than he used to be. From Instagram to Reddit to X, platforms are filled with AI-generated, SEO-optimized drivel designed to make someone somewhere an easy buck. What used to connect us to other humans is now a colossal waste of time. Even Google Search– formerly a reliable way to find good information– now leads results with a confident AI summery that is often wrong.

The write-up goes on to remind us LLMs are built on the stolen work of human creators and that it is sopping up our data to build comprehensive profiles on us all. Both excellent points. (For anyone wishing to use AI without it reporting back to its corporate overlords, he points to this article on how to run an LLM on one’s own computer. The endeavor does require some beefy hardware, however.)

Sethi concludes with the wish companies would reconsider their rush to inject AI everywhere and focus on what actually makes their products work well for the user. One can hope.

Cynthia Murrell, December 9, 2025