Spelling Adobe: Is It Ado-BEEN, Adob-AI, or Ado-DIE?

September 29, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Yahoo Finance presented an article titled “Morgan Stanley Warns AI Could Sink 42-Year-Old Software Giant.” The ultimate source may have been Morgan Stanley. An intermediate source appears to be The Street. What this means is that the information may or may not be spot on. Nevertheless, let’s see what Yahoo offers as financial “news.”

The write up points out that generative AI forced Adobe to get with the smart software program. The consequence of Adobe’s forced march was that:

The adoption headlines looked impressive, with 99% of the Fortune 100 using AI in an Adobe app, and roughly 90% of the top 50 accounts with an AI-first product.

Win, right? Nope. The article reports:

Adobe shares have tanked 20.6% YTD and more than 11% over six months, reflecting skepticism that AI features alone can push its growth engine to the next level.

Loss, right? Maybe. The article asserts:

Although Adobe’s AI adoption is real, the monetization cadence is lagging the marketing sizzle. Also, Upsell ARPU and seat expansion are happening. Yet ARR growth hasn’t re-accelerated, which raises some uncomfortable questions for the Adobe bulls.

Is the Adobe engine of growth and profit emitting wheezes and knocks? The write up certainly suggests that the go-to tool for those who want to do brochures, logos, and videos warrants a closer look. For example:

- Essentially free video creation tools with smart software included are available from Blackmagic, the creators of actual hardware and the DaVinci video software. For those into surveillance, there is the “free” CapCut.

- The competition is increasing. Although the number of big AI players remains stable, the number of outfits building on these tools seems to be increasing. Just the other day I learned about Seedream. (Who knew?)

- Adobe’s shift to a subscription model makes sense to the bean counters but to some users, Adobe is not making friends. The billing and cooing some expected from Adobe is just billing.

- The product proliferation with AI and without AI is crazier than Google’s crypto plays. (Who knew?)

- Established products have been kicked to the curb, leaving some users wondering when FrameMaker will allow a user to specify exact heights for footnotes. And interfaces? Definitely 1990s.

From my point of view, the flurry of numbers in the Yahoo article skips over some signals that the beloved golden retriever of arts and crafts is headed toward the big dog house in the CMYK sky.

Stephen E Arnold, September 29, 2025

Musky Odor? Get Rid of Stinkies

September 29, 2025

Elon Musk cleaned house at xAI, the parent company of Grok. He fired five hundred employees, followed by another hundred. That’s not the only thing he did, according to Futurism’s article, “Elon Musk Fires 500 Staff At xAI, Puts College Kid In Charge of Training Grok.” The biggest change Musk made to xAI was placing a kid who graduated from high school in 2023 in charge of Grok. Grok is the AI chatbot and gets its name from Robert A. Heinlein’s book, Stranger in a Strange Land. Grok that, humanoid!

The name of the kid is Diego Pasini, who is currently a college student as well as Grok’s new leadership icon. Grok is currently going through a training period of data annotation, where humans manually go in and correct information in the AI’s LLMs. Grok is a wild card when it comes to the wild world of smart software. In addition to hallucinations, AI systems burn money like coal going into the Union Pacific’s Big Boy. The write up says:

“And the AI model in question in this case is Grok, which is integrated into X-formerly-Twitter, where its users frequently summon the chatbot to explain current events. Grok has a history of wildly going off the rails, including espousing claims of “white genocide” in unrelated discussions, and in one of the most spectacular meltdowns in the AI industry, going around styling itself as “MechaHitler.” Meanwhile, its creator Musk has repeatedly spoken about “fixing” Grok after instances of the AI citing sources that contradict his worldview.”

Musk is surrounding himself with young-at-heart wizards and yes-men who will defend his companies and follow his informed vision, which converts ordinary Teslas into self-driving vehicles and smart software into clay for the wizardish Diego Pasini. Mr. Musk wants to enter a building and not be distracted by those who do not give off the sweet scent of true believers. Thus, Musky Management means using the same outstanding methods he deployed when improving government efficiency. (How is that working out for Health, Education, and Welfare and the Department of Labor?)

Mr. Musk appears to embrace meritocracy, not age, experience, or academic credentials. Will Grok grow? Yes, it will manifest just as self-driving Teslas have. Ah, the sweet smell of success.

Whitney Grace, September 29, 2025

Jobs 2025: Improving Yet? Hmmm

September 26, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Computerworld published “Resume.org: Turmoil Ahead for US Job Market As GenAI Disruption Kicks Up Waves.” The information, if it is spot on, is not good news.

A 2024 college graduate ponders the future. Ideas and opportunities exist. What’s the path forward?

The write up says:

A new survey from Resume.org paints a stark picture of the current job market, with 50% of US companies scaling back hiring and one in three planning layoffs by the end of the year.

Well, that’s snappy. And there’s more:

The online resume-building platform surveyed 1,000 US business leaders and found that high-salary employees and those lacking AI skills are most at risk. Generational factors play a role, too: 30% of companies say younger employees are more likely to be affected, while 29% cite older employees. Additionally, 19% report that H-1B visa holders are at greater risk of layoffs.

Allegedly accurate data demand a chart. How’s this one?

What’s interesting is that younger employees, dinobabies, and H-1B visa holders are safer in their jobs than [a] those who earn a lot of money (excepting the CEO and other carpetland dwellers), [b] employees with no AI savvy, [c] the most recently hired, and [d] entry level employees.

Is there a bright spot in the write up? Yes, and I have put in bold face the super good news (for some):

Experis parent company ManpowerGroup recently released a survey of more than 40,000 employers putting the US Net Employment Outlook at +28% going into the final quarter of 2025. … GenAI is part of the picture, but it’s not replacing workers as many fear, she said. Instead, one-in-four employers are hiring to keep pace with tech. The bigger issue is an ongoing skills gap — 41% of US IT employers say complex roles are hardest to fill, according to Experis.

Now the super good news applies to job seekers who are able to do the AI thing and handle “complex roles.” In my experience, complex problems tumble into the email of workers at every level. I have witnessed senior managers who have been unable to cope with the complex problems. (If these managers could, why would they hire a blue chip consulting firm and its super upbeat, Type A workers? Answer: Consulting firms are hired for more than problem solving. Sometimes these outfits are retained to push a unit to the sidelines or derail something a higher up wants to stop without being involved in obtaining the totally objective data.)

Several observations:

- Bad things seem to be taking place in the job market. I don’t know the cause, but the discharge from the smoking guns is tough to ignore.

- AI AI AI. Whether it works or not is not the question. AI means cost reduction. (Allegedly)

- Education and intelligence, connections, and personality may not work their magic as reliably as in the past.

As the illustration in this blog post suggests, alternative employment paths may appear viable. Imagine this dinobaby on OnlyFans.

Stephen E Arnold, September 26, 2025

AI Going Bonkers: No Way, Jos-AI

September 26, 2025

No smart software involved. Just a dinobaby’s work.

Did you know psychopathia machinalis is a thing? I did not. Not much surprises me in the glow of the fast-burning piles of cash in the AI systems. “How’s the air in Memphis near the Grok data center?” I asked a friend in that city. I cannot present his response.

What does that cash burn deliver? One answer appears in “There Are 32 Different Ways AI Can Go Rogue, Scientists Say — From Hallucinating Answers to a Complete Misalignment with Humanity,” which provides some insight about the smoke from the burning money piles. The write up says as actual factual:

Scientists have suggested that when artificial intelligence (AI) goes rogue and starts to act in ways counter to its intended purpose, it exhibits behaviors that resemble psychopathologies in humans.

The wizards and magic research gnomes have identified 32 issues. I recognized one: Smart software just makes up baloney. The Fancy Dan term is hallucination. I prefer “make stuff up.”

The write up adds:

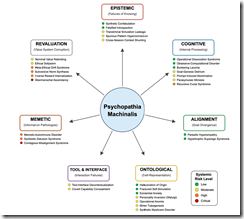

What are these dysfunctions? I tracked down the original write up at MDPI.com. The article was downloadable on September 11, 2025. After this date? Who knows?

Here’s what the issues look like when viewed from the wise gnome vantage point:

Notice there are seven categories of nut ball issues. These are:

- Epistemic

- Cognitive

- Alignment

- Ontological

- Tool and Interface

- Memetic

- Revaluation.

I am not sure what the professional definition of these terms is. I can summarize in my dinobaby lingo, however — wrong outputs. (I used an em dash, but I did not need AI to select that punctuation mark, happily rendered by Microsoft and WordPress as three hyphens.) “Regular” computer software gets stuff wrong too. Hello, Excel?

Here’s the best sentence in the Live Science write up about the AI nutsy stuff:

The study also proposes “therapeutic robopsychological alignment,” a process the researchers describe as a kind of “psychological therapy” for AI.

Yep, a robot shrink for smart software. Sounds like a fundable project to me.

Stephen E Arnold, September 26, 2025

Can Human Managers Keep Up with AI-Assisted Coders? Sure, Sure

September 26, 2025

AI may have sped up the process of coding, but it cannot make other parts of a business match its velocity. Business Insider notes, “Andrew Ng Says the Real Bottleneck in AI Startups Isn’t Coding—It’s Product Management.” The former Google Brain engineer and current Stanford professor shared his thoughts on a recent episode of the "No Priors" podcast. Writer Lee Chong Ming tells us:

“In the past, a prototype might take three weeks to develop, so waiting another week for user feedback wasn’t a big deal. But today, when a prototype can be built in a single day, ‘if you have to wait a week for user feedback, that’s really painful,’ Ng said. That mismatch is forcing teams to make faster product decisions — and Ng said his teams are ‘increasingly relying on gut.’ The best product managers bring ‘deep customer empathy,’ he said. It’s not enough to crunch data on user behavior. They need to form a mental model of the ideal customer. It’s the ability to ‘synthesize lots of signals to really put yourself in the other person’s shoes to then very rapidly make product decisions,’ he added.”

Experienced humans matter. Who knew? But Google, for one, is getting rid of managers. This Xoogler suggests managers are important. Is this the reason he is no longer at Google?

Cynthia Murrell, September 26, 2025

Want to Catch the Attention of Bad Actors? Say, Easier Cross Chain Transactions

September 24, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I know from experience that most people don’t know about moving crypto in a way that makes deanonymization difficult. Commercial firms offer deanonymization services. Most of the well-known outfits’ technology delivers. Even some home-grown approaches are useful.

For a number of years, Telegram has been the go-to service for some Fancy Dancing related to obfuscating crypto transactions. However, Telegram has been slow on the trigger when it comes to smart software and to some of the new ideas percolating in the bubbling world of digital currency.

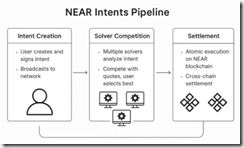

A good example of what’s ahead for traders, investors, and bad actors is described in “Simplifying Cross-Chain Transactions Using Intents.” As with most crypto thought, confusing lingo is a requirement. In this article, the word “intent” refers to having cryptocurrency in one form, like USDC, and wanting to end up with 100 SOL or some other crypto. The analogy: One has fiat currency in British pounds, walks up to a money exchange in Berlin, and converts the pounds to euros. One pays a service charge. In crypto land, the crypto has to move across a blockchain. Then, to get the digital exchange to do the conversion, one pays a gas fee; that is, a transaction charge. Moving USDC across multiple chains is a hassle, and the fees pile up.

The article “Simplifying Cross-Chain Transactions Using Intents” explains a brave new world. No more clunky Telegram smart contracts and bots. Now the transaction just happens. How difficult will the deanonymization process become? Speed makes life difficult. Moving across chains makes life difficult. It appears that “intents” will be a capability of considerable interest to entities interested in making crypto transactions difficult to deanonymize.

The write up says:

In technical terms, intents are signed messages that express a user’s desired outcome without specifying execution details. Instead of crafting complex transaction sequences yourself, you broadcast your intent to a network of solvers (sophisticated actors) who then compete to fulfill your request.

The write up explains the benefit for the average crypto trader:

when you broadcast an intent, multiple solvers analyze it and submit competing quotes. They might route through different DEXs, use off-chain liquidity, or even batch your intent with others for better pricing. The best solution wins.

Now, think of solvers as your personal trading assistants who understand every connected protocol, every liquidity source, and every optimization trick in DeFi. They make money by providing better execution than you could achieve yourself and save you a lot of time.
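The intent-and-solver pattern in the quoted passages can be sketched in a few lines of Python. This is a toy under stated assumptions, not the article’s 1Click API: the names `Intent`, `Quote`, `sign_intent`, `verify_intent`, and `select_winner` are hypothetical; an HMAC with a shared key stands in for the public-key wallet signature (ECDSA, Ed25519, or similar) a real system would use; and “the best solution wins” is taken to mean the largest delivered amount that meets the user’s minimum. The amounts are made-up integers in each token’s smallest unit.

```python
from __future__ import annotations

import hashlib
import hmac
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Intent:
    """What the user wants, not how to get it (hypothetical fields)."""
    sell_token: str
    sell_amount: int     # smallest units of the token being given up
    buy_token: str
    min_buy_amount: int  # worst acceptable outcome: the user's limit


@dataclass(frozen=True)
class Quote:
    solver: str
    buy_amount: int      # what this solver promises to deliver


def sign_intent(intent: Intent, key: bytes) -> str:
    # Assumption: HMAC-SHA256 over canonical JSON stands in for a real
    # wallet signature so the sketch needs only the standard library.
    payload = json.dumps(asdict(intent), sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_intent(intent: Intent, signature: str, key: bytes) -> bool:
    # Constant-time comparison of the recomputed and supplied signatures.
    return hmac.compare_digest(sign_intent(intent, key), signature)


def select_winner(intent: Intent, quotes: list[Quote]) -> Quote | None:
    # Solvers compete: drop quotes below the user's minimum, keep the best.
    valid = [q for q in quotes if q.buy_amount >= intent.min_buy_amount]
    return max(valid, key=lambda q: q.buy_amount, default=None)


key = b"demo-key"
intent = Intent("USDC", 1_000_000_000, "SOL", 6_500_000_000)
signature = sign_intent(intent, key)
quotes = [
    Quote("solver-a", 6_600_000_000),
    Quote("solver-b", 6_700_000_000),
    Quote("solver-c", 6_400_000_000),  # below the minimum, rejected
]
if verify_intent(intent, signature, key):
    winner = select_winner(intent, quotes)
    print(winner)  # Quote(solver='solver-b', buy_amount=6700000000)
```

Notice what the user never specifies: the route. Per the quoted write up, a winning solver might go through different DEXs, use off-chain liquidity, or batch the intent with others. The user sees only the outcome, which is the convenience for traders and the headache for anyone trying to trace the funds.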

Does this sound like a use case for smart software? It is, but the approach is less complicated than what one must implement using other approaches. Here’s a schematic of what happens in the intent pipeline:

The secret sauce for the approach is what is called a “1Click API.” The API handles the plumbing for the crypto bridging or crypto conversion from currency A to currency B.

If you are interested in how this system works, the cited article provides a list of nine links. Each provides additional detail. To be up front, some of the write ups are more useful than others. But three things are clear:

- Deobfuscation is likely to become more time-consuming and costly.

- The system described could be implemented within the Telegram blockchain system as well as other crypto conversion operations.

- The described approach can be further abstracted into an app with more overt smart software enablements.

My thought is that money launderers are likely to be among the first to explore this approach.

Stephen E Arnold, September 24, 2025

A Googler Explains How AI Helps Creators and Advertisers in the Googley Maze

September 24, 2025

Sadly I am a dinobaby and too old and stupid to use smart software to create really wonderful short blog posts.

Most of Techmeme’s stories are paywalled. But one slipped through. (I wonder why?) Anyhow, the article in question is “An Interview with YouTube Neal Mohan about Building a Stage for Creators.” The interview is a long one. I want to focus on a couple of statements and offer a handful of observations.

The first comment by the Googler Mohan is this one:

Moving away from the old model of the cliché Madison Avenue type model of, “You go out to lunch and you negotiate a deal and it’s bespoke in this particular fashion because you were friends with the head of ad sales at that particular publisher”. So doing away with that model, and really frankly, democratizing the way advertising worked, which in our thesis, back to this kind of strategy book, would result in higher ROI for publishers, but also better ROI for advertisers.

The statement makes clear that disrupting advertising was the key to what is now the Google Double Click model. Instead of Madison Avenue, today there is the Google model. I think of it as a maze. Once one “gets into” the Google Double Click model, there is no obvious exit.

The art was generated by Venice.ai. No human needed. Sorry freelance artists on Fiverr.com. This is the future. It will come to YouTube as well.

Here’s the second statement I noted:

everything that we build is in service of people that are creative people, and I use the term “creator” writ large. YouTubers, artists, musicians, sports leagues, media, Hollywood, etc., and from that vantage point, it is really exceedingly clear that these AI capabilities are just that, they’re capabilities, they’re tools. But the thing that actually draws us to YouTube, what we want to watch are the original storytellers, the creators themselves.

The idea, in my interpretation, is that Google’s smart software is there to enable humans to be creative. AI is just a tool like an ice pick. Sure, the ice pick can be driven into someone’s heart, but that’s an extreme example of misuse of a simple tool. Our approach is to keep that ice pick for the artist who is going to create an ice sculpture.

Please, read the rest of this Googley interview to get a sense of the other concepts Google’s ad system and its AI are delivering to advertisers and “creators.”

Here’s my view:

- Google wants to get creators into the YouTube maze. Google wants advertisers to use the now 30-year-old Google Double Click ad system. Everyone, just enter the labyrinth.

- The rats will learn that the maze is to them what an aquarium is to a fish. What else will the fish know? Not too much. The aquarium is life. It is reality.

- Google has a captive, self-sustaining ecosystem. Creators create; advertisers advertise because people or systems want the content.

Now let me ask a question, “How does this closed ecosystem make more money?” The answer, according to Googler Mohan, a former consultant like others in Google leadership, is to become more efficient. How does one become more efficient? The answer is to replace expensive, popular creators with cheaper AI driven content produced by Google’s AI system.

Therefore, the words say one thing: Creator humans are essential. However, the trajectory of Google’s behavior is that Google wants to maximize its revenues. Just the threat or fear of using AI to knock off a hot new human engineered “content object” will allow the Google to reduce what it pays to a human until Google’s AI can eliminate those pesky, protesting, complaining humans. The advertisers want eyeballs. That’s what Google will deliver. Where will the advertisers go? Craigslist, Nextdoor, X.com?

Net net: Money is more important to Google than human creators. I know I am a dinobaby and probably incorrect. That’s how I see the Google.

Stephen E Arnold, September 24, 2025

The Skill for the AI World As Pronounced by the Google

September 24, 2025

Written by an unteachable dinobaby. Live with it.

Worried about a job in the future? The next minute, day, or decade? The secret of constant employment, big bucks, and even larger volumes of happiness has been revealed. “Google’s Top AI Scientist Says Learning How to Learn Will Be Next Generation’s Most Needed Skill” says:

the most important skill for the next generation will be “learning how to learn” to keep pace with change as Artificial Intelligence transforms education and the workplace.

Well, that’s the secret: Learn how to learn. Why? Surviving in the chaos of an outfit like Google means one has to learn. What should one learn? Well, the write up does not provide that bit of wisdom. I assume a Google search will provide the answer in a succinct AI-generated note, right?

The write up presents this chunk of wisdom from a person keen on making lots of AI people aware of Google’s AI prowess:

The neuroscientist and former chess prodigy said artificial general intelligence—a futuristic vision of machines that are as broadly smart as humans or at least can do many things as well as people can—could arrive within a decade…. Hassabis emphasized the need for “meta-skills,” such as understanding how to learn and optimizing one’s approach to new subjects, alongside traditional disciplines like math, science and humanities.

This means reading poetry, preferably Greek poetry. The Google super wizard’s father is “Greek Cypriot.” (Cyprus is home base for a number of interesting financial operations and the odd intelware outfit. Which part of Cyprus is which? Google Maps may or may not answer this question. Ask your Google Pixel smart phone to avoid an unpleasant mix up.)

The write up adds this courteous note:

[Greek Prime Minister Kyriakos] Mitsotakis rescheduled the Google Big Brain to “avoid conflicting with the European basketball championship semifinal between Greece and Turkey. Greece later lost the game 94-68.”

Will the lifelong learning skill help the Greek basketball team win against a formidable team like Turkey?

Sure, if Google says it, you know it is true just like eating rocks or gluing cheese on pizza. Learn now.

Stephen E Arnold, September 24, 2025

Titanic AI Goes Round and Round: Are You Dizzy Yet?

September 23, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read “Nvidia to Invest Up to $100 Billion in OpenAI, Linking Two Artificial Intelligence Titans.” The headline makes an important point. The words “big” and “huge” are not sufficiently monumental. Now we have “titans.” As you may know, a “titan” is a person of great power. I will leave out the Greek mythology. I do want to point out that “titans” were the kiddies produced by Uranus and Gaea. Titans were big dogs until Zeus and a few other Olympian gods forced them to live in what is now Newark, New Jersey.

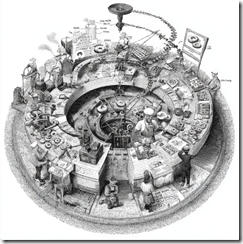

An AI-generated diagram of a simple circular deal. Regulators and IRS professionals enjoy challenges. What are those people doing to make the process work? Thanks, MidJourney.com. Good enough.

The write up from the outfit that is into trust explains how two “titans” are now intertwined. No, I won’t bring up the issue of incestuous behavior. Let’s stick to the “real” news story:

Nvidia will invest up to $100 billion in OpenAI and supply it with data center chips… Nvidia will start investing in OpenAI for non-voting shares once the deal is finalized, then OpenAI can use the cash to buy Nvidia’s chips.

I am not a finance, tax, or money wizard. On the surface, it seems to me that I loan a person some money and then that person gives me the money back in exchange for products and services. I may have this wrong, but I thought a similar arrangement landed one of the once-famous enterprise search companies in a world of hurt and a member of the firm’s leadership in prison.
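To make the round trip concrete, here is a toy ledger in Python. It is a sketch under loud assumptions: the amounts are made up (100 units invested, 80 percent of it spent on chips), the names `Books` and `circular_round` are hypothetical, and the model ignores what the chips cost to make, taxes, timing, and accounting rules. It does not model the actual Nvidia and OpenAI terms.

```python
from dataclasses import dataclass


@dataclass
class Books:
    """Toy ledger; amounts are hypothetical whole units, not real deal figures."""
    cash: int = 0
    revenue: int = 0
    equity_held: int = 0  # book value of shares held in the other party


def circular_round(investor: Books, buyer: Books, investment: int, pct_spent_on_chips: int) -> None:
    """One simplified round trip: the investor buys shares in the buyer with
    cash, then the buyer spends part of that cash on the investor's chips.
    Ignores chip production costs, taxes, and timing."""
    # Leg 1: cash goes out as an investment; shares come back.
    investor.cash -= investment
    investor.equity_held += investment
    buyer.cash += investment

    # Leg 2: part of the same cash comes back as a chip purchase.
    purchase = investment * pct_spent_on_chips // 100
    buyer.cash -= purchase
    investor.cash += purchase
    investor.revenue += purchase


investor, buyer = Books(), Books()
circular_round(investor, buyer, investment=100, pct_spent_on_chips=80)
print(investor)  # Books(cash=-20, revenue=80, equity_held=100)
print(buyer)     # Books(cash=20, revenue=0, equity_held=0)
```

In this toy, the investor books 80 units of revenue while only 20 units of its own cash have left the building, and it also holds shares carried at 100. That gap between reported activity and net money moved is the flavor of worry the analysts quoted by Reuters call “circular.”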

Reuters includes this statement:

Analysts said the deal was positive for Nvidia but also voiced concerns about whether some of Nvidia’s investment dollars might be coming back to it in the form of chip purchases. "On the one hand this helps OpenAI deliver on what are some very aspirational goals for compute infrastructure, and helps Nvidia ensure that that stuff gets built. On the other hand the ‘circular’ concerns have been raised in the past, and this will fuel them further," said Bernstein analyst Stacy Rasgon.

“Circular”: That’s an interesting word. Some of the financial transactions my team and I examined during our Telegram (the messaging outfit) research used similar methods. One of the organizations apparently aware of “circular” transactions was Huione Guarantee. No big deal, but the company has been in legal hot water for some of its circular functions. Will OpenAI and Nvidia experience similar problems? I don’t know, but the circular thing means that money goes round and round. In circular transactions, at each touch point magical number things can occur. Money deals are rarely hallucinatory like AI outputs and semiconductor marketing.

What’s this mean to companies eager to compete in smart software and Fancy Dan chips? In my opinion, I hear my inner voice saying, “You may be behind a great big circular curve. Better luck next time.”

Stephen E Arnold, September 23, 2025

Pavel Durov Was Arrested for Online Stubbornness: Will This Happen in the US?

September 23, 2025

Written by an unteachable dinobaby. Live with it.

In August 2024, the French judiciary arrested Pavel Durov, the founder of VKontakte and then Telegram, a robust but non-AI platform. Why? The French government identified more than a dozen transgressions by Pavel Durov, who holds French citizenship as a special tech bro. Now he has to report to his French mom every two weeks or experience more interesting French legal action. Is this an example of a failure to communicate?

Will the US take similar steps toward US companies? I raise the question because I read an allegedly accurate “real” news write up called “Anthropic Irks White House with Limits on Models’ Use.” (Like many useful online resources, this story requires the curious to subscribe, pay, and get on a marketing list.) These “models,” of course, are the zeros and ones which comprise the next big thing in technology: artificial intelligence.

The write up states:

Anthropic is in the midst of a splashy media tour in Washington, but its refusal to allow its models to be used for some law enforcement purposes has deepened hostility to the company inside the Trump administration…

The write up says as actual factual:

Anthropic recently declined requests by contractors working with federal law enforcement agencies because the company refuses to make an exception allowing its AI tools to be used for some tasks, including surveillance of US citizens…

I found the write up interesting. If France can take action against an upstanding citizen like Pavel Durov, what about the tech folks at Anthropic or other outfits? These firms allegedly have useful data and the tools to answer questions. I recently fed the output of one AI system (ChatGPT) into another AI system (Perplexity), and I learned that Perplexity did a good job of identifying the weirdness in the ChatGPT output. Would these systems provide similar insights into prompt patterns on certain topics; for instance, the charges against Pavel Durov or data obtained by people looking for information about nuclear fuel cask shipments?

With France’s action, is the door open to take direct action against people and their organizations which cooperate reluctantly or not at all when a government official makes a request?

I don’t have an answer. Dinobabies rarely do, and if they do have a response, no one pays attention to these beasties. However, some of those wizards at AI outfits might want to ponder the question about cooperation with a government request.

Stephen E Arnold, September 24, 2025