Mistakes Are Biological. Do Not Worry. Be Happy

December 18, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I read a short summary of a longer paper written by a person named Paul Arnold. I hope this is not misinformation. I am not related to Paul. But this could be a mistake. This dinobaby makes many mistakes.

The article that caught my attention is titled “Misinformation Is an Inevitable Biological Reality Across Nature, Researchers Argue.” The short item was edited by a human named Gaby Clark. The short essay was reviewed by Robert Edan. I think the idea is to make clear that nothing in the article is made up and it is not misinformation.

Okay, but… Let’s look at a couple of short statements from the write up about misinformation. (I don’t want to go “meta,” but the possibility exists that the short item is stuffed full of misinformation. What do you think?)

Here’s an image capturing a youngish teacher outputting misinformation to his students. Okay, Qwen. Good enough.

Here’s snippet one:

… there is nothing new about so-called “fake news…”

Okay, does this mean that software that predicts the next word and gets it wrong is part of this old, long-standing trajectory for biological creatures? For me, the idea that cobbled-together algorithms get a pass because “there is nothing new about so-called ‘fake news’” shifts the discussion about smart software. Instead of worrying about getting only about two thirds of questions right, one concludes that the smart software is good enough.

A second snippet says:

Working with these [the models Paul Arnold and probably others developed] led the team to conclude that misinformation is a fundamental feature of all biological communication, not a bug, failure, or other pathology.

Introducing the notion of “pathology” adds a bit of context to misinformation. Is a human-assembled smart software system, trained on content that includes misinformation and processed by algorithms that may be biased in some way, just the way the world works? I am not sure I am ready to flash the green light for some of the AI outfits to output what is demonstrably wrong, distorted, weaponized, or non-verifiable.

What puzzled me is that the article points to itself and to an article by Ling-Wei Kong et al., “A Brief Natural History of Misinformation,” in the Journal of the Royal Society Interface.

Here’s the link to the original article. The authors of the publication are, if the information on the Web instance of the article is accurate, Ling-Wei Kong, Lucas Gallart, Abigail G. Grassick, Jay W. Love, Amlan Nayak, and Andrew M. Hein. By my count of the names listed, six people worked on the “original” article. The three people identified in the short version worked on that item. This adds up to nine people.

Apparently the group believes that misinformation is a part of the biological being. Therefore, there is no cause to worry. In fact, there are mechanisms to deal with misinformation. Obviously a duck quack that sends a couple of hundred mallards aloft can protect the flock. A minimum of one duck needs to check out the threat only to find nothing is visible. That duck heads back to the pond. Maybe others follow? Maybe the duck ends up alone in the pond. The ducks take the viewpoint, “Better safe than sorry.”

But when a system or a mobile device outputs incorrect or weaponized information to a user, there may not be a flock around. If there is a group of people, none of them may be able to identify the incorrect or weaponized information. Thus, the biological propensity to be wrong bumps into an output which may be shaped to cause a particular effect or to alter a human’s way of thinking.

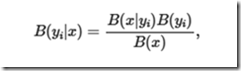

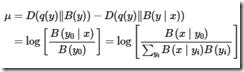

Most people will not sit down and take a close look at this evidence of scientific rigor:

and then follow the logic that leads to:

I am pretty old, but it looks as if Mildred Martens, my old math teacher, would suggest the KL divergence wants me to assume some things about q(y). On the right side, I think I see some good old Bayesian stuff, but I didn’t see the steps to take me from the KL divergence to the log posterior-to-prior ratio. Would Miss Martens ask a student like me to clarify the transitions, fix up the notation, and eliminate issues between expectation vs. pointwise values? Remember, please, that I am a dinobaby and I could be outputting misinformation about misinformation.
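For anyone who wants to see why the expectation vs. pointwise distinction matters, here is a minimal sketch. It is not the paper's derivation, and the two-outcome prior and posterior, plus the function names, are made up for illustration. It leans only on the textbook definition: the KL divergence of a posterior from a prior is the posterior-weighted average of the log posterior-to-prior ratio.

```python
import math


def log_ratios(posterior, prior):
    """Pointwise log posterior-to-prior ratio, one value per outcome.

    Assumes every probability is strictly positive (true for the toy data below).
    """
    return [math.log(p / q) for p, q in zip(posterior, prior)]


def kl_divergence(posterior, prior):
    """KL(posterior || prior): the expectation of the log ratio under the posterior."""
    return sum(p * r for p, r in zip(posterior, log_ratios(posterior, prior)))


# Hypothetical example: outcomes are "threat" and "no threat".
prior = [0.5, 0.5]      # belief before the quack (assumed numbers)
posterior = [0.9, 0.1]  # belief after the quack (assumed numbers)

pointwise = log_ratios(posterior, prior)
kl = kl_divergence(posterior, prior)

print("pointwise log ratios:", pointwise)
print("KL divergence (their posterior-weighted average):", kl)
```

In this toy case one pointwise ratio is positive and the other is negative, while the weighted average is non-negative. Swapping an averaged quantity for a pointwise one, or the reverse, changes what is being claimed, which is the sort of transition Miss Martens would want spelled out.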

Several observations:

- If one accepts this line of reasoning, misinformation is emergent. It is somehow part of the warp and woof of living and communicating. My take is that one should expect misinformation.

- Anything created by a biological entity will output misinformation. My take on this is that one should expect misinformation everywhere.

- I worry that researchers tackling information, smart software, and related disciplines may work very hard to prove that misinformation is inevitable but that biological organisms can carry on.

I am not sure if I feel comfortable with the normalization of misinformation. As a dinobaby, I think the function of education is to anchor those completing a course of study in a collection of generally agreed upon facts. With misinformation everywhere, why bother?

Net net: One can read this research and the summary article as an explanation of why smart software is just fine. Accept the hallucinations and misstatements. Errors are normal. The ducks are fine. The AI users will be fine. The models will get better. With this framing that misinformation is everywhere, the results say, “Knock off the criticism of smart software. You will be fine.”

I am not so sure.

Stephen E Arnold, December 18, 2025
