An AI Wrapper May Resolve Some Problems with Smart Software

July 15, 2025

No smart software involved with this blog post. (An anomaly, I know.)

For those with big bucks sunk in smart software that chases its tail around large language models, I learned about a clever adjustment, one that could pour some water on those burning black holes of cash.

A 36-page “paper” appeared on ArXiv on July 4, 2025 (Happy Birthday, America!). The original paper was “revised” and posted on July 8, 2025. You can read the July 8, 2025, version of “MemOS: A Memory OS for AI System” and monitor ArXiv for subsequent updates.

I recommend that AI enthusiasts download the paper and read it. Today, content has a tendency to disappear or end up behind paywalls of one kind or another.

The authors of the paper come from outfits in China working on a wide range of smart software. These institutions explore smart wastewater as well as autonomous kinetic command-and-control systems. Two organizations fund the “authors” of the research and the ArXiv write up. One is a start up called MemTensor (Shanghai) Technology Co. Ltd. The idea is to take good old Google tensor learnings and make them less stupid. The other outfit is the Research Institute of China Telecom. This entity is where interesting things like quantum communication and novel applications of ultra-high frequencies are explored.

Based on my reading of the paper, MemOS adds a “layer” of knowledge functionality to large language models. The approach remembers the user’s or another system’s “knowledge process.” The idea is that instead of every prompt being a brand new sheet of paper, the LLM has a functional history or “digital notebook.” The entries in this notebook can be used to provide dynamic context for a user’s or another system’s query, prompt, or request. One application is “smart wireless”; another is context-aware kinetic devices.
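
To make the “digital notebook” idea concrete, here is a minimal Python sketch of a memory layer wrapped around a model call. It is an illustration, not MemOS: the names `MemoryNotebook`, `build_prompt`, `ask`, and the stand-in `fake_llm` are hypothetical, and the crude word-overlap recall is an assumption in place of whatever retrieval the paper actually describes.

```python
from dataclasses import dataclass, field


@dataclass
class NotebookEntry:
    """One remembered exchange: what was asked and what came back."""
    prompt: str
    response: str


@dataclass
class MemoryNotebook:
    """Hypothetical 'digital notebook' kept outside the model (not the MemOS API)."""
    entries: list[NotebookEntry] = field(default_factory=list)

    def remember(self, prompt: str, response: str) -> None:
        self.entries.append(NotebookEntry(prompt, response))

    def recall(self, query: str, k: int = 2) -> list[NotebookEntry]:
        # Assumption: rank past prompts by lowercase words shared with the new query.
        # Punctuation is not stripped, so "band" and "band?" count as different words.
        query_words = set(query.lower().split())
        scored = []
        for entry in self.entries:
            overlap = len(query_words & set(entry.prompt.lower().split()))
            if overlap > 0:
                scored.append((overlap, entry))
        # Highest overlap first; Python's sort is stable, so ties keep insertion order.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [entry for _, entry in scored[:k]]


def build_prompt(notebook: MemoryNotebook, query: str) -> str:
    """Prepend recalled notebook entries to the new query as dynamic context."""
    lines = [f"Earlier: {e.prompt} -> {e.response}" for e in notebook.recall(query)]
    lines.append(f"Now: {query}")
    return "\n".join(lines)


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call; it ignores the prompt.
    return "stub answer"


def ask(notebook: MemoryNotebook, query: str) -> str:
    """Wrapper: enrich the prompt from memory, call the model, then record the exchange."""
    answer = fake_llm(build_prompt(notebook, query))
    notebook.remember(query, answer)
    return answer


if __name__ == "__main__":
    notebook = MemoryNotebook()
    ask(notebook, "What frequency band does the base station use?")
    # The follow-up shares "base" and "station", so the earlier exchange rides along.
    print(build_prompt(notebook, "Which base station handles that band?"))
```

Run as a script, it prints the follow-up prompt with an `Earlier:` line carrying the first exchange. The model itself never changes; only the prompt it receives does. A production system would presumably replace the word-overlap scoring with something sturdier, such as embedding similarity.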

I am not sure about some of the assertions in the write up, for example, the performance gains, the benchmark results, and similar data points.

However, I think that the idea of a higher level of abstraction combined with enhanced memory of what the user or the system requests is interesting. The approach resembles a good one from the IBM world: take an “old” AS/400 (or whatever IBM calls these machines now) and interact with it via a separate computing system. Request an output from the AS/400. Get the data from an I/O device the AS/400 supports. Interact with those data on the separate but “loosely coupled” computer. Then reverse the process and let the AS/400 do its thing with the input data in its own quite tricky workflow. Inefficient? You bet. Does it prevent the AS/400 from trashing its memory? Most of the time, it sure does.

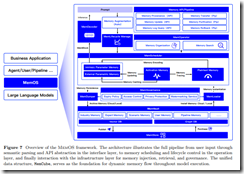

The authors include a pastel graphic to make clear that the separation from the LLM is what I assume will be positioned as an original, unique, never-before-considered innovation:

Now, does it work? In a laboratory, absolutely. At the Syracuse Parallel Processing Center, my colleagues presented a demonstration to Hillary Clinton. The search, text, and video thing behaved like the trained tiger did before it attacked Roy in the Siegfried & Roy animal act in October 2003.

Are the data reproducible? Good question. This is, however, a time when fake data circulate and synthetic government officials post videos and make telephone calls. Time will reveal the efficacy of the “breakthrough.”

Several observations:

- The write up is a component of the “China smart, US dumb” marketing campaign

- The number of institutions involved, the presence of a Chinese start up, and the very big-time Research Institute of China Telecom send the message that this AI expertise is diffused across numerous institutions

- The timing of the release of the paper is delicious: Happy Birthday, Uncle Sam.

Net net: Perhaps Meta should be hiring AI wizards from the Middle Kingdom?

Stephen E Arnold, July 15, 2025