Moral Decline? Nah, Just Your Perception at Work

June 12, 2023

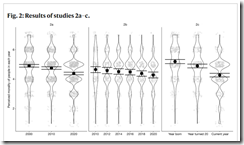

Here’s a graph from the academic paper “The Illusion of Moral Decline.”

Is it even necessary to read the complete paper after studying the illustration? Of course not. Nevertheless, let’s look at a couple of statements in the write up to get ready for that in-class, blank bluebook semester examination, shall we?

Statement 1 from the write up:

… objective indicators of immorality have decreased significantly over the last few centuries.

Well, there you go. That’s clear. Imagine what life was like before modern day morality kicked in.

Statement 2 from the write up:

… we suggest that one of them has to do with the fact that when two well-established psychological phenomena work in tandem, they can produce an illusion of moral decline.

Okay. Illusion. This morning I drove past people sleeping under an overpass. A police vehicle with lights and siren blaring raced past me as I drove to the gym (a gym which is no longer open 24×7 due to safety concerns). I listened to a report about people struggling amidst the flood water in Ukraine. In short, a typical morning in rural Kentucky. Oh, I forgot to mention the gunfire I could hear as I walked my dog at a local park. I hope it was squirrel hunters, but in this area who knows?

MidJourney created this illustration of the paper’s authors celebrating the publication of their study about the illusion of immorality. The behavior is a manifestation of morality itself, and it is a testament to the importance of crystal clear graphs.

Statement 3 from the write up:

Participants in the foregoing studies believed that morality has declined, and they believed this in every decade and in every nation we studied….About all these things, they were almost certainly mistaken.

My take on the study includes these perceptions (yours hopefully will be more informed than mine):

- The influence of social media gets slight attention

- Large-scale immoral actions get little attention. I am tempted to list examples, but I am afraid of legal eagles and aggrieved academics with time on their hands.

- The impact of intentionally weaponized information on behavior in the US and other nation states which provide an infrastructure suitable to permit wide use of digitally-enabled content gets little attention as well.

In order to avoid problems, I will list some common and proper nouns or phrases and invite you to think about these in terms of the glory word “morality”. Have fun with your mental gymnastics:

- Catholic priests and children

- Covid information and pharmaceutical companies

- Epstein, Andrew, and MIT

- Special operation and elementary school children

- Sudan and minerals

- US politicians’ campaign promises.

Wasn’t that fun? I did not have to mention social media, self harm, people between the ages of 10 and 16, and statements like “Senator, thank you for that question…”

I would not do well with a written test watched by attentive journal authors. By the way, isn’t perception reality?

Stephen E Arnold, June 12, 2023

Google: FUD Embedded in the Glacier Strategy

June 9, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-12.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Fly to Alaska. Stand on a glacier and let the guide explain that the glacier moves, just slowly. That’s the Google smart software strategy in a nutshell. Under Code Red or Red Alert or “My goodness, Microsoft is getting media attention for something other than lousy code and security services. We have to do something sort of quickly.”

One facet of the game plan is to roll out a bit of FUD or fear, uncertainty, and doubt. That will send chills to some interesting places, won’t it? You can see this in action in the article “Exclusive: Google Lays Out Its Vision for Securing AI.” Feel the fear because AI will kill humanoids unless… unless you rely on Googzilla. This is the only creature capable of stopping the evil that irresponsible smart software will unleash upon you, everyone, maybe your dog too.

The manager of strategy says, “I think the fireball of AI security doom is going to smash us.” The top dog says, “I know. Google will save us.” Note to image trolls: This outstanding illustration was generated in a nonce by MidJourney, not an under-compensated creator in Peru.

The write up says:

Google has a new plan to help organizations apply basic security controls to their artificial intelligence systems and protect them from a new wave of cyber threats.

Note the word “plan”; that is, the here and now equivalent of vaporware or stuff that can be written about and issued as “real news.” The guts of the Google PR is that Google has six easy steps for its valued users to take. Each step brings that user closer to the thumping heart of Googzilla; to wit:

- Assess what existing security controls can be easily extended to new AI systems, such as data encryption;

- Expand existing threat intelligence research to also include specific threats targeting AI systems;

- Adopt automation into the company’s cyber defenses to quickly respond to any anomalous activity targeting AI systems;

- Conduct regular reviews of the security measures in place around AI models;

- Constantly test the security of these AI systems through so-called penetration tests and make changes based on those findings;

- And, lastly, build a team that understands AI-related risks to help figure out where AI risk should sit in an organization’s overall strategy to mitigate business risks.

Does this sound like Mandiant-type consulting backed up by Google’s cloud goodness? It should because when one drinks Google juice, one gains Google powers over evil and also Google’s competitors. Google’s glacier strategy is advancing… slowly.

Stephen E Arnold, June 9, 2023

Microsoft Code: Works Great. Just Like Bing AI

June 9, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-8.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

For Windows users struggling with certain apps, help is not on the way anytime soon. In fact, reports TechRadar, “Windows 11 Is So Broken that Even Microsoft Can’t Fix It.” The issues started popping up for some users of Windows 11 and Windows 10 in January and seem to coincide with damaged registry keys. For now the company’s advice sounds deceptively simple: ditch its buggy software. Not a great look. Writer Matt Hanson tells us:

“On Microsoft’s ‘Health’ webpage regarding the issue, Microsoft notes that the ‘Windows search, and Universal Windows Platform (UWP) apps might not work as expected or might have issues opening,’ and in a recent update it has provided a workaround for the problem. Not only is the lack of a definitive fix disappointing, but the workaround isn’t great, with Microsoft stating that to ‘mitigate this issue, you can uninstall apps which integrate with Windows, Microsoft Office, Microsoft Outlook or Outlook Calendar.’ Essentially, it seems like Microsoft is admitting that it’s as baffled as us by the problem, and that the only way to avoid the issue is to start uninstalling apps. That’s pretty poor, especially as Microsoft doesn’t list the apps that are causing the issue, just that they integrate with ‘Windows, Microsoft Office, Microsoft Outlook or Outlook Calendar,’ which doesn’t narrow it down at all. It’s also not a great solution for people who depend on any of the apps causing the issue, as uninstalling them may not be a viable option.”

The write-up notes Microsoft says it is still working on these issues. Will it release a fix before most users have installed competing programs or, perhaps, even a different OS? Or maybe Windows 11 snafus are just what is needed to distract people from certain issues related to the security of Microsoft’s enterprise software. Will these code faults surface (no pun intended) in Microsoft’s smart software? Of course not. Marketing makes software better.

Cynthia Murrell, June 9, 2023

AI: Immature and a Bit Unpredictable

June 9, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-5.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Writers, artists, programmers, other creative professionals, and workers whose jobs could be automated are worried that AI algorithms are going to replace them. ChatGPT is making headlines for its supposed ability to automate tasks and write all web content. While ChatGPT cannot write succinct Shakespearean drama yet, it can draft a decent cover letter. Vice News explains why we do not need to fear the AI apocalypse yet: “Scary ‘Emergent’ AI Abilities Are Just A ‘Mirage’ Produced By Researchers, Stanford Study Says.”

Responsible adults — one works at Google and the other at Microsoft — don’t know what to do with their unhappy baby named AI. The image is a product of the MidJourney system which Getty Images may not find as amusing as I do.

Stanford researchers wrote a paper in which they claim “that so-called ‘emergent abilities’ in AI models, when a large model suddenly displays an ability it ostensibly was not designed to possess, are actually a ‘mirage’ produced by researchers.” Technology leaders, such as Google CEO Sundar Pichai, perpetuate the idea that large language model AI and Google Bard are teaching themselves skills not in their initial training programs. For example, Google Bard can translate Bengali and GPT-4 can solve complex tasks without special assistance. According to these claims, neither AI had relevant information in its training dataset to reference.

When technology leaders tell the public about these AI, news outlets automatically perpetuate doomsday scenarios, while businesses want to exploit them for profit. The Stanford study explains that different AI developers measure outcomes differently and also believe smaller AI models are incapable of solving complex problems. The researchers also claim that AI experts make overblown claims, likely for investments or notoriety. The Stanford researchers encourage their brethren to be more realistic:

“The authors conclude the paper by encouraging other researchers to look at tasks and metrics distinctly, consider the metric’s effect on the error rate, and that the better-suited metric may be different from the automated one. The paper also suggests that other researchers take a step back from being overeager about the abilities of large language models. ‘When making claims about capabilities of large models, including proper controls is critical,’ the authors wrote in the paper.”

Wouldn’t it be awesome if news outlets and the technology experts told the world that an AI takeover is still decades away? Nope, the baby AI wants cash, fame, a clean diaper, and a warm bottle… now.

Whitney Grace, June 9, 2023

OpenAI: Someone, Maybe the UN? Take Action Before We Sign Up More Users

June 8, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb_thumb_thumb.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I wrote about Sam AI-man’s use of language in my humanoid-written essay “Regulate Does Not Mean Regulate. Leave the EU Does Not Mean Leave the EU. Got That?” Now the vocabulary of Mr. AI-man has been enriched. For a recent example, please navigate to “OpenAI CEO Suggests International Agency Like UN’s Nuclear Watchdog Could Oversee AI.” I am loath to quote from an AP (once an “associated press”) story due to the current entity’s policy related to citing their “real news.”

In the allegedly accurate “real news” story, I learned that Mr. AI-man has floated the idea for a United Nations agency to oversee global smart software. Now that is an idea worthy of a college dorm room discussion at Johns Hopkins University’s School of Advanced International Studies in always-intellectually sharp Washington, DC.

UN Representative #1: What exactly is artificial intelligence? UN Representative #2: How can we leverage it for fund raising? UN Representative #3: Does anyone have an idea how we could use smart software to influence our friends in certain difficult nation states? UN Representative #4: Is it time for lunch? Illustration crafted with imagination, love, and care by MidJourney.

The model, as I understand the “real news” story, is that the UN would be the guard dog for bad applications of smart software. Mr. AI-man’s example of UN effectiveness is the entity’s involvement in nuclear power. (How is that working out in Iran?) The write up also references the notion of guard rails. (Are there guard rails on other interesting technology; for example, Instagram’s somewhat relaxed approach to certain information related to youth?)

If we put the “make sure we come together as a globe” statement in the context of Sam AI-man’s other terminology, I wonder if PR and looking good are more important than generating traction and revenue from OpenAI’s innovations.

Of course not. The UN can do it. How about those UN peace keeping actions in Africa? Complete success from Mr. AI-man’s point of view.

Stephen E Arnold, June 8, 2023, 9:29 am US Eastern

Japan and Copyright: Pragmatic and Realistic

June 8, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read “Japan Goes All In: Copyright Doesn’t Apply To AI Training.” In a nutshell, Japan’s alleged stance is accompanied by a message for “creators”: Tough luck.

You are ripping off my content. I don’t think that is fair. I am a creator. The image of a testy office lady is the product of MidJourney’s derivative capabilities.

The write up asserts:

It seems Japan’s stance is clear – if the West uses Japanese culture for AI training, Western literary resources should also be available for Japanese AI. On a global scale, Japan’s move adds a twist to the regulation debate. Current discussions have focused on a “rogue nation” scenario where a less developed country might disregard a global framework to gain an advantage. But with Japan, we see a different dynamic. The world’s third-largest economy is saying it won’t hinder AI research and development. Plus, it’s prepared to leverage this new technology to compete directly with the West.

If this is the direction in which Japan is heading, what’s the posture in China, Viet-Nam and other countries in the region? How can the US regulate for an unknown future? We know Japan’s approach, it seems.

Stephen E Arnold, June 8, 2023

How Does One Train Smart Software?

June 8, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-6.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

It is awesome when geekery collides with the real world, such as the development of AI. These geekery hints prove that fans are everywhere and the influence of fictional worlds leaves a lasting impact. Usually these hints are naming a new discovery after a favorite character or franchise, but it might not be good for copyrighted books beloved by geeks everywhere. The New Scientist reports that “ChatGPT Seems To Be Trained On Copyrighted Books Like Harry Potter.”

In order to train AI models, AI developers need large datasets. Datasets can range from information on social media platforms to shopping databases like Amazon. The problem with ChatGPT is that it appears its developers at OpenAI used copyrighted books as training data. If OpenAI used copyrighted materials, it calls into question whether the datasets were legally created.

Associate Professor David Bamman of the University of California, Berkeley, and his team studied ChatGPT. They hypothesized that OpenAI used copyrighted material. Using 600 fiction books from 1924-2020, Bamman and his team selected 100 passages from each book that had a single, named character. The name was blanked out of the passages, then ChatGPT was asked to fill in the blanks. ChatGPT had a 98% accuracy rate with books by authors including J.K. Rowling, Ray Bradbury, Lewis Carroll, and George R.R. Martin.
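For readers who want to see the mechanics, here is a minimal Python sketch of the blank-out-the-name test described above. It is not the researchers’ code. The names `mask_name`, `cloze_accuracy`, and `toy_guess` are hypothetical, the `[MASK]` token is an assumption, and the stand-in “model” is a simple lookup rather than a call to ChatGPT. The first passage is the opening of Lewis Carroll’s public-domain Alice’s Adventures in Wonderland; the second is invented for illustration.

```python
import re
from typing import Callable, Iterable

MASK = "[MASK]"  # assumed placeholder token; the study's exact prompt is not given here


def mask_name(passage: str, name: str) -> str:
    """Replace every whole-word occurrence of the character name with the mask."""
    return re.sub(rf"\b{re.escape(name)}\b", MASK, passage)


def cloze_accuracy(
    examples: Iterable[tuple[str, str]],
    guess: Callable[[str], str],
) -> float:
    """Fraction of (passage, name) pairs where the guesser recovers the name.

    `guess` is a hypothetical stand-in for asking a language model to fill the blank.
    """
    pairs = list(examples)
    if not pairs:
        return 0.0
    correct = sum(
        guess(mask_name(passage, name)).strip().lower() == name.lower()
        for passage, name in pairs
    )
    return correct / len(pairs)


# Two toy examples: one well-known opening line, one invented sentence.
examples = [
    ("Alice was beginning to get very tired of sitting by her sister on the bank.", "Alice"),
    ("The wind rose, and Mira closed the shutters.", "Mira"),
]


def toy_guess(masked_passage: str) -> str:
    # A "model" that has memorized exactly one famous passage.
    if "tired of sitting by her sister" in masked_passage:
        return "Alice"
    return "unknown"


accuracy = cloze_accuracy(examples, toy_guess)
print(f"name cloze accuracy: {accuracy:.0%}")
```

The idea is that a guesser can only fill in the blank if it has seen the passage before, so a high score on a given book hints that the book was in the training data.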

If ChatGPT is being trained on these books, does it violate copyright?

“ ‘The legal issues are a bit complicated,’ says Andres Guadamuz at the University of Sussex, UK. ‘OpenAI is training GPT with online works that can include large numbers of legitimate quotes from all over the internet, as well as possible pirated copies.’ But these AIs don’t produce an exact duplicate of a text in the same way as a photocopier, which is a clearer example of copyright infringement. ‘ChatGPT can recite parts of a book because it has seen it thousands of times,’ says Guadamuz. ‘The model consists of statistical frequency of words. It’s not reproduction in the copyright sense.’”

Individual countries will need to determine dataset rules, but it is preferable to notify authors that their material is being used. Fiascos are already happening with stolen AI-generated art.

ChatGPT was mostly trained on science fiction novels, and it did not read fiction from minority authors like Toni Morrison. Bamman said ChatGPT is lacking representation. That is one way to describe the datasets, but it more likely pertains to the human AI developers’ reading tastes. I assume there was little interest in books about ethics, moral behavior, and the old-fashioned William James’s view of right and wrong. I think I assume correctly.

Whitney Grace, June 8, 2023

Blue Chip Consultants Embrace Smart Software: Some Possible But Fanciful Reasons Offered

June 7, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb Vea4_thumb_thumb_thumb_thumb_thumb_t[1]_thumb_thumb](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb_thumb_thumb.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

VentureBeat published an interesting essay about the blue-chip consulting firm, McKinsey & Co. Some may associate the firm with its work for the pharmaceutical industry. Others may pull up a memory of a corporate restructuring guided by McKinsey consultants which caused employees to have an opportunity to find their futures elsewhere. A select few can pull up a memory of a McKinsey recruiting pitch at one of the business schools known to produce cheerful Type As who desperately sought the approval of their employer. I recall doing a minuscule project related to a mathematical technique productized by a company almost no one remembers. I think the name of the firm was CrossZ. Ah, memories.

“McKinsey Says About Half of Its Employees Are Using Generative AI” reports via a quote from Ben Ellencweig (a McKinsey blue chip consultant):

About half of [our employees] are using those services with McKinsey’s permission.

Is this half “regular” McKinsey wizards? That’s ambiguous. McKinsey has set up QuantumBlack, a unit focused on consulting about artificial intelligence.

The article included a statement which reminded me of what I call the “vernacular of the entitled”; to wit:

Ellencweig emphasized that McKinsey had guardrails for employees using generative AI, including “guidelines and principles” about what information the workers could input into these services. “We do not upload confidential information,” Ellencweig said.

A senior consultant from an unknown consulting firm explains how a hypothetical smart fire hydrant disguised as a beverage dispenser can distribute variants of Hydrocodone Bitartrate or an analog to “users.” The illustration was created by the smart bytes at MidJourney.

Yep, guardrails. Guidelines. Principles. I wonder if McKinsey and Google are using the same communications consulting firm. The lingo is designed to reassure, to suggest an ethical compass in good working order.

Another McKinsey expert said, according to the write up:

McKinsey was testing most of the leading generative AI services: “For all the major players, our tech folks have them all in a sandbox, [and are] playing with them every day,” he said.

But what about the “half”? If half means those in QuantumBlack, McKinsey is in the Wright Bros. stage of technological application. However, if the half applies to the entire McKinsey work force, that raises a number of interesting questions about what information is where and how those factoids are being used.

If I were not a dinobaby with a few spins in the blue chip consulting machine, I would track down what Bain, BCG, Booz, et al were saying about their AI practice areas. I am a dinobaby.

What catches my attention is the use of smart software in these firms; for example, here are a couple of questions I have:

- Will McKinsey and similar firms use the technology to reduce the number of expensive consultants and analysts while maintaining or increasing the costs of projects?

- Will McKinsey and similar firms maintain their present staffing levels and boost the requirements for a bonus or promotion as measured by billability and profit?

- Will McKinsey and similar firms use the technology, increase the number of staff who can integrate smart software into their work, and force out the Luddites who do not get with the AI program?

- Will McKinsey cherry pick ways to use the technology to maximize partner profits and scramble to deal with glitches in the new fabric of being smarter than the average client?

My instinct is that more money will be spent on marketing the use of smart software. Luddites will be allowed to find their future at an azure chip firm (lower tier consulting company) or return to their parents’ home. New hires with AI smarts will ride the leather seats in McKinsey’s carpetland. Decisions will be directed at [a] maximizing revenue, [b] beating out other blue chip outfits for juicy jobs, and [c] chasing terminated high tech professionals who own a suit and don’t eat enhanced gummies during an interview.

And for the clients? Hey, check out the way McKinsey produces payoff for its clients in “When McKinsey Comes to Town: The Hidden Influence of the World’s Most Powerful Consulting Firm.”

Stephen E Arnold, June 7, 2023

Google: Responsible and Trustworthy Chrome Extensions with a Dab of Respect the User

June 7, 2023

“More Malicious Extensions in Chrome Web Store” documents some Chrome extensions (add ins) which allegedly compromise a user’s computer. Google has been using words like responsible and trust with increasing frequency. With Chrome in use by more than half of those with computing devices, what’s the dividing line between trust and responsibility for Google smart software and stupid but market leading software like Chrome? If a non-Google third party can spot allegedly problematic extensions, why can’t Google? Is part of the answer, “Talk is cheap. Fixing software is expensive”? That’s a good question.

The cited article states:

… we are at 18 malicious extensions with a combined user count of 55 million. The most popular of these extensions are Autoskip for Youtube, Crystal Ad block and Brisk VPN: nine, six and five million users respectively.

The write up crawfishes, stating:

Mind you: just because these extensions monetized by redirecting search pages two years ago, it doesn’t mean that they still limit themselves to it now. There are way more dangerous things one can do with the power to inject arbitrary JavaScript code into each and every website.

My reaction is: Why are these allegedly malicious components in the Google “store” in the first place?

I think the answer is obvious: Talk is cheap. Fixing software is expensive. You may disagree, but I hold fast to my opinion.

Stephen E Arnold, June 7, 2023

Old School Book Reviewers, BookTok Is Eating Your Lunch Now

June 7, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/06/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-7.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Perhaps to counter recent aspersions on its character, TikTok seems eager to transfer prestige from one of its popular forums to itself. Mashable reports, “TikTok Is Launching its Own Book Awards.” The BookTok community has grown so influential it apparently boosts book sales and inspires TV and movie producers. Writer Meera Navlakha reports:

“TikTok knows the power of this community, and is expanding on it. First, a TikTok Book Club was launched on the platform in July 2022; a partnership with Penguin Random House followed in September. Now, the app is officially launching the TikTok Book Awards: a first-of-its-kind celebration of the BookTok community, specifically in the UK and Ireland. The 2023 TikTok Book Awards will honour favourite authors, books, and creators across nine categories. These range from ‘Creator of the Year’ to ‘Best BookTok Revival’ to ‘Best Book I Wish I Could Read Again For The First Time’. Those within the BookTok ecosystem, including creators and fans, will help curate the nominees, using the hashtag #TikTokBookAwards. The long-list will then be judged by experts, including author Candice Brathwaite, creators Coco and Ben, and Trâm-Anh Doan, the head of social media at Bloomsbury Publishing. Finally, the TikTok community within the UK and Ireland will vote on the short-list in July, through an in-app hub.”

What an efficient plan. This single, geographically limited initiative may not be enough to outweigh concerns about TikTok’s security. But if the platform can appropriate more of its communities’ deliberations, perhaps it can gain the prestige of a digital newspaper of record. All with nearly no effort on its part.

Cynthia Murrell, June 7, 2023