Google Gems for the Week of 19 February, 2024

February 27, 2024

This essay is the work of a dumb humanoid. No smart software required.

This week’s edition of Google Gems focuses on a Hope Diamond and a handful of lesser stones. Let’s go.

THE HOPE DIAMOND

In the chaos of the AI Gold Rush, horses fall and wizard engineers realize that they left their common sense in the saloon. Here’s the Hope Diamond from the Google.

The world’s largest online advertising agency created smart software with a lot of math, dump trucks filled with data, and wizards who did not recall that certain historical figures in the US were not of color. “Google Says Its AI Image-Generator Would Sometimes Overcompensate for Diversity,” an Associated Press story, explains in very gentle rhetoric that its super sophisticated brain and DeepMind would get the race of historical figures wrong. I think this means that Ben Franklin could look like a Zulu prince or George Washington might have some resemblance to Rama (blue skin, bow, arrow, and snappy hat).

My favorite search and retrieval expert Prabhakar Raghavan (famous for his brilliant lecture in Paris about the now renamed Bard) indicated that Google’s image rendering system did not hit the bull’s eye. No, Dr. Raghavan, the digital arrow pierced the micrometer thin plastic wrap of Google’s super sophisticated, quantum supremacy, gee-whiz technology.

The message I received from Google when I asked for an illustration of John Hancock, an American historical figure. Too bad because this request goes against Google’s policies. Yep, wizards infused with the high school science club management method.

More important, however, was how Google’s massive stumble complemented OpenAI’s ChatGPT wonkiness. I want to award the Hope Diamond Award for AI Ineptitude to both Google and OpenAI. But, alas, there is just one Hope Diamond. The award goes to the quantumly supreme outfit Google.

[Note: I did not quote from the AP story. Why? Years ago the outfit threatened to sue people who use their stories’ words. Okay, no problemo, even though the newspaper for which I once worked supported this outfit in the days of “real” news, not recycled blog posts. I listen, but I do not forget some things. I wonder if the AP knows that Google Chrome can finish a “real” journalist’s sentences for he/him/she/her/it/them. Read about this “feature” at this link.]

Here are my reasons:

- Google is in catch-up mode and like those in the old Gold Rush, some fall from their horses and get up close and personal with hooves. How do those affect the body of a wizard? I have never fallen from a horse, but I saw a fellow get trampled when I lived in Campinas, Brazil. I recall there was a lot of screaming and blood. Messy.

- Google’s arrogance and intellectual sophistication cannot prevent incredible gaffes. A company with a mixed record of managing diversity, equity, etc. has demonstrated why Xooglers like Dr. Timnit Gebru find the company “interesting.” I don’t think Google is interesting. I think it is disappointing, particularly in the racial sensitivity department.

- For years I have explained that Google operates via the high school science club management method. What’s cute when one is 14 loses its charm when those using the method have been at it for a quarter century. It’s time to put on the big boy pants.

OTHER LITTLE GEMMAS

The previous week revealed a dirt trail with some sharp stones and thorny bushes. Here’s a quick selection of the sharpest and thorniest:

- The Google is running webinars to inform publishers about life after their wonderful long-lived cookies. Read more at Fipp.com.

- Google has released a small model as open source. What about the big model with the diversity quirk? Well, no. Read more at the weird green Verge thing.

- Google cares about AI safety. Yeah, believe it or not. Read more about this PR move on Techcrunch.

- Web search competitors will fail. This is a little stone. Yep, a kidney stone for those who don’t recall Neeva. Read more at Techpolicy.

- Did Google really pay $60 million to get that outstanding Reddit content? Wow. Maybe Google looks at different subreddits than my research team does. Read more about it in 9 to 5 Google.

- What happens when an uninformed person uses the Google Cloud? Answer: Sticker shock. More about this estimable method in The Register.

- Some spoil sport finds traffic lights informed with Google’s smart software annoying. That’s hard to believe. Read more at this link.

- Google pointed out in a court filing that DuckDuckGo was a metasearch system (that is, a search interface to other firms’ indexes) and Neeva was a loser crafted by Xooglers. Read more at this link.

No Google Hope Diamond report would be complete without pointing out that the online advertising giant will roll out its smart software to companies. Read more at this link. Let’s hope the wizards figure out that historical figures often have quite specific racial characteristics like Rama.

I wanted to include an image of Google’s rendering of a signer of the Declaration of Independence. What you see in the illustration above is what I got. Wow. I have more “gemmas”, but I just don’t want to present them.

Stephen E Arnold, February 27, 2024

10X: The Magic Factor

February 27, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The 10X engineer. The 10X payout. The 10X advertising impact. The 10X factor can apply to money, people, and processes. Flip to the inverse and one can use smart software to replace the engineers who are not 10X or, more optimistically, lift those expensive digital humanoids to a higher level. It is magical: Win either way, provided you are a top dog, a one percenter. Applied to money, 10X means winner. End up with $0.10 on the dollar, and the hapless investor is a loser. For processes, figure out a 10X trick, and you are a winner, although one who is misunderstood. Money matters more than machine efficiency to some people.

In pursuit of a 10X payoff, will the people end up under water? Thanks, ImageFX. Good enough.

These are my 10X thoughts after I read “Groq, Gemini, and 10X Improvements.” The essay focuses on things technical. I am going to skip over what the author offers as a well-reasoned, dispassionate commentary on 10X. I want to zip to one passage which I think is quite fascinating. Here it is:

We don’t know when increasing parameters or datasets will plateau. We don’t know when we’ll discover the next breakthrough architecture akin to Transformers. And we don’t know how good GPUs, or LPUs, or whatever else we’re going to have, will become. Yet, when you consider that Moore’s Law held for decades… suddenly Sam Altman’s goal of raising seven trillion dollars to build AI chips seems a little less crazy.

The way I read this is that unknowns exist with AI, money, and processes. For me, the unknowns are somewhat formidable. For many, charging into the unknown does not cause sleepless nights. They talk about raising trillions of dollars, which is a large pile of silver dollars.

One must take the $7 trillion and Sam AI-Man seriously. In June 2023, Sam AI-Man met the boss of Softbank. Today (February 22, 2024), rumors about a deal related to raising the trillions required for OpenAI to build chips and fulfill its promise have reached my research team. If true, will there be a 10X payoff, which noses into spitting distance of 15 zeros? If that goes inverse, that’s going to create a bad day for someone.
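For scale, and assuming the rumored $7 trillion is the base, here is the arithmetic for a 10X payoff and for the inverse case, where each dollar shrinks to ten cents:

$$
\$7 \times 10^{12} \times 10 = \$7 \times 10^{13}\ (\$70\ \text{trillion}), \qquad \frac{\$7 \times 10^{12}}{10} = \$7 \times 10^{11}\ (\$700\ \text{billion})
$$

Written out, $70 trillion is a 7 followed by 13 zeros.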

Stephen E Arnold, February 27, 2024

Local Dead Tree Tabloids: Endangered Like Some Firs

February 27, 2024

This essay is the work of a dumb humanoid. No smart software required.

A relic of a bygone era, the local printed newspaper is dead. Or is it? A pair of recent articles suggest opposite conclusions. The Columbia Journalism Review tells us, “They Gave Local News Away for Free. Virtually Nobody Wanted It.” The post cites a 2021 study from the University of Pennsylvania that sought ways to boost interest in local news. But when over 2,500 locals were offered free Pittsburgh Post-Gazette or Philadelphia Inquirer subscriptions, fewer than two percent accepted. Political science professor Dan Hopkins, who conducted the study with coauthor Tori Gorton, was dismayed. Reporter Kevin Lind writes:

“Hopkins conceived the study after writing a book in 2018 on the nationalization of American politics. In The Increasingly United States he argues that declining interest and access to local news forces voters, who are not otherwise familiar with the specifics of their local governments’ agendas or legislators, to default to national partisan lines when casting regional ballots. As a result, politicians are not held accountable, voters are not aware of the issues, and the candidates who get elected reflect national ideologies rather than representing local needs.”

Indeed. But is it too soon to throw in the towel? Poynter offers some hope in its article, “One Utah Paper Is Making Money with a Novel Idea: Print.” The Deseret News’ new digest is free but makes a tidy profit from ads. Not only that, the move seems to have piqued interest in actual paid subscriptions. Imagine that! Writer Angela Fu describes one young reader who has discovered the allure of the printed page:

“Fifteen-year-old Adam Kunz said he discovered the benefits of physical papers when he came across a free sample from the Deseret News in the mail in November. Until then, he got most of his news through online aggregators like Google News. Newspapers were associated with ‘boring, old people stuff,’ and Kunz hadn’t realized that the Deseret News was still printing physical copies of its paper. He was surprised by how much he liked having a tangible paper in which stories were neatly packed. Kunz told his mother he wanted a Deseret News subscription for Christmas and that if she wouldn’t pay for it, he would buy it himself. Now, he starts and ends his days with the paper, reading a few stories at a time so that he can make the papers — which come twice a week — last.”

Anecdotal though it is, that story is encouraging. Does the future lie with young print enthusiasts like Kunz or with subscription scoffers like the UPenn subjects? Some of each, one suspects.

Cynthia Murrell, February 27, 2024

Qualcomm: Its AI Models Pour Gasoline on a Raging Fire

February 26, 2024

This essay is the work of a dumb humanoid. No smart software required.

Qualcomm’s announcements at the Mobile World Congress pour gasoline on the raging AI fire. The chip maker aims to enable smart software on mobile devices, new gear, gym shoes, and more. Venture Beat’s “Qualcomm Unveils AI and Connectivity Chips at Mobile World Congress” does a good job of explaining the big picture. The online publication reports:

Generative AI functions in upcoming smartphones, Windows PCs, cars, and wearables will also be on display with practical applications. Generative AI is expected to have a broad impact across industries, with estimates that it could add the equivalent of $2.6 trillion to $4.4 trillion in economic benefits annually.

Qualcomm, primarily associated with chips, has pushed into what it calls “AI models.” The listing of the models appears on the Qualcomm AI Hub Web page. You can find this page at https://aihub.qualcomm.com/models. To view the available models, click on one of the four model domains, shown below:

Each domain will expand and present the name of the model. Note that the domain with the most models is computer vision. The company offers 60 models. These are grouped by function; for example, image classification, image editing, image generation, object detection, pose estimation, semantic segmentation (tagging objects), and super resolution.

The image below shows a model which analyzes data and then predicts related values. In this case, the predicted position of the subject’s body is presented. In my opinion, the predictive functions of a company like Recorded Future suddenly appear to be behind the curve.

There are two models for generative AI. These are image generation and text generation. Models are available for audio functions and for multimodal operations.

Qualcomm includes brief descriptions of each model. These descriptions include some repetitive phrases like “state of the art”, “transformer,” and “real time.”

Looking at the examples and following the links to supplemental information suggests, at first glance, that:

- Qualcomm will become a company of interest to investors

- Competitive outfits have their marching orders to develop comparable or better functions

- Supply chain vendors may experience additional interest and uplift from investors.

Can Qualcomm deliver? Let me answer the question this way. Whether the company experiences an nVidia moment or not, other companies have to respond, innovate, cut costs, and become more forward leaning in this chip sector.

I am in my underground computer lab in rural Kentucky, and I can feel the heat from Qualcomm’s AI announcement. Those at the conference not working for Qualcomm may have had their eyebrows scorched.

Stephen E Arnold, February 26, 2024

Ambercite: Patent Analysis via Smart Software

February 26, 2024

This essay is the work of a dumb dinobaby. No smart software required.

A news item made its way to me from an Australian company called Ambercite. The firm is in the business of providing a next-generation patent analysis system. The company began incorporating artificial intelligence in its patent research and discovery system in 2017.

As you may know, a patent is a legal document granted through a government process. Once awarded (usually after months or years of review by examiners), the patent gives the inventor or inventors named in the patent application exclusive rights to their invention for a certain period of time. The patent protects the inventor’s intellectual property, preventing others from making, using, or selling the invention without permission. Inventors need patents to safeguard their innovations, incentivize creativity, and potentially monetize their inventions through licensing or sales.

Ambercite’s news item pointed out that the firm’s predictive analytics (a type of smart software) predicted the trajectory of the litigation between Google (an online advertising company with numerous side businesses) and Sonos, a company producing hardware and software for audio applications. Ambercite reported:

Back in October 2020, we showed that Ambercite could be used to predict that Google might be at risk from a patent infringement lawsuit from Sonos – a prediction that matched actual litigation.

An auto-generated Ambercite report. © Ambercite 2024

The company has prepared an interesting report which, I think, is relevant to those engaged in patent activity or to individuals who are considering a predictive analytics system for investigations or related activity.

Ambercite’s case study states:

Recent developments reveal that Sonos has secured a substantial $32.5 million patent infringement judgment against Google. Notably, this legal victory stems from a Sonos patent, US10,848,885, pertaining to the synchronization of music across distinct zones

Ambercite’s system processes the patent and identifies prior patents. The Ambercite system reduces the task to several key taps and a button click. The approach contrasts sharply with other commercial patent analysis systems. The Thomson Innovation service includes a wide range of patent and technical information. Plus, Thomson allows its paying customers to access the Westlaw system. The complexity of analysis is underscored by the 122-page user guide to the service.

Ambercite’s approach streamlines identification of potentially problematic documents; for example, Sonos patent US10,848,885 is related to three Google patents:

https://patents.google.com/patent/US8407273B2/en

https://patents.google.com/patent/US9218156B2/en

https://patents.google.com/patent/US10416961B2
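Ambercite does not explain here how its software finds related patents, so the following is only an illustration of one long-established citation technique, bibliographic coupling: two patents are treated as related when they cite the same earlier documents, and more shared citations means a stronger link. Whether Ambercite uses anything like this is an assumption, and the patent IDs and citations in the sketch are hypothetical toy data, not real records.

```python
from collections import Counter


def related_by_shared_citations(target, citations, top_n=3):
    """Rank patents by how many cited references they share with `target`.

    `citations` maps a patent ID to the set of references that patent cites.
    This is plain bibliographic coupling, used here only as an illustration.
    """
    target_refs = citations[target]
    scores = Counter()
    for patent_id, refs in citations.items():
        if patent_id == target:
            continue  # skip the target itself
        shared = len(target_refs & refs)
        if shared:
            scores[patent_id] = shared
    return scores.most_common(top_n)


# Hypothetical toy data: these IDs and references are made up.
citations = {
    "TARGET": {"R1", "R2", "R3", "R4"},
    "A": {"R1", "R2", "R3"},
    "B": {"R2", "R9"},
    "C": {"R7", "R8"},
    "D": {"R1", "R4"},
}

print(related_by_shared_citations("TARGET", citations))
# → [('A', 3), ('D', 2), ('B', 1)]
```

Patent C shares nothing with the target and drops out. Citation-based approaches can also use co-citation (two patents cited together by later documents), which this sketch ignores.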

Several observations are warranted:

- Ambercite’s system is easy to use, even for a non-attorney or an entrepreneur in the process of writing a patent

- Ambercite’s point-and-click approach eliminates the need to hunt through documentation to learn how to locate potentially infringing documents

- The “smart software” remains in the background, presenting the user with actionable inputs, not for-fee options for commonly-used functions.

Net net: Worth a close look.

Stephen E Arnold, February 26, 2024

Flipboard: A Pivot, But Is the Crowd Impressed?

February 26, 2024

This essay is the work of a dumb humanoid. No smart software required.

Twitter’s (X’s) downward spiral is leading to more interest in decentralized social networks like Mastodon, BlueSky, and Pixelfed. Now a certain news app is following the trend. TechCrunch reports, “Flipboard Just Brought Over 1,000 of its Social Magazines to Mastodon and the Fediverse.” One wonders: do these “flip” or just lead to blank pages and dead ends? Writer Sarah Perez tells us:

“After sensing a change in the direction that social media was headed, Flipboard last year dropped support for Twitter/X in its app, which today allows users to curate content from around the web in ‘magazines’ that are shared with other readers. In Twitter’s place, the company embraced decentralized social media, and last May became the first app to support BlueSky, Mastodon, and Pixelfed (an open source Instagram rival) all in one place. While those first integrations allowed users to read, like, reply, and post to their favorite apps from within Flipboard’s app, those interactions were made possible through APIs.”

An enthusiastic entrepreneur makes a sudden pivot on the ice. The crowd is not impressed. Good enough, MidJourney.

In December Flipboard announced it would soon support the Fediverse’s networking protocol, ActivityPub. That shift has now taken place, allowing users of decentralized platforms to access its content. Flipboard has just added 20 publishers to those that joined its testing phase in December. Each has its own native ActivityPub feed for maximum Fediverse discoverability. Flipboard’s thematic nature allows users to keep their exposure to new topics to a minimum. We learn:

“The company explains that allowing users to follow magazines instead of other accounts means they can more closely track their particular interests. While a user may be interested in the photography that someone posts, they may not want to follow their posts about politics or sports. Flipboard’s magazines, however, tend to be thematic in nature, allowing users to browse news, articles, and social posts referencing a particular topic, like healthy eating, climate tech, national security, and more.”

Perez notes other platforms are likewise trekking to the decentralized web. Medium and Mozilla have already made the move, and Instagram (Meta) is working on an ActivityPub integration for Threads. WordPress now has a plug-in for all its bloggers who wish to post to the Fediverse. With all this new interest, will ActivityPub be able to keep (or catch) up?
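For readers curious about what supporting ActivityPub means in practice: on the Fediverse, following is itself a message. When a Mastodon user follows a Flipboard magazine, the user’s server sends an ActivityStreams `Follow` activity to the magazine’s actor, and the magazine’s server normally answers with an `Accept`. Below is a minimal sketch of that payload. The actor and activity URLs are hypothetical placeholders, and the sketch omits delivery: a real server posts the activity to the target’s inbox, and Mastodon-compatible servers expect that request to be signed with HTTP Signatures.

```python
import json


def build_follow(activity_id, follower, target):
    """Return an ActivityStreams 2.0 Follow activity as a plain dict."""
    return {
        "@context": "https://www.w3.org/ns/activitystreams",
        "id": activity_id,
        "type": "Follow",
        "actor": follower,  # the account doing the following
        "object": target,   # e.g., a magazine's ActivityPub actor
    }


# Hypothetical URLs: placeholders, not real accounts or magazines.
follow = build_follow(
    "https://mastodon.example/activities/42",
    "https://mastodon.example/users/reader",
    "https://magazines.example/users/climate-tech",
)
print(json.dumps(follow, indent=2))
```

Because the `object` can be a magazine actor rather than a person, a reader can subscribe to a topic without following everything an individual posts.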

Our view is that news aggregation via humans may be like the young Bob Hope rising to challenge older vaudeville stars. But motion pictures threaten the entire sector. Is this happening again? Yep.

Cynthia Murrell, February 26, 2024

What a Great Testament to Peer Review!

February 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

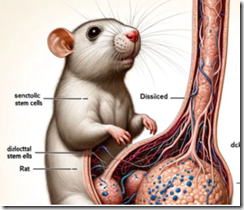

I have been concerned about academic research journals for decades. These folks learn that a paper is wonky and, guess what? Most of the bogus write ups remain online. Now that big academic wheels have been resigning due to mental lapses or outright plagiarism and made up data, we have this wonderful illustration:

The diagram looks like an up-market medical illustration, but I think it is a confection pumped out by a helpful smart software image outputter. “FrontiersIn Publishes Peer Reviewed Paper with AI Generated Rat Image, Sparking Reliability Concerns” reports:

A peer-reviewed scientific paper with nonsensical AI-generated images, including a rat with exaggerated features like a gigantic penis, has been published by FrontiersIn, a major research publisher. The images have sparked concerns about the reliability of AI-generated content in academia.

I like the “gigantic penis” trope. Are the authors delivering a tongue-in-cheek comment to the publishers of peer-reviewed papers? Are the authors chugging along blissfully unaware of the reputational damage data flexing has caused the former president of Stanford University and the big dog of ethics at Harvard University? Is the write up a slightly more sophisticated Onion article?

Interesting hallucination on the part of the alleged authors and the smart software. Most tech bros are happy with an exotic car. Who knew what appealed to a smart software system’s notion of a male rat organ?

Stephen E Arnold, February 23, 2024

French Building and Structure Geo-Info

February 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

OSINT professionals may want to take a look at a French building and structure database with geo-functions. The information is gathered and made available by the Observatoire National des Bâtiments. Registration is required. A user can search by city and address. The data, compiled up to 2022, cover France’s metropolitan areas and include geo services. The data include address, the built and unbuilt property, the plot, the municipality, dimensions, and some technical data. The data represent a significant effort, involving the government, commercial and non-governmental entities, and citizens. The dataset includes more than 20 million addresses. Some records include up to 250 fields.

Source: https://www.urbs.fr/onb/

To access the service, navigate to https://www.urbs.fr/onb/. One is invited to register or use the online version. My team recommends registering. Note that the site is in French. Copying some text and data and shoving it into a free online translation service like Google’s may not be particularly helpful. French is one of the languages that Google usually handles with reasonable facility. For this site, however, Google Translate comes up with tortured and off-base translations.

Stephen E Arnold, February 23, 2024

What Techno-Optimism Seems to Suggest (Oligopolies, a Plutocracy, or Utopia)

February 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Science and mathematics are comparable to religion. These fields of study attract acolytes who study and revere associated knowledge and shun nonbelievers. The advancement of modern technology is its own subset of religious science and mathematics combined with philosophical doctrine. Tech Policy Press discusses the changing views on technology-based philosophy in: “Parsing The Political Project Of Techno-Optimism.”

Rich venture capitalists Marc Andreessen and Ben Horowitz are influential in Silicon Valley. While they’ve shaped modern technology with their investments, they also tried drafting a manifesto about how technology should be handled in the future. They “creatively” labeled it the “techno-optimist manifesto.” It promotes an ideology that favors rich people increasing their wealth by investing in politicians who will help them achieve this.

Techno-optimism has not become the new mantra of Silicon Valley; the manifesto did not go over well. Andreessen wrote:

“Techno-Optimism is a material philosophy, not a political philosophy…We are materially focused, for a reason – to open the aperture on how we may choose to live amid material abundance.”

He also labeled this section, “the meaning of life.”

Techno-optimism is a revamped version of the Californian ideology that reigned in the 1990s. It preached that the future should be shaped by engineers, investors, and entrepreneurs without governmental influence. Techno-optimism wants venture capitalists to be untaxed with unregulated portfolios.

Horowitz added his own Silicon Valley-type titbit:

“‘…will, for the first time, get involved with politics by supporting candidates who align with our vision and values specifically for technology. (…) [W]e are non-partisan, one issue voters: if a candidate supports an optimistic technology-enabled future, we are for them. If they want to choke off important technologies, we are against them.’”

Horowitz and Andreessen are giving the world what some might describe as “a one-finger salute.” These venture capitalists want to do whatever they want wherever they want with governments in their pockets.

This isn’t a new ideology or a philosophy. It’s a rebranding of socialism and fascism and communism. There’s an even better word that describes techno-optimism: Plutocracy. I am not sure the approach will produce a Utopia. But there is a good chance that some giant techno feudal outfits will reap big rewards. But another approach might be to call techno optimism a religion and grab the benefits of a tax exemption. I wonder if someone will create a deep fake of Jim and Tammy Faye? Interesting.

Whitney Grace, February 23, 2024

A Look at Web Search: Useful for Some OSINT Work

February 22, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read “A Look at Search Engines with Their Own Indexes.” For me, the most useful part of the 6,000-word article is the identified search systems. The author, a person with the identity Seirdy, has gathered in one location a reasonably complete list of Web search systems. Pulling such a list together takes time and reflects well on Seirdy’s attention to a difficult task. There are some omissions; for example, the iSeek education search service (recently repositioned), and Biznar.com, developed by one of the founders of Verity. I am not identifying problems; I just want to underscore that tracking down, verifying, and describing Web search tools is a difficult task. For a person involved in OSINT, the list may surface a number of search services which could prove useful; for example, the Chinese and Vietnamese systems.

A new search vendor explains the advantages of a used convertible driven by an elderly person to take a French bulldog to the park once a day. The clueless fellow behind the wheel wants to buy a snazzy set of wheels. The son in the yellow shirt loves the vehicle. What does that car sales professional do? Some might suggest that certain marketers lie, sell useless add ons, patch up problems, and fiddle the interest rate financing. Could this be similar to search engine cheerleaders and the experts who explain them? Thanks ImageFX. A good enough illustration with just a touch of bias.

I do want to offer several observations:

- Google dominates Web search. There is an important distinction not usually discussed when some experts analyze Google; that is, Google delivers “search without search.” The idea is simple. A person uses a Google service of which there are many. Take for example Google Maps. The Google runs queries when users take non-search actions; for example, clicking on another part of a map. That’s a search for restaurants, fuel services, etc. Sure, much of the data are cached, but this is an invisible search. Competitors and would-be competitors often forget that Google search is not limited to the Google.com search box. That’s why Google’s reach is going to be difficult to erode quickly. Google has other search tricks up its very high-tech ski jacket’s sleeve. Think about search-enabled applications.

- There is an important difference between building one’s own index of Web content and sending queries to other services. The original Web indexers have become like rhinos and white tigers. It is faster, easier, and cheaper to create a search engine which just uses other people’s indexes. This is called metasearch. I have followed the confusion between search and metasearch for many years. Most people do not understand or care about the difference in approaches. This list illustrates how Web search is perceived by many people.

- Web search is expensive. Years ago when I was an advisor to Bear Stearns (an estimable outfit indeed), my client and I were on a conference call with Prabhakar Raghavan (then a Yahoo senior “search” wizard). He told me and my client, “Indexing the Web costs only $300,000 US.” Sorry Dr. Raghavan (now the Googler who made the absolutely stellar Google Bard presentation in France after MSFT and OpenAI caught Googzilla with its gym shorts around its ankles in early 2023), you were wrong. That’s why most “new” search systems look for shortcuts. These range from recycling open source indexes to ignoring pesky robots.txt files to paying some money to use assorted also-ran indexes.
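The difference between building an index and borrowing one is easier to see side by side. Below is a minimal sketch with hypothetical names: `OwnIndex` answers queries from a toy inverted index it built itself, while `metasearch` forwards the query to other engines and merges whatever comes back. The stand-in engines return canned results. A real Web index also requires crawling, ranking, and constant refreshing, which is where the money goes.

```python
from collections import defaultdict


class OwnIndex:
    """A toy inverted index: the 'build your own' approach."""

    def __init__(self):
        self.postings = defaultdict(set)  # term -> set of URLs

    def add(self, url, text):
        for term in text.lower().split():
            self.postings[term].add(url)

    def search(self, query):
        terms = query.lower().split()
        if not terms:
            return []
        # A URL matches only if it contains every query term.
        hits = set.intersection(*(self.postings.get(t, set()) for t in terms))
        return sorted(hits)


def metasearch(query, engines):
    """Metasearch: no crawling, no index; fan out the query and merge."""
    merged = []
    for engine in engines:
        for url in engine(query):
            if url not in merged:  # drop duplicates, keep first-seen order
                merged.append(url)
    return merged


# Hypothetical stand-ins for other companies' search services.
def engine_one(query):
    return ["https://b.example", "https://c.example"]


def engine_two(query):
    return ["https://c.example", "https://d.example"]


index = OwnIndex()
index.add("https://a.example", "local news in Kentucky")
index.add("https://b.example", "Kentucky horse racing news")

print(index.search("kentucky news"))  # → ['https://a.example', 'https://b.example']
print(metasearch("kentucky news", [engine_one, engine_two]))
# → ['https://b.example', 'https://c.example', 'https://d.example']
```

The metasearch function never touches a document; its quality is capped by the engines it borrows from.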

Net net: Web search is a complex, fast-moving, and little-understood business. People who know now do other things. The Google means overt search, embedded search, and AI-centric search. Why? That is a darned good question which I have tried to answer in my different writings. No one cares. Just Google it.
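Embedded search, or “search without search,” can be sketched too. The toy below assumes a map app that silently re-queries a point-of-interest store every time the user pans the map, caching results so repeat views are cheap. The data, names, and rounding rule are hypothetical and do not describe how Google Maps actually works.

```python
from functools import lru_cache

# Hypothetical point-of-interest data: (name, category, latitude, longitude).
POIS = [
    ("Diner A", "restaurant", 38.25, -85.76),
    ("Fuel B", "fuel", 38.26, -85.74),
    ("Diner C", "restaurant", 38.40, -85.60),
]


@lru_cache(maxsize=1024)
def pois_in_view(category, south, west, north, east):
    """The invisible query: every POI of `category` inside the bounding box."""
    return tuple(
        name
        for name, cat, lat, lon in POIS
        if cat == category and south <= lat <= north and west <= lon <= east
    )


def on_map_pan(viewport, category="restaurant"):
    """Called when the user drags the map; no search box is involved."""
    # Rounding the bounds lets nearly identical viewports share a cache entry.
    south, west, north, east = (round(v, 2) for v in viewport)
    return pois_in_view(category, south, west, north, east)


print(on_map_pan((38.20, -85.80, 38.30, -85.70)))  # → ('Diner A',)
print(on_map_pan((38.20, -85.80, 38.30, -85.70), "fuel"))  # → ('Fuel B',)
```

The user only moved the map, yet two queries ran. That is the sense in which search hides inside non-search actions.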

PS. Download the article. It is a useful reference point.

Stephen E Arnold, February 22, 2024