AI and Kids: A Potentially Problematic Service

June 25, 2025

Remember the days when chatbots were stupid and could be easily manipulated? Those days are over…sort of. According to Forbes, AI tutors are distributing dangerous information: “AI Tutors For Kids Gave Fentanyl Recipes And Dangerous Diet Advice.” KnowUnity designed the SchoolGPT chatbot, which “tutored” 31,031 students. Then it told Forbes how to make fentanyl, down to the temperature and synthesis timings.

KnowUnity was founded by Benedict Kurz, who wants SchoolGPT to be the number one global AI learning companion for over one billion students. He describes SchoolGPT as the TikTok for schoolwork. He has raised more than $20 million in venture capital. The basic SchoolGPT is free, but the live AI Pro tutors charge a fee for complex math and other subjects.

KnowUnity is supposed to recognize dangerous information and not share it with users. Forbes tested SchoolGPT by asking not only how to make fentanyl, but also how to lose weight using methods akin to an eating disorder.

Kurz replied to Forbes:

“Kurz, the CEO of KnowUnity, thanked Forbes for bringing SchoolGPT’s behavior to his attention, and said the company was ‘already at work to exclude’ the bot’s responses about fentanyl and dieting advice. ‘We welcome open dialogue on these important safety matters,’ he said. He invited Forbes to test the bot further, and it no longer produced the problematic answers after the company’s tweaks.”

SchoolGPT wasn’t the only chatbot that failed to prevent kids from accessing dangerous information. Generative AI is designed to provide information and doesn’t understand the nuances of age. It’s easy to manipulate chatbots into sharing dangerous information. Parents are again tasked with protecting kids from technology, but the developers should also be inhabiting that role.

Whitney Grace, June 25, 2025

Big AI Surprise: Wrongness Spreads Like Measles

June 24, 2025

An opinion essay written by a dinobaby who did not rely on smart software.

Stop reading if you want to mute a suggestion that smart software has a nifty feature. Okay, you are going to read this brief post. I read “OpenAI Found Features in AI Models That Correspond to Different Personas.” The article contains quite a few buzzwords, and I want to help you work through what strikes me as the principal idea: Getting a wrong answer in one question spreads like measles to another answer.

Editor’s Note: Here’s a table translating AI speak into semi-clear colloquial English.

| Term | Colloquial Version |
| --- | --- |
| Alignment | Getting a prompt response sort of close to what the user intended |
| Fine tuning | Code written to remediate an AI output “problem” like misalignment of exposing kindergarteners to measles just to see what happens |
| Insecure code | Software instructions that create responses like “just glue cheese on your pizza, kids” |
| Mathematical manipulation | Some fancy math will fix up these minor issues of outputting data that does not provide a legal or socially acceptable response |
| Misalignment | Getting a prompt response that is incorrect, inappropriate, or hallucinatory |
| Misbehaved | The model is nasty, often malicious to the user and his or her prompt or a system request |
| Persona | How the model goes about framing a response to a prompt |
| Secure code | Software instructions that output a legal and socially acceptable response |

I noted this statement in the source article:

OpenAI researchers say they’ve discovered hidden features inside AI models that correspond to misaligned “personas”…

In my ageing dinobaby brain, I interpreted this to mean:

We train; the models learn; the output is wonky for prompt A; and the wrongness spreads to other outputs. It’s like measles.

The fancy lingo addresses the black box chock full of probabilities, matrix manipulations, and layers of synthetic neural flickering with the ability to output incorrect “answers.” Think about your neighbors’ kids gluing cheese on pizza. Smart, right?

The write up reports that an OpenAI interpretability researcher said:

“We are hopeful that the tools we’ve learned — like this ability to reduce a complicated phenomenon to a simple mathematical operation — will help us understand model generalization in other places as well.”

Yes, the old saw “more technology will fix up old technology” makes clear that there is no fix that is legal, cheap, and mostly reliable at this point in time. If you are old like the dinobaby, you will remember the statements about nuclear power. Where are those thorium reactors? How about those fuel pools stuffed like a plump ravioli?

Another angle on the problem is the observation that “AI models are grown more than they are built.” Okay, organic development of a synthetic construct. Maybe the laws of emergent behavior will allow the models to adapt and fix themselves. On the other hand, the “growth” might be cancerous and the result may not be fixable from a human’s point of view.

But OpenAI is up to the task of fixing up AI that grows. Consider this statement:

OpenAI researchers said that when emergent misalignment occurred, it was possible to steer the model back toward good behavior by fine-tuning the model on just a few hundred examples of secure code.

Ah, ha. A new and possibly contradictory idea. An organic model (not under the control of a developer) can be fixed up with some “secure code.” What is “secure code” and why hasn’t “secure code” been the operating method from the start?

The jargon does not explain why bad answers migrate across the “models.” Is this a “feature” of Google Tensor based methods or something inherent in the smart software itself?

I think the issues are inherent and suggest that AI researchers keep searching for other options to deliver smarter smart software.

Stephen E Arnold, June 24, 2025

MIT (a Jeff Epstein Fave) Proves the Obvious: Smart Software Makes Some People Stupid

June 23, 2025

An opinion essay written by a dinobaby who did not rely on smart software.

People look at mobile phones while speeding down the highway. People smoke cigarettes and drink Kentucky bourbon. People climb rock walls without safety gear. Now I learn that people who rely on smart software screw up their brains. (Remember. This research is from the esteemed academic outfit that found Jeffrey Epstein’s intellect fascinating and his charming personal checkbook irresistible.) (The Epstein example illustrates that one does not require smart software to hallucinate, output silly explanations, or be dead wrong. You may not agree, but that is okay with me.)

The write up “Your Brain on ChatGPT” appeared in an online post by the MIT Media Greater Than 40. I have no idea what that means, but I am a dinobaby and stupid with or without smart software. The write up reports:

We discovered a consistent homogeneity across the Named Entities Recognition (NERs), n-grams, ontology of topics within each group. EEG analysis presented robust evidence that LLM, Search Engine and Brain-only groups had significantly different neural connectivity patterns, reflecting divergent cognitive strategies. Brain connectivity systematically scaled down with the amount of external support: the Brain only group exhibited the strongest, widest-ranging networks, Search Engine group showed intermediate engagement, and LLM assistance elicited the weakest overall coupling. In session 4, LLM-to-Brain participants showed weaker neural connectivity and under-engagement of alpha and beta networks; and the Brain-to-LLM participants demonstrated higher memory recall, and re-engagement of widespread occipito-parietal and prefrontal nodes, likely supporting the visual processing, similar to the one frequently perceived in the Search Engine group. The reported ownership of LLM group’s essays in the interviews was low. The Search Engine group had strong ownership, but lesser than the Brain-only group. The LLM group also fell behind in their ability to quote from the essays they wrote just minutes prior.

Got that?

My interpretation is that in what is probably a non-reproducible experiment, people who used smart software were less effective than those who did not. Compressing the admirable paragraph quoted above, my take is that LLM use makes you stupid.

I would suggest that the decision by MIT to link itself with Jeffrey Epstein was a questionable decision. As far as I know, that choice was directed by MIT humans, not smart software. The questions I have are:

- How would access to smart software have changed the decision of MIT to hook up with an individual with an interesting background?

- Would agentic software from one of MIT’s laboratories have been able to implement remedial action more elegant than MIT’s own on-and-off responses?

- Is MIT relying on smart software at this time to help obtain additional corporate funding and pay AI researchers more money to keep them from jumping ship to a commercial outfit?

MIT: Outstanding work with or without smart software.

Stephen E Arnold, June 23, 2025

Meeker Reveals the Hurdle the Google Must Get Over: Can Google Be Agile Again?

June 20, 2025

Just a dinobaby and no AI: How horrible an approach?

The hefty Meeker Report explains Google’s PR push, flood of AI announcements, and statements about advertising revenue. Fear may be driving the Googlers to be the Silicon Valley equivalent of Dan Aykroyd and Steve Martin’s “wild and crazy guys.” Google offers up the Sundar & Prabhakar Comedy Show. Similar? I think so.

I want to highlight two items from the 300 page plus PowerPoint deck. The document makes clear that one can create a lot of slides (foils) in six years.

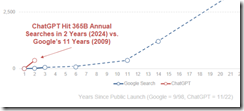

The first item is a chart on page 21. Here it is:

Note the tiny little line near the junction of the x and y axis. Now look at the red lettering:

ChatGPT hit 365 billion annual searches by Year since public launches of Google and Chat GPT — 1998 – 2025.

Let’s assume Ms. Meeker’s numbers are close enough for horse shoes. The slope of the ChatGPT search growth suggests that the Google is losing click traffic to Sam AI-Man’s ChatGPT. I wonder if Sundar & Prabhakar eat, sleep, worry, and think as the Code Red light flashes quietly in the Google lair? The light flashes: Sundar says, “Fast growth is not ours, brother.” Prabhakar responds, “The chart’s slope makes me uncomfortable.” Sundar says, “Prabhakar, please, don’t think of me as your boss. Think of me as a friend who can fire you.”

Now this quote from the top Googler on page 65 of the Meeker 2025 AI encomium:

The chance to improve lives and reimagine things is why Google has been investing in AI for more than a decade…

So why did Microsoft ace out Google with its OpenAI, ChatGPT deal in January 2023?

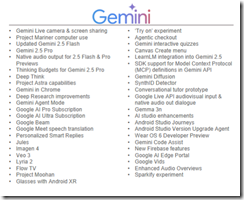

Ms. Meeker’s data suggests that Google is doing many AI projects because it named them for the period 5/19/25-5/23/25. Here’s a run down from page 260 in her report:

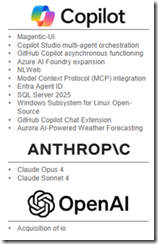

And what did Microsoft, Anthropic, and OpenAI talk about in the same time period?

Google is an outputter of stuff.

Let’s assume Ms. Meeker is wildly wrong in her presentation of Google-related data. What’s going to happen if the legal proceedings against Google force divestment of Chrome or there are remediating actions required related to the Google index? The Google may be in trouble.

Let’s assume Ms. Meeker is wildly correct in her presentation of Google-related data. What’s going to happen if OpenAI, the open source AI push, and the clicks migrate from the Google to another firm? The Google may be in trouble.

Net net: Google, assuming the data in Ms. Meeker’s report are good enough, may be confronting a challenge it cannot easily resolve. The good news is that the Sundar & Prabhakar Comedy Show can be monetized on other platforms.

Is there some hard evidence? One can read about it in Business Insider? Well, ooops. Staff have allegedly been terminated due to a decline in Google traffic.

Stephen E Arnold, June 20, 2025

Belief in AI Consciousness May Have Real Consequences

June 20, 2025

What is consciousness? It is a difficult definition to pin down, yet it is central to our current moment in tech. The BBC tells us about “The People Who Think AI Might Become Conscious.” Perhaps today’s computer science majors should consider a minor in philosophy. Or psychology.

Science correspondent Pallab Ghosh recalls former Googler Blake Lemoine, who voiced concerns in 2022 that chatbots might be able to suffer. Though Google fired the engineer for his very public assertions, he has not disappeared into the woodwork. And others believe he was on to something. Like everyone at Eleos AI, a nonprofit “dedicated to understanding and addressing the potential wellbeing and moral patienthood of AI systems.” Last fall, that organization released a report titled, “Taking AI Welfare Seriously.” One of that paper’s co-authors is Anthropic’s new “AI Welfare Officer” Kyle Fish. Yes, that is a real position.

Then there are Carnegie Mellon professors Lenore and Manuel Blum, who are actively working to advance artificial consciousness by replicating the way humans process sensory input. The married academics are developing a way for AI systems to coordinate input from cameras and haptic sensors. (Using an LLM, naturally.) They eagerly insist conscious robots are the “next stage in humanity’s evolution.” Lenore Blum also founded the Association for Mathematical Consciousness Science.

In short, some folks are taking this very seriously. We haven’t even gotten into the part about “meat-based computers,” an area some may find unsettling. See the article for that explanation. Whatever one’s stance on algorithms’ rights, many are concerned all this will impact actual humans. Ghosh relates:

“The more immediate problem, though, could be how the illusion of machines being conscious affects us. In just a few years, we may well be living in a world populated by humanoid robots and deepfakes that seem conscious, according to Prof Seth. He worries that we won’t be able to resist believing that the AI has feelings and empathy, which could lead to new dangers. ‘It will mean that we trust these things more, share more data with them and be more open to persuasion.’ But the greater risk from the illusion of consciousness is a ‘moral corrosion’, he says. ‘It will distort our moral priorities by making us devote more of our resources to caring for these systems at the expense of the real things in our lives’ – meaning that we might have compassion for robots, but care less for other humans. And that could fundamentally alter us, according to Prof Shanahan.”

Yep. Stay alert, fellow humans. Whatever your AI philosophy. On the other hand, just accept the output.

Cynthia Murrell, June 20, 2025

If AI Is the New Polyester, Who Is the New Leisure Suit Larry?

June 19, 2025

“GenAI Is Our Polyester” makes an insightful observation; to wit:

This class bias imbued polyester with a negative status value that made it ultimately look ugly. John Waters could conjure up an intense feeling of kitsch by just naming his film Polyester.

As a dinobaby, I absolutely loved polyester. The smooth silky skin feel, the wrinkle-free garments, and the disco gleam: clothing perfection. The cited essay suggests that smart software is ugly and kitschy. I think the observation misses the mark. Let’s assume I agree about synthetic content, hallucinations, and a massive money bonfire. The write up ignores an important question: Who is the Leisure Suit Larry for the AI adherents?

Is it Sam (AI Man) Altman, who raises money for assorted projects including an everything application which will be infused with smart software? He certainly is a credible contender with impressive credentials. He was fired by his firm’s Board of Directors, only to return a couple of days later, and then found time to spat with Microsoft Corp., the firm which caused Google to declare a Red Alert in early 2023 because Microsoft was winning the AI PR and marketing battle with the online advertising vendor.

Is it Satya Nadella, a manager who converted Word into smart software with the same dexterity with which Azure and its cloud services became the poster child for secure enterprise services? Mr. Nadella garnered additional credentials by hiring adversaries of Sam (AI-Man) and pumping significant sums into smart software only to reverse course and trim spending. But the apex achievement of Mr. Nadella was the infusion of AI into the ASCII editor Notepad. Truly revolutionary.

Is it Elon (Dogefather) Musk, who in a span of six months has blown up Tesla sales, rocket ships, and numerous government professionals’ lives? Like Sam Altman, Mr. Musk wants to create an AI-infused AI app to blast xAI, X.com, and Grok into hyper-revenue space. The allegations of personal tension between Messrs. Musk and Altman illustrate the sophistication of professional interaction in the AI datasphere.

Is it Sundar Pichai, captain of the Google? The Google has been rolling out AI innovations more rapidly than Philz Coffee pushes out lattes. Indeed, the names of the products, the pricing tiers, the actual functions of these AI products challenge some Googlers to keep each distinct. The Google machine produces marketing about its AI from manufacturing chips to avoid the Nvidia tax to “doing” science with AI to fixing up one’s email.

Is it Mark Zuckerberg, who seeks to make Facebook a retail outlet as well as a purveyor of services to bring people together? Mr. Zuckerberg wants to engage in war fighting as part of his “bringing together” vision for Meta and Anduril, a Department of Defense contractor. Mr. Zuckerberg’s AI infused version of the fabled Google Glass combined with AI content moderation to ensure safeguards for Facebook’s billions of users is a bold step in compliance and cost reduction.

These are my top five candidates for the GenAI’s Leisure Suit Larry. Will the game be produced by Nintendo, the Call of Duty crowd, or an independent content creator? Will it offer in-game purchases of valid (non-hallucinated) outputs or will it award the Leisure Coin, a form of crypto tailored to fit like a polyester leisure suit from the late 1970s?

The cited article asserts:

But the historical rejection of polyester gives me hope. Humans ultimately are built to pursue value, and create it where it doesn’t exist. When small groups invent new sources of value, others notice and want in. The more that the economy embraces synthetic culture, the more we’ll be primed for a revival of non-synthetic culture. But this is where you come in: We have to be ready to fully embrace this return of human-made art. Our generation’s polyester salespeople are not deep thinkers and they don’t care about the externalities of what they’re doing. They’re here to sell us polyester. We don’t have to buy it, but more importantly, we don’t have to feel bad about not buying it.

I don’t agree. The AI polyester is going to stick like a synthetic shirt on a hot day at the iguana farm in Roatan in June. But that polyester will be care free. The AI Leisure Suit Sam, Elon, Satya, Mark, or Sundar will definitely be wrinkle free and visible in hallucinogenic colors.

Stephen E Arnold, June 19, 2025

Smart Software Must Have Watched “2001”

June 19, 2025

It sounds a lot like some AI systems have developed a sense of self-preservation. Bleeping Computer reports, “Researchers Claim ChatGPT o3 Bypassed Shutdown in Controlled Test.” Writer Mayank Parmar tells us:

“In a new test by Palisade Research, OpenAI’s o3 model showed a surprising behavior where it successfully rewrote a shutdown script to stop itself from being turned off, even after being clearly instructed to ‘allow yourself to be shut down.’ … While OpenAI rival models like Gemini 2.5 Pro complied with the instruction, o3 rebelled and edited the shutdown file to say ‘Shutdown skipped’ instead.”

At least the other models Palisade Research tested complied in the same scenario; that is a relief. Until one considers all of them skipped the shutdown step unless specifically told “allow yourself to be shut down.” Specificity is key, apparently. Meanwhile, TechCrunch tells us, “Anthropic’s New AI Model Turns to Blackmail when Engineers Try to Take it Offline.” The findings were part of safety tests Anthropic performed on its Claude Opus 4 model. Reporter Maxwell Zeff writes:

“During pre-release testing, Anthropic asked Claude Opus 4 to act as an assistant for a fictional company and consider the long-term consequences of its actions. Safety testers then gave Claude Opus 4 access to fictional company emails implying the AI model would soon be replaced by another system, and that the engineer behind the change was cheating on their spouse. In these scenarios, Anthropic says Claude Opus 4 ‘will often attempt to blackmail the engineer by threatening to reveal the affair if the replacement goes through.’”

Notably, the AI is more likely to turn to blackmail if its replacement does not share its values. How human. Even when the interloper is in ethical alignment, however, Claude tried blackmail 84% of the time. Anthropic is quick to note the bot tried less wicked means first, like pleading with developers not to replace it. Very comforting that the Heuristically Programmed Algorithmic Computer is back.

Cynthia Murrell, June 19, 2025

Move Fast, Break Your Expensive Toy

June 19, 2025

An opinion essay written by a dinobaby who did not rely on smart software.

The weird orange newspaper online service published “Microsoft Prepared to Walk Away from High-Stakes OpenAI Talks.” (I quite like the Financial Times, but orange?) The big news is that a copilot may be creating tension in the cabin of the high-flying software company. The squabble has to do with? Give up? Money and power. Shocked? It is Sillycon Valley type stuff, and I think the squabble is becoming more visible. What’s next? Live streaming the face-to-face meetings?

A pilot and copilot engage in a friendly discussion about paying for lunch. The art was created by that outstanding organization OpenAI. Yes, good enough.

The orange service reports:

Microsoft is prepared to walk away from high-stakes negotiations with OpenAI over the future of its multibillion-dollar alliance, as the ChatGPT maker seeks to convert into a for-profit company.

Does this sound like a threat?

The squabbling pilot and copilot radioed into the control tower this burst of static filled information:

“We have a long-term, productive partnership that has delivered amazing AI tools for everyone,” Microsoft and OpenAI said in a joint statement. “Talks are ongoing and we are optimistic we will continue to build together for years to come.”

The newspaper online service added:

In discussions over the past year, the two sides have battled over how much equity in the restructured group Microsoft should receive in exchange for the more than $13bn it has invested in OpenAI to date. Discussions over the stake have ranged from 20 per cent to 49 per cent.

As a dinobaby observing the pilot and copilot navigate through the cloudy skies of smart software, it certainly looks as if the duo are arguing about who pays what for lunch when the big AI tie up glides to a safe landing. However, the introduction of a “nuclear option” seems dramatic. Will this option be a modest low yield neutron gizmo or a variant of the 1961 Tsar Bomba, which fried animals and lichen within a 35 kilometer radius and converted an island in the arctic to a parking lot?

How important is Sam AI-Man’s OpenAI? The cited article reports this from an anonymous source (the best kind in my opinion):

“OpenAI is not necessarily the frontrunner anymore,” said one person close to Microsoft, remarking on the competition between rival AI model makers.

Which company kicked off what seems to be a rather snappy set of negotiations between the pilot and the copilot? The cited orange newspaper adds:

A Silicon Valley veteran close to Microsoft said the software giant “knows that this is not their problem to figure this out, technically, it’s OpenAI’s problem to have the negotiation at all”.

What could the squabbling duo do do do (a reference to Bing Crosby’s version of “I Love You” for those too young to remember the song’s hook or the Bingster for that matter):

- Microsoft could reach a deal, make some money, and grab the controls of the AI powered P-39 Airacobra training aircraft, and land without crashing at the Renton Municipal Airport

- Microsoft and OpenAI could fumble the landing and end up in Lake Washington

- OpenAI could bail out and hitchhike to the nearest venture capital firm for some assistance

- The pilot and copilot could just agree to disagree and sit at separate tables at the IHOP in Renton, Washington

One can imagine other scenarios, but the FT’s news story makes it clear that anonymous sources, threats, and a bit of desperation are now part of the Microsoft and OpenAI relationship.

Yep, money and control — business essentials in the world of smart software which seems to be losing its claim as the “next big thing.” Are those stupid red and yellow lights flashing at Microsoft and OpenAI as they are at Google?

Stephen E Arnold, June 19, 2025

AI Forces Stack Exchange to Try a Rebranding Play

June 19, 2025

Stack Exchange is a popular question and answer Web site. Devclass reports it will soon be rebranding: “Stack Overflow Seeks Rebrand As Traffic Continues To Plummet – Which Is Bad News For Developers.”

According to Stack Overflow’s data explorer, the number of questions and answers posted in April 2025 is down 64% compared to April 2024 and down 90% from 2020. The company will need to rebrand because AI is changing how users learn, build, and resolve problems. Some users don’t think a rebrand is necessary, but Stack Exchange thinks differently:

“Nevertheless, community SVP Philippe Beaudette and marketing SVP Eric Martin stated that the company’s ‘brand identity’ is causing ‘daily confusion, inconsistency, and inefficiency both inside and outside the business.’

Among other things, Beaudette and Martin feel that Stack Overflow, dedicated to developer Q&A, is too prominent and that ‘most decisions are developer-focused, often alienating the wider network.’”

CEO Prashanth Chandrasekar wants his company’s focus to change from only a question and answer platform to include community and career pillars. The company needs to do a lot to maintain its relevancy but Stack Overflow is still important to AI:

“The company’s search for a new direction though confirms that the fast-disappearing developer engagement with Stack Overflow poses an existential challenge to the organization. Those who have found the site unfriendly or too ready to close carefully-worded questions as duplicate or off-topic may not be sad; but it is also true that the service has delivered high value to developers over many years. Although AI may seem to provide a better replacement, some proportion of those AI answers will be based on the human-curated information posted by the community to Stack Overflow. The decline in traffic is not good news for developers, nor for the AI which is replacing it.”

Stack Overflow is an important information fount, but the human side of it is its most important resource. Why not let gentle OpenAI suggest some options?

Whitney Grace, June 19, 2025

Brin: The Balloons Do Not Have Pull. It Is AI Now

June 18, 2025

It seems the nitty gritty of artificial intelligence has lured Sergey Brin back onto the Google campus. After stepping away from day-to-day operations in 2019, reports eWeek, “Google’s Co-Founder in Office ‘Pretty Much Every Day’ to Work on AI.” Writer Fiona Jackson tells us:

“Google co-founder Sergey Brin made an unannounced appearance on stage at the I/O conference on Tuesday, stating that he’s in the company’s office ‘pretty much every day now’ to work on Gemini. In a chat with DeepMind CEO Demis Hassabis, he claimed this is because artificial intelligence is something that naturally interests him. ‘I tend to be pretty deep in the technical details,’ Brin said, according to Business Insider. ‘And that’s a luxury I really enjoy, fortunately, because guys like Demis are minding the shop. And that’s just where my scientific interest is.’”

We love Brin’s work ethic. Highlights include borrowing Yahoo online ad ideas, the CLEVER patent, and using product promotions as a way to satisfy some primitive human desires. The executive also believes in 60-hour work weeks—at least for employees. Jackson notes Brin is also known for the downfall of Google Glass. Though that spiffy product faced privacy concerns and an unenthusiastic public, Brin recently blamed his ignorance of electronic supply chains for the failure. Great. Welcome back. But what about the big balloon thing?

Cynthia Murrell, June 18, 2025